Shell, Adobe, Burberry, Columbia, Bayer — you definitely know the names. But what do the gas and oil corporation, the computer software giant, the luxury fashion house, the top outdoor brand, and the multinational pharmaceutical enterprise have in common?

The answer is simple: They use the same technology to make the most of data. Along with thousands of other data-driven organizations from different industries, the above-mentioned leaders opted for Databricks to guide strategic business decisions. In this article, we’ll highlight the reasoning behind this choice and the challenges related to it.

What is Databricks

Databricks is an analytics platform with a unified set of tools for data engineering, data management, data science, and machine learning. It combines the best elements of a data warehouse (a centralized repository for structured data) and a data lake (a store for large amounts of raw data). The relatively new storage architecture powering Databricks is called a data lakehouse.

To dive deeper into details, read our article Data Lakehouse: Concept, Key Features, and Architecture Layers.

The lakehouse platform was founded by the creators of Apache Spark, a processing engine for big data workloads. The authors aimed to speed up innovation by eliminating data silos, enabling companies to run machine learning on all types of data, and simplifying collaboration across all parties involved in AI projects. Let’s see what exactly Databricks has to offer.

Databricks architecture

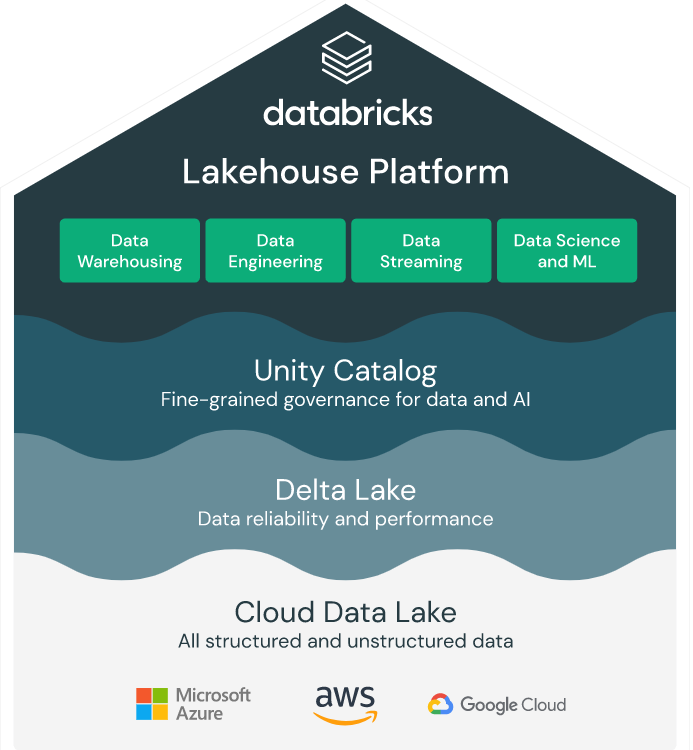

Databricks provides an ecosystem of tools and services covering the entire analytics process — from data ingestion to training and deploying machine learning models. Designed to handle big data, the platform addresses problems associated with data lakes — such as lack of data integrity, poor data quality, and low performance compared to data warehouses. These improvements become possible due to the core components of the Databricks architecture — Delta Lake and Unity Catalog.

Databricks lakehouse platform architecture. Source: Databricks

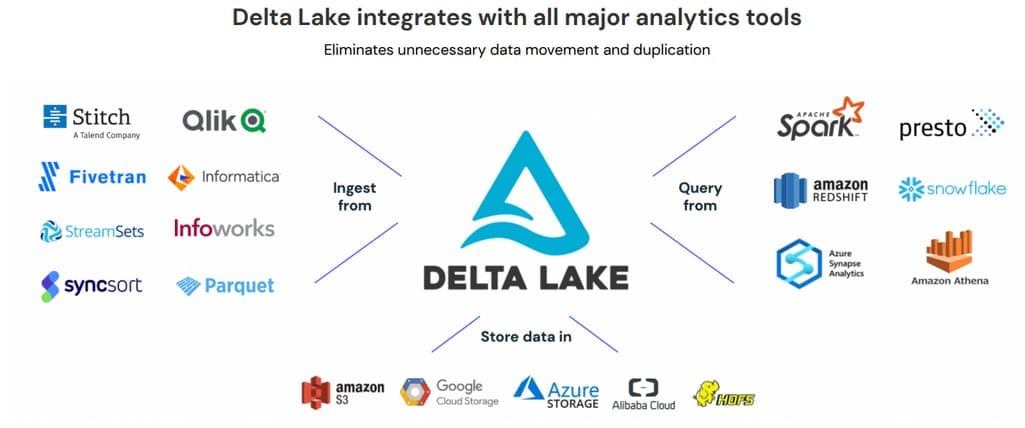

Delta Lake is an open-source, file-based storage layer that adds reliability and functionality to existing data lakes built on Amazon S3, Google Cloud Storage, Azure Data Lake Storage, Alibaba Cloud, HDFS (Hadoop Distributed File System), and others. Built around Parquet, an open-source standardized data file format, it extends Parquet’s capabilities with

- ACID (atomicity, consistency, isolation, durability) transactions;

- big data versioning, also called time travel;

- simple data manipulation language (DML) commands, such as Create, Update, Insert, Delete, and Merge; and

- data quality checks on schema and value levels.

This way, Delta Lake brings warehouse features to cloud object storage — an architecture for handling large amounts of unstructured data in the cloud.
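To make the versioning idea concrete, here is a minimal pure-Python sketch of how an append-only commit log over immutable data files can provide all-or-nothing commits, a schema check, and time travel. It is a toy model under simplifying assumptions, not Delta Lake's API: the `ToyTable` class, its methods, and the file names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTable:
    """Toy table: stored data files plus an append-only commit log."""
    schema: set                                  # allowed column names
    files: dict = field(default_factory=dict)    # file name -> list of rows
    log: list = field(default_factory=list)      # one entry per committed version

    def commit(self, add=None, remove=()):
        """Record added and removed files as one new version, or fail entirely."""
        add = add or {}
        # Schema-level quality check: reject the whole commit on a mismatch.
        for name, rows in add.items():
            for row in rows:
                if set(row) != self.schema:
                    raise ValueError(f"schema mismatch in {name}: {sorted(row)}")
        # Removed files stay stored so that older versions remain readable.
        self.files.update(add)
        self.log.append({"add": list(add), "remove": list(remove)})
        return len(self.log) - 1                 # version number of this commit

    def snapshot(self, version=None):
        """Replay the log up to `version` to find which files are live."""
        if version is None:
            version = len(self.log) - 1
        live = []
        for entry in self.log[: version + 1]:
            live = [f for f in live if f not in entry["remove"]]
            live.extend(entry["add"])
        return [row for f in live for row in self.files[f]]


table = ToyTable(schema={"id", "city"})
v0 = table.commit(add={"part-0": [{"id": 1, "city": "Oslo"}]})
# An "update" writes a new file and retires the old one in a single commit.
v1 = table.commit(add={"part-1": [{"id": 1, "city": "Bergen"}]}, remove=["part-0"])

current = table.snapshot()        # latest version
previous = table.snapshot(v0)     # time travel to the first version
```

Because every change is a new log entry rather than an in-place edit, reading an older version only requires replaying fewer entries.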

Delta Lake integrations. Source: The Data Team’s Guide to the Databricks Lakehouse Platform

Integrating with Apache Spark and other analytics engines, Delta Lake supports both batch and stream data processing. Besides that, it’s fully compatible with various data ingestion and ETL tools.

Unity Catalog serves as a centralized metadata management and data governance layer for all Databricks data assets, including tables, files, dashboards, and machine learning models. The catalog provides fine-grained access control, built-in data search, and automated data lineage (tracking flows of data to understand its origins).
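Data lineage can be pictured as a graph in which each asset points to the assets it was derived from. The sketch below is purely illustrative: the `LINEAGE` mapping, the asset names, and the `upstream` helper are hypothetical and do not reflect Unity Catalog's interface.

```python
# Hypothetical lineage records: each asset maps to the assets it was built from.
LINEAGE = {
    "sales_dashboard": ["sales_gold"],
    "sales_gold": ["sales_silver", "customers_silver"],
    "sales_silver": ["sales_raw"],
    "customers_silver": ["customers_raw"],
}


def upstream(asset, lineage=LINEAGE):
    """Return every asset that `asset` depends on, directly or indirectly."""
    seen = set()
    stack = [asset]
    while stack:
        current = stack.pop()
        for parent in lineage.get(current, []):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


origins = upstream("sales_dashboard")
# Raw sources are the upstream assets that have no recorded parents.
raw_sources = sorted(a for a in origins if a not in LINEAGE)
```

Walking the graph this way answers the typical lineage question: which original sources feed a given dashboard or model.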

What Databricks is used for

Use cases for Databricks are as diverse and numerous as the types of data and the range of tools and operations it supports. Here are four main applications of the platform across industries:

- data warehousing — SQL queries and business intelligence (BI) at scale;

- data engineering — building and maintaining data pipelines, running ETL and ELT workloads;

- data streaming and real-time analytics; and

- data science and machine learning projects.

Watch our video to learn more about one of the key Databricks applications — data engineering.

How data engineering works in 14 minutes

It’s worth noting that Databricks facilitates DevOps practices and adds automation to the data analytics lifecycle (DataOps). Besides that, its native integration with MLflow, an open-source tool for building machine learning pipelines in production, backs MLOps initiatives.

Databricks advantages

As you already know, Databricks has the best of both worlds — a data warehouse and a data lake. It works with structured and unstructured data, supports various workloads, and is equally helpful for any member of the data science team: from data engineer to data analyst to machine learning engineer.

Watch our video to learn more about the roles involved in the analytics process.

Who makes data useful?

The platform can become a pillar of a modern data stack, especially for large-scale companies. And here are several more reasons in favor of this choice.

Big data democratization and collaboration opportunities

The Databricks team aims to make big data analytics easier for enterprises. The platform is built around Spark, designed specifically to process large amounts of information in batches and micro-batches (for near-real-time computation). It’s also pre-integrated with many other data engineering, data science, and ML instruments, so you can do almost everything related to data within one platform.
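Micro-batching means cutting a continuous stream into small groups of records and processing each group as a tiny batch job. Here is a minimal sketch in plain Python rather than Spark code; the fixed batch size, the `micro_batches` helper, and the sample readings are assumptions made for illustration.

```python
from itertools import islice


def micro_batches(stream, batch_size):
    """Yield consecutive lists of up to `batch_size` records from an iterable."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical event stream: sensor readings arriving one by one.
readings = iter([21.0, 21.5, 22.0, 23.5, 24.0, 22.5, 21.0])

# Each micro-batch is aggregated as if it were a small batch job.
averages = [sum(batch) / len(batch) for batch in micro_batches(readings, 3)]
```

The smaller the batch, the closer the results get to real time, at the price of more per-batch overhead.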

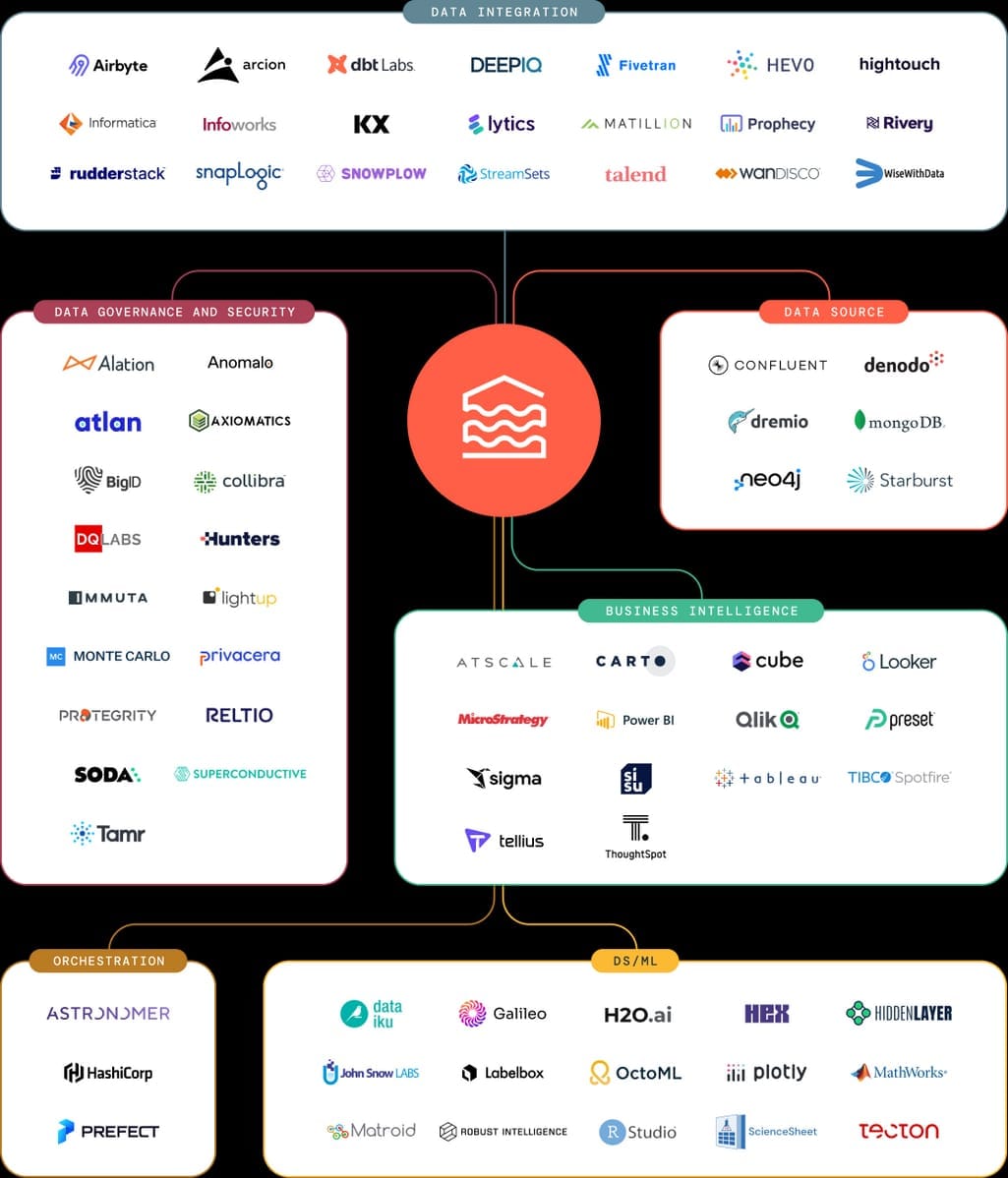

Databricks technology partners. Source: Databricks

To tap into integrations, pre-built tools, and data assets, the platform provides a unified workspace. Authorized users can share notebooks, libraries, queries, ML experiments, data visualizations, and other objects across the organization in a secure manner, enhancing collaboration. Moreover, the platform supports four languages (SQL, R, Python, and Scala) and allows you to switch between them and use them all in the same notebook. If you don’t know an easy way to solve a particular task in one language, switch to another.

It’s also possible to connect your preferred integrated development environment (Eclipse, PyCharm, Visual Studio Code, etc.) or notebook server (Zeppelin, Jupyter Notebook) to Databricks. This way, you can work with a familiar tool before running analytics or ML models on the lakehouse.

Another powerful instrument for breaking down data silos and democratizing data across the company is Unity Catalog, a data governance layer with a rich user interface for data search and discovery. It enables data experts to effectively seek relevant assets for different use cases — including BI, analytics, and machine learning.

Interoperability and no vendor lock-in

Databricks doesn’t make you move data to a proprietary system. Instead, it connects to your account hosted on a cloud environment of your choice — Google, Azure, or AWS. Products designed with the platform are portable, which enables organizations to leverage a multicloud strategy and avoid vendor lock-in.

To further facilitate interoperability, Databricks developed Delta Sharing, an open protocol for the secure real-time exchange of large datasets, no matter which cloud or on-premises environment organizations use. Data consumers can directly link to the shared assets via Tableau, Power BI, Apache Spark, pandas, and many other tools without replication or migrating data to a new store. The open protocol is natively integrated with Unity Catalog, so customers can take advantage of governance capabilities and security controls when sharing data internally or externally.

End-to-end support for machine learning and faster AI delivery

With Databricks, organizations can effectively manage the entire ML lifecycle, from data preparation to deployment, thus reducing the time to production of AI apps.

Databricks Runtime for machine learning automatically creates a cluster configured for ML projects. It comes pre-built with popular ML libraries (namely, TensorFlow, PyTorch, Keras, MLlib, and XGBoost) and Horovod, a distributed framework to scale and speed up deep learning training. Data scientists can also take advantage of Feature Store, designed to search for and share existing features to be used in the training process.

Other embedded tools to boost and automate ML development include the following.

Databricks AutoML prepares datasets for model training, performs a set of trials, evaluates and fine-tunes models, and displays results. It also saves source code for each trial run, enabling you to review, reproduce, and modify it.

MLflow performs four key functions:

- tracks ML experiments and records parameters and results for comparison;

- wraps ML code into reusable and shareable packages;

- deploys models from a variety of ML libraries to a variety of model serving platforms; and

- saves models to a centralized store allowing companies to version, annotate, and collaboratively manage them.

The open source platform works with Java, Python, and R.

Hyperopt is a Python library that helps data scientists scan a set of models, optimize their hyperparameters, and select the best-performing version.
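The general idea of automated hyperparameter tuning can be shown with plain random search, the simplest strategy of this kind. The sketch below does not use Hyperopt or reproduce its search algorithms; the `validation_loss` function is a hypothetical stand-in for training and scoring a real model, and the search space is made up.

```python
import random


def validation_loss(learning_rate, depth):
    """Hypothetical stand-in for training a model and scoring it."""
    return (learning_rate - 0.1) ** 2 + 0.01 * (depth - 6) ** 2


def random_search(objective, space, trials, seed=0):
    """Sample `trials` configurations and keep the one with the lowest loss."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(trials):
        params = {
            "learning_rate": rng.uniform(*space["learning_rate"]),
            "depth": rng.randint(*space["depth"]),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


space = {"learning_rate": (0.001, 0.5), "depth": (2, 12)}
best_params, best_loss = random_search(validation_loss, space, trials=200)
```

Dedicated libraries improve on this baseline by using the results of earlier trials to decide where to sample next.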

In 2023, Databricks launched a long-awaited instrument called Model Serving that makes it possible to deploy MLflow models to the lakehouse as REST API endpoints. This new service simplifies the delivery of real-time ML applications (such as recommender systems or AI chatbots) to production. Moreover, it automatically scales cloud resources up and down to meet changes in demand, balancing cost-effectiveness with scalability.

Multilevel data security

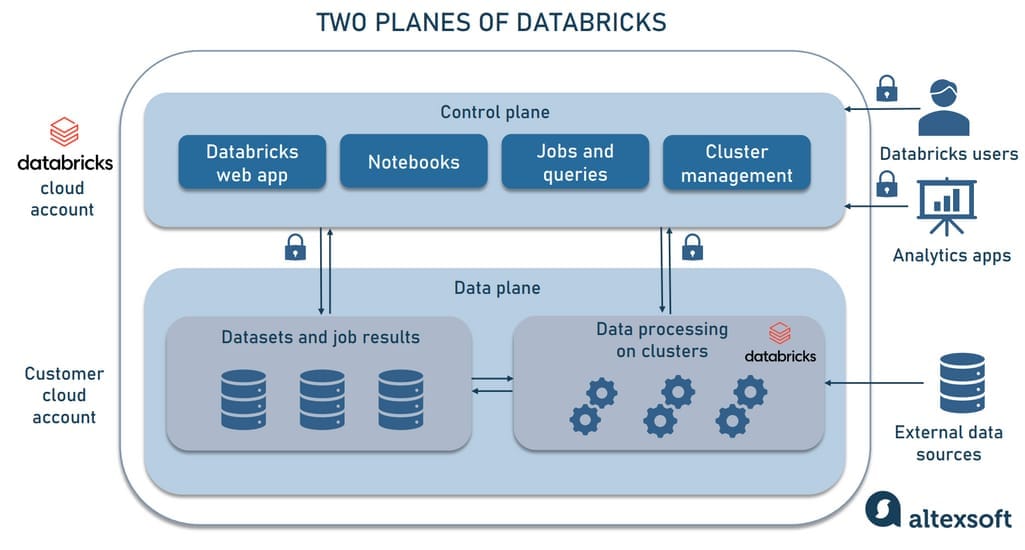

Databricks was designed with security in mind. To mitigate risks, it runs operations from two cloud environments: the control plane and the data plane.

Databricks two-plane infrastructure

The data plane is a customer cloud account where data and compute resources live. All data processing happens in the data plane without leaving your account, and job results also reside here.

The control plane is a Databricks account created with the same cloud service provider as the customer’s. It’s used to run workspaces and manage notebooks, queries, jobs, and clusters. The plane comes with security features like access controls and network protection. If certain information, such as configurations or logs, gets stored in the Databricks account, it’s encrypted at rest. All messages to and from the control plane are encrypted in transit.

Data experts log into the workspaces using single sign-on (SSO) authentication to build data pipelines, write SQL queries, design ML models, and so on. Once the code is ready, Databricks deploys a cluster to execute the program within the customer account. The cluster works in its own virtual private cloud, which provides an extra layer of security and isolation. You can apply additional precautions, such as secure cluster connectivity, in which clusters launched in the data plane have no public IPs.

The security embedded in the Databricks two-plane infrastructure is strengthened even more by Unity Catalog, which offers centralized, fine-grained access controls, allowing you to make certain rows and columns available only to specific groups. Besides, the platform provides auditing features to monitor user activity and controls to meet compliance standards — such as HIPAA for medical data or PCI for payment card data.
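Row- and column-level controls boil down to two filters applied before any data reaches the user: one chooses which rows a group may see, the other which columns. A toy illustration in plain Python follows; the `POLICIES` structure, the group names, and the sample table are hypothetical and are not Unity Catalog syntax.

```python
# Hypothetical table of patient visits.
ROWS = [
    {"patient": "A-17", "region": "EU", "diagnosis": "flu", "cost": 120},
    {"patient": "B-42", "region": "US", "diagnosis": "asthma", "cost": 310},
    {"patient": "C-08", "region": "EU", "diagnosis": "injury", "cost": 95},
]

# Hypothetical per-group policies: visible columns plus a row predicate.
POLICIES = {
    "eu_analysts": {
        "columns": ["region", "cost"],
        "row_filter": lambda row: row["region"] == "EU",
    },
    "clinicians": {
        "columns": ["patient", "region", "diagnosis"],
        "row_filter": lambda row: True,
    },
}


def read_table(rows, group, policies=POLICIES):
    """Return only the rows and columns that `group` is allowed to see."""
    policy = policies.get(group)
    if policy is None:
        raise PermissionError(f"group {group!r} has no access to this table")
    return [
        {col: row[col] for col in policy["columns"]}
        for row in rows
        if policy["row_filter"](row)
    ]


eu_view = read_table(ROWS, "eu_analysts")
```

In this sketch, EU analysts see costs for EU visits only and never the patient or diagnosis columns, while a group without a policy is refused outright.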

Detailed and comprehensive documentation plus a knowledge base for troubleshooting

Databricks provides numerous tutorials, quickstarts, how-to articles, and best practices guides published on its official website. There are three separate sets of documentation for AWS, Google, and Microsoft Azure since each platform has its own peculiarities.

Besides that, Databricks maintains a unified Knowledge Base where users can look for an answer to a specific question or a solution to a particular problem, no matter where they run the lakehouse. The knowledge base has a search bar, but if you fail to find a relevant article, you still have the option to suggest a new topic via an electronic form and wait for feedback.

Databricks disadvantages

Databricks' pitfalls are not as obvious as its benefits. Still, users can face certain challenges as they get better acquainted with the platform, and we have to mention them to remain unbiased.

Steep learning curve and setup complexity

Despite detailed documentation and the platform’s declared objective of making data processing easier, some customers find the lakehouse difficult to learn and understand. This is partly due to the large number of tools, integrations, and features available. Besides, the platform lacks visualization and drag-and-drop capabilities, which hinders non-programmer data citizens from using it.

The Databricks setup process is another challenge to overcome. Even tech people sometimes describe it as “a bit complex,” “confusing,” or “time-consuming,” taking from several hours to several days. It involves data engineers, machine learning engineers, and other tech experts, depending on how you will use the platform.

Scala as a primary language

Though Databricks supports four languages (SQL, R, Python, and Scala), it’s initially based on Spark, which is, in turn, written in Scala, a language that runs on the Java Virtual Machine (JVM). Considering the environment, commands issued in non-JVM languages require additional transformations to be executed on top of a JVM process.

As a result, Scala code usually beats Python and R in terms of speed and performance. At the same time, Python and R are the primary languages of data people, while Scala is considered hard to learn and not as popular, so it may be difficult to find data specialists who know it well.

High cost of use

Databricks may be considered a commercial, managed version of Apache Spark. The platform simplifies the use of the big data analytics engine with a secure, collaborative environment and multiple services, integrations, and capabilities. But for end customers, improvements come at a substantial price that some small data projects can’t afford to pay. The good news is that Databricks charges based on consumption. So if you take your time learning how to optimize the platform from the start, it will save you a lot of money.
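Consumption-based pricing rewards short-lived, right-sized clusters. The arithmetic below is a sketch under stated assumptions: it supposes that usage is metered in platform units per node-hour on top of the cloud provider's VM charge, and every rate in it is a made-up placeholder, not an actual price.

```python
def job_cost(nodes, hours, units_per_node_hour, price_per_unit, vm_price_per_hour):
    """Estimate one job's cost: platform usage plus cloud VM charges."""
    platform = nodes * hours * units_per_node_hour * price_per_unit
    infrastructure = nodes * hours * vm_price_per_hour
    return round(platform + infrastructure, 2)


# All rates below are hypothetical placeholders.
RATES = {"units_per_node_hour": 2.0, "price_per_unit": 0.25, "vm_price_per_hour": 0.5}

# A cluster left running for a full 8-hour day...
always_on = job_cost(nodes=8, hours=8, **RATES)
# ...versus the same cluster shut down after the 2 hours the job needs.
auto_terminated = job_cost(nodes=8, hours=2, **RATES)
savings = always_on - auto_terminated
```

With these placeholder rates, the always-on cluster costs 64.00 and the auto-terminated one 16.00, so shutting down idle compute cuts the bill by three quarters.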

Relatively small community

As a commercial project, Databricks has a relatively small community compared to popular free tools. It means there are few forums or resources to discuss your problems should they arise.

Stack Overflow hosts only 500 Databricks-related questions, and the Databricks community on Reddit totals just 342 members. You can also reach out to groups of Databricks practitioners and enthusiasts via the Community Home on the official website, though they are far from extensive. Still, you may ask questions, open discussions, and get expert answers and explanations.

Keep in mind that Databricks is known for its great tech support, so the size of the community doesn’t matter that much if you seek help rather than chatting opportunities.

Databricks alternatives

In many cases, Databricks is interchangeable with other cloud data platforms, meaning that you can use them for the same purposes but at a slightly different price and with slightly different performance. The choice will depend on your needs and the experience of your team. Yet, let’s say it right away: Databricks delivers best-in-class ML and MLOps capabilities and is unbeatable in this sense. Now, let’s see the closest alternatives it has.

Databricks vs Snowflake

Both Databricks and Snowflake are cloud-agnostic, autoscaling data platforms that leverage the capabilities of a data warehouse and a data lake. Yet the former is a platform-as-a-service (PaaS) solution primarily targeting data engineers and data scientists, and the latter is a software-as-a-service (SaaS) offering designed with data warehousing and data analysts in mind.

No wonder Databricks shines in core data engineering and machine learning while Snowflake is more entrenched in business intelligence, with each trying to get into the other’s domain. Currently, large enterprises sometimes use both: Databricks for ML workloads and Snowflake for BI and more traditional analytics.

Read about Snowflake pros and cons in our dedicated article The Good and the Bad of Snowflake Data Warehouse.

Azure Synapse vs Databricks

Azure Synapse blends enterprise data warehousing, big data processing with Apache Spark, and tools for BI and machine learning. Similar to Databricks, it’s an end-to-end analytics solution that, however, lacks cross-cloud portability. Besides that, Azure Synapse doesn’t provide a collaborative environment, nor does it support versioning, and overall it has a narrower scope than Databricks.

On the bright side, Azure Synapse is not as complex, hard to set up, and overburdened with features as its counterpart. It perfectly suits companies who want to do traditional data analysis with SQL.

AWS SageMaker vs Databricks

AWS SageMaker competes with Databricks in the machine learning domain since it’s an end-to-end ML platform to simplify building, training, and deploying ML models on the cloud, embedded systems, and edge devices. SageMaker supports Jupyter Notebooks and natively integrates with a plethora of AWS tools and services, storing all data projects in S3. It’s especially praised for very easy and quick ML deployment. So, if you already use Amazon, focus on ML development, and don’t work with large amounts of diverse data, SageMaker can work for you. Otherwise, Databricks, with its big data capabilities, is a better option.

Cloudera vs Databricks

Following the example of Databricks, Cloudera positions itself as a data lakehouse. But instead of Delta Lake, it uses Apache Iceberg to address the challenges of data lakes. This storage layer was created by Netflix for internal needs and open-sourced two years later. Cloudera also includes a unified data fabric (integration and orchestration layer) and facilitates the adoption of a scalable data mesh, a distributed data architecture that organizes data by business domain (HR, marketing, customer service, etc.).

The lakehouses, however, significantly differ in use cases. Databricks focuses on data engineering and data science. Cloudera, on the other hand, mainly takes care of data integration and data management.

How to get started with Databricks

In this section, we collected links to useful resources to get familiar with and start using Databricks.

Learn Databricks is an entry point to explore a lot of useful materials, including explanations of basics, documents from cloud service providers, and schedules of conferences and meetups. If you’re interested in acquiring greater knowledge confirmed with certification, go to pages dedicated to online professional training.

There are actually three get-started pages that briefly instruct you on how to set up an account, benefit from a 14-day free trial, and deploy and configure your first workspace.

Demo Hub has an accumulation of short videos with high-level overviews of Databricks components — workflows, Delta Lake, Unity Catalog, etc.

Databricks YouTube channel contains numerous practical guides, explainers, workshops, and tech talks. For example, there are tutorial series on getting started with Delta Lake, building a cloud data platform, and data analysis for people with no previous programming experience.

This post is a part of our “The Good and the Bad” series.