During shipment, goods are moved using different types of equipment: trucks, cranes, forklifts, trains, ships, etc. What’s more, the goods come in different sizes and shapes and have different transportation requirements. Historically, this was a costly and burdensome process that required a lot of manual labor to load and unload items at each transit point. The laborers who did that job were called dockers.

Dockers working in London, England. Source: Layers of London. CC BY 4.0. No changes to the image were made.

Then came standardized intermodal containers that revolutionized the transportation industry. They were designed to be moved between modes of transport with a minimum of manual labor. As a result, all transport machinery from forklifts and cranes to trucks, trains, and ships can handle these containers.

Those who work in IT may relate to this shipping-container metaphor. Gone are the days when a web app was typically built on the common LAMP (Linux, Apache, MySQL, and PHP) stack. Today, systems may include diverse components, from JavaScript frameworks and NoSQL databases to REST APIs and backend services, all written in different programming languages. What’s more, this software may run partly or completely on different hardware, from a developer’s computer to a production cloud provider. These environments use different operating systems with different requirements.

Docker is a platform for developing and deploying apps in lightweight containers. Just as intermodal containers simplified the transportation of goods, Docker made it easier to package and ship different programs to run on the same host or cluster while keeping them isolated.

This post will provide you with all the information necessary to understand what Docker is, how it works, and when it can be used. Also, you’ll find out about Docker's pros and cons and get acquainted with available alternatives.

What is Docker?

Docker is an open-source containerization platform: it is used to create, deploy, and manage applications in virtualized containers. With Docker, multiple applications, each with its own environment, can run side by side on a single host system while staying isolated from one another.

Launched in 2013 as an open-source project, Docker built on existing container concepts, specifically on Linux kernel features such as cgroups and namespaces.

After its success on Linux, Docker partnered with Microsoft to bring containers and their functionality to Windows Server. Docker is now available for macOS, too.

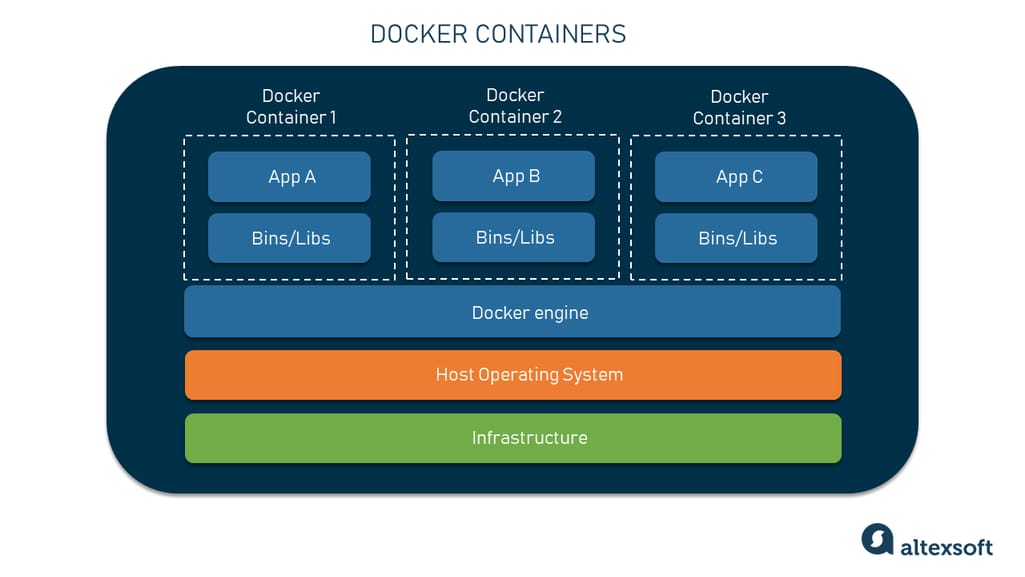

The heart and soul of Docker are containers: lightweight software packages that combine application code with all its dependencies, such as system libraries (libs) and binary files, as well as external packages, frameworks, machine learning models, and more. With Docker, applications and their environments are isolated from each other while sharing the operating system kernel of the host computer.

Docker containers

The Docker engine acts as an interface between the containerized application and the host operating system. It allocates the operating system resources to the containers and ensures that the containers are isolated from one another.

Container environments can run on local computers and on-premises servers or be provided via private and public clouds. Solutions such as Kubernetes are used to orchestrate container environments. You have probably seen Docker vs Kubernetes comparisons, but these two systems cannot be compared directly: Docker is responsible for creating and running containers, while Kubernetes manages them at scale.

What Docker can be compared to, though, are virtual machines.

Docker containers vs virtual machines

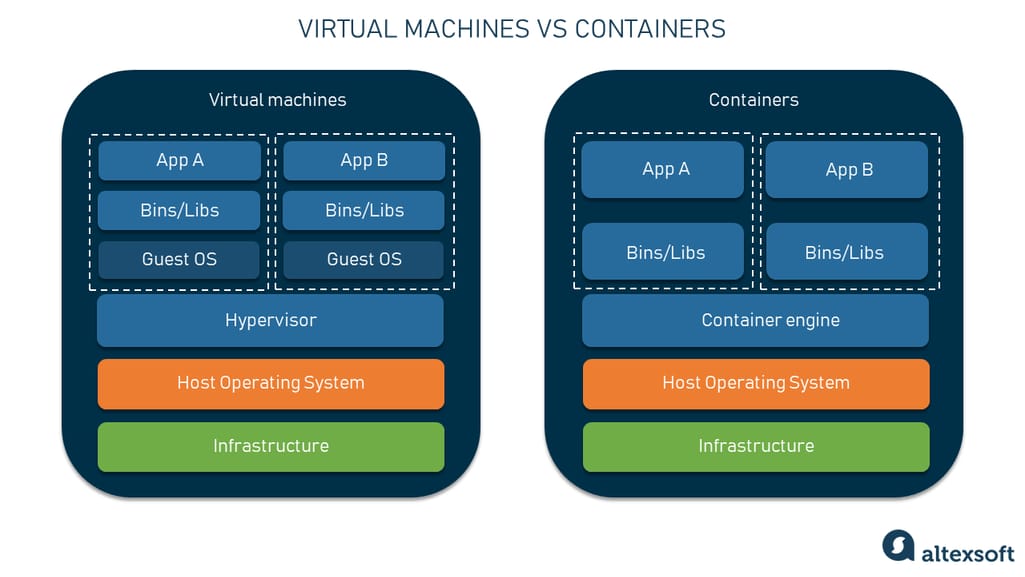

Virtual machines and containers are the two key concepts of virtualization that must be clearly distinguished from each other.

Virtual machines vs containers

A virtual machine (VM) is a virtualized computer that consists of three layers: the guest operating system, binaries and libraries, and finally the application running on the machine. A VM runs on a hypervisor, such as KVM or Xen, that acts as the interface between the host (its hardware or operating system) and the virtual machine.

With this software, the entire physical machine (CPU, RAM, disk drives, virtual networks, peripherals, etc.) is emulated. Thus, the guest operating system can be installed on this virtual hardware, and from there, applications can be installed and run in the same way as in the host operating system.

Containers, on the other hand, all share the operating system of the underlying host computer. Hardware isn’t virtualized. Only the runtime environment, meaning the program libraries and configurations as well as the applications and their data, is isolated. A container engine acts as an interface between the containers and the host operating system and allocates the required resources.

Containers require fewer host resources such as processing power, RAM, and storage space than virtual machines. A container starts up faster than a virtual machine because there is no need to boot up a complete operating system.

Common Docker use cases

There are hardly any limits to the possible uses of container virtualization with Docker. Typical use cases include

- delivering microservice-based and cloud-native applications;

- standardized continuous integration and delivery (CI/CD) processes for applications;

- isolation of multiple parallel applications on a host system;

- faster application development;

- software migration; and

- scaling application environments.

Okay, but how does Docker work? Now that we’ve covered the overview of Docker technology, it’s time to look under the hood of its ecosystem.

Docker architecture core components

To use Docker and its features most effectively, you should have at least a general idea of how its components, most of which are hidden from the user, work together.

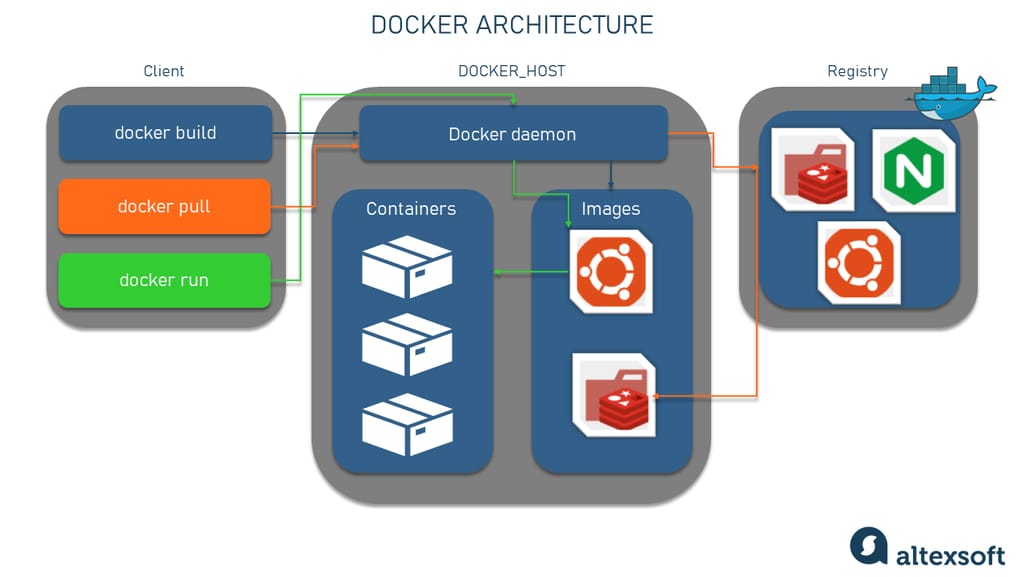

Docker Architecture

Docker uses a client-server architecture: the Docker client communicates with the Docker daemon via a REST API, over UNIX sockets or a network interface.

Docker daemon

The Docker daemon (dockerd) is a persistent background process that manages all Docker objects (we’ll cover them below). It runs on your host operating system and constantly listens for and processes Docker API requests.

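To actually create containers, the Docker daemon relies on a container runtime. Today, the daemon delegates this work to containerd, which in turn uses runC, an OCI runtime originally developed by Docker. Early Docker versions used a pluggable “execution driver” that could also be backed by Linux Containers (LXC), but that support has since been removed. RunC relies on the following Linux kernel features: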

- cgroups — used to manage resources consumed by a container (e.g., CPU and memory usage) and in the Docker pause functionality; and

- namespaces — responsible for isolating containers and making sure that a container’s filesystem, hostname, users, networking, and processes are separated from the rest of the system.
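You can observe both kernel features from the inside by looking at the /proc file system of any Linux process. The Python sketch below is an illustration, not part of Docker: it assumes a Linux host and parses /proc/<pid>/cgroup (one "hierarchy-ID:controllers:path" line per hierarchy) and the symlinks in /proc/<pid>/ns, whose targets look like "pid:[4026531836]". The helper names are hypothetical.

```python
import os
from typing import Dict, List, NamedTuple, Tuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int       # 0 for the single cgroup v2 (unified) hierarchy
    controllers: List[str]  # e.g. ["cpu", "cpuacct"]; empty for cgroup v2
    path: str               # cgroup the process belongs to


def parse_proc_cgroup(text: str) -> List[CgroupEntry]:
    """Parse /proc/<pid>/cgroup, which has one 'ID:controllers:path' line per hierarchy."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # maxsplit=2 because the cgroup path itself may contain ':'
        hierarchy_id, controllers, path = line.split(":", 2)
        names = [c for c in controllers.split(",") if c]
        entries.append(CgroupEntry(int(hierarchy_id), names, path))
    return entries


def parse_ns_link(link: str) -> Tuple[str, int]:
    """Split a namespace link target such as 'pid:[4026531836]' into kind and inode."""
    kind, _, inode = link.partition(":")
    return kind, int(inode.strip("[]"))


def current_isolation(pid: str = "self") -> Dict[str, object]:
    """Report the cgroups and namespace inodes of a process (Linux only)."""
    with open(f"/proc/{pid}/cgroup") as f:
        cgroups = parse_proc_cgroup(f.read())
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {
        name: parse_ns_link(os.readlink(os.path.join(ns_dir, name)))[1]
        for name in sorted(os.listdir(ns_dir))
    }
    return {"cgroups": cgroups, "namespaces": namespaces}


if __name__ == "__main__":
    print(current_isolation())
```

Two processes share a namespace only if these inode numbers match, so running current_isolation() on the host and again inside a container should show different numbers for namespaces such as pid, mnt, and net. The exact cgroup paths depend on the cgroup version and driver your Docker setup uses.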

Docker client

The Docker client is a command-line interface (CLI) that you use to issue commands to a Docker daemon to build, run, and stop applications. The client can reside on the same host as the daemon or connect to a daemon on a remote host, and it can communicate with more than one daemon.

The main purpose of the Docker client is to provide a means by which Docker images (files used to create Docker containers) can be pulled from a registry and run on a Docker host. Common commands issued by a client are

- docker build,

- docker pull, and

- docker run.
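Each of these commands is an ordinary CLI invocation. As a hedged illustration, the Python sketch below assembles the argument lists for docker build, docker pull, and docker run with a few common flags (-t, --rm, -d, --name, -p, -e) and only executes them if the docker CLI is installed. The helper functions are hypothetical and cover just a small subset of the options each command supports.

```python
import shutil
import subprocess
from typing import Dict, List, Optional, Sequence


def docker_build_argv(tag: str, context: str = ".") -> List[str]:
    """docker build -t TAG CONTEXT: build an image from the Dockerfile in CONTEXT."""
    return ["docker", "build", "-t", tag, context]


def docker_pull_argv(image: str) -> List[str]:
    """docker pull IMAGE: download an image from a registry."""
    return ["docker", "pull", image]


def docker_run_argv(image: str, *, name: Optional[str] = None,
                    ports: Optional[Dict[int, int]] = None,
                    env: Optional[Dict[str, str]] = None,
                    detach: bool = False, remove: bool = True,
                    command: Sequence[str] = ()) -> List[str]:
    """docker run ...: create and start a container from IMAGE."""
    argv = ["docker", "run"]
    if remove:
        argv.append("--rm")  # delete the container when it exits
    if detach:
        argv.append("-d")    # run in the background
    if name:
        argv += ["--name", name]
    for host_port, container_port in (ports or {}).items():
        argv += ["-p", f"{host_port}:{container_port}"]  # publish a port
    for key, value in (env or {}).items():
        argv += ["-e", f"{key}={value}"]                 # set an environment variable
    argv.append(image)
    argv += list(command)
    return argv


def run_cli(argv: List[str]) -> str:
    """Execute a command built above; requires the docker CLI on PATH."""
    if shutil.which(argv[0]) is None:
        raise RuntimeError(f"{argv[0]} not found on PATH")
    result = subprocess.run(argv, check=True, capture_output=True, text=True)
    return result.stdout
```

For example, run_cli(docker_run_argv("hello-world")) starts Docker’s test image and returns whatever it prints. Keeping argument construction separate from execution makes the commands easy to log and to check in tests without a running daemon.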

Docker Engine API

The Docker Engine API is a RESTful API used by applications to interact with the Docker daemon. It can be accessed by an HTTP client or the HTTP library that is part of most modern programming languages.

The API used for communication with the daemon is well-defined and documented, allowing developers to write programs that interface directly with the daemon without using the Docker client.
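For example, the Engine API can be called with nothing but Python’s standard library. The sketch below assumes the daemon listens on the default UNIX socket, /var/run/docker.sock, and that your user has permission to access it; it sends plain HTTP GET requests to documented endpoints such as /version and /containers/json. The UnixHTTPConnection and engine_get names are our own, not part of any Docker SDK.

```python
import http.client
import json
import socket
from typing import Any

DEFAULT_DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0):
        # "localhost" only ends up in the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = DEFAULT_DOCKER_SOCKET) -> Any:
    """Send GET <path> to the Engine API and decode the JSON response."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"{response.status} {response.reason}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()


if __name__ == "__main__":
    version = engine_get("/version")
    print(version["Version"], version["ApiVersion"])
    for container in engine_get("/containers/json"):
        print(container["Id"][:12], container["Image"])
```

Here engine_get("/containers/json") returns a list describing the running containers, much like docker ps. Docker’s official SDKs wrap these same endpoints with a friendlier interface.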

Docker registries

Docker registries are repositories for storing and distributing Docker images. The default registry is Docker Hub, a public registry that hosts millions of public images as well as curated "official" images.

You can also run your own private registry to store commercial and proprietary images and to eliminate the overhead associated with downloading images over the Internet.

The Docker daemon downloads images from registries when you run the docker pull command. It also automatically downloads the images specified in a docker run command or in the FROM instruction of a Dockerfile if those images are not available on the local system.
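When you pass a short name such as nginx to docker pull, it is first expanded into a fully qualified reference. The sketch below imitates those rules in simplified form: a first path component that contains a dot or colon, or equals localhost, is treated as a registry host; otherwise Docker Hub (docker.io) is assumed, single-word names get the library/ prefix used for official images, and the tag defaults to latest when neither a tag nor a digest is given. It is an approximation written for illustration, not Docker’s actual parser, and it skips some edge cases.

```python
from typing import NamedTuple, Optional

DEFAULT_REGISTRY = "docker.io"  # Docker Hub
DEFAULT_TAG = "latest"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]

    def full_name(self) -> str:
        name = f"{self.registry}/{self.repository}"
        if self.tag:
            name += f":{self.tag}"
        if self.digest:
            name += f"@{self.digest}"
        return name


def parse_image_ref(ref: str) -> ImageRef:
    """Expand a short image reference using simplified Docker-style defaults."""
    name, digest = ref, None
    if "@" in name:  # e.g. app@sha256:...
        name, digest = name.split("@", 1)
    tag = None
    last_component = name[name.rfind("/") + 1:]
    if ":" in last_component:  # a ':' after the last '/' starts the tag
        name, tag = name.rsplit(":", 1)
    if tag is None and digest is None:
        tag = DEFAULT_TAG
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest  # explicit registry host, e.g. ghcr.io
    else:
        registry, repository = DEFAULT_REGISTRY, name
        if "/" not in repository:  # official images live under library/
            repository = f"library/{repository}"
    return ImageRef(registry, repository, tag, digest)
```

With these rules, nginx becomes docker.io/library/nginx:latest, while localhost:5000/myapp points at a private registry running on the local machine.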

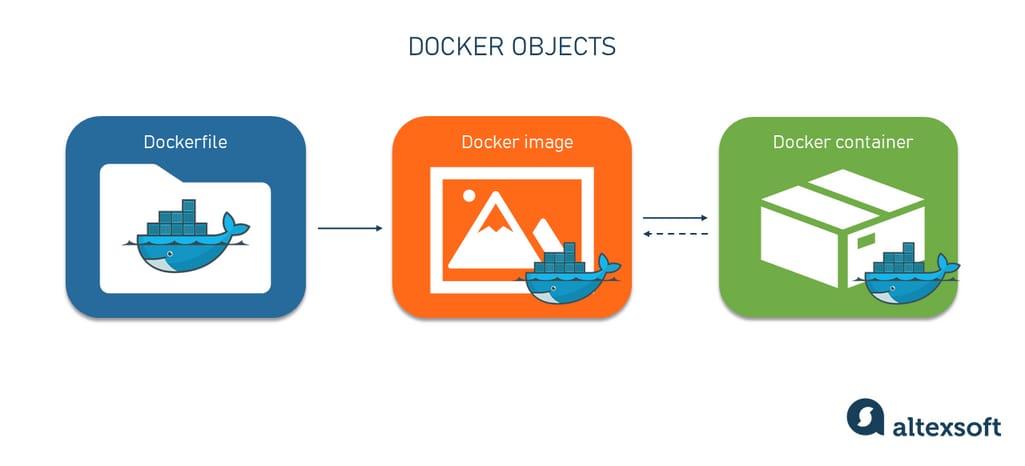

Docker objects

Let’s take a closer look at the Docker objects mentioned above, namely the Dockerfile, Docker images, and Docker containers. They form the core of the whole system and make containerization possible.

Docker objects

Dockerfile

A Dockerfile is a plain-text file containing the commands and instructions Docker follows to build a new Docker image.

A Dockerfile example. Source: Docker docs

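Each instruction in the Dockerfile adds a step to the image build. Instructions that change the filesystem, such as RUN, COPY, and ADD, create new layers, while instructions such as ENV or CMD only update the image’s metadata.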
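To make this concrete, the Python sketch below splits a Dockerfile into instructions, joining lines that end with a backslash and skipping comments, and flags which steps add a filesystem layer. It assumes the rule of thumb from Docker’s documentation that RUN, COPY, and ADD add layers while the other instructions do not; real builds handle more syntax, such as parser directives and heredocs, than this simplified parser does.

```python
from typing import List, NamedTuple

# Rule of thumb from Docker's docs: these instructions add filesystem layers,
# while the others (FROM, ENV, CMD, EXPOSE, ...) don't add a new layer.
FS_LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


class Step(NamedTuple):
    instruction: str
    arguments: str
    adds_fs_layer: bool


def _to_step(logical_line: str) -> Step:
    parts = logical_line.split(maxsplit=1)
    keyword = parts[0].upper()  # instructions are case-insensitive
    arguments = parts[1].strip() if len(parts) > 1 else ""
    return Step(keyword, arguments, keyword in FS_LAYER_INSTRUCTIONS)


def parse_dockerfile(text: str) -> List[Step]:
    """Split a Dockerfile into instructions (simplified: no heredocs or escape directive)."""
    steps: List[Step] = []
    pending = ""  # collects lines continued with a trailing backslash
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments, also inside continuations
        if line.endswith("\\"):
            pending += line[:-1].rstrip() + " "
            continue
        steps.append(_to_step(pending + line))
        pending = ""
    if pending.strip():  # the file ended with a dangling backslash
        steps.append(_to_step(pending))
    return steps


if __name__ == "__main__":
    example = """\
FROM python:3.12-slim
# install dependencies first so this layer can be cached
COPY requirements.txt .
RUN pip install --no-cache-dir \\
    -r requirements.txt
COPY . .
CMD ["python", "app.py"]
"""
    for step in parse_dockerfile(example):
        marker = "adds a layer" if step.adds_fs_layer else "no new layer"
        print(f"{step.instruction:<5} {step.arguments:<45} {marker}")
```

In the example, the two COPY steps and the RUN step add layers, while FROM and CMD do not.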

Docker images

Docker images are read-only templates from which containers are created. An image contains the filesystem layers an application needs plus metadata that tells the Docker daemon how to run containers from it, such as the default command, environment variables, and exposed ports. As mentioned above, you can download images from registries like Docker Hub or create a custom image with a Dockerfile.

Every image consists of multiple layers. Each layer records a change to the image made by an instruction in the Dockerfile: when Docker executes an instruction, it applies the change to the previous image and creates a new layer, also called an intermediate image. Because layers are cached, when you edit a Dockerfile, Docker rebuilds only the layer that changed and the layers on top of it.
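This caching behavior can be modeled with chained hashes: each step’s cache key depends on the key of the layer below it and on the instruction itself, so changing one instruction invalidates that step and everything after it. The sketch below is a simplified model for illustration; Docker’s real cache keys also take into account things like the checksums of files copied by COPY and ADD.

```python
import hashlib
from typing import List


def layer_cache_keys(instructions: List[str]) -> List[str]:
    """Simplified cache keys: hash(parent key + instruction) for each build step."""
    keys = []
    parent = hashlib.sha256(b"").hexdigest()  # stand-in for the empty starting point
    for instruction in instructions:
        # Chaining the parent key means a change at step i alters every later key.
        parent = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        keys.append(parent)
    return keys


def steps_to_rebuild(old: List[str], new: List[str]) -> List[int]:
    """Indices of steps in `new` that cannot be served from the cache built for `old`."""
    reused = 0
    for old_key, new_key in zip(layer_cache_keys(old), layer_cache_keys(new)):
        if old_key != new_key:
            break
        reused += 1
    return list(range(reused, len(new)))
```

This is also why Dockerfiles usually copy dependency manifests and install dependencies before copying the rest of the source code: editing application code then invalidates only the last steps.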

To combine these layers into a single image, Docker uses a union file system, which lets files and directories from different layers overlap transparently so that they appear as one file system.

Docker containers

As we already said, containers are Docker’s structural units that hold the entire package needed to run an application. They are like directories containing everything you need to run a system, including the program code, frameworks, libraries, and binaries.

Each container is an instance of an image. Containers can be created, started, stopped, moved, or deleted via the Docker API or CLI. Each container is isolated and has defined resources. At the same time, a container can be connected to one or more networks, and you can create a new image from its current state.

While the Docker image is read-only, a container is read/write. When Docker starts a container, it creates a read/write layer on top of the image (using the union file system as mentioned earlier) in which the application can be run.
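The copy-on-write behavior of this read/write layer can be simulated with a few dictionaries. In the hypothetical UnionView class below, each image layer is a read-only mapping of paths to contents, lookups go from the topmost layer down, writes land only in the container’s own layer, and deletions are recorded as "whiteout" markers instead of touching the image. It is a toy model of the idea behind union file systems such as OverlayFS, not how Docker’s storage drivers are implemented.

```python
from typing import Dict, List, Optional

_WHITEOUT = object()  # marks a path deleted in the writable layer


class UnionView:
    """Toy union file system: read-only image layers plus one writable layer."""

    def __init__(self, image_layers: List[Dict[str, str]]):
        # Layers are ordered bottom (base image) to top and never modified.
        self._image_layers = [dict(layer) for layer in image_layers]
        self._writable: Dict[str, object] = {}  # the container's own layer

    def read(self, path: str) -> Optional[str]:
        """Return the topmost version of a file, or None if it doesn't exist."""
        if path in self._writable:
            value = self._writable[path]
            return None if value is _WHITEOUT else value
        for layer in reversed(self._image_layers):
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, content: str) -> None:
        # Copy-on-write: the change lands only in the writable layer.
        self._writable[path] = content

    def delete(self, path: str) -> None:
        # A whiteout hides the file without touching the image layers.
        self._writable[path] = _WHITEOUT

    def list_files(self) -> List[str]:
        paths = set(self._writable)
        for layer in self._image_layers:
            paths.update(layer)
        return sorted(p for p in paths if self.read(p) is not None)
```

Creating a second UnionView from the same layers starts with an empty writable layer, which mirrors how every new container gets a clean view of its image and why data written inside a deleted container is gone.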

A container can be stopped and later deleted. When it is deleted, its read/write layer is removed too, so any application data stored inside the container is lost.

The advantages of Docker

As the sections above show, container virtualization and the use of Docker as container engine software offer numerous advantages.

Low resource consumption

Compared to virtual machines, containers are much more resource-efficient. Since a complete operating system doesn’t have to be installed in each container, they are significantly smaller and lighter. Containers also take up less memory and reuse components: containers created from the same image share its read-only layers. As a result, a single physical server or cloud instance can host many more containers than VMs.

Scalability

Containers are highly scalable and can be expanded relatively easily. Docker allows for both horizontal and vertical scaling. With vertical scaling, you resize the computing resources available to a container by raising or lowering its memory and CPU limits.
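Docker’s documentation describes the --cpus flag as a shorthand for the kernel’s CFS quota: --cpus=1.5 is equivalent to --cpu-period=100000 and --cpu-quota=150000. The sketch below performs that conversion, turns --memory values like 512m into bytes (Docker uses binary units, so 1k is 1024 bytes), and builds a docker update command that resizes an existing container. The helper names are hypothetical; check docker update --help for the options and constraints of your Docker version.

```python
from typing import List

DEFAULT_CPU_PERIOD_US = 100_000  # CFS period Docker documents as the --cpus equivalent
_UNIT_BYTES = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def cpus_to_cfs_quota(cpus: float, period_us: int = DEFAULT_CPU_PERIOD_US) -> int:
    """CPU time (microseconds) a container may use per period for a given --cpus value."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return round(cpus * period_us)


def memory_to_bytes(value: str) -> int:
    """Convert a --memory style value such as '512m' or '2g' (binary units) to bytes."""
    text = value.strip().lower()
    if text and text[-1] in _UNIT_BYTES:
        return int(float(text[:-1]) * _UNIT_BYTES[text[-1]])
    return int(text)


def docker_update_argv(container: str, cpus: float, memory: str) -> List[str]:
    """Vertical scaling: change the CPU and memory limits of an existing container."""
    return ["docker", "update", "--cpus", str(cpus), "--memory", memory, container]
```

Raising or lowering these two numbers is all vertical scaling amounts to; the application inside the container keeps running while its limits change.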

With horizontal scaling, you deploy multiple containers to handle the workload. If these containers run on several hosts, you first create an overlay network so that they can communicate. Then you deploy the containers and distribute incoming traffic among them with a load balancer.
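The load-balancing step can be as simple as round-robin: each new request goes to the next replica in the list. The sketch below uses hypothetical replica names such as web-1 and web-2 and leaves out networking entirely; in practice, an orchestrator like Docker Swarm or Kubernetes, or a reverse proxy, does this job for you.

```python
import itertools
from typing import Iterable, List


def replica_names(service: str, count: int) -> List[str]:
    """Hypothetical naming scheme for replicas: web-1, web-2, ..."""
    return [f"{service}-{i}" for i in range(1, count + 1)]


class RoundRobinBalancer:
    """Hand each incoming request to the next replica in turn."""

    def __init__(self, replicas: Iterable[str]):
        self.scale_to(replicas)

    def scale_to(self, replicas: Iterable[str]) -> None:
        """Replace the replica set, e.g. after adding or removing containers."""
        self._replicas = list(replicas)
        if not self._replicas:
            raise ValueError("at least one replica is required")
        self._cycle = itertools.cycle(self._replicas)

    def next_replica(self) -> str:
        return next(self._cycle)
```

Scaling out is then a matter of starting more containers and calling scale_to with the longer list.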

Moving to the cloud or other hosts can also be implemented quickly.

Flexibility and versatility

Docker allows you to use any programming language and technology stack on the server. Because each container ships with its own dependencies, incompatibilities between different libraries and technologies are avoided. That’s why applications designed to run as a set of discrete microservices benefit the most from containers.

Deployment speed

Containers can be deployed very quickly. A container created from an image is ready to use within seconds. There is no need to install and configure an operating system: containers are defined with a few lines of code and deployed to the hosts. Therefore, new application versions shipped in containers reach users much faster than those deployed on VMs.

Large community

Since its creation, Docker has been an open-source project. This contributed to the upward spiral and continued growth of a vast community. According to the official Docker statistics, in 2021, its community was 15.4 million monthly active developers sharing 13.7 million apps at a rate of 14.7 billion pulls per month.

Also, the Stack Overflow Developer Survey 2022 showed that Docker had become a fundamental tool for professional developers, with 69 percent of them reporting that they use it.

The technology has a dedicated Slack channel, a community forum, and thousands of contributors on developer websites like Stack Overflow. What’s more, there are over 9 million container images hosted on Docker Hub.

CI/CD support

As we know, a container is based on a Docker image that can have multiple layers, each representing changes and updates on top of the base. Because unchanged layers are reused, this speeds up the build process. On top of that, versioned images give you version control over containers, enabling developers to roll back to a previous version if the need arises. In other words, you can organize application updates so that updating some containers does not affect the system’s performance or the services provided to users.

Taking this into account, Docker is popular in CI/CD pipelines and DevOps practices, as it speeds up deployments, simplifies updates, and helps teammates work together efficiently.
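A common way to make rollbacks easy is to tag every image built in the pipeline with an immutable identifier, such as the Git commit hash, and to keep a history of what was deployed. The sketch below models that idea in memory; the registry name, the 12-character hash prefix, and the DeploymentHistory class are illustrative assumptions, not features of Docker itself.

```python
from typing import List, Optional


class DeploymentHistory:
    """In-memory record of which immutable image tags were deployed, in order."""

    def __init__(self) -> None:
        self._tags: List[str] = []

    def deploy(self, image: str, commit_sha: str) -> str:
        # Tag each build with a commit hash prefix so it is never overwritten.
        tag = f"{image}:{commit_sha[:12]}"
        self._tags.append(tag)
        return tag

    @property
    def current(self) -> Optional[str]:
        return self._tags[-1] if self._tags else None

    def rollback(self) -> str:
        """Drop the latest deployment and return the tag to redeploy."""
        if len(self._tags) < 2:
            raise RuntimeError("no previous version to roll back to")
        self._tags.pop()
        return self._tags[-1]
```

Because an older tag still points at exactly the same image layers, rolling back means redeploying that tag rather than rebuilding anything.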

Well-written documentation

Docker boasts top-notch documentation. Even if you have never dealt with containers before, the docs will guide you from the beginning to the end of the process, explaining everything concisely and clearly. The docs include everything from guides to manuals to samples. On top of that, the documentation comes with a search bar and tags so that users can find answers more quickly. Docker also offers video explainers for those who prefer watching to reading.

Docker extensions and tools

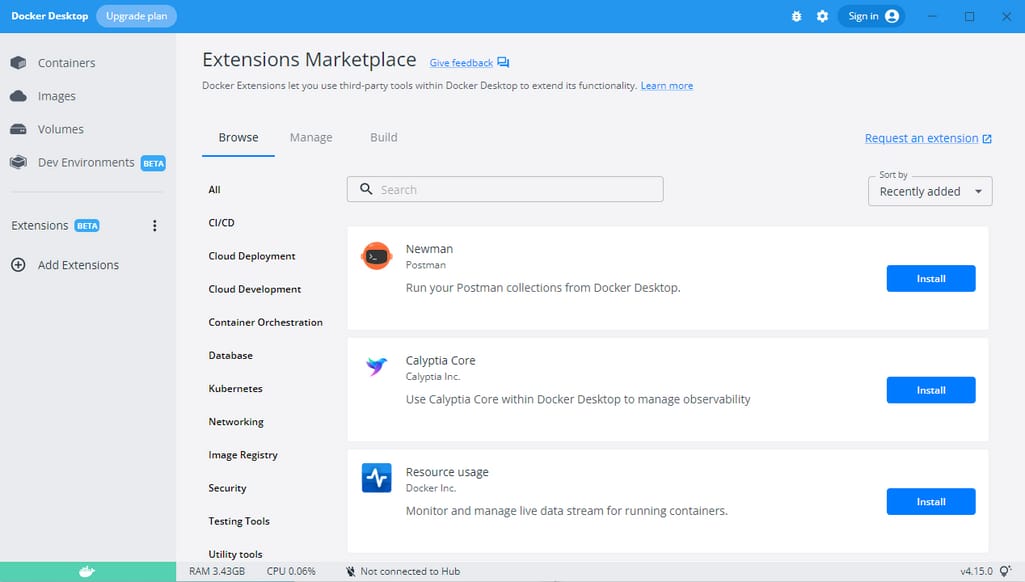

Docker has a new feature, currently in Beta, called Docker Extensions. It allows you to extend Docker functionality through third-party tools and integrate your workflows. In addition to this, you can use the Extensions SDK to build custom add-ons with React and TypeScript to enhance Docker Desktop.

Docker Extensions Marketplace

The available extensions can be downloaded from Docker Extensions Marketplace. There’s a wide selection of tools for you to choose from. For example, the embedded Snyk tool enables you to scan your containers for vulnerabilities as you code. By using Telepresence with Docker, you will be able to bridge your workstation with Kubernetes clusters in the cloud to develop and test your containers.

Also, there's a set of plugins called Docker Developer tools that facilitate the processes of building, testing, and deploying applications using Docker. In this way, you can work on your projects locally as well as collaborate with other team members and deploy applications to different environments.

The downside of Docker

As with any software technology, Docker containers are not a silver bullet and cannot solve every problem on their own. This technology has its drawbacks.

Security

Containers are lightweight, but you pay for this with weaker isolation. Since containers on a host share the same operating system kernel, there is a risk that several containers will be compromised at once if the host system is attacked. This is less likely with VMs, as each VM runs its own operating system. Container technology also has to be properly integrated into existing infrastructure so that established security layers apply to containers as well.

No support for a graphical user interface

Docker was initially created as a solution for deploying server applications that don’t require a graphical user interface (GUI). While you can use some creative workarounds (such as X11 forwarding) to run a GUI app inside a container, these solutions are cumbersome at best. In practice, working with Docker containers means being ready to perform most actions from the command line.

Steep learning curve

OS-specific caveats, frequent updates, and other nuances make mastering Docker challenging: its learning curve is quite steep. Even if you feel that you know Docker inside out, there is still orchestration to consider, which adds another level of complexity. Also, while the Docker Extensions feature is beneficial in many ways, it requires additional knowledge of the third-party tools involved.

Docker alternatives

While Docker is definitely one of a kind, there are several container alternatives out there that may be worth your attention.

Linux Container Daemon

LXD, the "Linux Container Daemon," is a management tool for Linux operating system containers, developed by Canonical, the company behind Ubuntu Linux. With LXD, Linux operating system containers can be configured and controlled via a defined set of commands. LXD is, therefore, suitable for automating mass container management and is used in cloud computing and data centers. LXD enables the virtualization of a complete Linux operating system on a container basis. As such, the technology combines the convenience of virtual machines with the performance of containers.

Podman

Podman is an open-source container management tool for developing, managing, and running OCI containers. OCI stands for the Open Container Initiative, a project launched in 2015 by Docker and other industry players under the Linux Foundation to define open standards for containers. Its two core specifications are the runtime specification and the image specification.

Any software can implement these specifications, creating OCI-compliant container images and container runtimes that are compatible with each other. In addition, there is the Container Runtime Interface (CRI), a Kubernetes API that lets Kubernetes work with different container runtimes, such as containerd or CRI-O, which in turn use OCI runtimes like runC to run containers.
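Under the OCI image specification, an image is described by a JSON manifest that points to a config blob and a list of layer blobs, each referenced by a descriptor containing its media type, SHA-256 digest, and size. The sketch below builds such a manifest for in-memory blobs using the media types defined in the specification; the byte strings in the example are placeholders rather than real gzip-compressed tar layers, so the result is not a pushable image.

```python
import hashlib
import json
from typing import Dict, List

# Media types defined by the OCI image specification
MANIFEST_MEDIA_TYPE = "application/vnd.oci.image.manifest.v1+json"
CONFIG_MEDIA_TYPE = "application/vnd.oci.image.config.v1+json"
LAYER_MEDIA_TYPE = "application/vnd.oci.image.layer.v1.tar+gzip"


def descriptor(media_type: str, blob: bytes) -> Dict[str, object]:
    """A content descriptor: what the blob is, its SHA-256 digest, and its size."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def build_manifest(config: bytes, layers: List[bytes]) -> Dict[str, object]:
    """Assemble an image manifest that references the config and layer blobs."""
    return {
        "schemaVersion": 2,
        "mediaType": MANIFEST_MEDIA_TYPE,
        "config": descriptor(CONFIG_MEDIA_TYPE, config),
        "layers": [descriptor(LAYER_MEDIA_TYPE, layer) for layer in layers],
    }


if __name__ == "__main__":
    # Placeholder bytes, not real gzip-compressed tar archives.
    manifest = build_manifest(b"{}", [b"layer-1", b"layer-2"])
    print(json.dumps(manifest, indent=2))
```

Because every blob is addressed by its digest, any OCI-compliant tool can verify that it received exactly the layers the manifest describes, which is what makes images portable between Docker, Podman, and other runtimes.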

In contrast to Docker, Podman doesn’t rely on a central daemon: it starts containers directly through an OCI runtime such as runC or crun. Its command-line interface is largely compatible with Docker’s, so most docker commands also work with podman.

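Other open-source tools cover specific parts of the container workflow. For example, containerd is a container runtime (Docker itself uses it under the hood), while kaniko and BuildKit are tools for building container images; kaniko can build images without a Docker daemon.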

How to get started with Docker

If you are a programmer, a DevOps engineer, a data engineer, or any other specialist who wants to use Docker in projects, you should have a clear roadmap for getting started with this technology.

Get acquainted with Docker docs

No matter how self-evident you think it is, you should read the available Docker documentation to get familiar with this technology. Luckily for you, the documentation is well-written, nicely structured, and easy to grasp.

Complete the Docker certification course

If you want to validate your ability to carry out tasks and responsibilities related to the Docker platform, you will need to pass the Docker Certified Associate exam. There are quite a few academies that offer online courses with certifications. The cost may vary from one platform to another: On average the Docker certification cost is $250.

Here are a few popular platforms that provide Docker training and certification.

- Docker Certified Associate 2022 by Udemy

- Docker Certified Associate (DCA) Certification Training Course by Simplilearn

- Docker Certification Training Course by Edureka

But of course, there are a lot of other ways to get knowledge and skills to work with Docker.

Make use of a vast Docker community

It’s always a good idea to look for the expertise of people who have been working with Docker for years. For whatever question you may have concerning this technology use, there’s an experienced community of developers ready to lend a helping hand.

Some of the useful resources are

Also, there’s an awesome interactive in-browser Docker learning site called Play with Docker Classroom where you can find labs and tutorials that will help you get hands-on experience using Docker.

Have a fun time learning and using Docker!

This post is a part of our “The Good and the Bad” series. For more information about the pros and cons of the most popular technologies, see the other articles from the series:

The Good and the Bad of Hadoop Big Data Framework

The Good and the Bad of Snowflake

The Good and the Bad of C# Programming

The Good and the Bad of .Net Framework Programming

The Good and the Bad of Java Programming

The Good and the Bad of Swift Programming Language

The Good and the Bad of Angular Development

The Good and the Bad of TypeScript

The Good and the Bad of React Development

The Good and the Bad of React Native App Development

The Good and the Bad of Vue.js Framework Programming

The Good and the Bad of Node.js Web App Development

The Good and the Bad of Flutter App Development

The Good and the Bad of Xamarin Mobile Development

The Good and the Bad of Ionic Mobile Development

The Good and the Bad of Android App Development

The Good and the Bad of Katalon Studio Automation Testing Tool

The Good and the Bad of Selenium Test Automation Software

The Good and the Bad of Ranorex GUI Test Automation Tool

The Good and the Bad of the SAP Business Intelligence Platform

The Good and the Bad of Firebase Backend Services

The Good and the Bad of Serverless Architecture