The heavy lift that is data engineering exists in sharp contrast with something as easy as breathing or as fast as the wind. But, apparently, things were even more difficult before Apache Airflow appeared.

The platform went live in 2015 at Airbnb, the biggest home-sharing and vacation rental site, as an orchestrator for increasingly complex data pipelines. It remains a leading workflow management tool adopted by thousands of companies, from tech giants to startups. This article covers Airflow’s pros and explains why, despite all its virtues, it’s not a silver bullet.

Before we start, all those who are new to data engineering can watch our video explaining its general concepts. Also, read our dedicated article on data orchestration to learn more about how it works.

How data engineering works

What is Apache Airflow?

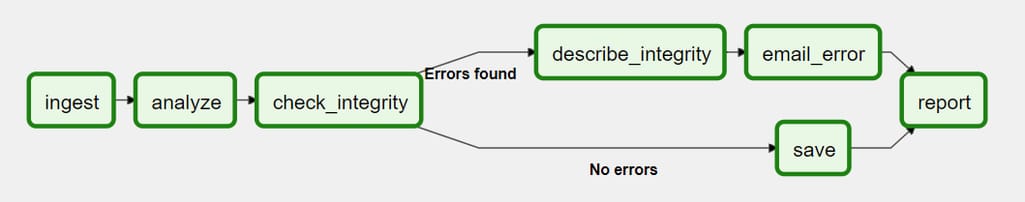

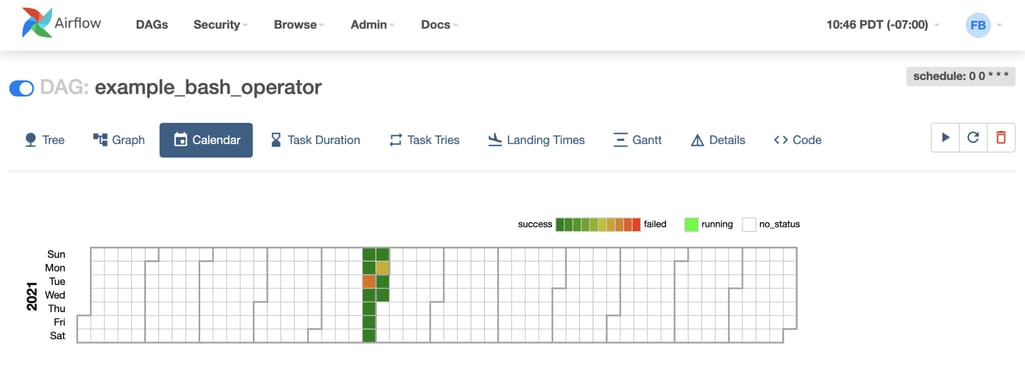

Apache Airflow is an open-source Python-based workflow orchestrator that enables you to design, schedule, and monitor data pipelines. The tool represents processes in the form of directed acyclic graphs that visualize causal relationships between tasks and the order of their execution.

An example of the workflow in the form of a directed acyclic graph or DAG. Source: Apache Airflow

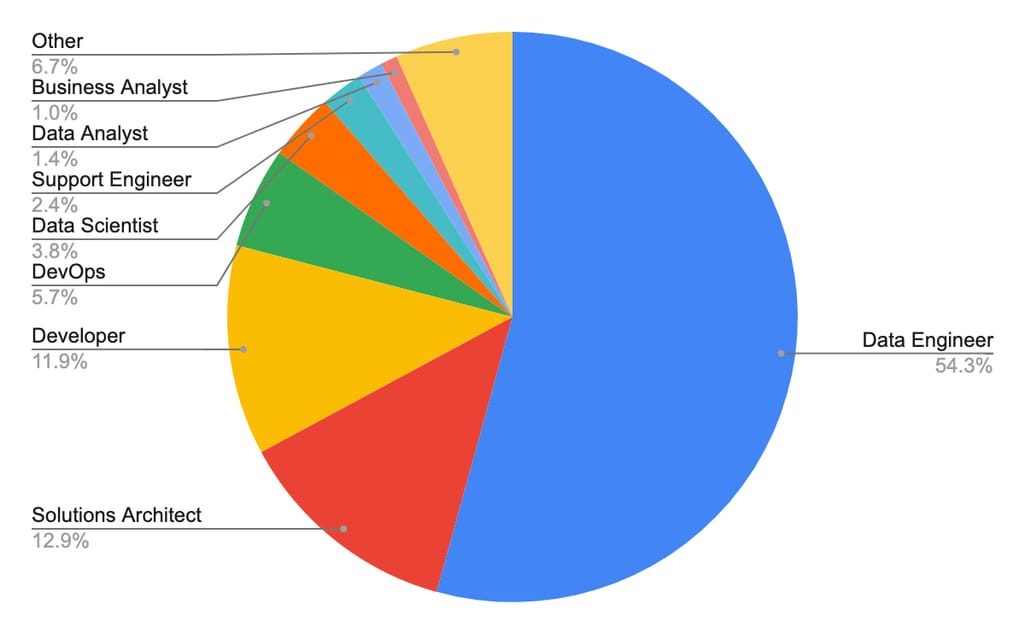

The platform was created by a data engineer, Maxime Beauchemin, for data engineers. No wonder they represent over 54 percent of active Apache Airflow users. Other tech professionals working with the tool are solution architects, software developers, DevOps specialists, and data scientists.

2022 Airflow user overview. Source: Apache Airflow

What is Apache Airflow used for?

Airflow works with batch pipelines, which are sequences of finite jobs with a clear start and end, launched at certain intervals or by triggers. The most common applications of the platform are:

- data migration, or taking data from the source system and moving it to an on-premise data warehouse, a data lake, or a cloud-based data platform such as Snowflake, Redshift, and BigQuery for further transformation;

- end-to-end machine learning pipelines;

- data integration via complex ETL/ELT (extract-transform-load/extract-load-transform) pipelines;

- automated report generation; and

- DevOps tasks — for example, creating scheduled backups and restoring data from them.

Airflow is especially useful for orchestrating Big Data workflows. But like any other tool, it’s not omnipotent and has many limitations.

When Airflow won’t work

Airflow is not a data processing tool by itself but rather an instrument to manage multiple components of data processing. It’s also not intended for continuous streaming workflows.

However, the platform is compatible with solutions supporting near real-time and real-time analytics — such as Apache Kafka or Apache Spark. In complex pipelines, streaming platforms may ingest and process live data from a variety of sources, storing it in a repository while Airflow periodically triggers workflows that transform and load data in batches.

Another limitation of Airflow is that it requires programming skills. It sticks to the workflow-as-code philosophy, which makes the platform unsuitable for non-developers. If this is not a big deal, read on to learn more about Airflow concepts and architecture, which, in turn, shape its pros and cons.

Airflow architecture

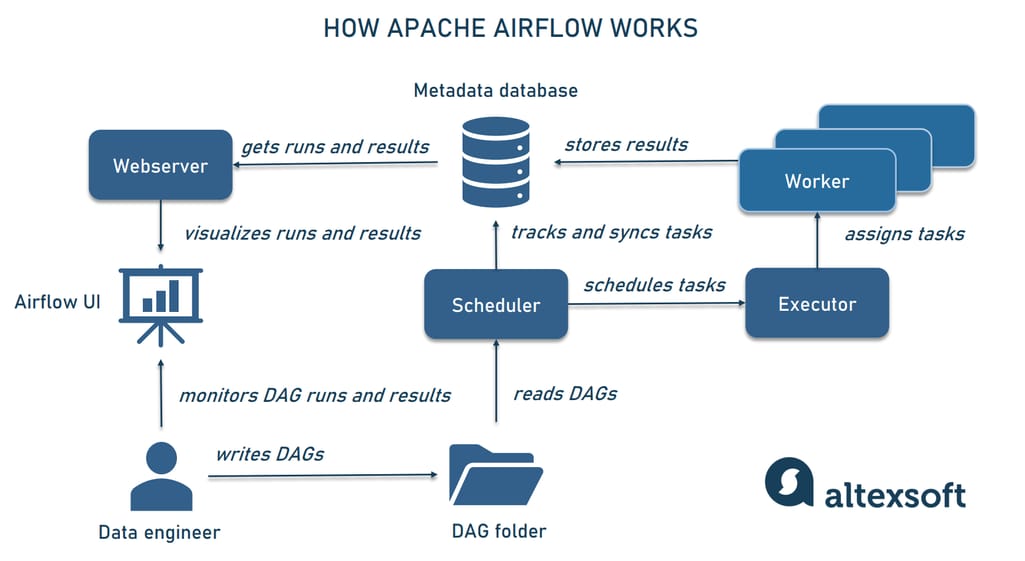

Apache Airflow relies on a graph representation of workflows and a modular architecture with several key components worth knowing from the start.

Key components of Apache Airflow and how they interact with each other

Directed Acyclic Graph (DAG)

The key concept of Airflow is a Directed Acyclic Graph (DAG) that organizes tasks to be executed

- in a certain order, specified by dependencies between them (hence — directed); and

- with no cycles allowed (hence — acyclic).

Each DAG is essentially a Python script that represents a workflow as code. It contains two key components: operators describing the work to be done and task relationships defining the execution order. DAGs are stored in a specific DAG folder.
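To make the "directed" and "acyclic" properties concrete, here is a minimal sketch in plain Python. It does not use Airflow itself: it relies on the standard library's `graphlib` module (Python 3.9+), and the task names are hypothetical. It shows how dependencies determine an execution order and why a cycle makes the graph invalid.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical task names; each key maps a task to the tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def execution_order(deps):
    """Return one valid execution order, or raise CycleError if the graph has a cycle."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)

# Making "extract" depend on "report" closes a loop,
# so the graph is no longer a valid DAG.
cyclic = dict(dependencies, extract={"report"})
try:
    execution_order(cyclic)
    has_cycle = False
except CycleError:
    has_cycle = True
```

In a real DAG file, operators and their relationships play the role of the dictionary above, and Airflow performs the equivalent validation when it parses the script.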

So, what you mostly do when interacting with Airflow is design DAGs to be completed by the platform. You can create as many DAGs as you want for any number of tasks. Production Airflow environments typically run hundreds of workflows.

Metadata database

A metadata database stores information about user permissions, past and current DAG and task runs, DAG configurations, and more. It serves as the source of truth for the scheduler.

By default, Airflow handles metadata with SQLite, which is meant for development only. For production purposes, choose from PostgreSQL 10+, MySQL 8+, and MS SQL. Using other database engines, for example MariaDB, may result in operational headaches.
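As a rough illustration of switching from SQLite to PostgreSQL, the sketch below builds a SQLAlchemy-style connection URI and exposes it through an environment variable. It assumes Airflow 2.3 or later, where the setting is `sql_alchemy_conn` in the `[database]` section (earlier 2.x releases kept it under `[core]`). The user, password, host, and database name are placeholders.

```python
import os
from urllib.parse import quote_plus


def metadata_db_uri(user, password, host, port, database):
    """Build a SQLAlchemy-style PostgreSQL URI; the password is URL-escaped."""
    return (
        f"postgresql+psycopg2://{user}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


# Placeholder credentials and host, for illustration only.
uri = metadata_db_uri("airflow_user", "p@ss/word", "db.example.internal", 5432, "airflow")

# Airflow reads configuration overrides from AIRFLOW__<SECTION>__<KEY> variables.
os.environ["AIRFLOW__DATABASE__SQL_ALCHEMY_CONN"] = uri
```

In practice the variable would be set in the deployment environment rather than from Python, and the credentials would come from a secrets store.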

Airflow scheduler

The scheduler reads DAG files, triggers tasks according to the dependencies, and tracks their execution. It stays in sync with all workflows saved in the DAG folder and checks whether any task can be started. By default, those lookups happen once per minute but you can configure this parameter.

When a certain task is ready for execution, the scheduler submits it to a middleman — the executor.
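The check the scheduler performs can be pictured with a simplified model. This is not Airflow's actual scheduling code, which also accounts for schedules, trigger rules, pools, and retries. The function and task names are hypothetical. The sketch only shows the core idea: a task becomes ready once all of its upstream tasks have succeeded.

```python
def ready_tasks(dependencies, states):
    """Return tasks that have not started and whose upstream tasks all succeeded."""
    return sorted(
        task
        for task, upstream in dependencies.items()
        if states.get(task) is None
        and all(states.get(u) == "success" for u in upstream)
    )


# Hypothetical workflow: "transform" and "notify" both wait for "extract".
dependencies = {
    "extract": [],
    "transform": ["extract"],
    "load": ["transform"],
    "notify": ["extract"],
}
states = {"extract": "success"}

# Tasks the scheduler would hand over to the executor on this pass.
to_submit = ready_tasks(dependencies, states)
```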

Airflow executor and workers

An executor is a mechanism responsible for task completion. After receiving a command from the scheduler, it starts allocating resources for the ongoing job.

There are two types of Airflow executors:

- local executors live on the same server as the scheduler; and

- remote executors operate on different machines, allowing you to scale out and distribute jobs across computers.

The two main remote options are the Celery Executor and the Kubernetes Executor. Celery is an asynchronous task queue for Python programs, but to use it you need to set up a message broker such as RabbitMQ or Redis. The Kubernetes Executor, by contrast, needs no extra components beyond the Kubernetes cluster itself and, unlike other executors, can automatically scale up and down (even to zero) depending on the workload.

No matter the type, the executor eventually assigns tasks to workers — separate processes that actually do the job.
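The division of labor between an executor and its workers resembles a worker pool. The sketch below is only an analogy built on the standard library's `concurrent.futures`. It uses threads for brevity, whereas Airflow workers are separate processes or pods. `run_task` and the task IDs are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def run_task(task_id):
    """Stand-in for the real work a worker would perform."""
    return f"{task_id}: done"


def execute(task_ids, max_workers=2):
    """Hand each queued task to a pool of workers and collect results in queue order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_task, task_ids))


results = execute(["extract_orders", "extract_users", "extract_events"])
```

Raising `max_workers` here is loosely comparable to adding worker capacity to a local executor. Remote executors achieve the same effect by adding machines.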

Airflow webserver

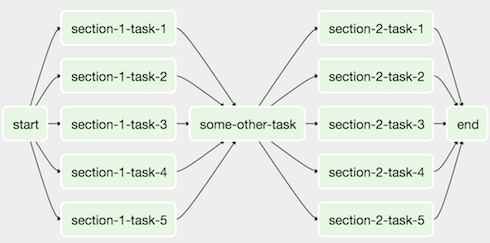

The Airflow webserver runs a user interface that simplifies monitoring and troubleshooting data pipelines.

DAG history in the calendar view. Source: Apache Airflow

It lets you visualize DAG dependencies, see the entire DAG history over months or even years in the calendar view, analyze task durations and overlaps using charts, track task completion, and configure settings, to name just a few possibilities.

All the above-mentioned elements work together to produce benefits that make Airflow so popular among data-driven businesses.

Apache Airflow advantages

Let’s clarify once again: Airflow aims at handling complex, large-scale, slow-moving workflows that run on a schedule. It copes with this task well and is one of the most popular orchestration tools, with 12 million downloads per month. Here are some extra benefits the platform delivers when you use it as intended.

Use of Python: a large pool of tech talent and increased productivity

Python is a leading programming technology in general and within data science in particular. According to the 2022 Stack Overflow survey, it’s the fourth most popular language among professional developers, and those who are learning to code put it in third place, after the frontend Big Three: HTML/CSS and JavaScript.

The fact that Airflow chose Python as the instrument for writing DAGs makes the tool accessible to a wide range of developers and other tech professionals, not to mention data specialists. The language features a clear syntax and a rich ecosystem of tools and libraries that allow you to build workflows with less effort and fewer lines of code.

Everything as code: flexibility and total control of the logic

Since everything in Airflow is Python code, you can tweak tasks and dependencies as you want, apply custom logic that will meet business needs, and automate many operations. The platform allows for a great number of adjustments to be made and this way covers a large variety of use cases.

For example, you may program workflows as dynamic DAGs. This will save you tons of time when you need to handle hundreds or thousands of similar processes differing in a couple of parameters. Instead of constructing such DAGs manually, there is an option to write a script that will generate them automatically. The approach finds its application, for example, in ETL and ELT pipelines with many data sources or destinations. To run them, you require numerous DAGs that repeat the same sequence of tasks with slight modifications.
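The idea behind dynamic DAGs can be sketched without Airflow: one function describes the repeated pipeline, and a loop stamps out a variant per data source. The source names and the dictionary layout below are assumptions made for illustration. In an actual DAG file the loop would build Airflow DAG objects instead of plain dictionaries.

```python
def build_pipeline_spec(source, schedule="@daily"):
    """Describe one pipeline: the same three steps, parameterized by source name."""
    tasks = [f"extract_{source}", f"transform_{source}", f"load_{source}"]
    return {
        "dag_id": f"etl_{source}",
        "schedule": schedule,
        "tasks": tasks,
        # Each task depends on the one before it.
        "dependencies": list(zip(tasks, tasks[1:])),
    }


# Hypothetical data sources that share the same ETL shape.
sources = ["orders", "customers", "payments"]

# One spec per source, keyed by its generated ID.
specs = {spec["dag_id"]: spec for spec in map(build_pipeline_spec, sources)}
```

Adding a fourth source then means adding one list entry rather than writing another near-identical script.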

Task concurrency and multiple schedulers: horizontal scalability and high performance

Out of the box, Airflow is configured to execute tasks sequentially. However, it also lets you perform hundreds of concurrent jobs within a single DAG if there are no dependencies between them.

An example of a DAG with a lot of parallel tasks. Source: Apache Airflow

The scheduler, in turn, can run several DAG parsing processes at a time. Additionally, you can have more than one scheduler working in parallel. All this makes Airflow capable of delivering high performance and scaling to meet the changing needs of your business.

A large number of hooks: extensibility and simple integrations

Airflow communicates with other platforms in the data ecosystem using hooks — high-level interfaces that spare you the trouble of searching for special libraries or writing code to hit external APIs.

There are plenty of available hooks provided by Airflow or its community. So you can quickly link to many popular databases, cloud services, and other tools — such as MySQL, PostgreSQL, HDFS (Hadoop distributed file system), Oracle, AWS, Google Cloud, Microsoft Azure, Snowflake, Slack, Tableau, and so on.

Look through the full list of available hooks here. In case there are no out-of-the-box connections for data platforms you work with, Airflow allows you to custom develop them and in this way implement your specific use case. If the target system has an API, you can always integrate it with Airflow.
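To show what "high-level interface" means in practice, here is a toy hook-like class written against SQLite from the standard library. It is a hypothetical stand-in, not an Airflow class. The method names `run` and `get_records` loosely echo those found on Airflow's database hooks, but the implementation is purely illustrative.

```python
import sqlite3


class SqliteHookSketch:
    """Hypothetical hook-like wrapper that hides connection handling behind two methods."""

    def __init__(self, database=":memory:"):
        self._conn = sqlite3.connect(database)

    def run(self, sql, parameters=()):
        """Execute a statement and commit it."""
        with self._conn:
            self._conn.execute(sql, parameters)

    def get_records(self, sql, parameters=()):
        """Run a query and return all rows as a list of tuples."""
        return self._conn.execute(sql, parameters).fetchall()


hook = SqliteHookSketch()
hook.run("CREATE TABLE events (id INTEGER, name TEXT)")
hook.run("INSERT INTO events VALUES (?, ?)", (1, "signup"))
rows = hook.get_records("SELECT id, name FROM events")
```

Task code that calls `hook.get_records(...)` never touches connection setup or cursors, which is the convenience hooks are meant to provide.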

Full REST API: easy access for third parties

Since the 2.0 version, Airflow users have been enjoying a new REST API that dramatically simplified access to the platform for third parties. It also adds new capabilities — such as

- on-demand workflow launching: You can programmatically trigger a pipeline after events or processes in external systems;

- automated user management; and

- building your own applications based on the Airflow web interface functionality.

These are by no means all the benefits delivered by the full API. For more details, read Airflow’s API documentation.
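On-demand launching, for instance, comes down to a single HTTP call. The sketch below builds, but deliberately does not send, a request to the Airflow 2.x stable REST API endpoint for creating a DAG run. The base URL, DAG ID, credentials, and `conf` payload are placeholders, and basic authentication is assumed to be enabled on the server.

```python
import base64
import json
from urllib.request import Request


def build_trigger_request(base_url, dag_id, conf, username, password):
    """Build (but do not send) a POST request that asks Airflow to start a DAG run."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return Request(
        url=f"{base_url}/api/v1/dags/{dag_id}/dagRuns",
        data=json.dumps({"conf": conf}).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


# Placeholder values for illustration only.
request = build_trigger_request(
    "http://localhost:8080",
    "etl_orders",
    {"run_reason": "file_arrived"},
    "admin",
    "admin",
)
# Sending it would be: urllib.request.urlopen(request)
```

An external system, such as a file-arrival listener, could send this request to start the pipeline as soon as new data lands.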

Open-source approach: an active and continuously growing community

Open-source software typically enjoys the backing of a large tech community, and Airflow is no exception. Thanks to the vast number of active users who have already faced and overcome typical challenges related to the tool, you can find answers to almost all questions online.

The official community residing on the Apache Airflow webpage amounts to over 500 members. The tool boasts 28k stars and more than 2.2k contributors on GitHub, where most of its development takes place. There are also 10,495 questions with the airflow tag on Stack Overflow. But the biggest group, of over 17k Airflow fans, lives on Slack.

Apache Airflow disadvantages

The Airflow team is constantly working on the platform’s functionality, listening to data engineers’ wishes, and addressing issues in each new version of the product. Yet, there is still room for improvement. Here are some of the most common complaints from Airflow users, both tech professionals and business representatives.

No versioning of workflows

The most wanted and long-awaited feature mentioned by Airflow users is DAG versioning, or the ability to record and track changes made to a particular pipeline. Engineers accustomed to version control systems feel its absence keenly, yet Airflow still doesn’t have it.

When you delete tasks from your DAG script, they disappear from the Airflow UI along with all the metadata about them. Consequently, you won’t be able to roll back to the previous version of your pipeline. Airflow recommends creating a new DAG under a separate ID each time you want to erase something. Another solution is to manage DAGs on your own in Git, GitHub, or a similar repository. However, it adds complexity to your daily routine.

Insufficient documentation

One of the comments on Airflow documentation describes it as “acceptable but isn’t great.” Most examples you can find in user manuals are heavily abridged, lacking details and thorough, step-by-step guidelines.

Airflow Survey 2022 shows that over 36 percent of specialists working with the platform would like official tech documents and other resources about using Airflow to be improved. Such an enhancement would solve many problems with onboarding newcomers and somewhat flatten the learning curve.

Challenging learning curve

To start working with Airflow, new users have to grasp quite a lot of things: the built-in scheduling logic, key concepts, configuration details, and more. On top of that, you need solid expertise in Python scripting to get the most out of the workflow-as-code approach, including writing custom components and connections.

No wonder novices have a hard time learning the way Airflow works. Many engineers complain that this experience is confusing and far from intuitive.

The complexity of the production setup and maintenance

This widely recognized pitfall follows from the previous two. Airflow consists of many components that you have to look after so that they work well together. When it comes to the production environment, the complexity only increases since you need to set up and maintain Celery and message brokers or Kubernetes clusters. To make things even more difficult, the official documents don’t give detailed information on how to do it properly.

Due to these tech issues, businesses often end up hiring external consultants or buying paid Airflow services such as Astronomer or Cloud Composer from Google. These products come with additional features that simplify deployment, scaling, and maintenance of the Airflow environment in production.

How to get started with Apache Airflow?

With all its pros and cons, Apache Airflow remains the de facto standard of workflow orchestration. Here are some useful resources that will help you get familiar with the tool.

Content for Apache Airflow 2.4.2, the latest version, serves as a guide to official documentation, tutorials, FAQs, how-tos, and more.

Airflow 101: Essential Tips for Beginners is a webinar by Astronomer to introduce key concepts and components of the platform as well as best practices for working with it.

Airflow Tutorial for Beginners on YouTube combines explanations of core concepts with practical demos to get you started with the tool in just two hours. The course is free, and the only prerequisite to making the most of it is the knowledge of Python.

Airflow — zero to hero and Introduction to Apache Airflow are two other free videos provided by Class Central which will help you set up the Airflow environment, connect to external sources, and write your first DAG.

Introduction to Airflow in Python on DataCamp provides a free intro to Airflow components. If you take the commercial part of the course, you’ll learn how to implement DAGs, maintain and monitor workflows, and build production pipelines.

The Complete Hands-On Introduction to Apache Airflow on Udemy is a bestseller course that teaches you to write, schedule, and monitor Airflow data pipelines through practical examples. Overall, there are 18 courses related to Apache Airflow on this resource. You can look through them here.

This article is a part of our “The Good and the Bad” series. If you are interested in web development and other technologies, take a look at the other posts in the series:

The Good and the Bad of Angular Development

The Good and the Bad of JavaScript Full Stack Development

The Good and the Bad of Node.js Web App Development

The Good and the Bad of React Development

The Good and the Bad of React Native App Development

The Good and the Bad of TypeScript

The Good and the Bad of Swift Programming Language

The Good and the Bad of Selenium Test Automation Tool

The Good and the Bad of Android App Development

The Good and the Bad of .NET Development

The Good and the Bad of Ranorex GUI Test Automation Tool

The Good and the Bad of Flutter App Development

The Good and the Bad of Ionic Development

The Good and the Bad of Katalon Automation Testing Tool

The Good and the Bad of Java Development

The Good and the Bad of Serverless Architecture

The Good and the Bad of Power BI Data Visualization

The Good and the Bad of Hadoop Big Data Framework

The Good and the Bad of Apache Kafka Streaming Platform

The Good and the Bad of Snowflake Data Warehouse

The Good and the Bad of C# Programming

The Good and the Bad of Python Programming