In 2021, the genealogy website MyHeritage presented its Deep Nostalgia app, capable of making photos move, smile, and blink. Much like the magical portraits in Harry Potter, pictures once frozen in time come alive with one mouse click. The project went viral immediately: Millions of people rushed to animate their family photos, experiencing some excitement and maybe a bit of cringe. But how is this even possible? Well, the technology behind this “magic” is called deep learning.

In this post, we’ll explain what deep learning is, how it works, how it’s different from traditional machine learning, and what areas it can be applied to. Get ready, because you’re about to go deep into deep learning.

What is deep learning?

Deep learning is a subfield of machine learning in which artificial neural networks, complex algorithms loosely modeled on the human brain, learn from large sets of data. In simple terms, deep learning focuses on training computers to mimic the way people learn.

As humans, we perceive information from images, text, and speech using not only our sensory organs (eyes, ears, etc.) but also a network of neurons in our brain. Billions of neurons exchange electrical and chemical signals, transmitting information this way. Artificial neural nets pass information in a similar fashion: through multiple interconnected layers consisting of neurons (nodes). In deep learning, depth typically refers to neural nets having more than three layers (they can have 100+ layers, just so you know).

Artificial intelligence vs machine learning vs deep learning

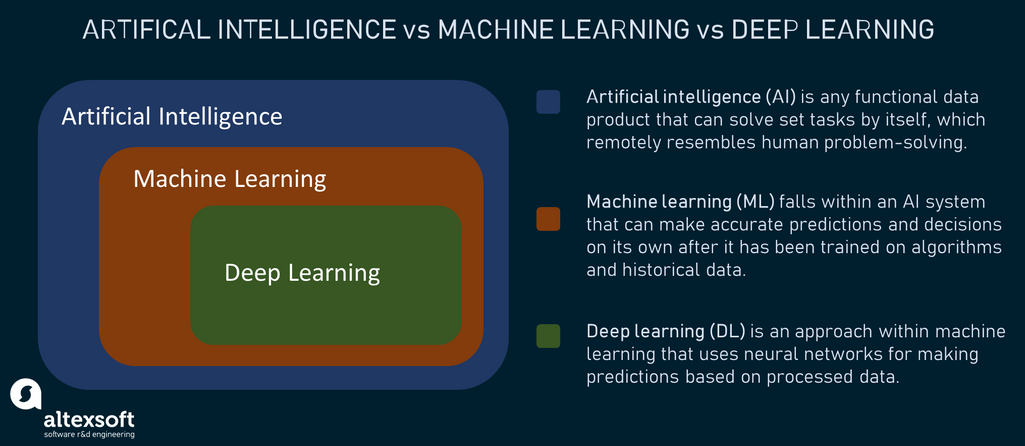

All three technologies are related, but the terms can’t be used interchangeably. Hence, it is important to point out how deep learning differs from machine learning and artificial intelligence.

How artificial intelligence, machine learning, and deep learning are related

As far as the relations go, deep learning is a subfield of machine learning, which in turn is a subset of artificial intelligence. That makes AI the umbrella term for all smart technologies.

Artificial intelligence (AI) is simply any computer algorithm that even remotely resembles human problem-solving. A system can be called AI-powered if it meets the criteria of what we consider intelligent behavior. From the business perspective, AI can be viewed as any functional data product capable of solving problems the way humans would.

Machine learning (ML) is a field of knowledge that relies on various algorithms to train computer systems on historical data so that they can make accurate predictions and decisions on their own when exposed to new data inputs.
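To make “learning from historical data” concrete, here is a minimal sketch that fits a straight line to past examples by ordinary least squares and then predicts a value for a new input. The apartment-size data and the names `fit_line` and `predict` are hypothetical, chosen only for illustration; real ML systems use far richer models and data.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept to historical data by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the best-fit slope
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical historical data: apartment size in m^2 -> monthly rent
sizes = [30, 45, 60, 75, 90]
rents = [600, 850, 1100, 1350, 1600]

model = fit_line(sizes, rents)
print(round(predict(model, 50), 2))  # 933.33
```

The “training” here is the least-squares fit; the “decision on new data” is the call to `predict` for a size the model has never seen.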

Deep learning (DL) is a specific approach within machine learning that uses neural networks to make predictions based on large amounts of data. Deep learning enables computers to perform more complex functions like understanding human speech, automatically recognizing objects in images, or even making those images move.

Okay, to better understand how AI, ML, and DL relate to one another, let's use an analogy with running shoes. AI will stand for all running shoes that use technology to boost running performance. Machine learning, then, is represented by training shoes with special soft foam for better cushioning. Comfy and light, the foam helps someone run faster, but that's not the limit. Why? Because some shoes are so advanced that they earned the nickname “technology doping.” We mean racing shoes with carbon-fiber plates inside the foam that propel the athlete forward with every stride, doing part of the running job for them. And that would be deep learning: the state-of-the-art technology inside the AI family.

Neural networks: how deep learning algorithms work

Imagine you are given a picture of horses. Even if you have never seen this picture before, you will still recognize the animals. It makes no difference at all whether the horse is cartoonish or dressed up for a party as a zebra. You can recognize a horse because you know its defining features well: the shape of its head, body, hooves, and so on.

Simply put, people perceive different images and objects in an abstract and intuitive manner. Machines, on the other hand, can see only numbers that represent individual pixels, not objects as a whole. That’s why such straightforward tasks as telling a horse from a zebra may be quite complex for them.

While we talk about AI resembling human intelligence, existing AI systems are nowhere near our cognitive abilities, although deep learning neural networks have shown the best results so far. But how does deep learning work? Let’s see.

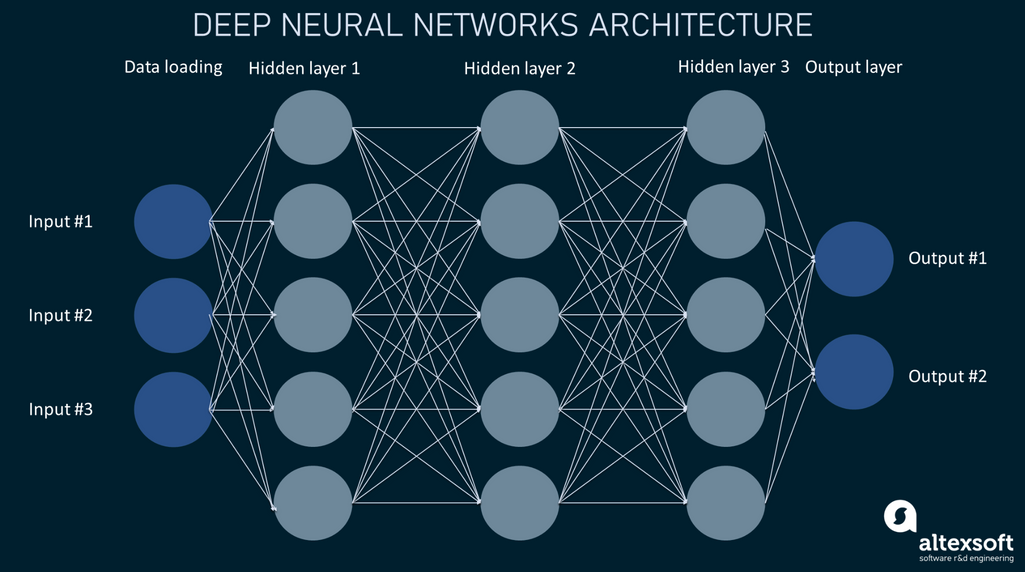

Typical architecture of deep learning neural nets

So, deep learning relies on neural networks to solve a variety of problems from simpler classification tasks to more complex ones like voice and image recognition. The input data goes through multiple processing layers of artificial neurons piled up on top of one another to provide the output. But what are neurons and how exactly are they connected?

Typical architecture of deep neural networks

The picture above shows a typical multi-layered design of neural nets with

- an input layer — the first layer that loads data into a model and passes it without any computation;

- hidden layers — multiple interconnected layers that perform mathematical operations on data and extract features; and

- an output layer — the final layer that provides the result by taking inputs from preceding hidden layers.
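To see what the three kinds of layers mean in practice, here's a rough sketch that counts the adjustable numbers (the weights and biases this post describes a bit further on) in a fully connected network. The layer sizes are hypothetical. Note that the input layer contributes no parameters of its own, since it only passes data along.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected (dense) network.

    layer_sizes[0] is the input layer: it only passes data on, so it has
    no weights or biases of its own. Every later layer does.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out  # one weight per connection between the two layers
        biases = n_out          # one bias per receiving neuron
        total += weights + biases
    return total


# Hypothetical net: 784 input pixels, two hidden layers, one output neuron
sizes = [784, 128, 64, 1]
print(count_parameters(sizes))  # 108801
```

Even this small network has over a hundred thousand numbers to learn, which hints at why deep nets need lots of data and computing power.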

Let's say you want to create a neural net capable of recognizing the aforementioned horses and zebras in pictures. Your dataset will consist of numerous images showing both animals, with each image made up of a certain number of pixels. Each pixel will have a corresponding neuron in the input layer. Neurons are individual nodes responsible for data flow and computations. Generally speaking, they perform the following tasks:

- receive input signals either from the set of raw data or from neurons of a preceding layer,

- perform some mathematical calculations on the signals they received, and

- send output signals to neurons residing in the subsequent layers.
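Since a machine only sees numbers, here's a minimal sketch of how a grayscale image might be turned into input-layer values, one per pixel. The tiny 3x3 image and the function name `image_to_inputs` are hypothetical, and scaling values to the 0 to 1 range is a common convention rather than a requirement.

```python
def image_to_inputs(image, max_value=255):
    """Flatten a 2D grayscale image (a list of pixel rows) into a flat list
    with one value per pixel, scaled to the range 0..1."""
    return [pixel / max_value for row in image for pixel in row]


# Hypothetical 3x3 grayscale image: 0 is black, 255 is white
image = [
    [0, 128, 255],
    [64, 64, 64],
    [255, 0, 128],
]
inputs = image_to_inputs(image)
print(len(inputs))  # 9: one input neuron per pixel
```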

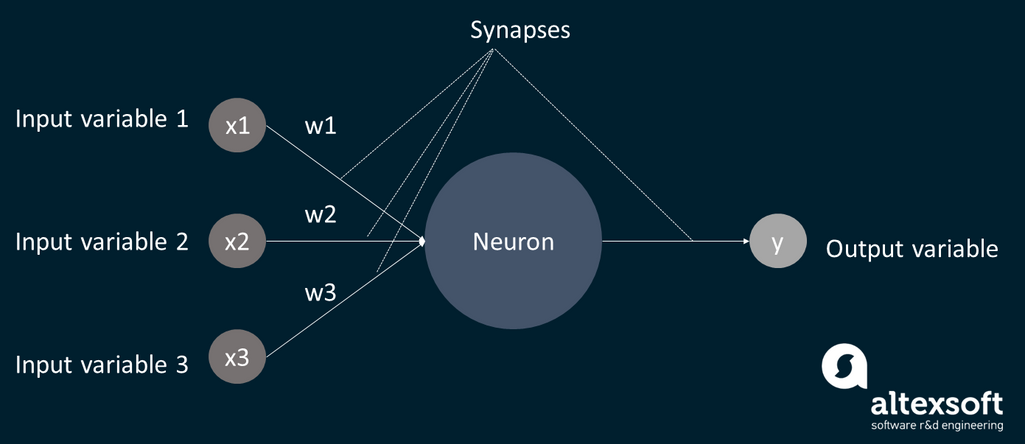

Schematic illustration of neuron functionality in a neural network

Every neuron holds a number. In the input layer, that number is the value of a certain variable, an image pixel in our case; in later layers, it is the result of the neuron's own computation. These numbers are called activations. The activations in one layer determine the activations of the next one, and so on.

Neurons in adjacent layers are connected by links called synapses that transmit activations from one node to another. Each synapse has a weight, a number the network adjusts during training. The bigger the weight, the more influence the sending neuron has on the next layer. In plain English, weights encode which signals (for example, pixels showing stripes) matter most when the network decides whether an image shows a horse or a zebra.

When inputs from the neurons in the previous layer reach a target neuron in the next one, the neuron multiplies each input by its corresponding weight, adds the results up, and passes this weighted sum to an activation function. A bias number is also added to the weighted sum; its role is to shift the activation function so that the prediction fits the data better. In other words, the bias tells you how high the weighted sum needs to be before the neuron starts getting active.
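A single neuron's computation can be sketched in a few lines. This assumes a sigmoid activation function, which is just one common choice (ReLU is another); the input values, weights, and bias are made up for illustration.

```python
import math


def sigmoid(z):
    """Activation function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Multiply each input by its weight, sum, add the bias, then activate."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(weighted_sum + bias)


inputs = [0.5, 0.8, 0.2]
weights = [0.4, -0.6, 1.0]
print(neuron(inputs, weights, bias=0.0))   # about 0.48
print(neuron(inputs, weights, bias=-2.0))  # about 0.11: a negative bias makes activation harder
```

The two calls differ only in the bias, which shows how it raises or lowers the bar the weighted sum has to clear.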

The activation function then calculates the neuron's output value, which synapses pass on to the next layer. The final output represents how strongly the system thinks that a given image shows a horse rather than a zebra.
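Stacking such neurons into layers gives the full forward pass described above. The sketch below assumes fully connected layers with sigmoid activations, and its weights and biases are hypothetical, untrained numbers, so the resulting score is meaningless until the network is trained. By the convention assumed here, a score near 1 means “horse” and a score near 0 means “zebra.”

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(activations, weights, biases):
    """Compute one layer: each neuron takes a weighted sum of all activations
    from the previous layer, adds its bias, and applies the activation function.

    weights[j][i] is the weight from previous-layer neuron i to this layer's neuron j.
    """
    return [
        sigmoid(sum(w * a for w, a in zip(neuron_weights, activations)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the input activations through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical, untrained parameters: 4 inputs -> 3 hidden neurons -> 1 output
layers = [
    ([[0.2, -0.1, 0.4, 0.0],
      [-0.3, 0.5, 0.1, 0.2],
      [0.1, 0.1, -0.2, 0.3]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.4, 0.6]], [0.05]),
]
pixels = [0.9, 0.1, 0.4, 0.7]  # four scaled pixel values
horse_score = forward(pixels, layers)[0]
print(f"score for 'horse': {horse_score:.3f}")
```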

Main types of deep neural networks

The thing is, there is no universal way to build deep learning models. There are many types of neural networks, and none is considered perfect, because different tasks require different architectures.

Convolutional neural networks (CNNs), sometimes called ConvNets, are neural nets whose convolutional layers slide small learned filters over the input to detect local patterns such as edges and textures, extracting features automatically. They are mostly used for image and object recognition, as well as time-series forecasting and anomaly detection.
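Here's a minimal sketch of the core operation in a convolutional layer: sliding a small filter (kernel) across an image and computing a weighted sum at each position. The hand-written vertical-edge kernel and the tiny image are assumptions for illustration; in a real CNN, the kernel values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image ("valid" mode, no padding) and return
    the map of weighted sums. Like most deep learning libraries, this computes
    cross-correlation, i.e., the kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    result = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        result.append(row)
    return result


# Vertical-edge detector: responds where brightness changes from left to right
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
# Hypothetical 4x5 image: dark left part, bright right part
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]
for row in convolve2d(image, kernel):
    print(row)  # [0, 27, 27]: zero over the flat area, large near the edge
```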

Recurrent neural networks (RNNs) are neural nets with looped connections, meaning the output of a neuron is fed back into it as an input at the next step. This gives them a kind of memory of previous inputs, which is why they are often used in developing chatbots, text-to-speech technologies, and price forecasting.
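The “loop” in an RNN can be sketched with a single hidden unit whose state is fed back in at every step. The scalar weights `w_x`, `w_h`, and `b` and their default values are hypothetical; real RNNs use weight matrices and vectors, but the recurrence is the same idea.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a minimal recurrent cell: the new state mixes the
    current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Feed a sequence one element at a time, looping the state back in."""
    h = 0.0  # the "memory" starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


print(run_rnn([1.0, 0.0, 0.0]))
```

Feeding `[1.0, 0.0, 0.0]` produces nonzero states at the second and third steps even though those inputs are zero: the network still “remembers” the first input, although the memory fades with each step.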

Long short-term memory networks (LSTMs) are a type of RNN that can learn long-term dependencies in data and retain past information over time, thanks to gates that control what to remember and what to forget. As such, they are used for time-series forecasting, among other tasks.
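The gates that let an LSTM hold on to information can be sketched for a single unit as below. The gate equations follow the standard LSTM formulation; the parameter values are hand-picked assumptions (not trained) chosen so that the forget gate stays almost fully open.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell. p holds an input weight (w*),
    a recurrent weight (u*), and a bias (b*) for each gate."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new info to write
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate values to write
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much memory to expose
    c = f * c_prev + i * g  # cell state: the long-term memory
    h = o * math.tanh(c)    # hidden state: this step's output
    return h, c


def run_lstm(sequence, p):
    """Run the cell over a sequence and collect the cell state at each step."""
    h, c = 0.0, 0.0
    cells = []
    for x in sequence:
        h, c = lstm_step(x, h, c, p)
        cells.append(c)
    return cells


# Hand-picked (untrained) parameters: a strong forget-gate bias keeps memory alive
params = {
    "wf": 0.0, "uf": 0.0, "bf": 5.0,
    "wi": 5.0, "ui": 0.0, "bi": -2.5,
    "wg": 2.0, "ug": 0.0, "bg": 0.0,
    "wo": 0.0, "uo": 0.0, "bo": 2.0,
}
cells = run_lstm([1.0, 0.0, 0.0, 0.0, 0.0], params)
print([round(c, 3) for c in cells])
```

With these values, the cell state written at the first step decays only slightly over the following four steps of zero input, illustrating the long-lived memory the gates make possible.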

Generative adversarial networks (GANs), as you can tell from the name, pit two neural nets against each other: a generator that creates new data instances resembling the training data, and a discriminator that tries to tell those instances apart from real examples. GANs are widely used by video game developers to increase the resolution of images and textures.
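The adversarial setup can be summarized by the two losses the networks try to minimize. The sketch below assumes the binary cross-entropy formulation of the original GAN, with the commonly used “non-saturating” generator loss; the discriminator outputs are made-up numbers, and the networks and training loop themselves are omitted.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator: it should output values
    near 1 for real samples and near 0 for generated (fake) ones.
    d_real, d_fake: lists of discriminator outputs in (0, 1)."""
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator is rewarded when the
    discriminator mistakes its samples for real ones (outputs near 1)."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs for one batch
d_real = [0.9, 0.8, 0.95]
d_fake = [0.1, 0.3, 0.2]
print("D loss:", discriminator_loss(d_real, d_fake))  # low: the discriminator is winning
print("G loss:", generator_loss(d_fake))              # high: the generator must improve
```

During training, the two networks take turns lowering their own loss, which is exactly the tug-of-war the word “adversarial” refers to.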

Whatever deep learning algorithm you pick, you will need enough computing power to handle the calculations. High-performance graphics processing units (GPUs) are practically indispensable for training neural nets.

Deep learning applications

Moving from theory to practice, let’s discuss some examples of value created through the use of deep learning.

Computer vision and image recognition. The most extensive use of deep learning is in enabling computers to distinguish different objects depicted in pictures. From detecting brand logos in images posted on social media to such complex applications as identifying diseases in medical images, computer vision powered by deep learning proves effective in many areas. Not to mention that self-driving cars would hardly be possible without neural nets.


Speech recognition is an application of natural language processing that can be achieved through deep learning. Neural nets such as RNNs and LSTMs are used to process sound data and accurately detect individual words and, ultimately, sentences. This technology powers many chatbots and virtual assistants such as Siri and Alexa.

Sentiment analysis also relies on deep learning to process human language, identifying the opinions and emotions expressed in text. This is especially useful when a company wants to understand how customers feel about its brand or product. Neural nets can analyze customer sentiment in both structured and unstructured formats, including reviews, comments, and posts.

Personalization and recommendation engines are powered by deep learning, which helps them provide more tailored offerings. Neural nets study massive volumes of data on the interactions users have with a product, service, website, etc. They model nonlinear interactions and find hidden patterns in the data that simpler methods could miss. As a result, we've got such awesome things as personalized content playlists, product offerings, and so on.

Fraud detection. Deep learning is widely applied in fraud detection. Multi-layered neural nets are capable of finding truly complex relations in large datasets and uncovering subtle signals of possible fraudulent activity. Due to this, healthcare, eCommerce, financial services, and other industries apply neural nets to verify transactions, identify fake or duplicate insurance claims, detect theft and scams, and so on.

Is deep learning the future of machine learning?

Deep learning has attracted a lot of attention in recent years, both in academic and business circles. According to McKinsey's survey on the state of AI in 2020, AI adoption keeps adding value in organizations, while deep learning use in business is still at an early stage. But the innovations brought to the table by this AI approach are definitely promising.

One big question remains, though: “Is deep learning the future of machine learning?” Well, we wouldn’t put it that way, as these technologies aren’t the same thing, and each has its own benefits and shortcomings. Here, we’d like to point out the distinctive features of deep learning that add to its popularity.

Automatic feature extraction

If you take classic machine learning algorithms (Naïve Bayes, Decision Trees, Support Vector Machines, etc.), you usually can’t apply them directly to raw data such as images or text. Normally, you will need a preprocessing step known as feature extraction, which turns raw data into representations the algorithms can work with to perform a task like classifying data into a few categories. This process is quite complex and time-consuming, and it requires accomplished domain experts.
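As an illustration of manual feature extraction, the sketch below turns raw pixels into two hand-picked features that a human might design to separate zebras from horses: average brightness and a “stripe score.” Both features, the threshold, and the tiny image patches are hypothetical; a classic algorithm such as a decision tree would then be trained on vectors like these instead of on raw pixels.

```python
def mean_brightness(image):
    """Hand-designed feature: average pixel value."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)


def stripe_score(image, threshold=100):
    """Hand-designed feature: fraction of neighboring pixel pairs (along rows)
    with a sharp brightness jump. Stripes produce many such jumps."""
    jumps = pairs = 0
    for row in image:
        for left, right in zip(row, row[1:]):
            pairs += 1
            if abs(left - right) > threshold:
                jumps += 1
    return jumps / pairs


def extract_features(image):
    """Turn raw pixels into a short, human-chosen feature vector."""
    return [mean_brightness(image), stripe_score(image)]


# Hypothetical 2x6 grayscale patches
zebra_patch = [[0, 255, 0, 255, 0, 255], [255, 0, 255, 0, 255, 0]]
horse_patch = [[120, 125, 130, 128, 122, 126], [118, 121, 127, 129, 124, 120]]

print(extract_features(zebra_patch))  # [127.5, 1.0]: every neighbor pair is a jump
print(extract_features(horse_patch))  # stripe score 0.0: no sharp jumps
```

Every choice here (which features, which threshold) is a human decision, which is the part deep learning aims to automate.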

Unlike traditional machine learning, deep learning eliminates the need for manual feature extraction. Neural networks can draw features out of raw data automatically, without human intervention. This is known as feature learning. The multiple processing layers of a neural net produce different representations of the data, with feature complexity increasing in every new layer. The final, most refined representation of the input data is then used to produce the result.

More data — better performance

What you should also know about deep learning models is that the Big Data era works in their favor. Andrew Yan-Tak Ng, a co-founder and former head of Google Brain and former Chief Scientist at Baidu, explained this point in his talk “What data scientists should know about deep learning” at ExtractConf 2015. He highlighted that deep learning can be considered the first class of scalable algorithms whose performance keeps improving the more data you feed them, as opposed to traditional ML algorithms, whose accuracy plateaus no matter how much training data they get. At the same time, opting for large neural networks is both a blessing and a curse, as it ain’t easy to get enough data.

Transfer learning

Another cool thing about deep learning is that you don’t necessarily have to train neural networks from scratch. Instead, you can opt for the technique called transfer learning. In layman’s terms, it’s when you pick a neural network that has already been trained on one task and reuse it on a new but related problem.

Usually, it works like this: You take the earlier and middle layers of a pre-trained model that can already detect generic features and transfer them to another network. This way, you only need to train or retrain the later layers to recognize more specific features. This approach comes in handy when it is difficult to get enough data or when the project requires faster training. For transfer learning, there are plenty of public and third-party pre-trained models and toolkits that you can fine-tune for your use case.
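Here's a minimal sketch of the idea, assuming a tiny stand-in for the reused layers: their weights (`PRETRAINED_WEIGHTS`, hypothetical values) stay frozen, and only a new output layer, a logistic-regression head, is trained on a small dataset for the new task. In practice, the frozen part would be a real pre-trained network, and training would use a deep learning framework rather than hand-written gradient descent.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Stand-in for the earlier layers of a pre-trained model. In practice these
# weights come from training on a large dataset; here they are hypothetical.
PRETRAINED_WEIGHTS = [[1.0, -1.0, 0.5], [0.3, 0.8, -0.6]]


def frozen_features(x):
    """Reused layers (ReLU units): compute features, but are never updated below."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_WEIGHTS]


def train_head(data, epochs=500, lr=0.5):
    """Train only a new output layer (logistic regression) on top of the
    frozen features, using plain stochastic gradient descent."""
    w = [0.0] * len(PRETRAINED_WEIGHTS)
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            f = frozen_features(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the cross-entropy loss w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(x, head):
    """Full model: frozen feature layers followed by the newly trained head."""
    w, b = head
    f = frozen_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)


# Hypothetical small dataset for the new task: label 1 vs. label 0
data = [([1.0, 0.0, 0.0], 1), ([0.9, 0.1, 0.2], 1),
        ([0.0, 1.0, 0.0], 0), ([0.1, 0.9, 0.1], 0)]
head = train_head(data)
print(round(predict([1.0, 0.0, 0.0], head), 3), round(predict([0.0, 1.0, 0.0], head), 3))
```

Because only the head's few parameters are updated, training is cheap and needs little data, which is the main appeal of transfer learning.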