This article explains data migration, its challenges, and its main types. We will also walk through the data migration process and strategies and share some best practices.

What is data migration?

In general terms, data migration is the transfer of existing historical data to a new storage location, system, or file format. This process is not as simple as it may sound. It involves a lot of preparation and post-migration activities, including planning, creating backups, quality testing, and validating the results. The migration ends only when the old system, database, or environment is shut down.

What makes companies migrate their data assets

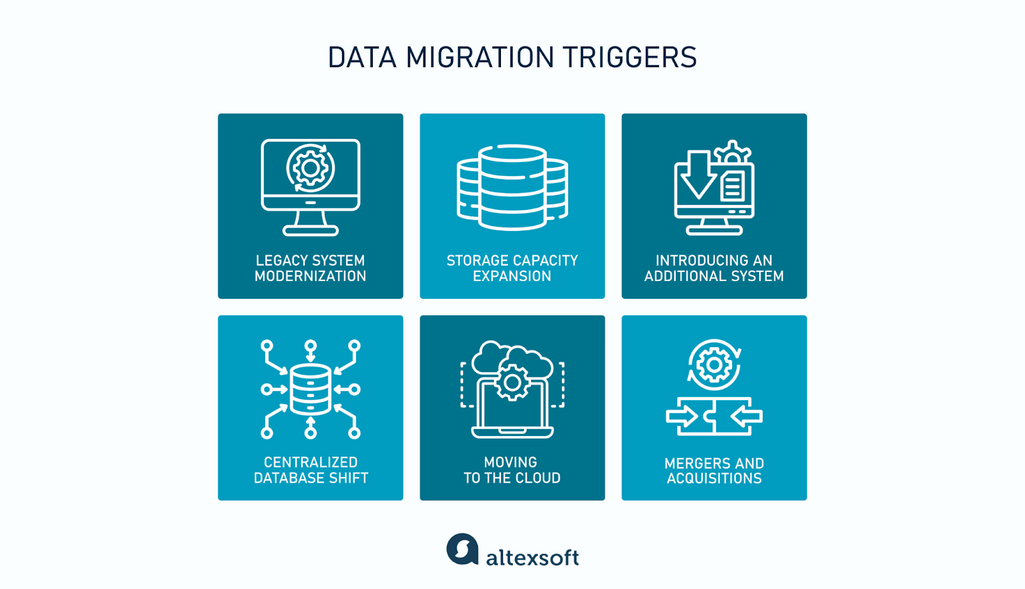

Usually, data migration is part of a larger project, such as

- legacy software modernization or replacement,

- the expansion of system and storage capacities,

- the introduction of an additional system working alongside the existing application,

- the shift to a centralized database to eliminate data silos and achieve interoperability,

- moving IT infrastructure to the cloud, or

- merger and acquisition (M&A) activities, when IT landscapes must be consolidated into a single system.


Data migration is sometimes confused with other processes involving massive data movements.

Data migration vs. other concepts

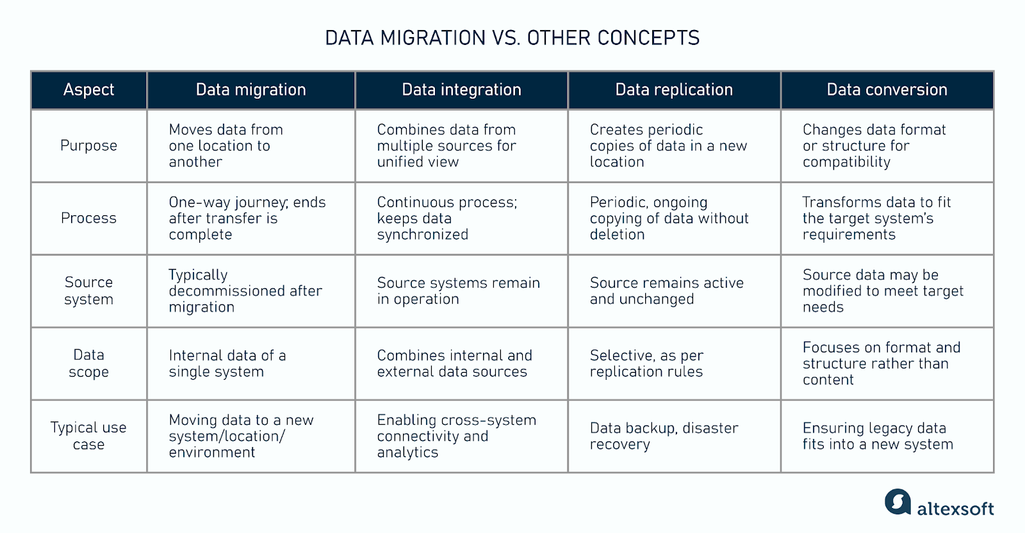

Before we go any further, it’s important to clear up the differences between data migration, data integration, data replication, and data conversion.

Data migration vs. data integration

Unlike migration, which deals with the company’s internal information, integration is about combining data from multiple sources outside and inside the company into a single view. It is an essential element of the data management strategy that enables connectivity between systems and gives access to content across various subjects. Consolidated datasets are a prerequisite for accurate analysis, extracting business insights, and reporting.

Data migration is a one-way journey that ends once all the information is transported to a target location. Integration, by contrast, can be a continuous process that involves data streaming and sharing information across systems.

Data migration vs. data replication

In data migration, after the data is completely transferred to a new location, you eventually abandon the old system or database. In replication, you periodically copy data to a target location without deleting or discarding its source. So, replication has a starting point but no defined completion time.

Data replication can be part of the data integration process and may also become data migration, provided the source storage is decommissioned.

Data migration vs. data conversion

When migrating data, the source and target systems might have different data structures. Legacy data often requires modifications before it can be successfully transported.

Data conversion is the process of transforming data from one format to another. It is done to ensure the data is usable in a new environment and meets the requirements of the target system. It involves changing the data type or structure, for example, converting dates from the MM/DD/YYYY format to DD-MM-YYYY.

Data conversion is usually part of the broader data migration process.
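The date format change mentioned above can be sketched with Python's standard `datetime` module. This is a minimal illustration, not a full conversion layer: the `signup_date` field is hypothetical, and records that don't match the expected source format raise an error instead of being loaded silently.

```python
from datetime import datetime

def convert_date(value: str, src_fmt: str = "%m/%d/%Y", dst_fmt: str = "%d-%m-%Y") -> str:
    """Convert a date string from the source format to the target format.

    Raises ValueError if the value doesn't match src_fmt, so malformed legacy
    records surface during migration instead of reaching the target system.
    """
    return datetime.strptime(value, src_fmt).strftime(dst_fmt)

# Hypothetical legacy records with US-style dates
legacy_rows = [{"id": 1, "signup_date": "07/04/2021"}, {"id": 2, "signup_date": "12/31/2020"}]
converted = [{**row, "signup_date": convert_date(row["signup_date"])} for row in legacy_rows]
print(converted)
```

Parsing into a real date object (rather than shuffling substrings) is what catches impossible values such as a 31st month.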

Data migration strategy

Data migration strategies

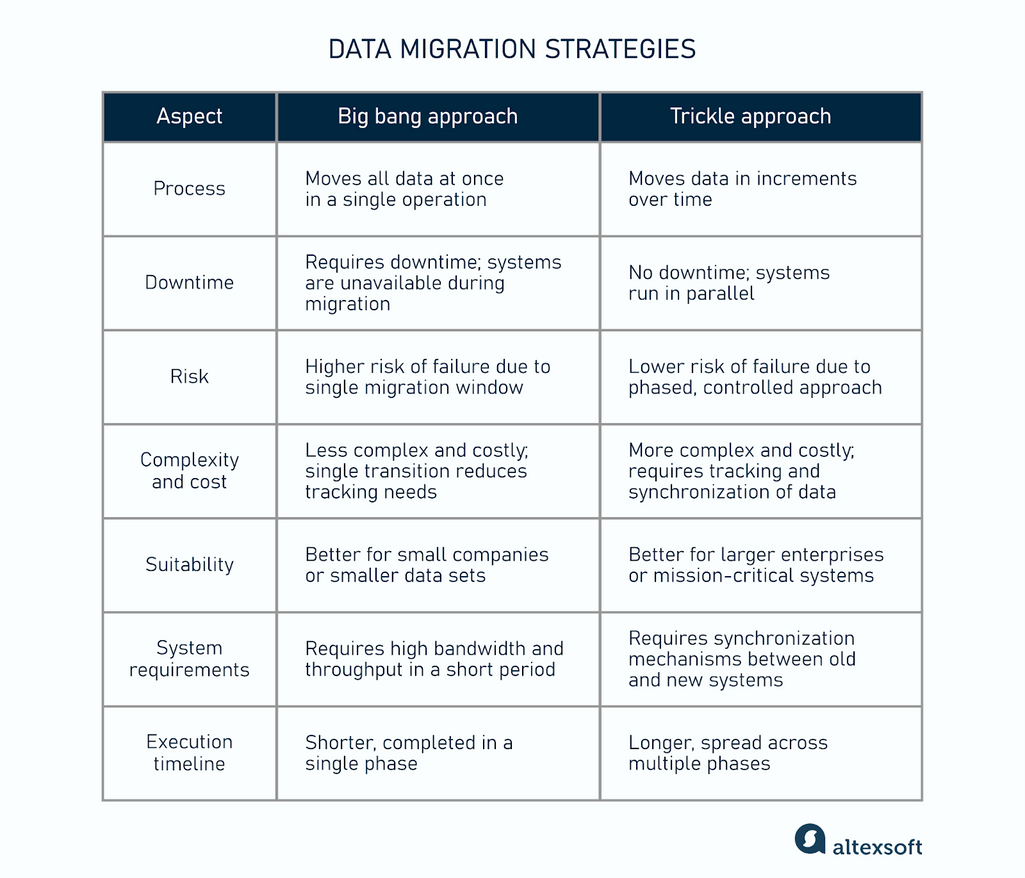

Choosing the right approach to migration is the first step to ensuring that the project will run smoothly without severe delays.

Big bang data migration

Advantages: less costly, less complex, takes less time, all changes happen at once

Disadvantages: a high risk of expensive failure, requires downtime

In a big bang scenario, all data assets are moved from the source to the target environment in one operation within a relatively short time window.

Systems are down and unavailable to users for as long as data is being moved and transformed to meet the requirements of the target infrastructure. The migration is typically executed during a public holiday or weekend when customers presumably don’t use the application.

The big bang approach allows you to complete migration in the shortest possible time and saves you the hassle of working across the old and new systems simultaneously. However, in the era of big data, even midsize companies accumulate vast amounts of information, while the throughput of networks and API gateways is limited. This constraint must be considered from the start.
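To check early whether a big bang migration can fit into a downtime window, a back-of-the-envelope estimate of raw transfer time helps. The sketch below assumes a single link with a known nominal throughput and an illustrative 70 percent efficiency factor for protocol overhead and throttling; real throughput should be measured, and transformation and validation time come on top.

```python
def estimate_transfer_hours(data_tb: float, throughput_mbps: float, efficiency: float = 0.7) -> float:
    """Rough lower bound on wall-clock hours needed to move data_tb terabytes.

    throughput_mbps: nominal link or API throughput in megabits per second.
    efficiency: assumed fraction of nominal throughput actually achieved
    (0.7 is an illustrative guess, not a benchmark).
    """
    bits = data_tb * 1e12 * 8                      # decimal terabytes -> bits
    effective_bps = throughput_mbps * 1e6 * efficiency
    return bits / effective_bps / 3600             # seconds -> hours

# Example: 20 TB over a 1 Gbps link at 70 percent efficiency
print(round(estimate_transfer_hours(20, 1000), 1))
```

Under these assumptions, 20 TB takes roughly 63.5 hours just to copy, which already exceeds a Friday-evening-to-Monday-morning window.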

Verdict. The big bang approach fits small companies or businesses working with small amounts of data. It doesn’t work for mission-critical applications that must be available 24/7.

Trickle data migration

Advantages: less prone to unexpected failures, zero downtime required

Disadvantages: more expensive, takes more time, needs extra effort and resources to keep two systems running

Also known as phased or iterative migration, this approach applies Agile principles to data transfer. It breaks down the entire process into sub-migrations, each with its own goals, timelines, scope, and quality checks.

Trickle migration involves running the old and new systems in parallel and transferring data in small increments. As a result, you avoid downtime, and your customers stay satisfied because the application remains available 24/7.

On the other hand, the iterative strategy takes much more time and adds complexity to the project. Your migration team must track which data has already been transported and ensure users can switch between two systems to access the required information.
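One common way to track which data has already been transported is a checkpoint (also called a watermark). The minimal sketch below assumes source rows are sorted by a monotonically increasing integer `id`; the checkpoint file name and the `write_batch` callable are hypothetical placeholders for your own storage and target-loading code.

```python
import json
from pathlib import Path
from typing import Callable, Iterable, List

CHECKPOINT = Path("migration_checkpoint.json")  # hypothetical location for progress tracking

def load_checkpoint(path: Path = CHECKPOINT) -> int:
    """Return the highest primary key already migrated, or 0 if nothing has been moved yet."""
    if path.exists():
        return json.loads(path.read_text())["last_id"]
    return 0

def save_checkpoint(last_id: int, path: Path = CHECKPOINT) -> None:
    """Record progress so an interrupted run can resume where it stopped."""
    path.write_text(json.dumps({"last_id": last_id}))

def migrate_in_batches(source_rows: Iterable[dict],
                       write_batch: Callable[[List[dict]], None],
                       batch_size: int = 1000,
                       path: Path = CHECKPOINT) -> int:
    """Move rows whose id is above the checkpoint in small increments.

    Assumes source_rows is sorted by a monotonically increasing integer 'id'.
    """
    last_id = load_checkpoint(path)
    batch: List[dict] = []
    for row in source_rows:
        if row["id"] <= last_id:
            continue  # already migrated in an earlier run
        batch.append(row)
        if len(batch) == batch_size:
            write_batch(batch)
            save_checkpoint(batch[-1]["id"], path)
            batch = []
    if batch:  # flush the final partial batch
        write_batch(batch)
        save_checkpoint(batch[-1]["id"], path)
    return load_checkpoint(path)
```

If the process crashes between `write_batch` and `save_checkpoint`, that batch is sent again on the next run, so target loads should be idempotent (for example, upserts). A watermark also doesn't capture later edits to rows that were already moved; that requires the kind of synchronization described next.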

Another way to perform trickle migration is to keep the old application entirely operational until the end of the migration. As a result, your clients will use the old system as usual and switch to the new application only when all data is successfully loaded to the target environment.

However, this scenario doesn’t make things easier for your engineers. They have to make sure that data is synchronized in real time across two platforms once it is created or changed. In other words, any changes in the source system must trigger updates in the target system.
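A minimal sketch of this source-to-target synchronization, assuming the source system emits an ordered stream of change events (in practice, these usually come from change data capture tooling or database triggers). The `ChangeEvent` shape and the dict standing in for the target store are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional

@dataclass
class ChangeEvent:
    """A single change captured in the source system (hypothetical event shape)."""
    op: str                                 # "insert", "update", or "delete"
    key: Any                                # primary key of the affected record
    row: Optional[Dict[str, Any]] = None    # new row state; None for deletes

def apply_change(target: Dict[Any, Dict[str, Any]], event: ChangeEvent) -> None:
    """Replay one source change against the target store (a dict keyed by primary key)."""
    if event.op in ("insert", "update"):
        target[event.key] = dict(event.row)  # upsert, so replaying an event is harmless
    elif event.op == "delete":
        target.pop(event.key, None)          # deleting a missing key is a no-op
    else:
        raise ValueError(f"unknown operation: {event.op}")

def sync(target: Dict[Any, Dict[str, Any]], events: Iterable[ChangeEvent]) -> Dict[Any, Dict[str, Any]]:
    """Apply change events in the order they occurred in the source."""
    for event in events:
        apply_change(target, event)
    return target
```

Applying events strictly in source order matters: an update replayed before its insert, or a delete before an update, would leave the target out of sync.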

Verdict. Trickle migration is the right choice for medium and large enterprises that can’t afford long downtime but have enough expertise to face technological challenges.

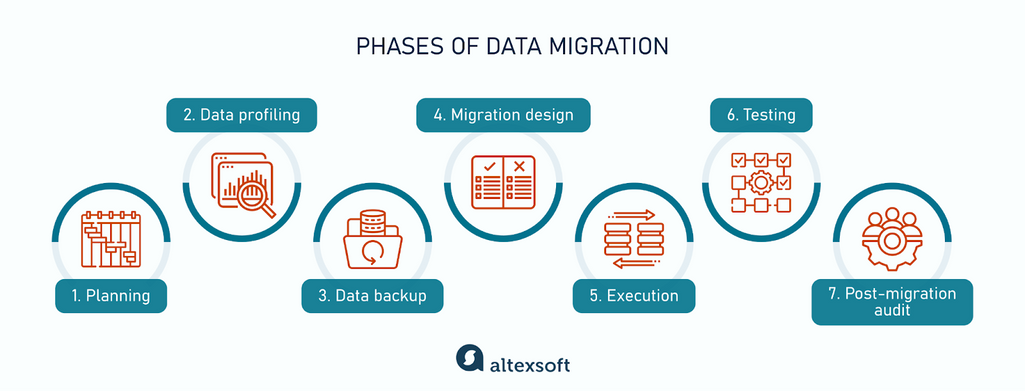

Data migration process

No matter the approach, the data migration project goes through the same key phases — namely

- planning,

- data auditing and profiling,

- data backup,

- migration design,

- execution,

- testing, and

- post-migration audit.

Key phases of the data migration process

Below, we’ll outline what you should do at each phase to transfer your data to a new location without losses, extensive delays, or ruinous budget overruns.

Step 1. Planning: create a data migration plan and stick to it

Data migration is a complex process, and it starts with evaluating existing data assets and carefully designing a migration plan. The planning stage involves four steps.

Refine the scope. The key goal of this step is to filter out any excess data and to define the smallest amount of information required to run the system effectively. You need to perform a high-level analysis of source and target systems in consultation with data users who will be directly impacted by the upcoming changes.

Assess source and target systems. A migration plan should include a thorough assessment of the current system’s operational requirements and how they can be adapted to the new environment.

Set data standards. This will allow your team to spot problem areas across each phase of the migration process and avoid unexpected issues at the post-migration stage.
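One way to make data standards enforceable is to express them as machine-checkable rules. The sketch below assumes a hypothetical customer table with `customer_id`, `email`, and `country` fields; the rules are illustrative, and the email pattern is deliberately loose.

```python
import re
from typing import Callable, Dict, List

# Hypothetical data standards for a customer table; real rules come from the planning phase.
STANDARDS: Dict[str, Callable[[object], bool]] = {
    "customer_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str)
    and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    # Two uppercase letters, in the style of ISO 3166-1 alpha-2 codes
    "country": lambda v: isinstance(v, str) and len(v) == 2 and v.isupper(),
}

def check_record(record: dict) -> List[str]:
    """Return the names of fields that are missing or violate the agreed standards."""
    return [field for field, rule in STANDARDS.items()
            if field not in record or not rule(record[field])]
```

Running the same `check_record` function during profiling, after each sub-migration, and in the post-migration audit is what lets the team spot problem areas at every phase rather than only at the end.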

Estimate the budget and set realistic timelines. After the scope is refined and systems are evaluated, it’s easier to select the approach (big bang or trickle), estimate the resources needed for the project, and set deadlines. According to Oracle’s estimates, an enterprise-scale data migration project lasts, on average, from six months to two years.

Step 2. Data auditing and profiling: employ digital tools

This stage examines and cleanses the full scope of data to be migrated. It aims to detect possible conflicts, identify data quality issues, and eliminate duplicates and anomalies before the migration.

Auditing and profiling are tedious, time-consuming, and labor-intensive activities, so in large projects, automation tools should be employed. Among popular solutions are Open Studio for Data Quality, Data Ladder, SAS Data Quality, Informatica Data Quality, and IBM InfoSphere QualityStage, to name a few.
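The tools listed above do far more, but the core of profiling is simple statistics over the source data. A minimal sketch, assuming records are available as Python dicts and `key` names a field that should be unique; empty strings and missing fields are both counted as nulls here, which is an assumption you may want to change.

```python
from collections import Counter
from typing import Dict, List

def profile(rows: List[dict], key: str) -> Dict[str, object]:
    """Basic profile of a dataset: row count, null counts per column, duplicate keys."""
    columns = sorted({col for row in rows for col in row})
    # A missing field, None, and "" all count as null
    nulls = {col: sum(1 for row in rows if row.get(col) in (None, "")) for col in columns}
    key_counts = Counter(row.get(key) for row in rows)
    # Keys seen more than once, in first-seen order
    duplicates = [k for k, n in key_counts.items() if n > 1]
    return {"rows": len(rows), "nulls": nulls, "duplicate_keys": duplicates}
```

Duplicate keys and unexpected nulls found at this stage are far cheaper to resolve than constraint violations during loading.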

Step 3. Data backup: protect your content before moving it

Technically, this stage is not mandatory. However, best data migration practices dictate a full backup of the content you plan to move—before executing the migration. As a result, you’ll get an extra layer of protection in the event of unexpected migration failures and data losses.
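A backup is only useful if it’s intact. As a minimal sketch, assuming the data has already been exported to a file (for live databases, the DBMS’s own backup tools are the usual route), the copy can be verified with a SHA-256 checksum:

```python
import hashlib
import shutil
from pathlib import Path

def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large exports don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def backup_and_verify(source: Path, backup_dir: Path) -> Path:
    """Copy an export file to backup_dir and confirm the copy is byte-identical."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)  # copy2 also preserves file timestamps
    if sha256_of(source) != sha256_of(target):
        raise OSError(f"backup of {source} does not match the original")
    return target
```

Storing the checksum alongside the backup also lets you re-verify it later, right before a rollback.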

Step 4. Migration design: hire an ETL specialist

The migration design specifies migration and testing rules, clarifies acceptance criteria, and assigns roles and responsibilities across the migration team members.

Though you can use different approaches, extract, transform, and load (ETL) is the preferred one. It makes sense to hire an ETL developer, or a dedicated data engineer with deep expertise in ETL processes, especially if your project deals with large data volumes and complex data flows.

At this phase, ETL developers or data engineers create scripts for data transfer or choose and customize third-party ETL tools. The ideal scenario also involves a system analyst who knows both the source and target systems and a business analyst who understands the value of the data to be moved.
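To give a feel for what a hand-written ETL script looks like, here is a minimal sketch using Python’s built-in `sqlite3` module so it runs standalone. The `legacy_customers` and `customers` tables and their columns are hypothetical; a production script would also read in batches, log progress, and handle errors per record.

```python
import sqlite3

def run_etl(source: sqlite3.Connection, target: sqlite3.Connection) -> int:
    """Extract customers from a legacy schema, transform them, and load them into a new schema."""
    # Extract: read the rows to migrate from the source system
    rows = source.execute("SELECT id, full_name, email FROM legacy_customers").fetchall()

    # Transform: split full names and normalize emails to match the target model
    transformed = []
    for cid, full_name, email in rows:
        first, _, last = (full_name or "").strip().partition(" ")
        transformed.append((cid, first, last, (email or "").strip().lower()))

    # Load: the connection context manager commits on success and rolls back on error,
    # so a failed load leaves the target table unchanged
    with target:
        target.executemany(
            "INSERT INTO customers (id, first_name, last_name, email) VALUES (?, ?, ?, ?)",
            transformed,
        )
    return len(transformed)
```

Usage, with in-memory databases standing in for the real systems:

```python
src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE legacy_customers (id INTEGER, full_name TEXT, email TEXT)")
src.execute("INSERT INTO legacy_customers VALUES (1, 'Ada Lovelace', ' ADA@Example.com ')")
tgt = sqlite3.connect(":memory:")
tgt.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT, email TEXT)")
run_etl(src, tgt)
```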

The duration of this stage depends mainly on the time needed to write scripts for ETL procedures or to acquire appropriate automation tools. If all required software is in place and you only have to customize it, migration design will take a few weeks. Otherwise, it may span a few months.

Step 5. Execution: focus on business goals and customer satisfaction

This is when migration—or data extraction, transformation, and loading—actually happens. In the big bang scenario, it will last no more than a couple of days. Alternatively, if data is transferred in trickles, execution will take much longer but, as we mentioned before, with zero downtime and the lowest possible risk of critical failures.

If you’ve chosen a phased approach, ensure that migration activities don’t hinder usual system operations. Your migration team must also communicate with business units to agree on when each sub-migration will be rolled out and to which group of users.

Step 6. Data migration testing: check data quality across phases

Testing is not a separate phase, as it happens across the design, execution, and post-migration phases. If you have taken a trickle approach, you should test each portion of migrated data to fix problems promptly.

Frequent testing ensures the safe transit of data elements, their high quality, and congruence with requirements when entering the target infrastructure. Our dedicated article explains the details of ETL testing.
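In a trickle migration, each migrated portion can be checked with a simple reconciliation test. The sketch below compares row counts and an order-independent content digest of a source batch and its target counterpart. It assumes both sides have already been fetched as tuples with the same column order and normalized types (for example, the same date and decimal representations), which in practice often takes extra work.

```python
import hashlib
from typing import Iterable, Tuple

def fingerprint(rows: Iterable[tuple]) -> Tuple[int, str]:
    """Return (row count, order-independent digest) for a batch of rows.

    Each row is hashed separately, and the sorted row hashes are hashed again,
    so the same data loaded in a different order yields the same fingerprint.
    """
    row_hashes = sorted(hashlib.sha256(repr(row).encode("utf-8")).hexdigest() for row in rows)
    combined = hashlib.sha256("".join(row_hashes).encode("ascii")).hexdigest()
    return len(row_hashes), combined

def batch_matches(source_rows: Iterable[tuple], target_rows: Iterable[tuple]) -> bool:
    """Test one migrated portion: counts and contents must agree."""
    return fingerprint(source_rows) == fingerprint(target_rows)
```

A mismatch only tells you that a batch differs; a key-by-key comparison is then needed to find which records are affected.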

Step 7. Post-migration audit: validate results with clients

Before launching migrated data in production, validate the results with key stakeholders. This stage ensures that information has been correctly transported and logged. After a post-migration audit, the old system can be retired.

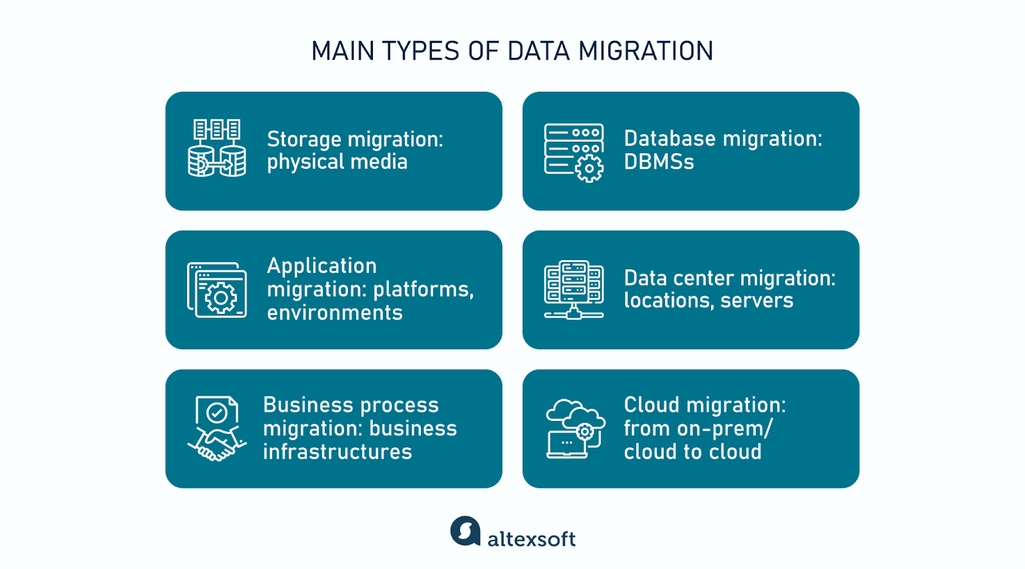

Main types of data migration

There are six commonly used types of data migration. However, this division is not strict. A particular data transfer case may belong, for example, to both database and cloud migration or involve application and database migration at the same time.

Six main types of data migration

Storage migration

Storage migration occurs when a business acquires modern technologies, discarding out-of-date equipment. It entails transporting data from one physical medium to another or from a physical to a virtual environment. Examples of such migrations are

- from paper to digital documents,

- from hard disk drives (HDDs) to faster and more durable solid-state drives (SSDs), or

- from mainframe computers to cloud storage.

The primary reason for this shift is a pressing need for technology upgrades rather than a lack of storage space. For large-scale systems, the migration process can take years. For example, Sabre, the second-largest global distribution system (GDS), had been moving its software and data from mainframe computers to virtual servers for over a decade.

Database migration

A database is not just a place to store data. It provides a structure to organize information in a specific way and is typically controlled via a database management system (DBMS).

So, most of the time, database migration means

- an upgrade to the latest version of a DBMS (so-called homogeneous migration), or

- a switch to a new DBMS from a different provider — for example, from MySQL to PostgreSQL or from Oracle to MSSQL (so-called heterogeneous migration).

The latter case is tougher, especially if the source and target databases support different data structures. The task becomes even more challenging when moving data from legacy databases such as Adabas, IMS, or IDMS.
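Part of what makes heterogeneous migration harder is that column types rarely map one-to-one. The sketch below covers a handful of common MySQL-to-PostgreSQL translations; the mapping table is partial and illustrative, not a complete or authoritative conversion list, and unmapped types fail loudly on purpose.

```python
import re

# Partial, illustrative mapping of MySQL column types to PostgreSQL equivalents.
# A real heterogeneous migration needs a reviewed mapping for every type in the source schema.
MYSQL_TO_POSTGRES = {
    "tinyint(1)": "boolean",   # MySQL's BOOLEAN is an alias for TINYINT(1)
    "int": "integer",
    "bigint": "bigint",
    "double": "double precision",
    "datetime": "timestamp",
    "longtext": "text",
    "blob": "bytea",
}

def map_column_type(mysql_type: str) -> str:
    """Translate a MySQL column type, keeping length/precision arguments for VARCHAR and DECIMAL."""
    t = mysql_type.strip().lower()
    if t in MYSQL_TO_POSTGRES:
        return MYSQL_TO_POSTGRES[t]
    if re.fullmatch(r"int\(\d+\)", t):
        return "integer"  # MySQL display width has no PostgreSQL counterpart
    m = re.fullmatch(r"(varchar|decimal)\((.+)\)", t)
    if m:
        name = "varchar" if m.group(1) == "varchar" else "numeric"
        return f"{name}({m.group(2)})"
    raise ValueError(f"no mapping defined for MySQL type {mysql_type!r}")
```

Raising an error for unknown types, instead of guessing, forces each unusual column to be reviewed before it reaches the target schema.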

Application migration

When a company switches enterprise software vendors, for instance, when a hotel implements a new property management system (PMS) or a hospital replaces its legacy EHR system, data must be moved from one computing environment to another. The key challenge here is that the old and new systems may have their own data models and work with different data formats.
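Differences in data models are often handled with an explicit field mapping. The sketch below assumes a hypothetical legacy PMS guest record with fields `GUEST_NO`, `GST_NAME`, and `LOYALTY_FLG`, and maps them onto an equally hypothetical new model.

```python
from typing import Any, Callable, Dict, Tuple

# Hypothetical mapping from a legacy PMS guest record to a new system's model:
# target field -> (source field, transformation applied to the source value)
FIELD_MAP: Dict[str, Tuple[str, Callable[[Any], Any]]] = {
    "guest_id": ("GUEST_NO", int),                        # zero-padded string -> integer
    "full_name": ("GST_NAME", lambda v: v.strip().title()),
    "loyalty_member": ("LOYALTY_FLG", lambda v: v == "Y"),  # "Y"/"N" flag -> boolean
}

def map_record(legacy: Dict[str, Any]) -> Dict[str, Any]:
    """Build a target-model record from a legacy record using FIELD_MAP."""
    return {target: convert(legacy[source]) for target, (source, convert) in FIELD_MAP.items()}
```

Keeping the mapping declarative lets system and business analysts review it without reading transformation code; a missing source field raises a `KeyError` rather than producing a partial record.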

Data center migration

A data center is a physical facility that organizations use to house their critical applications and data. Put simply, it’s a room or building filled with servers, networks, switches, and other IT equipment. Data center migration can mean different things, from relocating existing computers and wiring to other premises to moving all digital assets (data and business applications) to new servers and storage.

Business process migration

This type of migration is driven by mergers and acquisitions, business optimization, or reorganization to address competitive challenges or enter new markets. All these changes may require transferring business applications and databases with data on customers, products, and operations to the new environment.

Cloud migration

Cloud migration is a popular term that embraces all the above-mentioned cases if they involve moving data from on-premises to the cloud or between different cloud environments.

Depending on data volumes and the differences between source and target locations, migration can take anywhere from 30 minutes to months or even years. The project’s complexity and the cost of downtime determine how exactly to approach the process.

Data migration real-world examples and use cases

In recent years, many global businesses have embraced data migration to drive scalability, agility, and resilience in their operations. We collected several examples of trickle migration to illustrate how companies expanded their business potential.

Zuellig Pharma: Migrating a 40-system SAP landscape with Azure

Types of migration: cloud, application

Zuellig Pharma, a healthcare solutions company operating in Asia, migrated its entire SAP environment, previously hosted on on-premises infrastructure, to an Azure-hosted cloud environment. The migration covered 40 production systems and more than 65 terabytes of data.

As part of a broader hybrid, multi-cloud strategy, the company integrated with Azure and Amazon Web Services (AWS) to benefit from advanced ERP (Enterprise Resource Planning) capabilities, along with analytics and reporting tools like Tableau.

The migration drastically improved Zuellig Pharma’s ability to operate at scale and slashed latency for real-time analytics by 75 percent.

Airbnb: Using AWS to handle a growing user base

Types of migration: cloud, application, database

Airbnb migrated nearly all its cloud computing operations to AWS when it faced difficulties with its original provider.

As Airbnb’s user base rapidly expanded, the company needed a scalable, reliable infrastructure. AWS offered on-demand scalability to meet traffic and storage needs. Airbnb now uses Amazon EC2 instances for applications, caching, and search. Elastic Load Balancing allows the company to distribute traffic, and Amazon EMR helps process 50 GB of data daily.

Additionally, Airbnb completed its MySQL database migration to Amazon RDS with only 15 minutes of downtime. The company benefits from reduced time spent on database administration tasks, such as replication and scaling, which can be performed via API calls.

Netflix: Global expansion and increased service uptime with AWS

Types of migration: cloud, application, database

A major database failure in 2008 prompted Netflix to migrate from on-premises data centers to AWS. The process included transforming its monolithic architecture into microservices and shifting from relational databases to more resilient, horizontally scalable systems.

With AWS’s global reach, Netflix could dynamically allocate resources across multiple regions, supporting its expansion into over 130 countries. The cloud allowed Netflix to add thousands of virtual servers and petabytes of storage to manage rapid user growth.

Moving to AWS helped Netflix achieve nearly "four nines" (99.99 percent) of service uptime. If a data-intensive feature encounters a problem, Netflix can temporarily suspend or limit access to it while maintaining essential streaming functionality for users.

Spotify: Migrating to GCP to focus on product innovation

Types of migration: cloud, application

Spotify transitioned from on-premises data centers to the Google Cloud Platform (GCP). The company opted for a single-provider, “all-in” cloud strategy with GCP, rejecting multi-cloud or hybrid options.

As a music streaming company, Spotify wanted to prioritize developing user-focused services rather than managing complex data centers. By moving to the cloud, they could shift their engineering focus from infrastructure maintenance to product innovation, benefiting their growing audience.

Spotify’s partnership with GCP allowed them to work closely with Google engineers, enhancing GCP’s features and tailoring cloud solutions specifically to Spotify’s requirements.

Amadeus: Partnering with Microsoft to scale data-heavy travel operations

Types of migration: cloud, application, business process

Amadeus partnered with Microsoft to migrate its infrastructure to Azure, establishing a cloud-based platform to support its extensive, interconnected travel services. This transition was essential due to the company's complex, mission-critical services that handle vast amounts of data and traffic in real time.

Amadeus implemented a Zero Trust security framework, continuously validating every stage of digital interaction to protect sensitive customer information. They also achieved PCI DSS compliance through Azure’s advanced security tools and role-based access controls (RBAC).

As part of the migration project, Amadeus broke down its legacy monolithic architecture into cloud-native components, providing greater flexibility to scale and update its services as demand grows.

Data migration challenges

Data migration involves several risks and challenges. Understanding them and how they arise is crucial to preparing for a smooth and successful migration process.

Data loss. Some data may be unintentionally lost or corrupted, especially if the data volumes are large and if there are complex dependencies. Create thorough data backups before migration and conduct incremental migrations where possible. Run pre- and post-migration data verification, comparing datasets in the source and target systems.
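The pre- and post-migration verification mentioned above becomes more actionable when records are compared by primary key. A minimal sketch, assuming both datasets fit in memory as dicts keyed by primary key and that keys have the same, comparable type on both sides; for fields that were intentionally transformed, compare against the transformed source values, or the `changed` list will be noisy.

```python
from typing import Any, Dict, List

def diff_by_key(source: Dict[Any, dict], target: Dict[Any, dict]) -> Dict[str, List[Any]]:
    """Compare source and target records keyed by primary key.

    missing:    in source but not in target (possible data loss)
    unexpected: in target but not in source
    changed:    present in both but with different contents
    """
    src_keys, tgt_keys = set(source), set(target)
    return {
        "missing": sorted(src_keys - tgt_keys),
        "unexpected": sorted(tgt_keys - src_keys),
        "changed": sorted(k for k in src_keys & tgt_keys if source[k] != target[k]),
    }
```

For large tables, the same idea is usually applied per batch or pushed down into SQL queries rather than loading everything into memory.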

Downtime and business disruption. Migration often requires system downtime, which can impact business operations. Plan for downtime during off-peak hours or use a phased approach to minimize impact. Implement a rollback strategy to quickly revert to the old system if the migration fails, and clearly communicate the schedule and potential downtime to all stakeholders.

Performance and scalability issues. If the new data structure does not match the target system’s performance needs, you may experience slowdowns or issues with data retrieval. Conduct performance testing and optimize data structures in the new environment to ensure that the system remains fast and scalable.

Lack of stakeholder engagement. If stakeholders are not informed and consulted early on, their needs may be overlooked. From the beginning, communicate the purpose of the migration, addressing any concerns upfront. Set clear expectations about timelines and deliverables, and be transparent about any challenges or delays.

Unexpected costs. Migration projects may exceed budget due to unforeseen complexities, delays, additional resource needs, or extended use of third-party data migration tools. Develop a detailed budget with contingency funds allocated for unexpected challenges. Consider agile project management to address scope adjustments and perform regular cost assessments to avoid budget overruns.

Security and data governance. Security vulnerabilities can arise if there are no clear access control mechanisms during migration. Data governance defines who owns the data, who can modify it, and how it’s handled over time. Establish clear policies for data ownership and role-based access controls to ensure that only authorized personnel are involved in the migration process.
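Role-based access control comes down to granting permissions to roles rather than to individuals and denying anything not explicitly granted. A minimal sketch with hypothetical migration roles and permissions; real roles and their rights should come from your data governance policy and be enforced by the systems involved, not by application code alone.

```python
from enum import Enum
from typing import Dict, Set

class Permission(Enum):
    READ_SOURCE = "read_source"
    WRITE_TARGET = "write_target"
    APPROVE_CUTOVER = "approve_cutover"

# Hypothetical roles for a migration team
ROLE_PERMISSIONS: Dict[str, Set[Permission]] = {
    "data_engineer": {Permission.READ_SOURCE, Permission.WRITE_TARGET},
    "qa_analyst": {Permission.READ_SOURCE},
    "data_owner": {Permission.READ_SOURCE, Permission.APPROVE_CUTOVER},
}

def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and ungranted permissions are rejected."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Separating the right to write to the target from the right to approve cutover means no single role can both load data and sign off on it.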

Each challenge underlines the importance of a structured approach to data migration, which ensures thorough preparation, communication, and careful resource management.

Data migration best practices

While each data migration project is unique and presents its own challenges, the following golden rules may help companies transfer their valuable data assets safely and avoid critical delays.

- Use data migration as an opportunity to reveal and fix data quality issues. Set high standards to improve data and metadata as you migrate them.

- Hire data migration specialists and assign a dedicated team to run the project.

- Minimize the amount of data to be migrated.

- Profile all source data before writing mapping scripts.

- Allocate considerable time to the design phase as it greatly impacts project success.

- Don’t be in a hurry to switch off the old platform. Sometimes, the first attempt at data migration fails, demanding rollback and another try.

Data migration is often viewed as a necessary evil rather than a value-adding process. This seems to be the root of many difficulties. Treating migration as an important innovation project worthy of special focus is half the battle won.