Humans naturally pick up on tone, sarcasm, and context, but machines still struggle to interpret this kind of emotional subtext. The challenge is amplified in enterprise settings, where systems must process massive volumes of unstructured data in diverse formats like tweets, reviews, surveys, and chat logs. Yet businesses depend on understanding how people feel about their products, services, or brand to make better decisions.

This article explores the main sentiment analysis methods for extracting reliable insights from complex, messy text. We will explain how each approach works, compare their pros and cons, and highlight the best use cases for each method.

What is sentiment analysis?

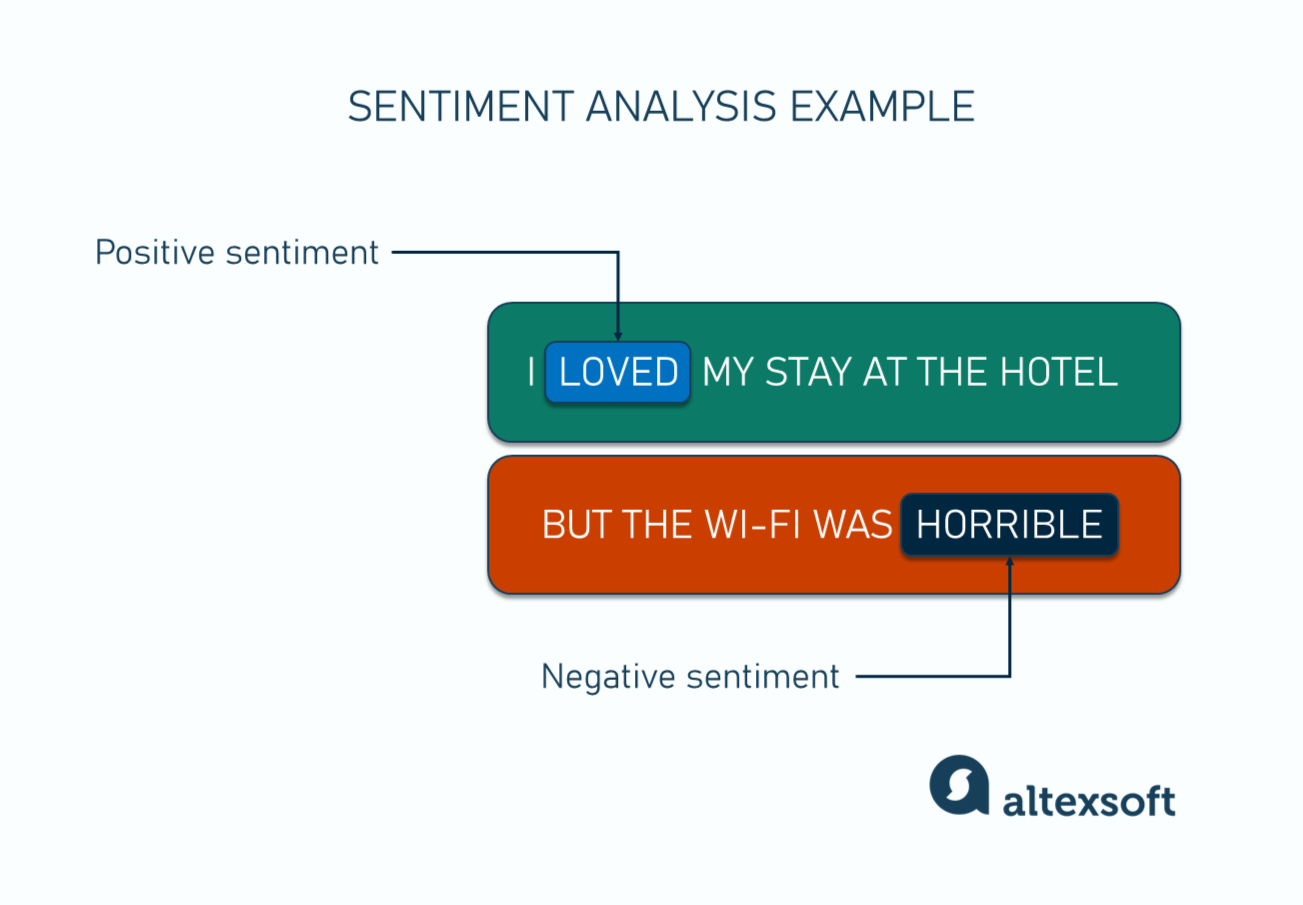

Sentiment analysis, also called opinion mining, is the process of using computational techniques—including natural language processing (NLP), rule-based systems, and machine learning—to identify and interpret the emotional tone behind text data. It helps determine whether a piece of text—from sources like social media posts, customer reviews, and surveys—expresses a positive, negative, or neutral opinion. The goal is to turn subjective information like attitudes or emotions into measurable insights that can guide business or research decisions.

By processing large volumes of text automatically, businesses can track customer satisfaction, monitor brand reputation, and uncover trends in real time.

Goals of sentiment analysis

The goals of sentiment analysis often depend on what a business wants to understand from its text data. Some organizations need a broad overview of customer sentiment, while others seek deeper, more detailed insights tied to specific aspects of their products or services.

Sentiment analysis can operate at three levels of granularity.

Document-level sentiment. This provides a general sense of whether a whole text is positive, negative, or neutral. For example, a hotel might analyze full customer reviews to determine overall satisfaction with a recent stay. A review saying “My trip was amazing. However, the Wi-Fi could be better” would likely still be classified as positive overall. This level works well for businesses that need quick summaries or trend tracking at scale.

Sentence-level sentiment. This method looks at sentiment one sentence at a time. It’s useful when a review or comment contains both positive and negative opinions in different parts of the text. For example, in “My trip was amazing. However, the Wi-Fi could be better,” each sentence is treated separately, so the system captures both the positive and the negative opinion. Sentence-level sentiment helps summarize the tone of individual statements and detect mixed opinions within a single review.

Aspect-based sentiment analysis. This is the most granular approach. It identifies specific features or topics, called aspects or entities, and measures how people feel about each one. For instance, in a hotel review, it separates feedback about the “location,” “food,” and “service,” even if they appear in the same sentence. This helps businesses see patterns like “guests love the location but dislike the breakfast,” giving a more detailed view of which features drive satisfaction or complaints.
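To make the three levels concrete, here is a toy Python sketch that scores one review at each level using a tiny hypothetical lexicon. The aspect-level function is a crude approximation (it splits the review into clauses and gives each aspect its clause's polarity); real aspect-based models learn to link opinions to aspects rather than relying on punctuation.

```python
import re

# Hypothetical toy lexicon and aspect list, for illustration only.
LEXICON = {"amazing": 3, "great": 3, "cold": -2, "slow": -2}
ASPECTS = {"location", "staff", "breakfast"}

def tokens(text):
    # Lowercase and keep runs of letters, hyphens, and apostrophes.
    return re.findall(r"[a-z][a-z\-']*", text.lower())

def label(text):
    # Sum the lexicon scores of the words in `text` and map the total to a polarity.
    total = sum(LEXICON.get(t, 0) for t in tokens(text))
    return "positive" if total > 0 else "negative" if total < 0 else "neutral"

def document_level(review):
    # One label for the whole text.
    return label(review)

def sentence_level(review):
    # One label per sentence, splitting after ., ! or ?.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", review.strip()) if s]
    return [(s, label(s)) for s in sentences]

def aspect_level(review):
    # Crude approximation: split into clauses at sentence punctuation or "but",
    # then give every aspect named in a clause that clause's polarity.
    result = {}
    for clause in re.split(r"[.!?;]|\bbut\b", review.lower()):
        for aspect in ASPECTS.intersection(tokens(clause)):
            result[aspect] = label(clause)
    return result

review = "The location was amazing and the staff were great. Sadly, the breakfast was cold."
print(document_level(review))  # positive
print(sentence_level(review))
print(aspect_level(review))
```

The same review comes out positive at the document level, positive and then negative at the sentence level, and split by aspect at the most granular level.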

Different industries and data sets have their own language, tone, and context. For that reason, they often require different sentiment analysis methods to get accurate results. Let’s explore these methods in detail in the following section, starting with rule-based sentiment analysis.

Rule-based sentiment analysis methods

Rule-based sentiment analysis is the oldest and most straightforward approach to detecting sentiment in text. It relies on predefined sets of rules and sentiment lexicons—dictionaries that map words to sentiment scores. For example, words like "excellent" or "amazing" may have a score of +2, while "terrible" or "poor" might have a score of -2.

The system looks for these words in a portion of text and calculates the overall polarity (positive, negative, or neutral) based on how many positive or negative terms appear and how strong they are.
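Written as a formula, the basic scoring step sums the lexicon score of every word and reads the polarity off the sign. This is a simplified sketch; many tools treat a small band around zero as neutral rather than exactly zero.

```latex
S(d) = \sum_{i=1}^{n} s(w_i), \qquad
\mathrm{polarity}(d) =
\begin{cases}
\text{positive}, & S(d) > 0 \\
\text{neutral},  & S(d) = 0 \\
\text{negative}, & S(d) < 0
\end{cases}
```

Here w_1 to w_n are the tokens of text d, and s(w) is a word's lexicon score, or 0 if the word isn't in the lexicon. With the example scores above, “excellent room, poor service” gives S = (+2) + (−2) = 0 and would be labeled neutral, which is one reason the contextual rules described below are needed.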

How it works

At its core, a rule-based system follows a sequence of steps.

- Text preprocessing: The text is first cleaned to remove things like punctuation, numbers, or extra spaces that don’t add meaning. Then it’s tokenized, which means the text is split into smaller parts—usually words or phrases—so the system can analyze them individually. Finally, the words are often lemmatized, which means converting different forms of a word to their base form (for example, “loved,” “loving,” and “loves” become “love”) so they can be recognized as the same word during analysis.

- Lexicon lookup: After preprocessing, each token (word or phrase) is checked against a sentiment lexicon—a predefined list of words with assigned sentiment scores. Commonly used lexicons include AFINN, SentiWordNet, and VADER (Valence Aware Dictionary and sEntiment Reasoner), each with its own scoring method and language coverage. These lexicons help the system decide whether a word expresses a positive, negative, or neutral emotion. For example, in the AFINN lexicon, a word like “excellent” might have a score of +3, while “terrible” might have −3. The system adds up these values throughout the text to estimate its overall sentiment.

- Rule application: The system applies handcrafted rules to handle linguistic context, such as negations (“not good” becomes negative), intensifiers (“very happy” becomes more positive), or contrastive conjunctions (“The room was nice, but the service was terrible,” which often gives more weight to the clause after "but").

- Score aggregation: The sentiment scores are combined to produce an overall polarity score or label for the text.
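The four steps above can be sketched in a few dozen lines of Python. This is a toy illustration, not VADER or any production tool: the lexicon, negation list, intensifier multipliers, three-word negation window, and the 2x weight on the clause after "but" are all hypothetical choices made for the example, and the small LEMMAS dictionary stands in for a real lemmatizer.

```python
import re

# Hypothetical mini-lexicon; real lexicons such as AFINN or VADER have thousands of entries.
LEXICON = {"excellent": 3, "amazing": 3, "love": 3, "good": 2, "nice": 2, "happy": 2,
           "poor": -2, "bad": -2, "terrible": -3}
NEGATIONS = {"not", "no", "never", "isn't", "wasn't", "don't"}
INTENSIFIERS = {"very": 1.5, "really": 1.5, "extremely": 2.0}
LEMMAS = {"loved": "love", "loving": "love", "loves": "love"}  # stand-in for a lemmatizer

def preprocess(text):
    # Lowercase, replace digits and punctuation (except apostrophes) with spaces,
    # tokenize on whitespace, then map inflected forms to their base form.
    text = re.sub(r"[^a-z'\s]", " ", text.lower())
    return [LEMMAS.get(tok, tok) for tok in text.split()]

def clause_score(tokens):
    total = 0.0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok)          # lexicon lookup
        if value is None:
            continue
        prev = tokens[i - 1] if i > 0 else ""
        if prev in INTENSIFIERS:          # rule: "very happy" scores higher
            value *= INTENSIFIERS[prev]
        if any(w in NEGATIONS for w in tokens[max(0, i - 3):i]):
            value = -value                # rule: negation in the 3 preceding tokens flips polarity
        total += value
    return total

def rule_based_sentiment(text, but_weight=2.0):
    tokens = preprocess(text)
    if "but" in tokens:
        # Rule: the clause after the first "but" counts more than the one before it.
        cut = tokens.index("but")
        score = clause_score(tokens[:cut]) + but_weight * clause_score(tokens[cut + 1:])
    else:
        score = clause_score(tokens)
    # Aggregation: map the final score to a label.
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return score, label

print(rule_based_sentiment("The food was not good"))
print(rule_based_sentiment("The service was terrible, but the room was excellent"))
```

Without the contrast rule, the second example would sum to zero and come out neutral; with it, the clause after "but" tips the result to positive.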

Because every step is explicit, the process is transparent and easy to interpret: you can see exactly how each word or phrase contributes to the final score. Tools like VADER are popular examples of rule-based models, as they include built-in lexicons and rules to handle linguistic nuances like negation and intensifiers.
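VADER also squashes its summed, rule-adjusted valence into a compound score between −1 and 1. The sketch below re-implements only that normalization step with the standard library, assuming the formula and default alpha=15 found in the vaderSentiment source and the ±0.05 cut-offs suggested in its README. In practice you would call SentimentIntensityAnalyzer().polarity_scores(text) from the vaderSentiment package (or NLTK's port), which returns the compound value along with positive, neutral, and negative proportions.

```python
import math

def normalize(score, alpha=15):
    # Map an unbounded sum of valence scores into the open interval (-1, 1).
    # alpha=15 is the default used in the vaderSentiment source code.
    return score / math.sqrt(score * score + alpha)

def compound_label(compound, threshold=0.05):
    # Cut-offs recommended in the VADER README.
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"

for raw in (0, 1, 4, -9):
    c = normalize(raw)
    print(raw, round(c, 3), compound_label(c))
```

Because the curve flattens as scores grow, piling on more positive words yields diminishing gains in the compound score.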

Pros

Rule-based sentiment analysis provides transparency since each sentiment outcome can be traced back to a specific word or rule. This makes it useful in settings where explainability matters. It doesn’t require training data like machine learning methods do, so it works well when labeled examples are limited or unavailable.

The approach is also customizable, letting teams adjust lexicons and rules to match the language of their industry, whether that’s hospitality, finance, or healthcare. It’s lightweight and fast, which makes it a good fit for quick setups or real-time analysis.

Cons

Rule-based systems have limited adaptability and struggle with new slang, shifting language, or domain-specific terms. They’re hard to scale because maintaining and updating large sets of rules becomes complex over time. They also have context limitations, often missing a text's sarcasm, irony, or mixed emotions. Besides that, manual effort is a constant factor—building and refining rules takes time, especially when the language or business environment changes frequently.

When it’s best suited

Rule-based sentiment analysis works best in the following situations.

- Small or structured datasets where vocabulary and tone are predictable, such as internal surveys or product feedback forms.

- Quick prototypes or baselines before deploying more advanced models.

- Domains with strict interpretability needs, such as finance, healthcare, or compliance monitoring.

- Low-resource projects where you can’t afford to label data or run large models.

In business contexts, rule-based systems can be highly effective for monitoring known keywords, e.g., tracking “check-in,” “breakfast,” or “Wi-Fi” sentiment in hotel reviews. However, when scale, variety, or linguistic complexity increases, machine learning and deep learning models begin to outperform rule-based approaches.
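As a sketch of keyword monitoring, the following code tallies positive and negative mentions of tracked keywords across a batch of reviews. The lexicon, the keyword list, and the rule that commas and "but" separate clauses are assumptions made for illustration.

```python
import re
from collections import Counter, defaultdict

# Hypothetical lexicon and tracked keywords.
LEXICON = {"great": 2, "fast": 1, "friendly": 2, "slow": -2, "cold": -2, "weak": -2}
TRACKED = ("check-in", "breakfast", "wi-fi")

def clauses(text):
    # Treat sentence punctuation, commas, and "but" as clause boundaries.
    return [c for c in re.split(r"[.!?;,]|\bbut\b", text.lower()) if c.strip()]

def keyword_report(reviews):
    # For each tracked keyword, count how often it appears in a positive,
    # negative, or neutral clause.
    report = defaultdict(Counter)
    for review in reviews:
        for clause in clauses(review):
            words = re.findall(r"[a-z][a-z\-]*", clause)
            score = sum(LEXICON.get(w, 0) for w in words)
            polarity = "positive" if score > 0 else "negative" if score < 0 else "neutral"
            for keyword in TRACKED:
                if keyword in words:
                    report[keyword][polarity] += 1
    return report

reviews = [
    "Check-in was fast and the staff were friendly.",
    "The breakfast was cold, but the Wi-Fi was great.",
    "Slow check-in. Weak Wi-Fi.",
]
for keyword, counts in keyword_report(reviews).items():
    print(keyword, dict(counts))
```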

Machine learning-based sentiment analysis

Machine learning-based sentiment analysis uses algorithms that learn patterns from data instead of relying on predefined rules. These models analyze large amounts of labeled text to recognize how words and phrases relate to emotions, opinions, or attitudes.

Several types of algorithms are commonly used for this approach.

- Logistic Regression: This model examines how different words contribute to a sentiment score. For example, it might learn that “poor” lowers the score while “amazing” increases it.

- Naive Bayes: This algorithm estimates how likely it is that a piece of text belongs to each sentiment class, based on the word frequencies observed in the training data. It might predict a review as positive if words like “great” or “excellent” appear often.

- Gradient Boosting (e.g., XGBoost, LightGBM): These ensemble methods build decision trees one after another, with each new tree trained to correct the errors made by the earlier ones. For example, if early trees misclassify neutral feedback, later trees focus on handling those cases better.
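As an illustration of the Naive Bayes idea, here is a minimal multinomial Naive Bayes classifier with add-one (Laplace) smoothing, written with the standard library only. The four training reviews are made up; a real system would train on thousands of labeled examples, typically with a library such as scikit-learn (for example, its MultinomialNB class).

```python
import math
from collections import Counter

class NaiveBayesSentiment:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)                    # documents per class
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = set().union(*self.word_counts.values())
        self.total_words = {c: sum(wc.values()) for c, wc in self.word_counts.items()}
        return self

    def predict(self, text):
        n_docs = sum(self.doc_counts.values())
        best, best_lp = None, -math.inf
        for c in self.classes:
            # log P(class) + sum over words of log P(word | class)
            lp = math.log(self.doc_counts[c] / n_docs)
            denom = self.total_words[c] + len(self.vocab)
            for word in text.lower().split():
                if word in self.vocab:  # words never seen in training are ignored
                    lp += math.log((self.word_counts[c][word] + 1) / denom)
            if lp > best_lp:
                best, best_lp = c, lp
        return best

texts = ["great location and excellent staff", "excellent breakfast great value",
         "poor service and dirty room", "terrible wifi poor breakfast"]
labels = ["positive", "positive", "negative", "negative"]
model = NaiveBayesSentiment().fit(texts, labels)
print(model.predict("excellent staff"))  # positive
print(model.predict("dirty and poor"))   # negative
```

Working in log space avoids numerical underflow when many small word probabilities are multiplied together.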

How it works

Instead of relying on fixed rules, machine learning-based sentiment analysis learns patterns from data through a structured process.

- Data processing: Text data is collected from various sources, cleaned, and tagged with a sentiment label—positive, negative, or neutral—manually or through automated methods.

- Feature extraction: The processed text is converted into numerical form using text representation techniques like bag-of-words, TF-IDF, or n-grams, which capture how often words appear or occur together.

- Model training: Algorithms like Logistic Regression and Naive Bayes are trained on the labeled data to identify patterns linked to each sentiment type.

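- Evaluation and prediction: The trained model is evaluated on held-out text it didn’t see during training to measure its accuracy. Once deployed, it can automatically classify unseen data. For instance, if the training data included a “mixed” label alongside positive, negative, and neutral, the model could tag “The hotel room was spotless, but check-in took forever” as mixed sentiment.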

Because the model can be retrained as new labeled data comes in, it can adapt to new words, expressions, and industry-specific contexts.
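The steps above can be compressed into a small, dependency-free sketch: binary bag-of-words features, logistic regression trained with batch gradient descent, and accuracy measured on held-out examples. The data, learning rate, and epoch count are hypothetical; in practice you would typically use scikit-learn's CountVectorizer or TfidfVectorizer together with LogisticRegression.

```python
import math

def tokenize(text):
    return text.lower().split()

def build_vocab(texts):
    # Feature extraction: map every word seen in training to a column index.
    words = sorted({w for t in texts for w in tokenize(t)})
    return {w: i for i, w in enumerate(words)}

def vectorize(text, vocab):
    # Binary bag-of-words; words not in the training vocabulary are dropped.
    x = [0.0] * len(vocab)
    for w in tokenize(text):
        if w in vocab:
            x[vocab[w]] = 1.0
    return x

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logreg(X, y, lr=0.5, epochs=200):
    # Model training: batch gradient descent on the logistic (cross-entropy) loss.
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b

def predict(x, w, b):
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5 else 0

# Hypothetical labeled data: 1 = positive, 0 = negative.
train = [("spotless room and friendly staff", 1), ("great breakfast and friendly staff", 1),
         ("spotless and quiet room", 1), ("slow check-in and rude staff", 0),
         ("dirty room and slow service", 0), ("rude staff and noisy room", 0)]
held_out = [("friendly staff and spotless room", 1), ("noisy room and rude service", 0)]

vocab = build_vocab(t for t, _ in train)
X = [vectorize(t, vocab) for t, _ in train]
w, b = train_logreg(X, [label for _, label in train])

# Evaluation on examples the model never saw during training.
correct = sum(predict(vectorize(t, vocab), w, b) == label for t, label in held_out)
print(f"held-out accuracy: {correct / len(held_out):.2f}")
```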

Pros

Machine learning–based sentiment analysis is more flexible and adaptive than rule-based systems. It doesn’t depend on static word lists, so it can handle new phrases, slang, and language variations once it’s exposed to enough examples. It also improves with more data, meaning that accuracy tends to increase as the training set grows. Another advantage is domain adaptability—a model can be retrained on new data from a specific industry, such as hospitality or retail, to match the tone and vocabulary of that field.

Cons

The biggest limitation is the need for labeled data. Building and maintaining large, high-quality training datasets is often time-consuming and expensive. Machine learning models can also struggle with context, especially when faced with sarcasm or subtle emotional cues. They’re less interpretable than rule-based systems, so understanding why a model assigned a specific sentiment score can be difficult. Lastly, these models require computational resources for training and tuning, which can increase cost and complexity in production environments.

When it’s best suited

Machine learning–based sentiment analysis is a good choice when you need models that can learn from data and adapt to changing language patterns. It works well in the following cases.

- When labeled training data is available and you want the model to improve accuracy over time

- When the text includes diverse vocabulary or domain-specific terms that rule-based systems can’t easily handle

- When scalability and automation are priorities, making manual rule updates too time-consuming

- When you need moderate interpretability but higher accuracy than simple rule-based approaches

- When analyzing customer feedback or reviews across multiple products, categories, or regions

In the hospitality sector, for example, major hotel chains apply machine learning sentiment models to process thousands of guest reviews and social media posts per property.

Deep learning approaches

Deep learning is a branch of machine learning that focuses on neural networks with many layers of interconnected neurons. These layers help the model automatically learn complex patterns in text, such as tone, emotion, and context.

Models such as Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and transformer-based architectures like BERT, RoBERTa, or DistilBERT are considerably better at capturing context, sarcasm, and subtle emotional cues that simpler models often miss. They are widely used in large-scale applications like social listening, brand reputation monitoring, and automated customer service systems, where text data is too large and diverse for manual analysis.

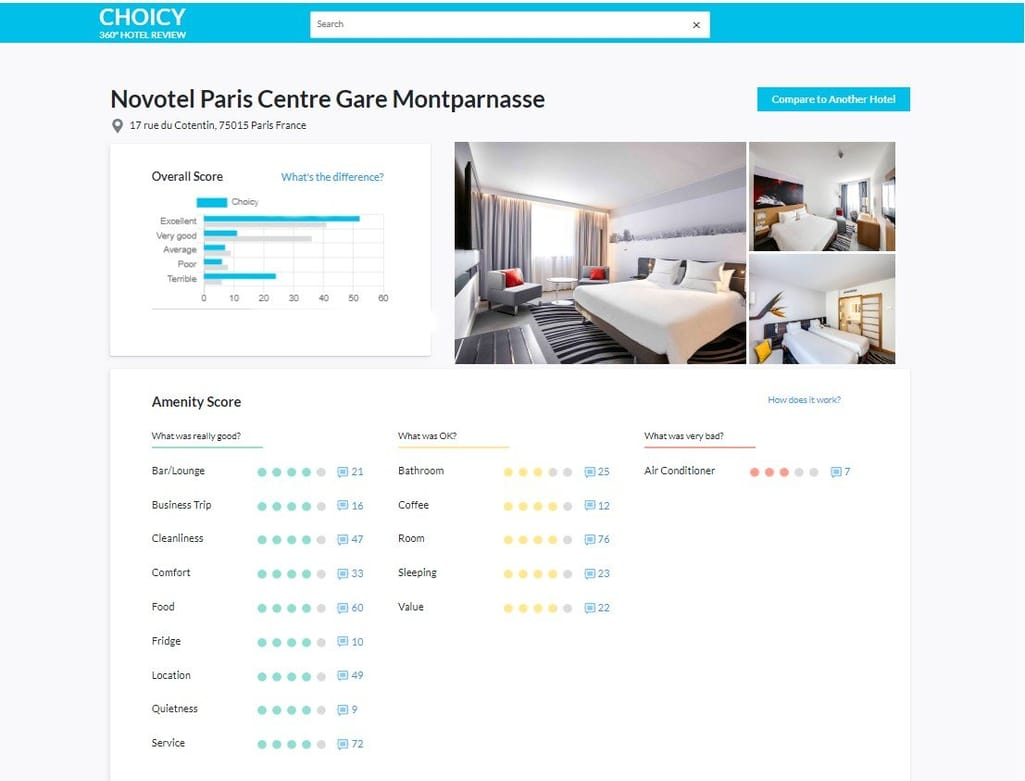

At AltexSoft, our data science team applied this approach to build Choicy, an NLP-powered prototype that analyzes hotel reviews and scores amenities such as breakfast, room quality, swimming pool, and cleaning services, based on guest sentiment. The system helps travelers compare hotels at a deeper level without reading through hundreds of reviews.

Read our case study to learn more about Choicy.

How it works

There are different tools and methods, but here’s how we approached it when developing Choicy:

- Defining the concept and workflow: We began by outlining how the platform should function. Users would be able to search for a hotel by name or URL, and the system would detect mentions of amenities, like Wi-Fi, air conditioning, or breakfast, in customer reviews. The goal was to analyze each amenity’s sentiment and return both individual and overall hotel scores.

- Building the dataset: We collected around 100,000 hotel reviews from public sources, including Kaggle datasets. Because these reviews didn’t contain labeled sentiment for amenities, our team performed semi-manual labeling to tag each mention as positive, negative, or neutral. This dataset formed the foundation for model training.

- Model training and experimentation: We experimented with multiple models, from traditional NLP techniques to deep learning architectures. Eventually, we settled on two neural networks:

  - A 1D Convolutional Neural Network (CNN) with GloVe embeddings for scoring entire hotel reviews.

  - A Hierarchical Attention-based Position-aware Network (HAPN) for analyzing individual amenities.

This combination allowed the model to provide both a general sentiment overview and more granular insights at the amenity level.
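Choicy itself was built with Keras and TensorFlow, and its code isn't reproduced here. To show what a 1D CNN over word embeddings does, the following standard-library sketch runs a single forward pass: each filter slides over windows of consecutive word vectors, a ReLU is applied, and global max pooling keeps each filter's strongest response before a sigmoid output. The embeddings and weights are random placeholders standing in for GloVe vectors and trained parameters, so the resulting probability is meaningless until the network is trained.

```python
import math
import random

random.seed(0)
EMB_DIM, WIDTH, N_FILTERS = 4, 3, 2  # hypothetical sizes; GloVe vectors are typically 50-300 dims

def rand_vec(n):
    return [random.uniform(-1, 1) for _ in range(n)]

# Placeholder embedding table standing in for pretrained GloVe vectors.
vocab = ["the", "breakfast", "was", "cold", "but", "pool", "great"]
embeddings = {w: rand_vec(EMB_DIM) for w in vocab}
UNK = [0.0] * EMB_DIM  # zero vector for unknown words and padding

# Each filter spans WIDTH consecutive words, i.e. WIDTH * EMB_DIM weights.
filters = [rand_vec(WIDTH * EMB_DIM) for _ in range(N_FILTERS)]
biases = [0.0] * N_FILTERS
out_w, out_b = rand_vec(N_FILTERS), 0.0

def conv1d_maxpool(tokens):
    seq = [embeddings.get(t, UNK) for t in tokens]
    if len(seq) < WIDTH:  # pad short inputs so at least one window exists
        seq += [UNK] * (WIDTH - len(seq))
    features = []
    for f, b in zip(filters, biases):
        activations = []
        for start in range(len(seq) - WIDTH + 1):
            # Concatenate the word vectors in this window into one flat vector.
            window = [v for vec in seq[start:start + WIDTH] for v in vec]
            z = sum(wi * xi for wi, xi in zip(f, window)) + b
            activations.append(max(0.0, z))   # ReLU
        features.append(max(activations))    # global max pooling over positions
    return features

def predict_positive_prob(text):
    feats = conv1d_maxpool(text.lower().split())
    z = sum(w * x for w, x in zip(out_w, feats)) + out_b
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid output layer

print(conv1d_maxpool("the breakfast was cold".split()))
print(predict_positive_prob("The pool was great"))
```

Max pooling is what lets a 1D CNN handle reviews of any length: each filter contributes one number no matter how many windows the text produces.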

We used Python, NLTK, Keras, and TensorFlow to build Choicy. However, there are many other great sentiment analysis tools out there, including Google’s Natural Language API, Amazon Comprehend, Meltwater, and Qualtrics.

Pros

Deep learning sentiment analysis provides high accuracy and captures contextual meaning, making it suitable for detecting sarcasm and subtle emotional differences. It can adapt to different domains through fine-tuning, and pretrained models reduce the time needed to develop a system from scratch. These models also support multiple languages, allowing consistent sentiment tracking across regions.

Cons

Deep learning requires large, labeled datasets and powerful computing resources, making it costly to train and maintain. The models are often difficult to interpret, as they operate like black boxes with limited visibility into decision-making. They also require regular retraining to keep up with changes in language or user behavior. Deploying them can be complex without proper infrastructure and MLOps support.

When it’s best suited

Deep learning sentiment analysis works best when accuracy and contextual understanding are more important than simplicity. It excels in the following scenarios.

- When analyzing complex language that includes sarcasm, irony, or mixed emotions

- When processing large volumes of data, such as social media feeds, product reviews, or call transcripts

- When building domain-specific sentiment models tailored to industries like healthcare, finance, or retail

- When using pretrained transformer models for faster, high-quality results

- When supporting multilingual sentiment analysis for global use cases

Deep learning is a good fit when you need to detect subtle emotional tone across large text datasets. It is ideal for organizations that want deep, nuanced insight from text data and are prepared to invest in data, infrastructure, and ongoing model updates.

Generative AI methods

Generative AI–based sentiment analysis uses large language models (LLMs) to interpret, classify, or even generate text that reflects sentiment. Unlike standard deep learning models, which are trained just to label sentiment, generative AI can be prompted or fine-tuned to provide detailed sentiment insights across multiple tasks, often without needing large, labeled datasets.

Many SaaS platforms are now embedding sentiment analysis features powered by generative AI. These integrations allow users to automatically assess tone, mood, and emotional context in conversations or written content. For example, platforms like tl;dv, Otter.ai, and Fireflies.ai use sentiment analysis on the transcripts of recorded meetings to help teams understand the overall tone of discussions and identify key moments.

How it works

The workflow for generative-AI sentiment analysis typically includes the following steps.

- Prompt design or fine-tuning: Define the task prompt (e.g., “Classify the sentiment of the following review as positive, negative, or neutral”) or fine-tune an LLM on domain-specific data. Prompting can be zero-shot (no examples), few-shot (a handful of labeled examples in the prompt), or use more advanced techniques like chain of thought (CoT) to guide the model's reasoning.

- Input ingestion and preprocessing: Collect text data such as customer reviews, social media posts, and survey responses, and clean it. The cleaned text is then fed to the generative model via a prompt.

- Model inference and generation/extraction: The LLM processes the input and either outputs a sentiment classification, a sentiment score, or a summary that captures emotional tone and context. It may also generate additional text (e.g., “Why this sentiment?” or “What key issues are mentioned?”).

- Post-processing and structure: You convert the model output into structured insights such as sentiment labels, aspect-level sentiment, themes, or actionable summaries. You may aggregate results, tag categories, or integrate into dashboards.

This workflow allows organizations to leverage generative AI’s ability to understand context and nuance and even generate explanatory text rather than just assign a label.
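A minimal sketch of the prompt-design and post-processing steps is shown below. It builds a few-shot prompt and converts the model's reply into a structured record. The prompt wording, the JSON reply format, and the example reviews are assumptions, and the actual LLM call is left out because it depends on the provider you use; a hard-coded string stands in for the model's reply.

```python
import json

LABELS = ("positive", "negative", "neutral")

# Hypothetical few-shot examples; pass examples=[] for a zero-shot prompt.
FEW_SHOT = [
    ("The pool was spotless and the staff were lovely.",
     {"sentiment": "positive", "aspects": {"pool": "positive", "staff": "positive"}}),
    ("Check-in took forever.",
     {"sentiment": "negative", "aspects": {"check-in": "negative"}}),
]

def build_prompt(review, examples=FEW_SHOT):
    lines = [
        "Classify the overall sentiment of the review as positive, negative, or neutral,",
        "and give the sentiment of each aspect it mentions.",
        'Reply with JSON only, e.g. {"sentiment": "...", "aspects": {"...": "..."}}.',
        "",
    ]
    for text, answer in examples:
        lines += [f"Review: {text}", f"Answer: {json.dumps(answer)}", ""]
    lines += [f"Review: {review}", "Answer:"]
    return "\n".join(lines)

def parse_response(raw):
    # Raises ValueError (json.JSONDecodeError is a subclass) for malformed JSON
    # or an unknown overall label; aspect entries with unknown labels are dropped.
    data = json.loads(raw)
    if not isinstance(data, dict) or data.get("sentiment") not in LABELS:
        raise ValueError(f"unexpected model output: {raw!r}")
    aspects = data.get("aspects") or {}
    return {
        "sentiment": data["sentiment"],
        "aspects": {k.lower(): v for k, v in aspects.items() if v in LABELS},
    }

prompt = build_prompt("Great location, but the Wi-Fi kept dropping.")
# Send `prompt` to your LLM provider here; the string below stands in for its reply.
raw_reply = '{"sentiment": "negative", "aspects": {"location": "positive", "Wi-Fi": "negative"}}'
print(parse_response(raw_reply))
```

Asking for JSON and validating it against a fixed label set keeps free-form model text from leaking into dashboards or downstream aggregations.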

Pros

Generative AI methods are flexible. You can prompt the model for many sentiment-related tasks without building separate models for each. They perform well even with very little labeled data. These models handle context better than traditional models, detect subtle sentiments, analyze specific aspects, and can generate explanations or summaries that are easy to understand. This makes the results more actionable than just positive, negative, or neutral labels.

Getting started is also often cheaper than training a custom machine learning model, since you can call an existing LLM API instead of building and maintaining your own system.

Cons

Generative AI methods are often less interpretable. While they can output labels or summaries, it can be hard to see exactly how the model arrived at them. They can sometimes generate information that isn’t in the input, which requires careful monitoring. Using these models at scale can be costly and demands careful prompt design and quality checks. They may also reflect biases or issues from the training data if not carefully audited.

When it’s best suited

Generative AI sentiment analysis is best used when you need more than a sentiment label, such as summaries, themes, or explanations, or when labeled data is scarce. It works well in the following cases.

- When you want to extract aspect-based sentiment and automatically generate human-readable insights rather than only numeric scores

- When you have diverse or unstructured text from multiple domains (reviews, chats, social posts, transcripts) and need flexibility in the task

- When you have limited labeled data and prefer a prompt-based or few-shot approach rather than full supervised training

- When you require multilingual or cross-domain support, since LLMs often come pretrained in many languages and tasks

- When you want to turn sentiment into actionable insights (summaries, recurring themes, specific issues to address), not just classifications

In these scenarios, generative AI can unlock deeper insight and faster deployment. It's important to deploy it alongside AI guardrails to ensure accuracy and reliability.
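One simple guardrail targets the risk, noted in the cons above, of models adding information that isn't in the input: check that every aspect the model reports is actually mentioned in the review before accepting the result. The sketch below assumes the model's output has already been parsed into a dictionary with hypothetical "sentiment" and "aspects" keys. It only matches literal mentions, so a synonym (say, "internet" for "Wi-Fi") would be flagged as ungrounded and would need a softer check.

```python
import re

def ungrounded_aspects(review, aspects):
    """Return aspect names from the model's output that never appear in the review text."""
    text = review.lower()
    missing = []
    for aspect in aspects:
        # Whole-word match, so "pool" does not match inside "poolside".
        pattern = r"\b" + re.escape(aspect.lower()) + r"\b"
        if not re.search(pattern, text):
            missing.append(aspect)
    return missing

def accept(review, output, allowed=("positive", "negative", "neutral")):
    # Accept only if the label is allowed and every reported aspect is grounded in the input.
    if output.get("sentiment") not in allowed:
        return False
    return not ungrounded_aspects(review, output.get("aspects", {}))

review = "Great location, but the Wi-Fi kept dropping."
output = {"sentiment": "negative",
          "aspects": {"location": "positive", "wi-fi": "negative", "breakfast": "negative"}}
print(ungrounded_aspects(review, output["aspects"]))  # ['breakfast']
print(accept(review, output))                         # False
```

Rejected outputs can be retried, routed to a human reviewer, or logged to track how often the model drifts from its input.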

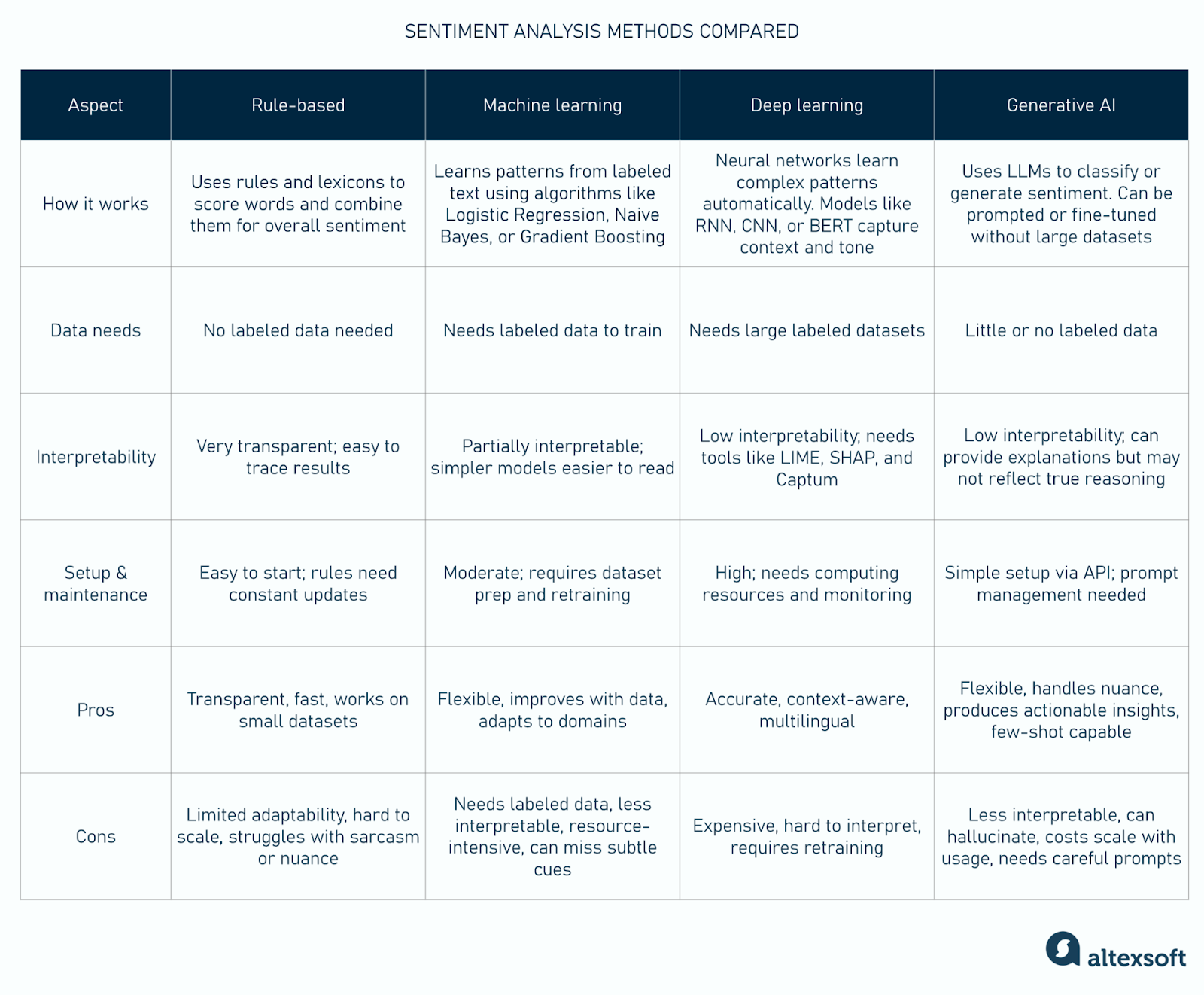

The sentiment analysis methods compared

We’ve looked at four main approaches to sentiment analysis. Now, let's explore their differences.

Data needs and scalability

Rule-based systems are arguably the easiest to start with because they don’t rely on training data. They depend on predefined word lists and manual rules, making them simple but harder to maintain as data or language variety increases. Machine learning builds on this by learning from labeled datasets, making it easier to handle larger volumes and changing language patterns. However, enough clean and balanced data is still needed to perform well.

Deep learning goes a step further by using large datasets to automatically detect more complex patterns, offering better scalability but at a much higher computational cost. Pretrained generative AI models like GPT can perform sentiment analysis with little or no task-specific labeled data by using zero-shot (prompt-only) or few-shot learning. This saves time on data labeling, but scalability depends more on model access and API costs than on building new datasets.

Accuracy and context understanding

Rule-based models often miss subtle language cues like sarcasm, irony, or shifts in tone, and they handle only the negation patterns their rules explicitly cover, because their logic doesn’t account for broader context. Machine learning models are better at spotting patterns in labeled data, which improves accuracy, but they can still struggle with complex phrasing or mixed emotions.

Deep learning handles this more effectively: models like LSTMs and Transformers can capture how words relate across sentences, leading to a more accurate interpretation of tone and emotion. Generative AI models, such as GPT, can often match or even surpass this accuracy since they’ve been trained on massive text datasets and can infer meaning from context. Still, without proper fine-tuning or safeguards, they may produce inconsistent or biased results.

Transparency and interpretability

A major difference between these methods is how transparent their outputs are. Rule-based models are the easiest to explain because every sentiment label comes from clear rules or dictionary matches. Machine learning adds some opacity—simple models like Logistic Regression can show which features influenced a result, but complex ones like Random Forests or SVMs make it harder to trace how predictions are made.
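For a linear model such as logistic regression over binary bag-of-words features, an explanation is simply each present word's weight, since that weight is exactly what the word adds to the model's logit. The sketch below uses hypothetical weights to show that breakdown; attribution tools like SHAP aim to produce a comparable per-feature breakdown for models where no such direct reading exists.

```python
import math

# Hypothetical weights, standing in for what a trained logistic regression might learn
# on binary bag-of-words features.
WEIGHTS = {"spotless": 1.8, "friendly": 1.2, "slow": -1.5, "rude": -2.0, "room": 0.1}
BIAS = 0.2

def explain(text):
    # With binary features, each present word adds exactly its weight to the logit.
    words = set(text.lower().split())
    contributions = {w: WEIGHTS[w] for w in words if w in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    # Most influential words first, regardless of direction.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return 1.0 / (1.0 + math.exp(-logit)), ranked

prob, ranked = explain("spotless room but rude staff")
print(f"P(positive) = {prob:.2f}")
for word, weight in ranked:
    print(f"{word:>10} {weight:+.1f}")
```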

Deep learning is even less interpretable; understanding why a neural network marked something as positive often requires separate explainability techniques such as LIME, SHAP, or Integrated Gradients (the latter implemented in libraries like Captum for PyTorch) to estimate feature importance and explain model decisions. Generative AI offers some interpretability because it can describe its reasoning in plain language, but those explanations don’t always reflect how the model actually arrived at the result, and even an exposed chain of thought isn’t guaranteed to be a faithful account of the model’s internal process.

Setup, maintenance, and operational costs

Rule-based systems are simple to set up but can become expensive to maintain as language and customer behavior change. Teams have to keep updating word lists and rules to stay accurate. Machine learning takes more effort upfront since it needs labeled data, but once trained, it’s easier to update because retraining with new data can quickly restore accuracy.

Deep learning increases both setup and maintenance needs, requiring powerful hardware, specialized tools, and constant monitoring. Generative AI removes most of that infrastructure work since models are accessed through APIs, but this shifts the focus to managing usage costs and prompts. At a large scale, those costs can add up fast, especially when real-time analysis is required.
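Because LLM API pricing is usually per token, a back-of-the-envelope estimate is easy to compute. The volumes and per-million-token prices below are hypothetical placeholders, not any provider's actual rates; substitute your own figures.

```python
def monthly_llm_cost(items_per_day, tokens_in, tokens_out,
                     usd_per_m_in, usd_per_m_out, days=30):
    """Estimate monthly API spend; prices are in USD per million tokens."""
    items = items_per_day * days
    input_cost = items * tokens_in * usd_per_m_in / 1_000_000
    output_cost = items * tokens_out * usd_per_m_out / 1_000_000
    return input_cost + output_cost

# Hypothetical: 50,000 reviews/day, ~300 prompt tokens and ~50 reply tokens each.
cost = monthly_llm_cost(50_000, 300, 50, usd_per_m_in=0.50, usd_per_m_out=1.50)
print(f"${cost:,.2f} per month")  # $337.50 per month
```

Cost grows linearly with volume and prompt length, so long few-shot prompts or chain-of-thought replies multiply the bill even when the number of reviews stays the same.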

Factors to consider when choosing the right method for your use case

Choosing the right sentiment analysis method depends on your goals, the kind of data you have, and the resources available. Each approach has its strengths and trade-offs, so it’s important to find the one that fits your business and technical needs.

Data availability and quality. If you don’t have labeled data, a rule-based system is a good starting point. Machine learning and deep learning models need structured, labeled datasets to perform well, while generative AI can work with little to no labeled data through prompt-based approaches. The quality and balance of your data will directly affect your model’s accuracy.

Domain complexity. In simple domains like short surveys or basic reviews, rule-based or traditional machine learning models can do the job. But if your data includes complex expressions or emotional language, such as in hospitality, healthcare, or social media, deep learning or generative AI will capture meaning more accurately.

Transparency needs. Some industries need full visibility into how decisions are made. Rule-based and traditional ML models offer clearer reasoning and can be audited easily. Deep learning and generative AI are harder to interpret and often require additional tools to explain their predictions.

Budget and infrastructure. Rule-based systems are cheap to set up but require ongoing manual updates. Machine learning and deep learning need more investment in data labeling, computing power, and retraining. Generative AI shifts the cost toward API usage instead of infrastructure, but these costs can mount up quickly as usage scales.

Update frequency and scalability. If your data changes frequently, such as in customer feedback or social media streams, machine learning, deep learning, or generative AI are better suited, as they can adapt over time. Rule-based systems can lag behind when language evolves.

Accuracy requirements. If you need detailed, context-aware insights, such as in brand reputation analysis or market research, deep learning and generative AI deliver the most precise results. For simpler needs like keyword tracking or basic sentiment tagging, a rule-based or ML approach can be more efficient.

The best method depends on how you balance accuracy, transparency, cost, and maintenance. Many teams find success by combining methods, for example, using rule-based systems for initial filtering, then applying deep learning or generative AI for advanced analysis.
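A hybrid setup like this can be sketched as a simple router: a lexicon scores each text, confident and uncomplicated cases keep the rule-based label, and anything with contrast words, negation, possible sarcasm markers, or a weak score is passed on to a heavier model. The lexicon, cue list, and threshold are hypothetical, and the hand-off is left as a placeholder rather than a real model call.

```python
import re

# Hypothetical lexicon for the cheap first pass.
LEXICON = {"great": 2, "excellent": 3, "clean": 1, "dirty": -2, "terrible": -3, "rude": -2}
# Cues that often defeat a lexicon: contrast, negation, and possible sarcasm markers.
HARD_CASE_CUES = re.compile(r"\b(but|however|not|never|yeah right)\b|!{2,}|\?!")

def lexicon_score(text):
    return sum(LEXICON.get(w, 0) for w in re.findall(r"[a-z]+", text.lower()))

def route(text, min_abs_score=2):
    score = lexicon_score(text)
    if HARD_CASE_CUES.search(text.lower()) or abs(score) < min_abs_score:
        # Placeholder: hand off to a deep learning model or an LLM here.
        return "advanced", None
    return "rules", "positive" if score > 0 else "negative"

for t in ["Excellent stay, great staff.", "Dirty room and rude staff.",
          "Great location, but the room was dirty.", "Nice hotel."]:
    print(t, route(t))
```

The threshold controls the trade-off: raising it sends more traffic to the expensive model in exchange for fewer rule-based mistakes.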

With a software engineering background, Nefe demystifies technology-specific topics—such as web development, cloud computing, and data science—for readers of all levels.
