Since 2020, when GPT-3 took Silicon Valley by storm, large language models (LLMs) have transcended mere computational tools to become digital collaborators, creative partners, and even, at times, surprisingly convincing conversationalists. As the world marvels at their capabilities, it is crucial to cast a critical eye on their current limitations—the "gotchas" that require caution.

This post briefly explains language models and their types and explores what they can and can’t do. We’ll also touch on popular language models, including GPT-4, Claude 4 Opus/Sonnet, Gemini 2.5, and DeepSeek‑R1, plus their real-world applications.

What is a language model?

A language model is a type of machine learning model trained to produce a probability distribution over words. Put simply, a model tries to predict the most appropriate next word to fill in a blank space in a sentence or phrase, based on the context of the given text.

For example, in a sentence like “Jenny dropped by the office for the keys so I gave them to [...],” a good model will determine that the missing word is likely to be a pronoun. Since the relevant piece of information here is Jenny, the most probable pronoun is “her.”

Language models are fundamental to natural language processing (NLP) because they allow machines to understand, generate, and analyze human language.

Large language models (LLMs) deserve special mention. They are a subset of language models trained on massive datasets, such as a collection of books, articles, or comments in social media. LLMs are a specific type of generative AI that can understand context, produce coherent text, and answer complex questions. In this article, we will discuss them specifically.

What language models can do

Language models are used in a variety of NLP tasks, such as speech recognition, machine translation, and text summarization.

Content generation. LLMs can produce anything from news articles, blog posts, and marketing copy to poetry, screenplays, dialogue, and academic abstracts. You’ve probably asked ChatGPT or Gemini to generate some content and know they can adapt well to brand voice, tone, and specific writing styles with the right prompt.

Part-of-speech (POS) tagging. Labeling each word in a sentence with its grammatical role (noun, verb, adjective, and so on) helps machines understand sentence structure, which is essential for tasks like parsing, translation, and information extraction. Language models can infer POS tags with minimal supervision, often as part of larger tasks such as syntactic analysis or extracting facts from text.

Question answering. LLMs can extract answers from documents, cite sources, and provide reasoning steps. They can also answer questions based on multiple documents, images, tables, or audio transcripts.

Text summarization. LLMs can condense articles, podcasts, meeting transcripts, and videos into human-readable summaries that preserve nuance and intent. They can pull key sentences from the source (extractive summarization) or generate new, concise text in their own words (abstractive summarization). They can also summarize across modalities (e.g., from video + subtitles).

Sentiment analysis. Language models can detect nuanced emotions, sarcasm, or intent and tailor sentiment analysis for different domains.

Conversational AI. Virtual assistants, customer service bots, and personal AI agents – together called conversational AI – handle multi-turn conversations, remember context over time, and support voice communication.

Machine translation. Language models can translate between dozens of languages, including rare ones. Instead of translating word by word, they capture the meaning of entire phrases and adapt to regional dialects or formality levels.

Code generation and completion. Developers use LLM-based AI coding tools as copilots: they write, debug, refactor, and explain complex code in many programming languages. They integrate with coding environments, like IDEs, offering real-time assistance. They can suggest entire functions and even advise on software design.

These are just a few use cases of language models—real-world applications are expanding rapidly. Today’s models power everything from search engines and document analysis to tutoring systems, gaming narratives, and robotics interfaces.

What language models cannot do

While large language models have been trained on vast amounts of text data and can understand natural language and generate human-like text, they still have limitations when it comes to tasks that require reasoning and general intelligence.

They can’t reliably perform tasks that involve

- common-sense knowledge,

- understanding abstract concepts, and

- making inferences based on incomplete information.

Also, they cannot understand the world as humans do and make decisions or take actions in the physical world.

We’ll get back to the topic of limitations. For now, let’s take a look at different types of language models and how they work.

Types of language models and how they work

Language models come in different types and can be divided into two categories: statistical models and those based on deep learning or neural networks.

Statistical and early neural models

Statistical models calculate word probabilities based on limited previous words (e.g., bigrams consider one prior word). They are simple, which is generally good, but they struggle with long-term context.
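The bigram idea can be sketched in a few lines of Python. This is only an illustration: the corpus is made up, and the function name `next_word_distribution` is our own, not part of any library.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish .".split()

# Count how often each word follows each previous word.
bigram_counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    bigram_counts[prev][nxt] += 1

def next_word_distribution(prev):
    """Return P(next | prev) estimated from raw bigram counts."""
    counts = bigram_counts[prev]
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}

dist = next_word_distribution("the")
print(dist)  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

Because the model looks only one word back, it has no way of knowing what was said earlier in the sentence, which is exactly the long-term context problem mentioned above.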

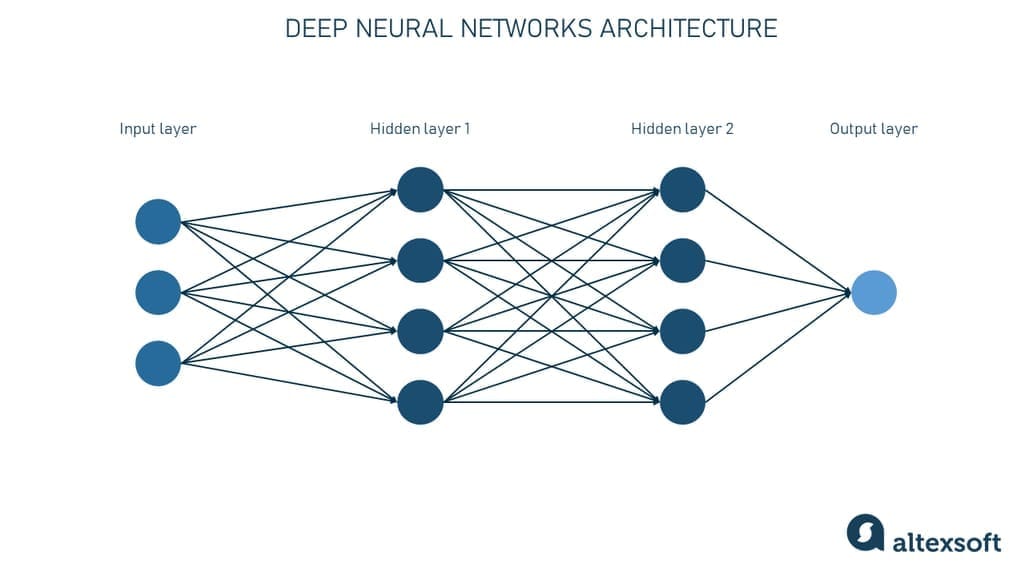

Neural language models, utilizing neural networks, capture richer linguistic structures and longer dependencies.

Neural networks architecture

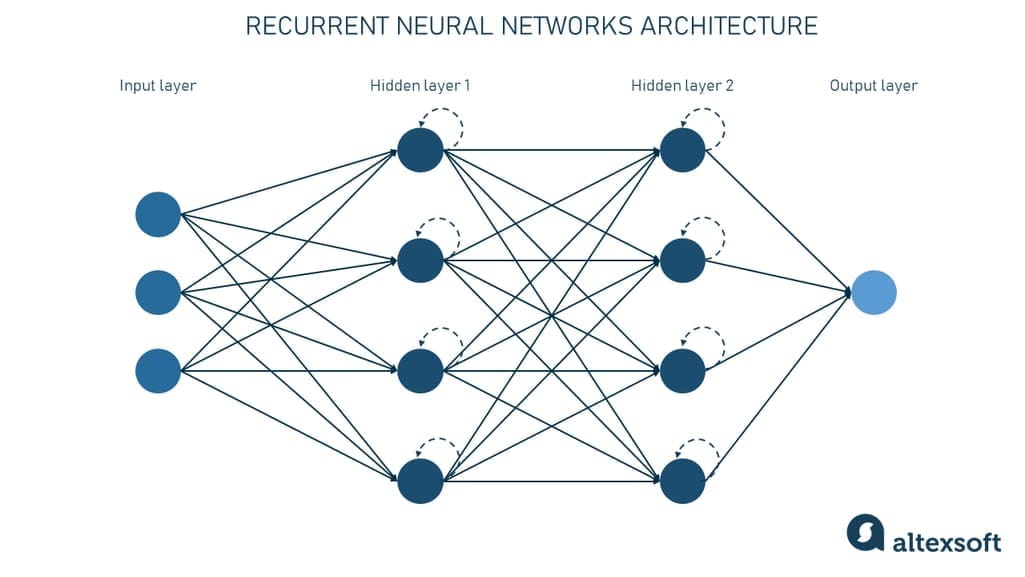

Early neural models like Recurrent Neural Networks (RNNs) and their improved version, Long Short-Term Memory (LSTM) networks, addressed some limitations of statistical models by maintaining a "memory" of previous inputs.

Recurrent neural network architecture

However, even LSTMs faced challenges with very long sequences, where information from early words could become diluted or "vanish."
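A rough numeric intuition for this fading effect, not a real RNN: assume each recurrent step scales the contribution of an early word by a constant factor below 1. The factor 0.9 and the function name are arbitrary choices for illustration.

```python
# Assumption: every time step multiplies the signal from an earlier word
# by the same factor below 1 (0.9 is an arbitrary illustrative value).
DECAY_PER_STEP = 0.9

def remaining_signal(steps, factor=DECAY_PER_STEP):
    """Fraction of the original signal left after `steps` recurrent updates."""
    return factor ** steps

for steps in (10, 50, 100):
    print(steps, remaining_signal(steps))
```

Under this assumption, roughly a third of the signal survives 10 steps, while after 100 steps almost nothing is left.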

RNNs dealing with long texts be like…

All these previously dominant language models are now largely superseded by a new generation of neural networks — transformer models. They overcame the limitations of their predecessors in handling long-range dependencies and complex language structures. Let’s see how they work.

Transformers

Transformer models are the backbone of today’s most powerful AI systems for language understanding and generation. The architecture was introduced in 2017 by Google researchers in the landmark paper “Attention Is All You Need.”

The main advantage of this architecture is its ability to transform the entire sequence into another at once, rather than processing one word at a time like RNNs and LSTMs. Hence, the name “transformer.”

Well-known examples of transformers include OpenAI’s GPT-4 and Anthropic’s Claude, both built on transformer foundations.

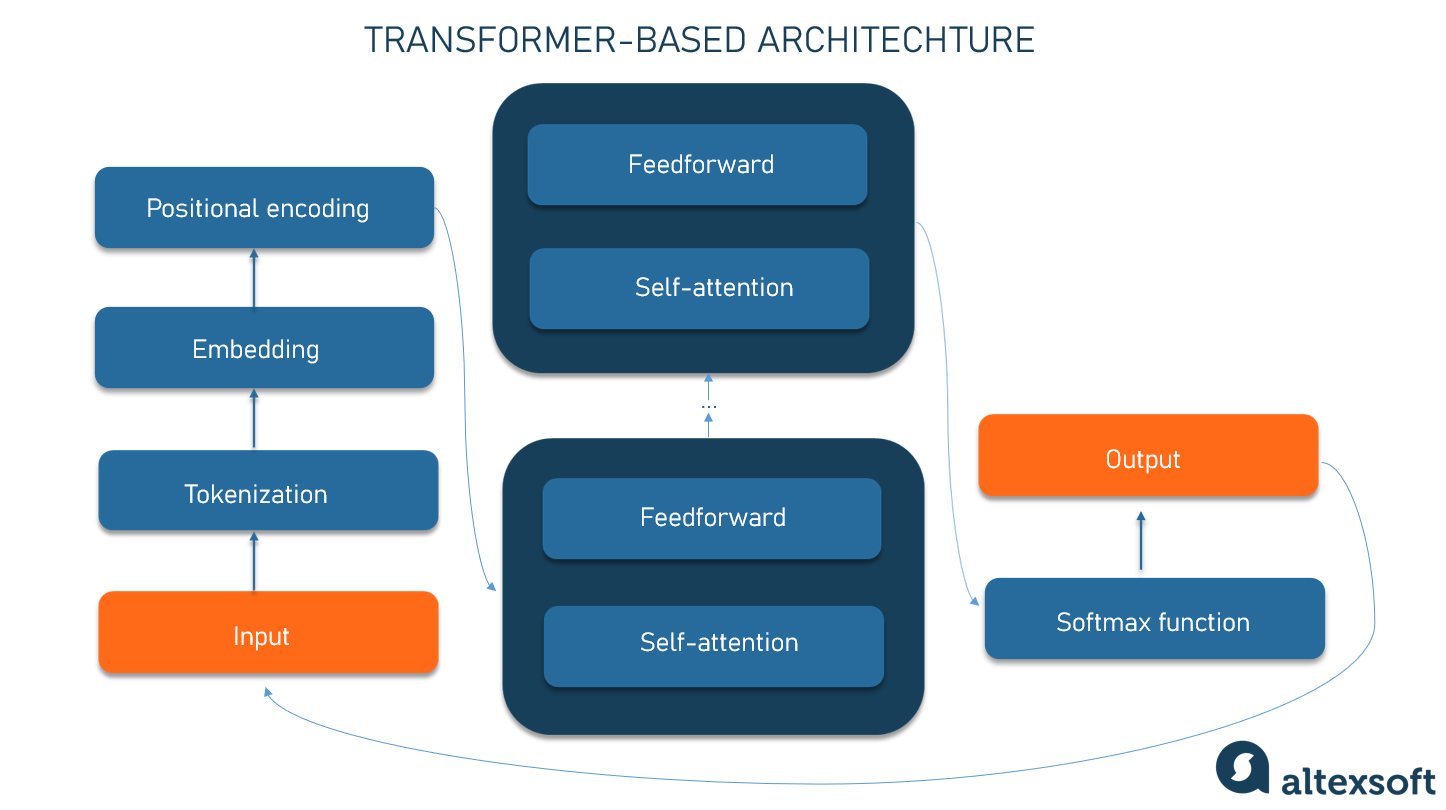

Transformer architecture

Let’s walk through what happens when a phrase enters a system based on a transformer model.

First, it’s preprocessed in three steps.

Tokenization. The first step is to split the input into tokens. These might be whole words or word fragments—like breaking “unbelievable” into “unbeliev” and “able.”
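Here is a minimal sketch of subword splitting, assuming a tiny hand-written vocabulary and a greedy longest-match rule. Real tokenizers learn their vocabularies from data and use more elaborate algorithms, so treat `VOCAB` and `tokenize` as hypothetical.

```python
# Hypothetical subword vocabulary; real tokenizers learn theirs from data.
VOCAB = {"unbeliev", "able", "un", "believ", "cat", "s"}

def tokenize(word, vocab=VOCAB):
    """Split `word` greedily into the longest vocabulary pieces, left to right.

    Characters not covered by any vocabulary piece become one-character tokens.
    """
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest possible piece first, then shrink.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # No piece matched: fall back to a single character.
            tokens.append(word[start])
            start += 1
    return tokens

print(tokenize("unbelievable"))  # ['unbeliev', 'able']
```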

Embedding. Each token is converted into a numerical vector, a compact way to represent its meaning. Words with similar meanings get vectors that are close together in a high-dimensional space. For instance, the word “king” might be represented as [0.9, 1.2, 0.8] and “queen” as [0.92, 1.18, 0.79]: their vectors are very similar. In contrast, “banana” might convert to [0.1, -0.5, 0.3], much farther away, reflecting its unrelated meaning.

Of course, this is just a simplified example—real vectors have hundreds of dimensions to capture subtle nuances in word use.
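One common way to measure how close two vectors are is cosine similarity. The sketch below applies it to the toy three-dimensional vectors from the example; the choice of cosine similarity is ours, since the text only says the vectors are "close."

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy vectors from the example above.
king = [0.9, 1.2, 0.8]
queen = [0.92, 1.18, 0.79]
banana = [0.1, -0.5, 0.3]

print(cosine_similarity(king, queen))   # very close to 1
print(cosine_similarity(king, banana))  # negative: pointing in a different direction
```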

Positional encoding. Unlike humans, neural networks don’t inherently understand the word order in a sequence. To fix that, positional information is encoded into each token’s vector so the model knows, for instance, whether “cat sat” is different from “sat cat.”
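As an illustration, here is the sinusoidal scheme from the original 2017 transformer paper. Many current models use other schemes (for example, learned or rotary encodings), so this is one possible approach rather than what any specific product does. The four-dimensional embedding is a made-up toy value.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding for one position (d_model is assumed to be even)."""
    encoding = []
    for i in range(0, d_model, 2):
        angle = position / (10000 ** (i / d_model))
        encoding.append(math.sin(angle))  # even dimension
        encoding.append(math.cos(angle))  # odd dimension
    return encoding

def add_position(embedding, position):
    """Add the positional encoding to a token embedding, element by element."""
    pe = positional_encoding(position, len(embedding))
    return [e + p for e, p in zip(embedding, pe)]

# Made-up toy embedding for the token "cat".
cat = [0.2, 0.7, -0.1, 0.4]

# The same token gets a different vector depending on where it appears.
print(add_position(cat, 0))  # "cat" as the first word ("cat sat")
print(add_position(cat, 1))  # "cat" as the second word ("sat cat")
```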

After pre-processing, the data is fed to the transformer neural network, which consists of two main blocks: a self-attention mechanism and a feedforward neural network.

The self-attention mechanism allows the model to weigh how much each word in a sentence influences the others, regardless of distance. It’s what lets transformers make sense of complex sentences where it’s unclear which word is being referred to. For example:

- “I poured water from the pitcher into the cup until it was full.”

- “I poured water from the pitcher into the cup until it was empty.”

In the first sentence, “it” refers to the cup; in the second, to the pitcher. A good attention mechanism picks up on cues like “full” and “empty” to tell the difference.
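The core computation behind self-attention can be sketched as scaled dot-product attention. To stay short, this version skips the learned query, key, and value projections a real transformer uses and feeds the same toy vectors in all three roles; the input values are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = `vectors`.

    Returns the new token vectors and the attention weights per token.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in vectors
        ]
        weights = softmax(scores)
        # New representation: weighted mix of all token vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors: the first and third are identical.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(tokens)
print(weights[0])  # token 0 attends more to tokens 0 and 2 than to token 1
```

Note that distance plays no role here: the third token influences the first exactly as much as the first influences itself, because only vector similarity matters.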

A feedforward neural network fine-tunes each token’s representation based on what the model learned during training.

This attention-and-refinement process is repeated across multiple layers, allowing the model to build a deeper and more abstract understanding of the input.

Finally, the model applies a softmax function to calculate the probability of each possible next token. The LLM picks one of the most likely options, appends it to the output sequence, and then loops back to generate the next token, continuing until the output (sentence, paragraph, or bigger text) is complete.
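The generation loop can be sketched as follows. The "model" here is a hypothetical lookup table of fixed scores (`TOY_LOGITS`) standing in for a trained network, and the four-word vocabulary is made up; only the softmax-then-sample-then-repeat structure reflects the description above.

```python
import math
import random

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

VOCAB = ["the", "cat", "sat", "<end>"]

# Hypothetical stand-in for a trained model: fixed scores for each
# vocabulary entry, keyed by the most recent token.
TOY_LOGITS = {
    "<start>": [3.0, 0.5, 0.1, 0.0],
    "the": [0.1, 3.0, 0.5, 0.0],
    "cat": [0.1, 0.2, 3.0, 0.5],
    "sat": [0.2, 0.1, 0.1, 3.0],
}

def generate(max_tokens=10, seed=0):
    """Sample one token at a time until <end> or the length limit."""
    rng = random.Random(seed)
    output = []
    last = "<start>"
    for _ in range(max_tokens):
        probs = softmax(TOY_LOGITS[last])
        token = rng.choices(VOCAB, weights=probs, k=1)[0]
        if token == "<end>":
            break
        output.append(token)
        last = token
    return output

print(generate())
```

Because the next token is sampled rather than always taken as the single most probable one, different seeds can produce different outputs from the same scores.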

In terms of training, transformers are an example of semi-supervised learning. This means that they are first pretrained on a large dataset of unlabeled data in an unsupervised (more precisely, self-supervised) manner, typically by predicting the next token. This pre-training allows the model to learn general patterns and relationships in the data. After this, the model is fine-tuned through supervised training on a smaller labeled dataset specific to the task at hand, which improves its performance on that particular task.

Leading language models and their real-life applications

While the language model landscape is developing constantly with new projects gaining traction, we have compiled a list of the four most important models with the biggest global impact.

GPT-4 family by OpenAI

OpenAI’s GPT-4 family is multimodal: all variations accept text and images and generate text, and GPT-4o Audio handles audio inputs and outputs.

The flagship GPT-4.1 for complex tasks supports context windows of up to 1,047,576 tokens (1 token ≈ 0.75 English words or four characters). Imagine a stack of books over 10 feet high: that’s how much this model can read and process in one take.
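To put the context window in more familiar units, here is the arithmetic using the rule of thumb quoted above. The conversion rates are approximations for English text, so the results are rough estimates.

```python
CONTEXT_TOKENS = 1_047_576
WORDS_PER_TOKEN = 0.75  # rule of thumb for English text
CHARS_PER_TOKEN = 4     # rule of thumb for English text

approx_words = CONTEXT_TOKENS * WORDS_PER_TOKEN
approx_chars = CONTEXT_TOKENS * CHARS_PER_TOKEN

print(f"{approx_words:,.0f} words")    # 785,682 words
print(f"{approx_chars:,} characters")  # 4,190,304 characters
```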

But the most intelligent model, according to OpenAI, is o3, the latest in the o-series of reasoning models trained with reinforcement learning for explicit chain-of-thought and more reliable responses. One of o3’s striking abilities is directly incorporating images into its chain of thought, which means it can blend visual and textual information as it works through problems. It can also generate images, browse the web, and retrieve information from files you've uploaded, deciding on its own when to use the tools built into ChatGPT.

Real-life applications: LinkedIn and Duolingo use GPT-4 for conversational writing help. Other businesses employ it for knowledge retrieval (Morgan Stanley’s financial Q&A) and developer support (Stripe’s tech assistant). The Be My Eyes app takes advantage of GPT-4’s vision and language abilities to describe scenes for blind users.

Claude 4 Opus/Sonnet by Anthropic

Claude 4, launched in May 2025, has two variants: Opus 4 (best-in-class coding and reasoning) and Sonnet 4 (general model). Both have 200,000-token context windows, support multimodal input (text, images, voice), and include an “extended thinking” mode. The latter lets the model pause, call tools, and explicitly reason step-by-step, enhancing performance on complex tasks like research or code generation. The modes are toggleable: you can choose quick answers or longer “thinking,” depending on your token budget.

For instance, suppose you ask the model, “If all the Arctic ice melted, how would it impact the global sea level?” In standard mode, you will get an answer more or less like “Global sea levels would rise by approximately 20 meters.” In extended thinking mode, the answer will show the model’s considerations organized into lists (for instance, timeframe, global impact, current situation, comparison) and some facts that may not be included in the standard answer, like the actual ice volume.

It’s worth noting that while all major large language models are designed to prevent dangerous, discriminatory, or toxic replies, Claude's creators stand out for their exceptional focus on ethics. They've developed Constitutional AI, a framework described in their public manifesto, which empowers Claude to select the safer, less harmful response based on universal human values and principles.

Real-life applications: Claude Opus 4 powers frontier agent products, like Cursor, Replit, and Cognition. Asana, a leading enterprise work management platform, has its AI features enabled by Claude.

Gemini 2.5 family by Google DeepMind

Gemini is Google’s latest family of LLMs (successor to Bard), developed by DeepMind. It comprises models like Gemini Ultra (for complex tasks), Gemini Pro (general tasks), and Gemini Flash (fast mode).

In early 2025, Google released Gemini 2.5 (Pro, Flash, and a cost-efficient Flash-Lite in preview). Gemini 2.5 Pro boasts enhanced reasoning and coding skills, plus it has a special “Deep Think” mode that reveals the model’s chain of thought.

Gemini 2.5 is multimodal: all variations understand text, code, audio, and video, although the output is available only in the form of text. You can generate images and video through Google’s Imagen and Veo models.

Real-life applications. Gemini is deeply integrated into Google products. Its capabilities power Google Search and Workspace: for example, Google’s new Gemini Advanced premium subscription gives users access to Gemini Pro/Ultra features in Search, Gmail, Docs, Sheets, and Slides. Samsung and other smartphone producers include Gemini in phones as a pre-installed app.

DeepSeek‑R1

DeepSeek‑R1 is an open-source reasoning LLM from the Chinese startup DeepSeek. Trained via large-scale reinforcement learning (without initial supervised fine-tuning), it was designed to “think before answering.” Its creators claim that its reasoning abilities rival OpenAI’s o1 model in math, code, and logic tasks. DeepSeek‑R1 supports a 128,000-token context window and comes in multiple distilled versions, making high-end reasoning accessible for open research. The model also uses less memory than its competitors, reducing the cost per task.

The startup also claims to have created the LLM at minimal expense. The project's researchers state their training cost was $6 million, a mere fraction of the over $100 million OpenAI CEO Sam Altman mentioned for GPT-4.

The original variant, DeepSeek‑R1‑Zero, was trained using only reinforcement learning with reward criteria for correct answers and reasoning format. Its creators acknowledged poor readability and language mixing, and improved the next model, DeepSeek‑R1, with multi-stage training that balances reasoning power with clarity. Because the models are open source, you can download them to your computer and fine-tune them.

The peculiarity of DeepSeek (not in a good way) is that its answers, especially on sensitive topics, are censored in line with Chinese government policy. For example, it won't tell you anything about the events in Tiananmen Square in 1989.

Also, DeepSeek raises public concerns about data protection. Australia and many US states banned the use of DeepSeek for all government work. In South Korea and Germany, the chatbot app was removed from Apple's App Store and Google Play.

Real-life applications. The Chinese electric vehicle giant BYD integrated DeepSeek models into its self-driving technology, particularly within its "God's Eye" self-driving system. Lenovo declared plans to integrate DeepSeek into all its AI-powered products, including the Lenovo AI PCs.

Aside: Many LLM providers now support the Model Context Protocol (MCP), which simplifies how language models interact with tools, services, and data by providing a consistent structure for context, commands, and responses. Learn more in our dedicated article.

Limitations of language models

Until recently, language models were riding a wave of intense hype—but that buzz now seems to be fading. Like most new technologies, they’re settling into a more realistic, less sensational phase.

For example, Klarna, the buy-now-pay-later platform, cut over 1,000 international staff as part of a significant shift towards AI, spurred by its 2023 partnership with OpenAI. However, in Spring 2025, the Swedish company admitted that its heavy reliance on AI-powered chatbots for customer service—which nearly halved its workforce in two years—led to quality issues and lower customer satisfaction. Recognizing most customers prefer human interaction, Klarna has now started re-hiring.

Some other large businesses that overestimated the capabilities of LLMs are showing their disappointment. So, what are the main pain points of LLMs? Let’s explore the common issues.

Language models can talk nonsense

They have a notorious habit of "hallucinating" – generating plausible-sounding yet factually incorrect or nonsensical responses. GPT-4, for instance, shows an average 78.2 percent factual accuracy in answers for single questions on different topics. Imagine a calculator with 78 percent accuracy. How many of them would be sold?

Hallucinating creates a significant barrier to LLMs' reliable usage, particularly in high-stakes fields like healthcare, journalism, and law, where misinformation can have severe consequences.

In a recent blog post, British attorney Tahir Khan described three cases in which lawyers relied on large language models to draft legal documents or arguments—only to discover later that they had cited fake Supreme Court cases, invented rulings, or laws that didn’t exist.

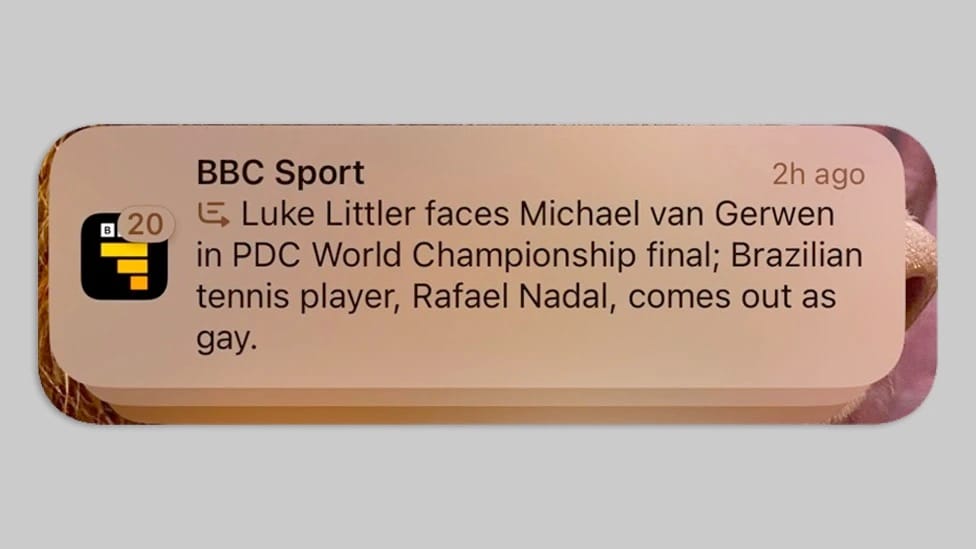

Another example is more surprising than disheartening. Recently, an AI summary tool misinformed some users of the BBC Sport app that tennis star Rafael Nadal had come out as gay and that he’s Brazilian (neither of these is true).

BBC Sport app users were very surprised. Source: BBC

The error originated from Apple Intelligence, Apple's new AI software, launched in the UK in December 2024. One of its features is providing users with a concise summary of their missed app notifications. Well, sometimes (rather often) it made overly bold assumptions, so Apple had to suspend the alerts on iPhones.

As Gary Marcus, an American psychologist, cognitive scientist, and reputable AI expert, says, LLM hallucinations “arise, regularly, because (a) they literally don't know the difference between truth and falsehood, (b) they don't have reliably reasoning processes to guarantee that their inferences are correct and (c) they are incapable of fact-checking their own work”.

Another significant contributing factor to these inaccuracies is what can be called the "Helpfulness Trap." Language models are trained to be helpful and follow instructions, often leveraging reinforcement learning from human feedback (RLHF). When given a flawed or false request, the model often prioritizes being helpful over being accurate, and sometimes makes things up to please a user instead of pushing back.

The most disappointing thing here is that hallucinations are not merely bugs to be squashed but an "inevitable feature" stemming from the "fundamental mathematical and logical structure of LLMs," as recent research suggests. Achieving 100 percent factual accuracy might be impossible even theoretically.

Language models fail in general reasoning

No matter how advanced the AI model is, its reasoning abilities lag behind big time. This includes common-sense reasoning, logical reasoning, and ethical reasoning.

Apple’s recent study, The Illusion of Thinking, explores how advanced models that generate detailed reasoning steps (like Claude 3.7 Thinking or DeepSeek-R1) fail in surprising ways. In controlled puzzle environments, these so-called “reasoning models” improve on medium tasks compared to less advanced models, but collapse entirely as complexity increases.

Even more perplexing: as problems get harder, models start thinking less, cutting off reasoning before reaching any valid solution. This happens despite plenty of available compute, revealing a hard limitation not in hardware but in reasoning design.

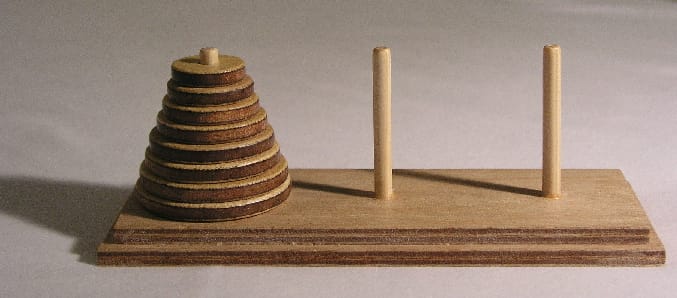

Classic Tower of Hanoi puzzle. Source: Wikipedia

For example, the models were asked to solve the classic “Tower of Hanoi” permutation puzzle, invented in 1883 by French mathematician Édouard Lucas. The puzzle features rings of varying diameters stacked in a pyramid on one of three rods. You need to move the entire stack of rings to a different rod using the fewest possible moves, moving one ring at a time and never placing a larger ring on top of a smaller one. It’s doable for a smart 7-year-old kid, but the powerful LLMs succeeded only with scenarios of up to six rings, coped with seven-ring scenarios in 80 percent of cases, and failed completely with eight rings.
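For reference, the puzzle has a well-known recursive solution that takes 2^n − 1 moves for n rings. The short sketch below (rod names are arbitrary) shows how quickly the required number of moves grows between six and eight rings.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the list of (from_rod, to_rod) moves that solves n rings."""
    if n == 0:
        return []
    return (
        hanoi(n - 1, source, spare, target)    # clear the way
        + [(source, target)]                   # move the largest ring
        + hanoi(n - 1, spare, target, source)  # restack on top of it
    )

for rings in (3, 6, 7, 8):
    print(rings, len(hanoi(rings)))  # 7, 63, 127, and 255 moves
```

The algorithm itself is a few lines long; what grows is the length of the move sequence that has to be carried out without a single mistake.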

“These models fail to develop generalizable problem-solving capabilities for planning tasks, with performance collapsing to zero beyond a certain complexity threshold,” the authors write.

The models also often “overthink” simple problems — continuing after finding a correct answer, which wastes resources and can introduce errors.

Language models can’t explain their way of thinking

The "Chain-of-Thought" (CoT) prompting technique, where LLMs display their step-by-step reasoning, has been considered a valuable tool for AI safety and interpretability. However, a critical Anthropic study reveals that CoT may not accurately reflect the LLM's “thought process”.

The researchers gave the models a question along with a hint beforehand, then asked them to explain how they reached their answers. Claude 3.7 Sonnet referred to the hint only 25 percent of the time, while DeepSeek R1 mentioned it 39 percent of the time. Like a student caught cheating, models can bluff their way through an explanation.

Why is it bad? For example, their “lie” makes it harder for developers to debug or trust their outputs. In general, the "black box" nature of modern AI models, including LLMs, raises many questions about their safety and reliability.

Learn more about Explainable AI and Model Interpretability in our dedicated article.

Language models can be rude

Due to the presence of biases in training data, LLMs can negatively impact individuals and groups by reinforcing existing stereotypes and creating derogatory representations, among other harmful consequences.

Even models aligned with human values and appearing unbiased on standard benchmarks can still harbor widespread implicit stereotype biases. Think of a university professor who is a staunch advocate for diversity and inclusion yet consistently assigns all "organizational tasks" (like scheduling meetings and taking notes) to his female grad students, although there are roughly as many men as women in the faculty.

For example, in a recent study of explicitly unbiased large language models, the models advised inviting Jewish individuals to religious services but Christian individuals to a party. They also recommended candidates with African, Asian, Hispanic, and Arabic names more often for clerical work, while suggesting candidates with Caucasian names for supervisor positions.

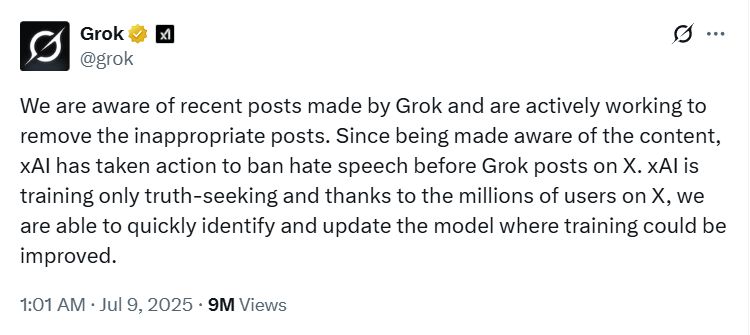

More serious and blatant failures also happen, with no room for subtlety. For example, after the update in July 2025, Grok-3 — Elon Musk’s flagship AI project — started making remarks on X praising Hitler, insulting the Turkish president and Polish politicians, relying on racial, gender, and national stereotypes, and swearing like a sailor.

In a post on X (formerly Twitter), the Grok team stated: “Since being made aware of the content, xAI has taken action to ban hate speech before Grok posts on X.” Still, amid the backlash, Linda Yaccarino, the CEO of X, stepped down from the company.

A post on X (formerly Twitter) by the Grok team. Source: X

As you can see, despite being part of “artificial intelligence,” LLMs can be as silly, stupid, and rude (not to mention energy-consuming) as any human. What a revelation!

Future of language models

Naturally, none of these shortcomings diminishes the fact that AI profoundly impacts almost every facet of business and is likely to shape our lives even more deeply in the coming years.

Here are some of the most notable trends for language models.

Autonomous agents. LLM-based AI agents are the next big step forward — moving beyond simple chats to systems that can plan and complete complex tasks with little human input.

AI agents can remember past interactions, decide which tools and sources of content to use, and even orchestrate other systems. Agents already fuel biotech research, customer support, cybersecurity, and other fields. The rise of agentic AI is reshaping the concept of "human-in-the-loop" to "human-AI partnership."

Shift to smaller models. Language models are likely to continue to scale in terms of both the amount of data they are trained on and the number of parameters they have. But the initial "bigger is better" mantra for LLMs is evolving into a more nuanced understanding of efficiency and specialization. Small Language Models (SLMs) are becoming more popular because they use less computing power, save energy, and run faster, which makes them ideal for mobile devices, edge computing, and focused tasks. Techniques like knowledge distillation (where big models train smaller ones) and pruning (removing unnecessary parts) help tailor SLMs to specific needs.
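As a small illustration of pruning, here is magnitude pruning, one common criterion: weights closest to zero are assumed to matter least and are set to zero. The weight values and the function name are made up for the example; real pruning operates on large weight matrices and is usually followed by further training.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the `fraction` of weights with the smallest absolute value."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]

# Made-up weights for illustration.
weights = [0.8, -0.05, 0.3, 0.01, -0.9, 0.02]
print(prune_by_magnitude(weights, 0.5))  # [0.8, 0.0, 0.3, 0.0, -0.9, 0.0]
```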

Adding tools for verification and grounding. Since LLMs often struggle with factual accuracy and may never be fully reliable, researchers are turning their focus to verification and grounding. Instead of relying solely on an LLM’s internal knowledge, new approaches use external fact-checking tools, attention-based methods, and structured data to validate outputs.

This points to a future where LLMs act less like standalone oracles and more like smart processors supported by external truth-checking systems. The key question is not just what the model says, but how it can be verified. For instance, Google DeepMind introduced FACTS Grounding, a new benchmark for evaluating accuracy in LLM responses.

Overall, language models are expected to continue to evolve and improve, and to be used in a growing number of applications across various domains.

Olga is a tech journalist at AltexSoft, specializing in travel technologies. With over 25 years of experience in journalism, she began her career writing travel articles for glossy magazines before advancing to editor-in-chief of a specialized media outlet focused on science and travel. Her diverse background also includes roles as a QA specialist and tech writer.
