Human languages are complex, and they get even more complex once context comes into play. Take the name Lincoln. Some people will instantly think of the 16th President of the United States, a towering historical figure. Others will think of the car brand of the same name. One simple word can carry very different meanings.

As humans, we discern such meanings and categories effortlessly, a testament to our intuitive grasp of the world around us. For computers, this seemingly straightforward task becomes a challenge packed with ambiguity. Such complexities underscore the need for robust named entity recognition (NER), a technique that teaches machines to find and label the names and other key terms in a text while taking context into account.

This article explains what named entity recognition is, its working principles, and how it is used in real life. It also sheds light on different NER methods and ways to implement a NER model.

What is Named Entity Recognition (NER)?

Named entity recognition (NER) is a subfield of natural language processing (NLP) that focuses on identifying and classifying specific data points from textual content. NER works with salient details of the text, known as named entities — single words, phrases, or sequences of words — by identifying and categorizing them into predefined groups. The categories encompass a diverse range of subjects present within the text, including individuals' names, geographic locations, organizational names, dates, events, and even specific quantitative values such as money and percentages.

Key concepts of NER

Named entities are not the only concept worth knowing in the NER world. A few related terms will help you understand the topic better.

POS tagging. Standing for "part-of-speech tagging," this process assigns labels to words in a text corresponding to their specific part of speech, such as adjectives, verbs, or nouns.

Corpus. This is a collection of texts used for linguistic analysis and training NER models. A corpus can range from a set of news articles to academic journals or even social media posts.

Chunking. This is an NLP technique that groups individual words or phrases into "chunks" based on their syntactic roles, creating meaningful clusters like noun phrases or verb phrases.
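A toy noun-phrase chunker shows the idea. It assumes the words already carry Penn Treebank-style POS tags, assigned by hand below rather than by a tagger. It uses a simplified grammar of our own: an optional determiner, any adjectives, then one or more nouns.

```python
def np_chunks(tagged):
    """Group (word, tag) pairs into noun-phrase chunks.

    Simplified rule (an assumption for illustration): an NP is an optional
    determiner (DT), then any adjectives (JJ*), then one or more nouns (NN*).
    """
    chunks, i, n = [], 0, len(tagged)
    while i < n:
        j = i
        if tagged[j][1] == "DT":
            j += 1
        while j < n and tagged[j][1].startswith("JJ"):
            j += 1
        k = j
        while k < n and tagged[k][1].startswith("NN"):
            k += 1
        if k > j:  # at least one noun: emit the chunk
            chunks.append(" ".join(word for word, _ in tagged[i:k]))
            i = k
        else:      # no noun here: move on by one token
            i += 1
    return chunks

# Hand-assigned tags for illustration.
tagged = [("The", "DT"), ("quick", "JJ"), ("courier", "NN"), ("delivered", "VBD"),
          ("the", "DT"), ("package", "NN"), ("to", "TO"), ("Mary", "NNP")]
print(np_chunks(tagged))  # ['The quick courier', 'the package', 'Mary']
```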

Word embeddings. These are dense vector representations of words, capturing their semantic meanings. Word embeddings translate words or phrases into numerical vectors of fixed size, making it easier for machine learning models to process. Tools like Word2Vec and GloVe are popular for generating such embeddings, and they help in understanding the context and relationships between words in a text.
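Embeddings are usually compared with cosine similarity, sketched minimally below. The four-dimensional vectors are invented for illustration. Real Word2Vec or GloVe embeddings are learned from a corpus and typically have tens to hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up vectors for illustration only, not real learned embeddings.
embeddings = {
    "london": [0.9, 0.1, 0.8, 0.0],
    "paris":  [0.8, 0.2, 0.9, 0.1],
    "banana": [0.0, 0.9, 0.1, 0.8],
}

print(cosine_similarity(embeddings["london"], embeddings["paris"]))   # high
print(cosine_similarity(embeddings["london"], embeddings["banana"]))  # low
```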

NER example

Consider the sentence: "Mary from the HR department said that The Ritz London was a great hotel option to stay in London."

NER performed on the sentence using the displaCy Named Entity Visualizer.

In this sentence:

- "Mary" is labeled as PERSON, indicating that it is an entity representing a person's name.

- "The Ritz" is tagged as ORG, which stands for Organization. This means it is recognized as an entity that refers to companies, agencies, institutions, etc.

- "London" has been classified as GPE, which stands for Geopolitical entity. GPEs represent countries, cities, states, or any other regions with a defined boundary or governance.

In essence, if you aim to discern a text's who, where, what, and when, NER is the technique to use.

NER for structuring unstructured data

NER plays a pivotal role in converting unstructured text into structured data. It systematically identifies and categorizes key elements such as names, places, dates, and other specific terms within the text. By doing so, NER transforms vast amounts of textual content into organized datasets ready for further analysis. For instance, NER can quickly extract company names, stock prices, and economic metrics from a news article, turning a lengthy narrative into a concise, structured record.
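As a rough illustration of the structuring step, the sketch below groups entity mentions by label into a single record. The entity list is a hypothetical, hard-coded NER output based on the hotel sentence from the example above. It is not the output of a specific model.

```python
from collections import defaultdict

def to_record(entities):
    """Group (text, label) pairs by label, dropping repeated mentions."""
    record = defaultdict(list)
    for text, label in entities:
        if text not in record[label]:
            record[label].append(text)
    return dict(record)

# Hypothetical NER output, hard-coded here instead of produced by a model.
# "London" appears twice because the city is mentioned twice in the sentence.
entities = [("Mary", "PERSON"), ("The Ritz", "ORG"), ("London", "GPE"), ("London", "GPE")]
print(to_record(entities))
# {'PERSON': ['Mary'], 'ORG': ['The Ritz'], 'GPE': ['London']}
```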

Key use cases of NER

Named entity recognition has far-reaching implications for various sectors, enhancing processes and delivering sharper insights. Below are some of its pivotal applications.

Information retrieval

Information retrieval is the process of obtaining information, often from large databases, which is relevant to a specific query or need.

Search engines, for example, use NER to improve the precision of search results. If a researcher enters "Tesla's battery innovations in 2023" into a database or search engine, NER recognizes that "Tesla" is a company and "2023" is a specific year. This distinction helps ensure that the results focus on Tesla's battery-related advancements in 2023, filtering out unrelated articles about Tesla or about battery innovations in general.
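Here is a toy sketch of entity-aware filtering. It assumes each document has already been run through an NER model and indexed as a set of (text, label) pairs; the index below is hypothetical. Because matching is on the pair rather than the bare string, a document where "Tesla" was tagged as a person (the inventor) is not confused with one about the company.

```python
def search(documents, query_entities):
    """Return ids of documents whose entity set contains every query entity."""
    return [doc_id for doc_id, ents in documents.items() if query_entities <= ents]

# Hypothetical index of pre-extracted (text, label) pairs per document.
documents = {
    "doc1": {("Tesla", "ORG"), ("2023", "DATE")},
    "doc2": {("Tesla", "ORG"), ("2019", "DATE")},
    "doc3": {("Tesla", "PERSON"), ("2023", "DATE")},
}
query = {("Tesla", "ORG"), ("2023", "DATE")}  # entities found in the query
print(search(documents, query))  # ['doc1']
```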

Content recommendation

Recommender systems suggest relevant content to users based on their behavior, preferences, and interaction history.

Modern content platforms, from news websites to streaming services like Netflix, can use NER to fine-tune their recommendation algorithms. Suppose a user reads several articles about "sustainable travel trends." NER can flag "sustainable travel" as a distinct topic, prompting the recommendation engine to suggest more articles or documentaries in that niche and giving the user a tailored content experience.

Automated data entry

Robotic process automation (RPA) refers to software programs that replicate human actions to perform routine business tasks. Despite the name, these programs have nothing to do with hardware robots. Instead, they mimic the way white-collar workers interact with software, such as filling in forms or copying data between systems.


In the corporate ecosystem, automated data entry augmented with NER can streamline operations considerably. Take a logistics company that processes a large number of bills of lading every day. An RPA system equipped with NER can scan these documents and identify entities such as the shipper, the consignee, and the carrier name. Once they are detected, the system can extract this data and enter it into the company's management software for easier access and organization.

Sentiment analysis enhancement

Sentiment analysis is a technique that combines statistics, NLP, and machine learning to detect and extract subjective content from text. This could include a reviewer's emotions, opinions, or evaluations regarding a specific topic, event, or the actions of a company.

When coupled with NER, sentiment analysis offers businesses a granular understanding of customer feedback. Consider a hotel guest's review stating, "The room was spacious and had a breathtaking view, but the breakfast buffet was limited." By picking out what is being discussed, sentiment analysis tools can determine that the positive sentiment relates to the "room" and its "view," while the negative sentiment concerns the "breakfast buffet." This finer-grained variant is often called aspect-based sentiment analysis. Such detailed insights let hotels address specific areas for improvement and elevate the guest experience.
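The sketch below is a deliberately crude version of aspect-level sentiment. It splits the review into clauses at "but" and scores each clause with a tiny hand-made word list. Each aspect then inherits the score of the clause that mentions it. The aspect terms are hard-coded here; in practice they would come from NER or an aspect extractor. The lexicons are invented for illustration.

```python
import re

# Invented mini-lexicons for illustration only.
POSITIVE = {"spacious", "breathtaking", "great", "friendly"}
NEGATIVE = {"limited", "noisy", "dirty", "slow"}

def aspect_sentiment(review, aspects):
    """Assign each aspect the polarity of the clause that mentions it."""
    results = {}
    # Crude clause split on a contrastive "but"; real systems use parsers.
    for clause in re.split(r",?\s+but\s+", review, flags=re.IGNORECASE):
        words = re.findall(r"\w+", clause.lower())
        score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
        polarity = "positive" if score > 0 else "negative" if score < 0 else "neutral"
        for aspect in aspects:
            if aspect in clause.lower():
                results[aspect] = polarity
    return results

review = ("The room was spacious and had a breathtaking view, "
          "but the breakfast buffet was limited.")
aspects = ["room", "view", "breakfast buffet"]  # assumed to come from extraction
print(aspect_sentiment(review, aspects))
# {'room': 'positive', 'view': 'positive', 'breakfast buffet': 'negative'}
```

Substring matching is naive: "room" would also match "bathroom." This is one reason production systems rely on trained models instead.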

How NER works

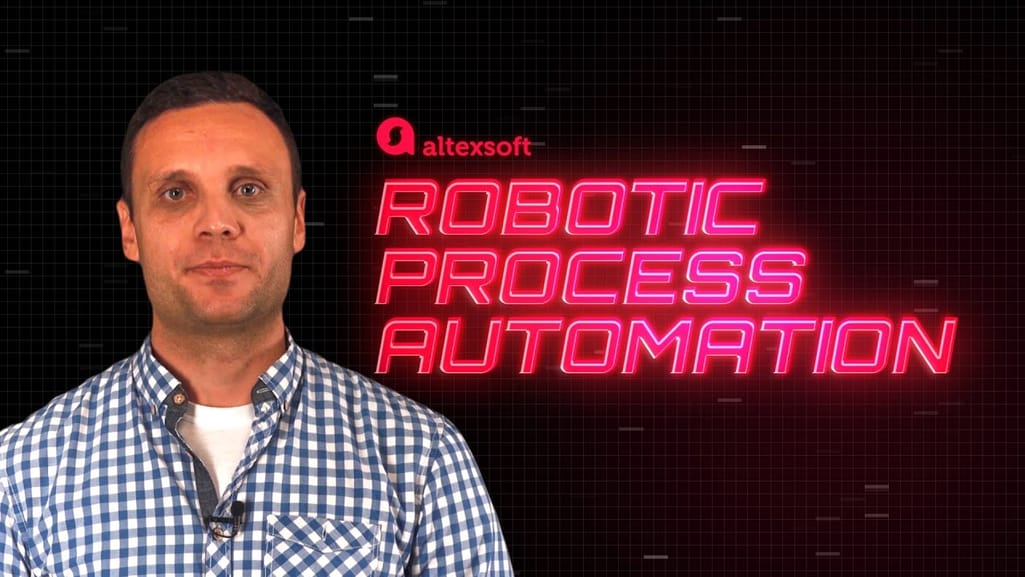

Fundamentally, NER revolves around two primary steps:

- identifying entities within the text and

- categorizing these entities into distinct groups.

Two steps of the NER process performed on an excerpt from Jack Kerouac's "On the Road."
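The two steps can be sketched as separate functions. This toy version treats runs of capitalized tokens as candidate entities (detection). It then looks each candidate up in a small hypothetical gazetteer (classification). Real systems learn both steps from data.

```python
import re

# Hypothetical gazetteer used only for the classification step.
GAZETTEER = {"Ada Lovelace": "PERSON", "London": "GPE"}

def detect(text):
    """Step 1: propose candidate entities as runs of capitalized tokens."""
    spans, current = [], []
    for token in re.findall(r"\w+|[^\w\s]", text):
        if token[0].isupper():
            current.append(token)
        else:
            if current:
                spans.append(" ".join(current))
            current = []
    if current:
        spans.append(" ".join(current))
    return spans

def classify(spans):
    """Step 2: assign each candidate a category, or UNKNOWN if not listed."""
    return [(span, GAZETTEER.get(span, "UNKNOWN")) for span in spans]

candidates = detect("Ada Lovelace worked with Charles Babbage in London.")
print(classify(candidates))
# [('Ada Lovelace', 'PERSON'), ('Charles Babbage', 'UNKNOWN'), ('London', 'GPE')]
```

The capitalization heuristic would also catch sentence-initial words such as "The." This is one reason detection is usually learned rather than hand-coded.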

Let’s look at each step in more detail.

Entity detection

Entity detection, often called mention detection or named entity identification, is the initial and fundamental phase in the NER process. It involves systematically scanning and identifying chunks of text that potentially represent meaningful entities.

Tokenization. At its most basic, a document or sentence is just a long string of characters. Tokenization is the process of breaking this string into meaningful pieces called tokens. In English, tokens are often equivalent to words but can also represent punctuation or other symbols. That kind of segmentation simplifies the subsequent analytical steps by converting the text into manageable units.
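A simple regex-based tokenizer gives a feel for this step. The pattern below is an assumption for illustration, not the tokenizer used by spaCy, NLTK, or any other library. It keeps word characters together, including a trailing apostrophe suffix such as "'s," and emits each punctuation mark as its own token.

```python
import re

# One token per run of word characters (keeping an apostrophe suffix such as
# "'s" attached), or per single punctuation mark.
TOKEN_PATTERN = re.compile(r"\w+(?:'\w+)?|[^\w\s]")

def tokenize(text):
    return TOKEN_PATTERN.findall(text)

print(tokenize("Mary said: London's hotels are great!"))
# ['Mary', 'said', ':', "London's", 'hotels', 'are', 'great', '!']
```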

Feature extraction. Simply splitting the text isn't enough. The next challenge is to understand the significance of these tokens. This is where feature extraction comes into play.

It involves analyzing the properties of tokens, such as:

- morphological features that deal with the form of words, like their root forms or prefixes;

- syntactic features that focus on the arrangement and relationships of words in sentences; and

- semantic features that capture the inherent meaning of words and can sometimes tap into broader world knowledge or context to better understand a token's role.

Once entities are detected, the next phase of the NER process is entity classification.

Entity classification

Entity classification involves assigning the identified entities to specific categories or classes based on their semantic significance and context. These categories can range from person and organization to location, date, and myriad other labels depending on the application's requirements.

A nuanced process, entity classification demands a keen understanding of the context in which entities appear. It draws on linguistic, statistical, and sometimes domain-specific knowledge. For example, "Apple" in a tech context most likely refers to the technology company, while in a culinary context it more likely means the fruit.

Another example is the sentence "Summer played amazing basketball," where "Summer" would be classified as a person due to the contextual clue provided by "basketball." However, with no such clues present, "Summer" might also signify the season. Such ambiguities in natural language often necessitate linguistic analysis or advanced NER models trained on extensive datasets to differentiate between possible meanings.
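Here is a toy sketch of context-based disambiguation for the "Summer" example. If the sentence contains words suggesting an agent (someone who plays, scores, or speaks), the word is labeled as a person; otherwise it is labeled as a season. The cue list and the SEASON label are hypothetical. Trained models learn such cues from data instead of relying on a fixed list.

```python
import re

# Hypothetical cue words suggesting that "Summer" names a person (it does
# something) rather than a season.
PERSON_CUES = {"played", "said", "scored", "coached", "basketball"}

def classify_summer(sentence):
    words = set(re.findall(r"\w+", sentence.lower()))
    return "PERSON" if words & PERSON_CUES else "SEASON"

print(classify_summer("Summer played amazing basketball"))      # PERSON
print(classify_summer("Summer is the best time to visit Rome"))  # SEASON
```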

Approaches to NER

Named entity recognition has evolved significantly over the years, with multiple approaches being developed to tackle its challenges. Here are the common NER methods.

The rule-based method of NER

Rule-based approaches to NER rely on a set of predefined rules or patterns to identify and classify named entities.

These rules are often derived from linguistic insights and are codified into the system using the following techniques.

Regular expressions. These use pattern matching to detect entities with known structures, such as phone numbers or email addresses.
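Here is a minimal sketch of regex-based extraction in Python. Both patterns are simplified assumptions; the phone pattern only covers US-style ten-digit numbers. The labels EMAIL and PHONE are illustrative.

```python
import re

# Simplified patterns for illustration; robust email and phone matching is
# considerably more involved.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\(?\d{3}\)?[-. ]?\d{3}[-. ]\d{4}"),  # US-style numbers
}

def regex_entities(text):
    """Return (label, match) pairs in the order they appear in the text."""
    found = [(m.start(), label, m.group())
             for label, pattern in PATTERNS.items()
             for m in pattern.finditer(text)]
    return [(label, value) for _, label, value in sorted(found)]

text = "Call (555) 123-4567 or email jane.doe@example.com for details."
print(regex_entities(text))
# [('PHONE', '(555) 123-4567'), ('EMAIL', 'jane.doe@example.com')]
```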

Dictionary lookups. This means leveraging predefined lists or databases of named entities to find matches in the text. Say a system has a dictionary containing names of famous authors like "Jane Austen," "Ernest Hemingway," and "George Orwell." When it sees the sentence "I recently read a novel by George Orwell," it can quickly recognize "George Orwell" as a named entity referring to an author based on its lookup list.
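The dictionary lookup can be sketched as a greedy longest-match scan over the tokens. The gazetteer and the AUTHOR label are hypothetical and mirror the example above.

```python
import re

# Hypothetical gazetteer; entries are stored as token tuples for matching.
AUTHORS = {("Jane", "Austen"), ("Ernest", "Hemingway"), ("George", "Orwell")}
MAX_LEN = max(len(name) for name in AUTHORS)

def dictionary_lookup(text):
    """Greedy longest-match scan of the tokens against the gazetteer."""
    tokens = re.findall(r"\w+|[^\w\s]", text)
    matches, i = [], 0
    while i < len(tokens):
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            candidate = tuple(tokens[i:i + n])
            if candidate in AUTHORS:
                matches.append((" ".join(candidate), "AUTHOR"))
                i += n
                break
        else:  # no gazetteer entry starts at this token
            i += 1
    return matches

print(dictionary_lookup("I recently read a novel by George Orwell."))
# [('George Orwell', 'AUTHOR')]
```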

Pattern-based rules. These rely on specific linguistic structures to infer entities. For example, a capitalized word in the middle of a sentence might hint at a proper noun. In the sentence "If you ever want to go to London, make sure to visit the British Museum to immerse yourself in centuries of art and history," the capitalization of "London" suggests it is a proper noun, here referring to a location.
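One way to turn the capitalization cue into code is sketched below. It adds an extra assumption to make the rule more specific. A capitalized word that does not start the sentence is tagged as a location only when it directly follows a preposition such as "to" or "in."

```python
import re

# Hypothetical rule: a capitalized word that is not sentence-initial and
# directly follows one of these prepositions is tagged as a location.
LOCATION_PREPOSITIONS = {"in", "to", "from", "at", "near"}

def pattern_locations(sentence):
    tokens = re.findall(r"\w+|[^\w\s]", sentence)
    locations = []
    for i in range(1, len(tokens)):  # skip index 0: sentence-initial capital
        if tokens[i][0].isupper() and tokens[i - 1].lower() in LOCATION_PREPOSITIONS:
            locations.append(tokens[i])
    return locations

sentence = ("If you ever want to go to London, make sure to visit "
            "the British Museum to immerse yourself in centuries of art and history.")
print(pattern_locations(sentence))  # ['London']
```

On this sentence, the rule finds London but misses the British Museum. This shows how brittle hand-written patterns can be.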

Why use it? The rule-based approach works best when you're dealing with specific, well-defined domains with entities following clear and consistent patterns. Such methods can be efficient and straightforward if you know exactly what you're looking for and the patterns don't change frequently.

Machine learning-based method of NER

In the realm of traditional machine learning methods for NER, models are trained on data where entities are labeled. For instance, in the sentence "Paris is the capital of France," the words "Paris" and "France" could be marked as GPEs.
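Labeled data for NER is commonly stored with one tag per token using the BIO scheme. B- marks the beginning of an entity, I- a token inside it, and O a token outside any entity. The sketch below shows the "Paris is the capital of France" example in that form. It also includes a helper, written for illustration, that turns the tags back into entity spans.

```python
def bio_to_spans(tokens, tags):
    """Convert per-token BIO tags back into (entity text, label) spans."""
    spans, words, label = [], [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != label):
            if words:
                spans.append((" ".join(words), label))
            words, label = [token], tag[2:]  # start a new entity
        elif tag.startswith("I-"):
            words.append(token)              # continue the current entity
        else:                                # "O": outside any entity
            if words:
                spans.append((" ".join(words), label))
            words, label = [], None
    if words:
        spans.append((" ".join(words), label))
    return spans

tokens = ["Paris", "is", "the", "capital", "of", "France"]
tags   = ["B-GPE", "O", "O", "O", "O", "B-GPE"]
print(bio_to_spans(tokens, tags))  # [('Paris', 'GPE'), ('France', 'GPE')]
```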

You can read more about organizing data labeling for ML purposes as well as preparing your dataset for machine learning in our dedicated articles.


This method heavily relies on feature engineering, where specific attributes and information about the data are manually extracted to improve the model's performance.

The commonly engineered features include:

- word characteristics — details like word casing (capitalized, uppercase, lowercase), prefixes, and suffixes;

- context — the surrounding words, whether preceding or succeeding, that offer clues;

- syntactic information — part-of-speech tags, which shed light on a word's function in a sentence, such as whether it's a noun, verb, adjective, etc.;

- word patterns — the shape or pattern of a word, especially for recognizing specific formats like dates or vehicle license numbers; and

- morphological details — information derived from the root form of a word or its morphological nuances.
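The features above can be computed per token as a dictionary, a format commonly fed to CRF toolkits. The function and feature names below are illustrative choices, not a fixed standard. Lemma-based morphological features are left out because they would need a lemmatizer.

```python
def word_shape(word):
    """Map characters to classes: X upper, x lower, d digit, others kept."""
    return "".join("X" if c.isupper() else "x" if c.islower()
                   else "d" if c.isdigit() else c for c in word)

def token_features(tokens, i, pos_tags=None):
    """Hand-engineered features for tokens[i]; pos_tags is optional."""
    word = tokens[i]
    features = {
        "lower": word.lower(),
        "is_title": word.istitle(),      # word casing
        "is_upper": word.isupper(),
        "prefix3": word[:3],             # prefix and suffix
        "suffix3": word[-3:],
        "shape": word_shape(word),       # word pattern
        "prev_word": tokens[i - 1].lower() if i > 0 else "<BOS>",  # context
        "next_word": tokens[i + 1].lower() if i < len(tokens) - 1 else "<EOS>",
    }
    if pos_tags is not None:
        features["pos"] = pos_tags[i]    # syntactic information
    return features

tokens = ["Paris", "is", "the", "capital", "of", "France"]
print(token_features(tokens, 0))
```

For a date such as "2023-05-01," word_shape returns "dddd-dd-dd." This kind of pattern feature helps a model spot dates.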

After the features are prepared, the model is trained on this enriched data. Common algorithms employed here include Support Vector Machines (SVM), Decision Trees, and Conditional Random Fields (CRF). Once trained, the model can then predict or annotate named entities in raw, unlabeled data.

Why use it? The machine learning-based approach is the way to go when entities come in diverse types and don't always follow a consistent pattern. If you can access annotated data, you can train a model that can generalize well across various entity patterns.

While effective, this approach requires substantial effort in the feature engineering phase. Rule-based techniques skip feature engineering altogether, though writing and maintaining rules is manual work of its own. Deep learning methods, by contrast, can automatically discern important features from the data.

Deep learning-based method of NER

Deep learning offers a more automated and sophisticated approach to NER. Deep learning is a subfield of machine learning in which artificial neural networks, layered models loosely inspired by the structure of the human brain, learn from vast datasets. The broad aim is to let computers learn from examples, somewhat as humans do, instead of following hand-written instructions.

As mentioned above, one significant advantage of deep learning over traditional machine learning is its ability to learn features automatically from the data. This reduces reliance on the extensive manual feature engineering that traditional methods require.

By the way, if you struggle to distinguish data science, AI, deep learning, and machine learning, we have a separate article to clear everything up for you.

Training deep learning models involves annotated data, just as in the traditional approach. However, these models can absorb large datasets and capture intricate patterns in them. As a result, they often outperform their counterparts, especially when plenty of training data is available.

Several neural network architectures are prominent in the NER domain.

- Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks capture sequential information, making them suitable for processing textual data with context.

- Transformer architectures, such as BERT and GPT, have reshaped the landscape of NLP tasks, including NER. Their self-attention mechanism lets every token weigh every other token in the input at once, which gives the model a richer view of context.

You can learn more about them in our articles about large language models and generative AI models.
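Scaled dot-product attention is the core operation behind a transformer's ability to attend to all parts of the input. It can be sketched in plain Python for a single query. The query is compared with every key via a dot product, scaled by the square root of the vector size. The scores are normalized with softmax and used to weight an average of the value vectors. The vectors below are invented. Real transformers learn the query, key, and value projections and run many attention heads in parallel.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * value[j] for w, value in zip(weights, values))
              for j in range(len(values[0]))]
    return weights, output

# Invented 2-d key/value vectors for the tokens "Summer", "played", "basketball".
keys = [[1.0, 0.0], [0.2, 0.9], [0.1, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
query = [0.0, 1.0]  # hypothetical query vector for "Summer"
weights, output = attention(query, keys, values)
print([round(w, 2) for w in weights])  # weights over the three tokens
```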

As for GPT and entity recognition, models like ChatGPT handle many tasks well, including NER, but they are only one part of the broad spectrum of tools available for it. Depending on the specific requirements, specialized models may still be preferable for pure entity recognition tasks.

Why use it? Deep learning shines with tasks that involve a high level of complexity and when you have a vast amount of data at your disposal. Unlike traditional machine learning, it can automatically discern and learn relevant features from the data, making it particularly effective in ambiguous or diverse contexts where entities can be multifaceted.

How to implement NER

There is a vast array of tools and libraries available for NER. Before choosing one, though, it's worth knowing two overarching strategies that can amplify their potential: transfer learning and active learning. Transfer learning adapts a pre-trained model, such as BERT or RoBERTa, to a specific NER task, usually by fine-tuning it on a smaller labeled dataset. Starting from a pre-trained architecture saves computational effort and often leads to better performance. Active learning, in turn, lets the model select the unlabeled examples it finds hardest, for example those it is least confident about. Humans annotate these examples, and the model is retrained on the result. Focusing annotation effort this way can reach good accuracy with fewer labeled examples.
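The selection step of active learning can be sketched with least-confidence sampling, one common strategy. It ranks unlabeled sentences by the model's confidence and sends the least confident ones to annotators. The confidence scores below are hypothetical placeholders for what a trained model would produce. The surrounding loop (annotate, retrain, repeat) is not shown.

```python
def select_for_annotation(confidences, k):
    """Least-confidence sampling: pick the k examples the model is least sure of."""
    return sorted(confidences, key=confidences.get)[:k]

# Hypothetical model confidence (e.g. probability of its best tag sequence)
# for each unlabeled sentence.
confidences = {
    "Summer played amazing basketball": 0.41,
    "I flew to Paris in May": 0.93,
    "Apple released new results": 0.58,
    "Mary works in the HR department": 0.88,
}
print(select_for_annotation(confidences, 2))
# ['Summer played amazing basketball', 'Apple released new results']
```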

With these strategies in mind, let's look at the tools and libraries that can help you implement NER.

Tools and libraries to build a NER model

In this section, we will review a few examples of tools and libraries suitable for NER tasks. Since they are just a drop in the ocean, it’s advisable to do your own research if you decide to build a custom NER model.

spaCy is a free, open-source Python library for NLP tasks. It offers features like NER, part-of-speech (POS) tagging, dependency parsing, and word vectors. Its EntityRecognizer is a transition-based component that identifies non-overlapping labeled spans of tokens and works well for "traditional" entity mentions with clear boundaries. However, its design may be a poor fit when entity boundaries are ambiguous, or when the most decisive information about an entity is not near its first tokens.

NLTK (Natural Language Toolkit) is a platform for building Python programs to work with human language data. Though primarily known for its capabilities in linguistic data analysis, the platform can also be used for NER.

Stanford NLP offers a range of tools for natural language processing. One of its prominent features is RegexNER, a rule-based interface specifically designed for NER using regular expressions. While the core of Stanford NLP is written in Java, it provides a Python wrapper, enabling Python developers to utilize its capabilities.

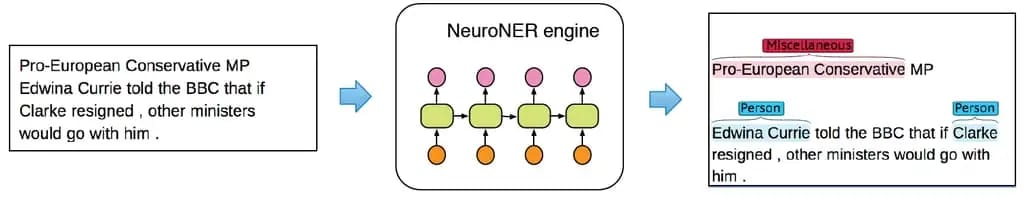

NeuroNER is a program designed specifically for neural network-based named entity recognition.

How NeuroNER works. Source: NeuroNER.com

NeuroNER lets users create or modify annotations for a new or existing corpus, so entity recognition can be tailored to a specific domain.

DeepPavlov is an open-source library for conversational AI that offers a collection of pre-trained NER models suitable for deep learning enthusiasts. It was originally built on ML libraries like TensorFlow and Keras; more recent releases are based on PyTorch.

BRAT (Brat Rapid Annotation Tool) is a web-based tool that lets users annotate text, marking entities and the relations between them.

Available APIs for NER

Another consideration for developers is using application programming interfaces (APIs). These provide a convenient way to tap into robust NER capabilities without dealing with model training or backend complexities.

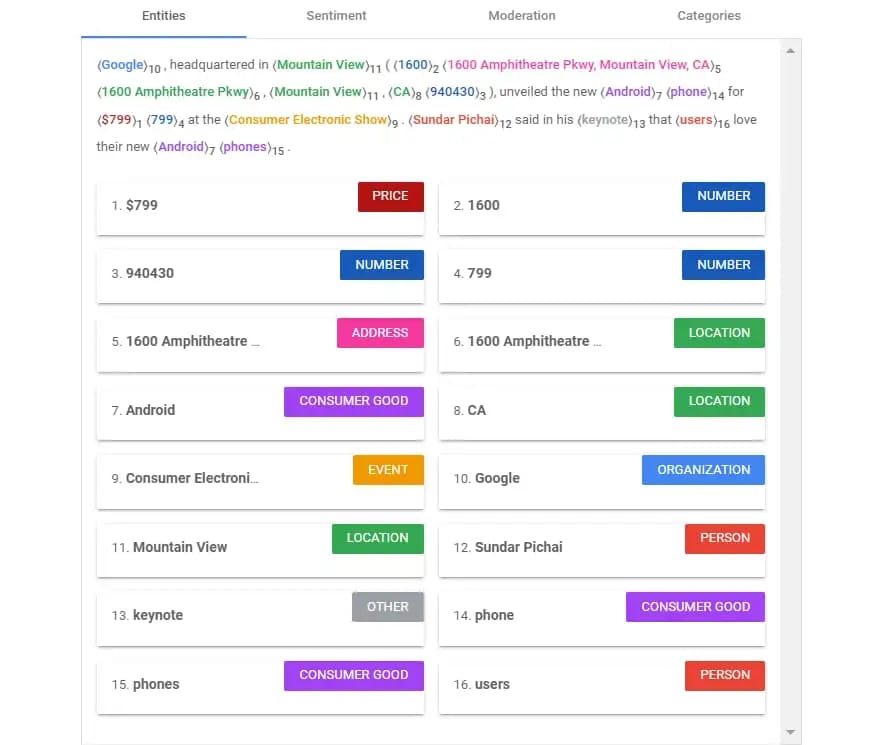

Google Cloud NLP is Google's cloud offering for natural language processing tasks, which includes a robust named entity recognition system that can identify and classify entities within text.

NLP API demo. Source: Google

Amazon Comprehend, Amazon's natural language processing service on AWS, uses machine learning to analyze text, detect entities, and more. According to official resources, it extracts insights about document content by identifying entities, key phrases, language, sentiment, and other common document elements.

IBM Watson NLU is a component of the extensive IBM AI suite with entity recognition functionalities. Exploring unstructured text data, Watson Natural Language Understanding employs deep learning to decipher underlying meanings and extract metadata. Through its text analytics, it can draw out categories, classifications, entities, keywords, sentiment, emotion, relations, and syntax, allowing users to deeply understand and analyze their data.

OpenAI's GPT-4 API gives developers access to one of the most advanced language models. While its primary strength is generating human-like text, it can also perform named entity recognition when prompted in a structured way, for example by asking it to list the entities in a passage along with their categories. It's particularly useful when the requirement is not just to identify entities but also to understand context, derive insights, or answer questions about the text. This versatility makes GPT-4 a strong candidate for various NLP tasks, including NER.
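The sketch below illustrates structured prompting. It builds a prompt that asks for entities as JSON, then parses and validates the reply. The prompt wording and label set are assumptions, and the reply is hard-coded. No call to the OpenAI API is made here; sending the prompt would go through OpenAI's client library, which is not shown.

```python
import json

ALLOWED_LABELS = {"PERSON", "ORG", "GPE", "DATE"}  # assumed label set

def build_prompt(text):
    """Ask for entities as JSON so the reply can be parsed by a program."""
    return (
        "Extract the named entities from the text below. Reply only with a JSON "
        'list of objects with "text" and "label" keys, where label is one of '
        + ", ".join(sorted(ALLOWED_LABELS)) + ".\n\nText: " + text
    )

def parse_reply(reply):
    """Parse the model's reply, keeping only well-formed, allowed entities."""
    try:
        items = json.loads(reply)
    except json.JSONDecodeError:
        return []  # the model did not return valid JSON
    if not isinstance(items, list):
        return []
    return [(item["text"], item["label"]) for item in items
            if isinstance(item, dict) and item.get("label") in ALLOWED_LABELS
            and isinstance(item.get("text"), str)]

# A hypothetical reply, hard-coded here; "THING" is not an allowed label.
reply = ('[{"text": "Mary", "label": "PERSON"}, {"text": "London", "label": "GPE"}, '
         '{"text": "hotel", "label": "THING"}]')
print(parse_reply(reply))  # [('Mary', 'PERSON'), ('London', 'GPE')]
```

Validating the reply matters because a generative model may return labels outside the requested set or text that is not valid JSON.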

As you can see, there are different ways to make NER work for you. When deciding between building a custom NER model and using an API, consider your specific needs and how sensitive your data is. Custom models offer flexibility for niche tasks and keep data in-house, which helps with privacy, but they require more resources. APIs, in contrast, are quick to adopt and cost-effective for general tasks but may not cater to specialized requirements. Opt for a custom model for tailored tasks and maximum control over your data, or choose an API for broad applications where cost and speed matter most.