Generative AI has taken the world by storm. Image generation, conversational AI, and voice generation had such a resounding success that gen AI became synonymous with artificial intelligence for many. It pushed other applications of machine learning further away from the spotlight.

But let’s give the technology its credit. Applications of machine learning have spread across different industries and brought numerous improvements. From personalized news feeds in social media and eCommerce visual search capabilities to drug discovery and cancer detection in healthcare, machine learning keeps proving to be a powerful development tool, and it’s changing the world we live in.

What has already been said about machine learning is rather fragmented. This material aims to draw a complete picture of it, hence the scale. We'll take a shot at explaining the key aspects of machine learning, such as its types, tools, algorithms, and trends, in simple words.

What is machine learning?

Machine learning is a field of knowledge aimed at creating algorithms and training machines on data so that they can make predictions and decisions on their own when exposed to new data inputs. For instance, a machine trained on the credit history data of different users can determine whether or not a certain user should be given a loan.

Arthur Samuel, who created the very first computer learning program in 1952, suggested the following definition of machine learning:

Field of study that gives computers the ability to learn without being explicitly programmed.

Rephrasing these words, machine learning is about providing a machine with the ability to utilize data for self-learning rather than just following pre-programmed instructions.

The history of machine learning

Tracing back the timeline, Arthur Samuel's checkers-playing program wasn't the only machine learning breakthrough of the 1950s. Another huge advance happened in 1957 when Frank Rosenblatt presented the Perceptron ‒ a simple classifier and an ancestor of today's neural networks.

A decade later, in 1967, the world was presented with the nearest neighbor algorithm used for mapping routes. The algorithm became the basis for pattern recognition. The 1990s witnessed many improvements in machine learning, from the shift to a data-driven approach to the increased popularity of SVMs (support vector machines) and RNNs (recurrent neural networks). From the 2000s up to now, machine learning has been developing by leaps and bounds.

Data science vs machine learning vs AI vs deep learning vs data mining

Machine learning is often seen through the prism of other data-driven disciplines such as data science, data mining, artificial intelligence, and deep learning. While they are closely related, the terms cannot be used interchangeably.

Data science is like a house where other models, studies, and methods reside. It is a wide scientific field that tries to make sense of data.

Following the house analogy, data mining would be seen as the basement. Just like the basement stores useful things for other rooms in a house, data mining tries to discover interesting patterns in data and make information more suitable for further use in AI products.

Artificial intelligence and machine learning would make a great living and dining room combo, respectively. Artificial intelligence is usually considered to be any functional data product that can solve set tasks by itself, simulating human problem-solving abilities. Machine learning is the part of an AI system that makes it capable of self-learning.

Exploring the house, deep learning would be the kitchen that shares space with the dining room aka machine learning. Deep learning is a part of machine learning that uses neural networks, one of the machine learning methods.

Now that you know the basics, prepare to get completely immersed in the world of machine learning.

Key types of machine learning based on the training approach

There are quite a few machine learning types, but the most commonly distinguished approaches are supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning

Unsupervised learning

Reinforcement learning

It’s essential to recognize that these machine learning types have particular strengths and applications. Choosing which one to employ hinges on the nature of the problem and the available data. Balancing the right approach could unlock invaluable insights and drive innovation in numerous fields.

Machine learning algorithms and models

To turn data into a working model, machine learning needs algorithms. These algorithms are computational and logical methods that can learn from data and then improve without human assistance. The choice of a certain algorithm or a combination of algorithms depends on the problem to be solved, the nature of the data used, and the computing resources available.

Types of predictions in ML models

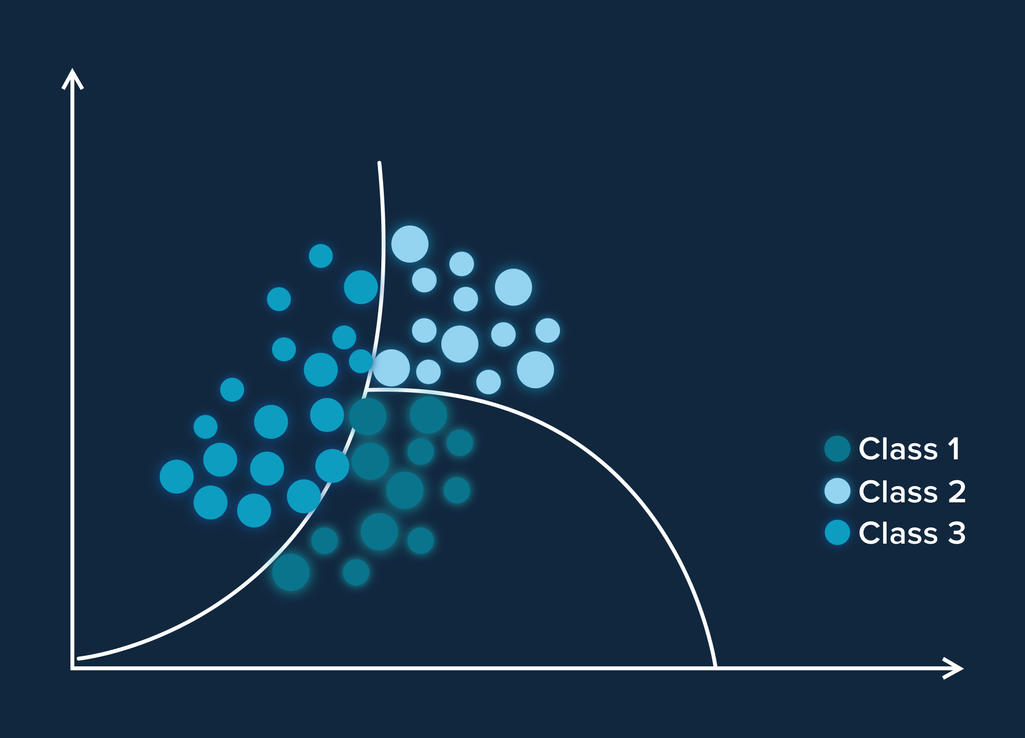

There are several types of predictions data scientists stick to when building machine learning models. These are classification, regression, clustering, outlier detection, and generation.

Classification

Regression

Clustering

Outlier detection

Generation

Most popular machine learning methods

The predictions described above can be executed with the help of appropriate machine learning algorithms. Based on the task, the number and types of methods may vary. Let's go through some commonly used machine learning algorithms.

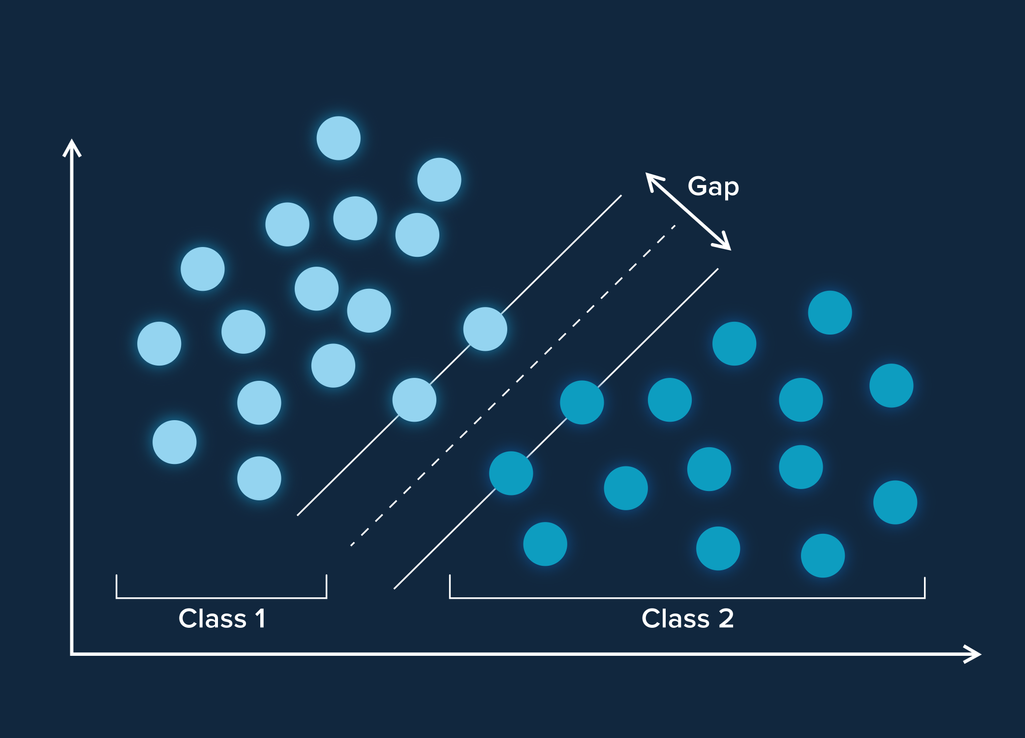

Support vector machines (SVMs) are algorithms used for classification and regression goals. An SVM splits data points into classes by placing a separating line (hyperplane) so that the gap between it and the closest data points of each class (support vectors) is as wide as possible. Once the hyperplane is in place, it serves as the classifier: new data points are assigned a class based on which side of the hyperplane they land on. Data points that don't fit anywhere can be highlighted as anomalies. As such, SVMs can be used in medicine to search for anomalies on MRI scans.
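
To make the "which side of the hyperplane" idea more tangible, here is a minimal sketch of how a linear SVM assigns classes once the hyperplane is known. The weights `w`, the offset `b`, and the class names are made up for illustration; a real SVM would learn them from training data.

```python
import math

# Hypothetical hyperplane parameters; a real SVM learns these from data
# by maximizing the margin between the classes.
w = [1.0, 1.0]
b = -5.0

def decision_value(x):
    """Signed score: positive on one side of the hyperplane, negative on the other."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def classify(x):
    """Assign a class based on which side of the hyperplane the point lands."""
    return "class A" if decision_value(x) >= 0 else "class B"

# Width of the gap between the two margin boundaries (w.x + b = +1 and -1).
margin_width = 2.0 / math.sqrt(sum(wi * wi for wi in w))

print(classify([4.0, 3.0]))  # decision value is 2.0
print(classify([1.0, 2.0]))  # decision value is -2.0
```

The training step, which searches for the `w` and `b` that make `margin_width` as large as possible, is left out of this sketch.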

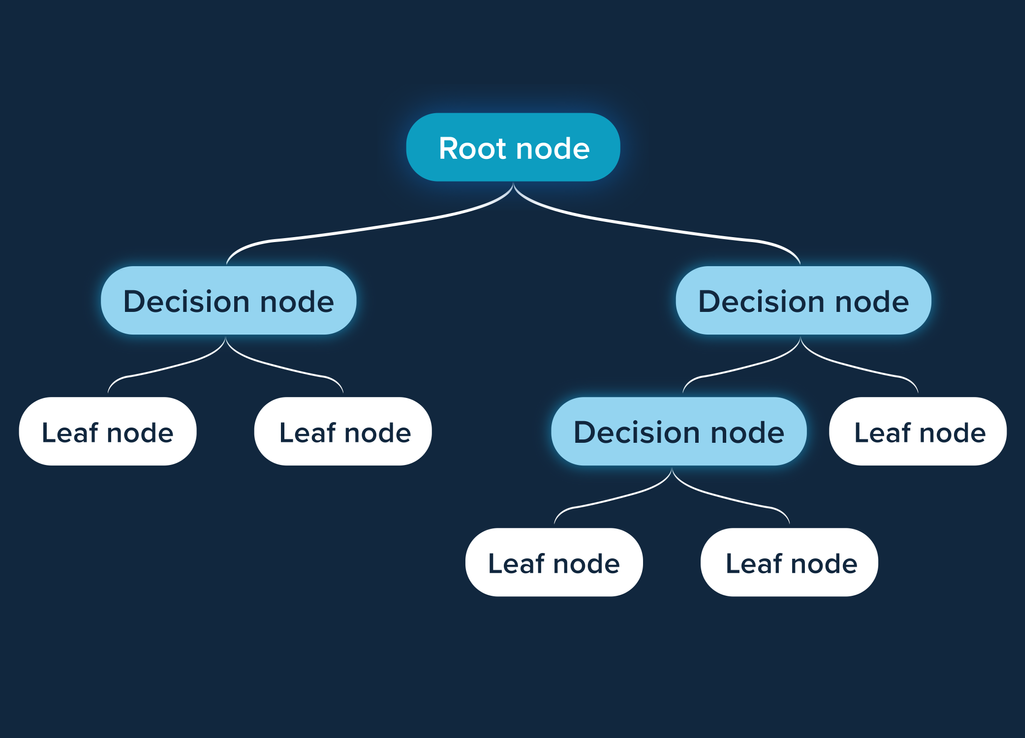

A decision tree is another supervised learning algorithm that can be used for both classification and regression purposes. Within this model, the data is split at nodes with Yes/No questions. The lower the branch in the tree, the more narrowly focused the question. Once trained, the model can be used to predict the class or value of the target variable by applying previously learned decision rules. The algorithm can be applied to make complex decisions across different industries.
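
As a rough illustration of how learned decision rules are applied, the sketch below walks a tiny hand-written tree. The features (`income`, `debt`), thresholds, and labels are hypothetical; in practice the algorithm derives the questions from training data.

```python
# A hypothetical, already-trained tree: each internal node asks a Yes/No
# question about one feature, and each leaf holds a predicted class.
tree = {
    "question": ("income", 50000),            # is income >= 50000?
    "yes": {
        "question": ("debt", 20000),          # is debt >= 20000?
        "yes": {"leaf": "reject"},
        "no": {"leaf": "approve"},
    },
    "no": {"leaf": "reject"},
}

def predict(node, sample):
    """Follow Yes/No answers from the root down to a leaf."""
    while "leaf" not in node:
        feature, threshold = node["question"]
        node = node["yes"] if sample[feature] >= threshold else node["no"]
    return node["leaf"]

print(predict(tree, {"income": 60000, "debt": 5000}))
```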

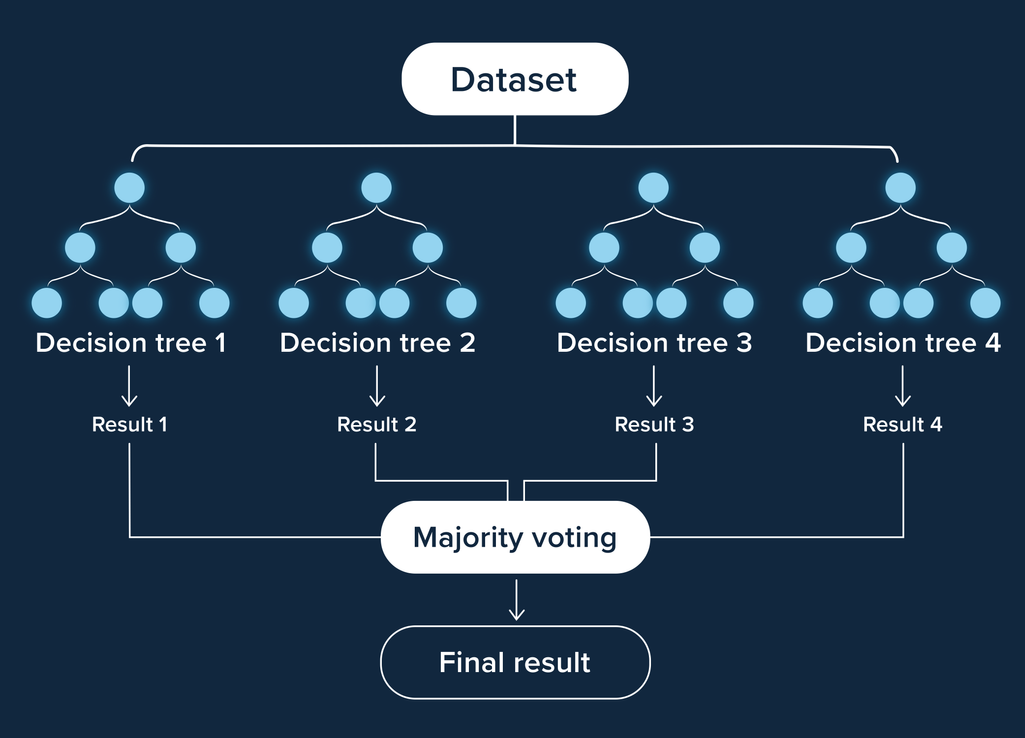

Random forest is capable of performing both regression and classification tasks. This algorithm creates a forest with several decision trees. Generally speaking, the more trees in the forest, the more robust the prediction. To classify a new object based on its attributes, each tree provides a classification and, in effect, “votes” for a class. Then the forest chooses the class with the most votes. The banking sector can use random forests to identify loyal customers and fraudulent ones.
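
The voting step can be sketched in a few lines. The three "trees" below are hypothetical one-rule stand-ins; a real random forest trains each tree on a random sample of the data and features.

```python
from collections import Counter

# Hypothetical stand-ins for trained trees: each returns a class for a sample.
def tree_amount(sample):
    return "fraud" if sample["amount"] > 1000 else "legit"

def tree_foreign(sample):
    return "fraud" if sample["foreign"] else "legit"

def tree_night(sample):
    return "fraud" if sample["night"] else "legit"

def forest_predict(trees, sample):
    """Collect one vote per tree and return the class with the most votes."""
    votes = [tree(sample) for tree in trees]
    return Counter(votes).most_common(1)[0][0]

forest = [tree_amount, tree_foreign, tree_night]
print(forest_predict(forest, {"amount": 1500, "foreign": True, "night": False}))
```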

Naïve Bayes is a classification algorithm used to calculate the likelihood of a certain data item belonging to a certain class. The method can be utilized to decide whether an email is spam or not, given the words it contains. A machine counts how often each word appears in spam and non-spam emails in the training data, combines the resulting word probabilities for each class with the help of Bayes' theorem, and assigns the class with the higher probability.
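
Below is a minimal sketch of that counting-and-combining logic on a toy dataset. The messages and labels are invented, add-one smoothing is an assumption added to avoid zero probabilities, and log-probabilities are summed instead of multiplying raw probabilities, which is a common way to keep the numbers stable.

```python
import math
from collections import Counter

# Toy training data (hypothetical): tokenized messages with labels.
training = [
    (["win", "money", "now"], "spam"),
    (["free", "money", "offer"], "spam"),
    (["meeting", "at", "noon"], "ham"),
    (["lunch", "at", "noon", "tomorrow"], "ham"),
]

# Count words per class and messages per class.
word_counts = {"spam": Counter(), "ham": Counter()}
doc_counts = Counter()
for words, label in training:
    word_counts[label].update(words)
    doc_counts[label] += 1

vocabulary = {word for words, _ in training for word in words}

def log_score(words, label):
    """log P(label) + sum of log P(word | label), with add-one smoothing."""
    total_words = sum(word_counts[label].values())
    score = math.log(doc_counts[label] / sum(doc_counts.values()))
    for word in words:
        likelihood = (word_counts[label][word] + 1) / (total_words + len(vocabulary))
        score += math.log(likelihood)
    return score

def classify(words):
    """Pick the class with the higher combined score."""
    return max(("spam", "ham"), key=lambda label: log_score(words, label))

print(classify(["free", "money"]))
```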

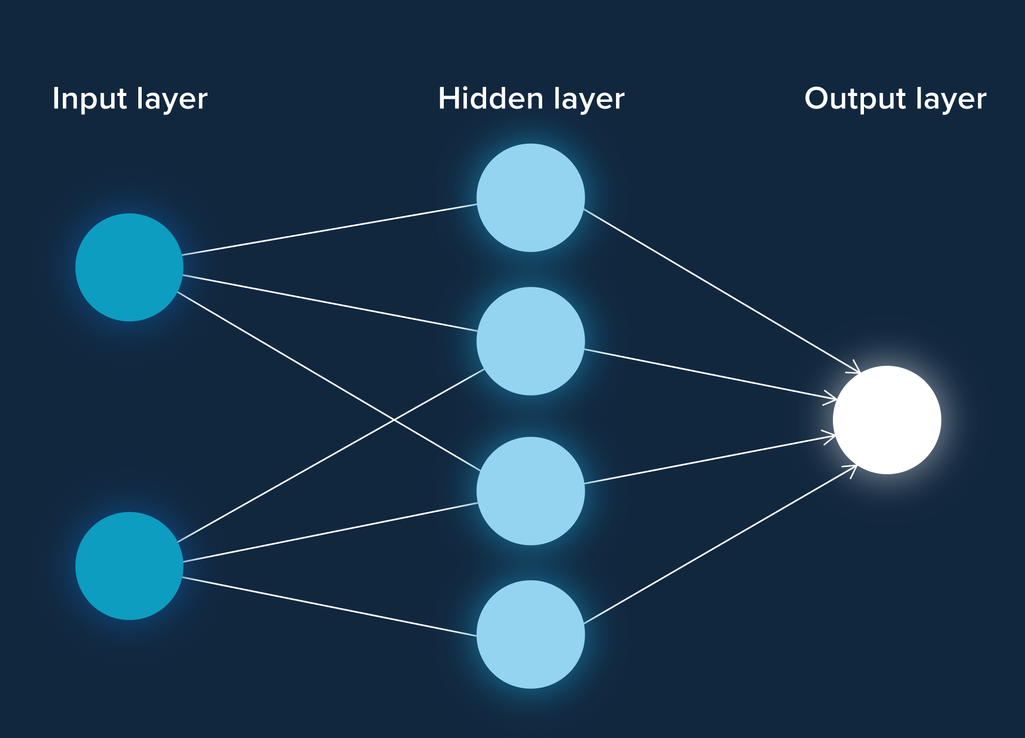

Neural nets consist of a bunch of neurons that reside in different interconnected layers (input layer, multiple hidden layers, and output layer). The neurons represent simple elements that can activate differently based on the signals (inputs) they get from neurons of the previous layer. Once received, inputs are processed and transmitted to neurons of the next layer. The process continues until the output layer, where neurons produce the final result. Image recognition is a prominent example of neural net applications, yet not the only one.
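
The layer-by-layer flow of signals can be sketched as a forward pass through a tiny network. The layer sizes, the sigmoid activation, and all weights below are assumptions picked for illustration, not trained values.

```python
import math

def sigmoid(z):
    """Squash a neuron's weighted input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Each neuron sums its weighted inputs, adds a bias, and activates."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Hypothetical, untrained parameters: 2 inputs -> 2 hidden neurons -> 1 output.
hidden_w, hidden_b = [[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]
output_w, output_b = [[1.0, -1.0]], [0.0]

hidden = layer_forward([1.0, 1.0], hidden_w, hidden_b)  # signals from the input layer
output = layer_forward(hidden, output_w, output_b)      # signals from the hidden layer
print(output)
```

Training, that is, adjusting the weights so that the output matches known answers, is not shown here.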

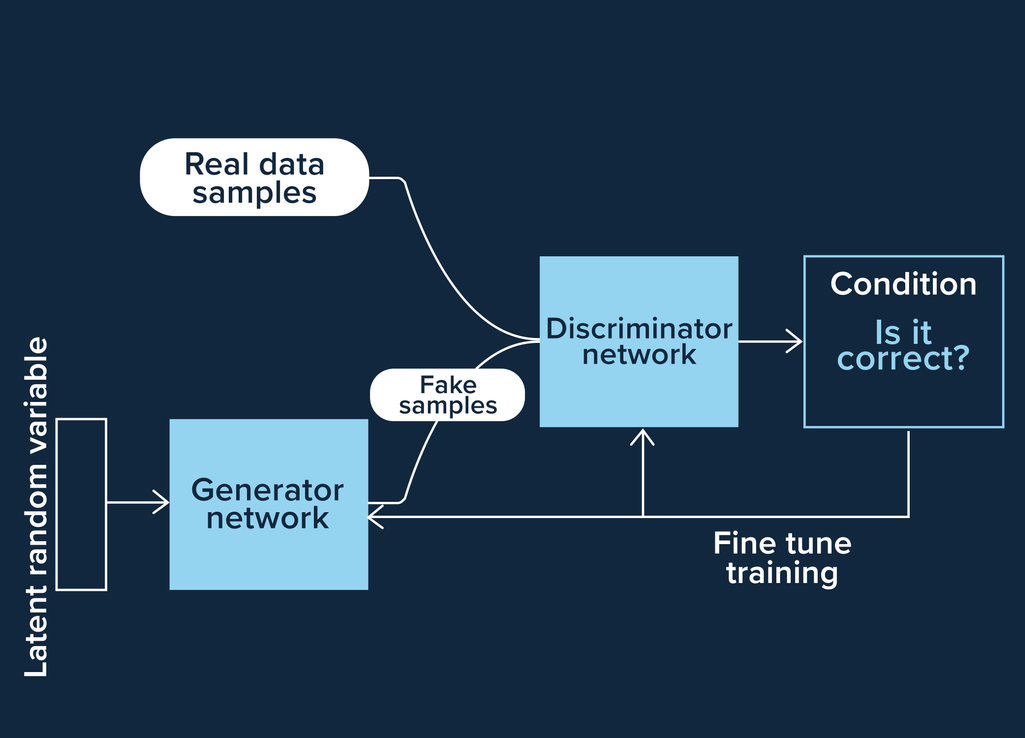

Adversarial neural nets or generative adversarial networks (GANs) are an architecture that pits two neural nets against each other to generate new artificial data that can pass for real data. There is the discriminator neural net that learns to recognize fake data and the generator neural net that learns to generate data capable of fooling the discriminator. With no consensus over GANs being good or evil, the algorithm is quite effective in video generation, image generation, and voice generation.

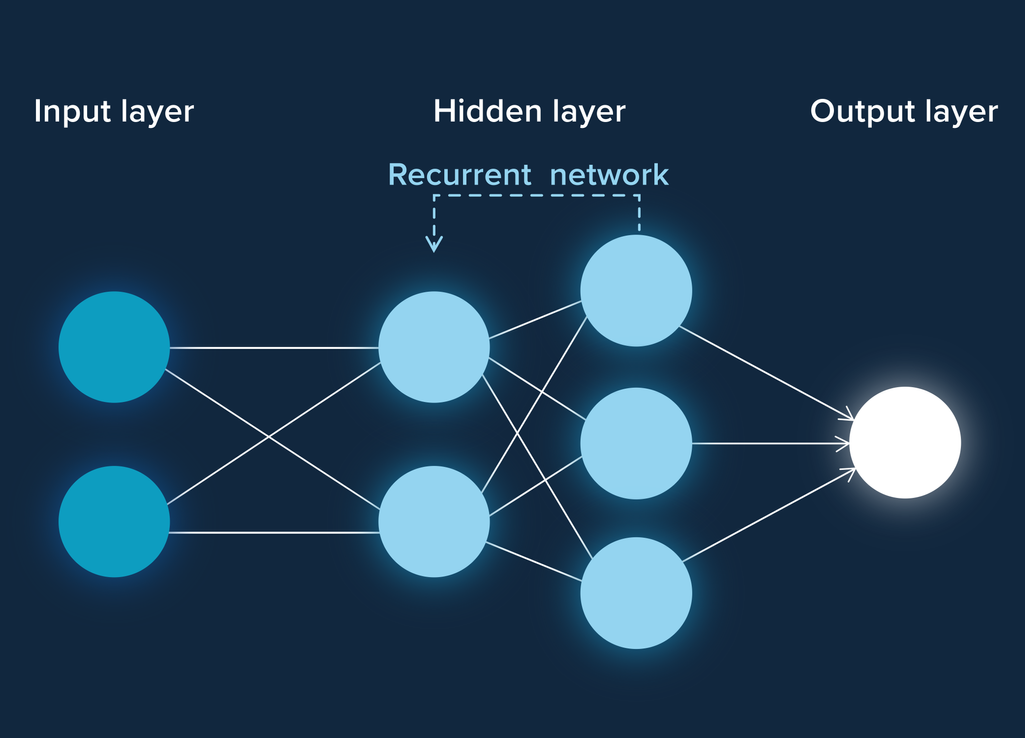

Recurrent neural networks (RNNs) are deep learning algorithms capable of remembering their inputs owing to internal memory. Unlike feedforward neural networks, an RNN considers the current input along with what it has learned from previous inputs, as it has a built-in feedback loop. As such, this type of neural network is a good fit for sequential data like speech, video, audio, text, and financial data. Personal virtual assistants like Siri or Alexa are just a few of many practical applications of RNNs.
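
The feedback loop can be illustrated with a single recurrent unit whose hidden state is fed back in at every step. This is a simplified sketch with a scalar state and made-up weights (`w_x`, `w_h`, `b`), not a trained network.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """Combine the current input with the previous hidden state (the 'memory')."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence one element at a time, carrying the state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Only the first input is non-zero, yet later states stay non-zero:
# the network still "remembers" it through the feedback loop.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```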

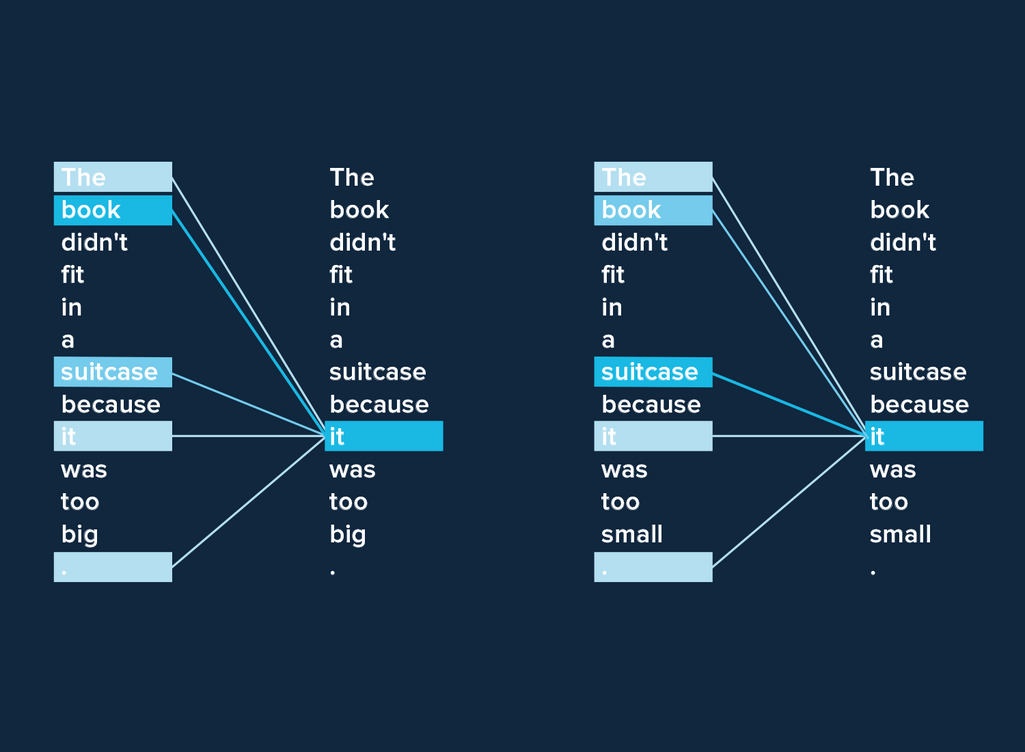

Transformers are deep learning algorithms that, similar to RNNs, are good for sequential data, especially texts. They consider the input as a whole and weigh the context of each word in relation to the other words through a so-called attention mechanism. Input passes through several transformer blocks before becoming the output, hence the name. The attention mechanism is what makes transformers so strong at text generation tasks. Initially designed by Google, the transformer architecture now powers all major large language models (LLMs), such as Google’s Gemini and the GPT models behind OpenAI’s ChatGPT.
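
A simplified sketch of the attention idea is shown below: every token scores its similarity to every other token and becomes a weighted mix of them (scaled dot-product attention). The two-dimensional token vectors are invented, and the raw vectors are used as queries, keys, and values at once, whereas a real transformer would first pass them through learned projections.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # How relevant is each token (key) to the current token (query)?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # New representation: weighted mix of all value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Hypothetical embeddings for a three-token input.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = attention(tokens, tokens, tokens)
print(contextual)
```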

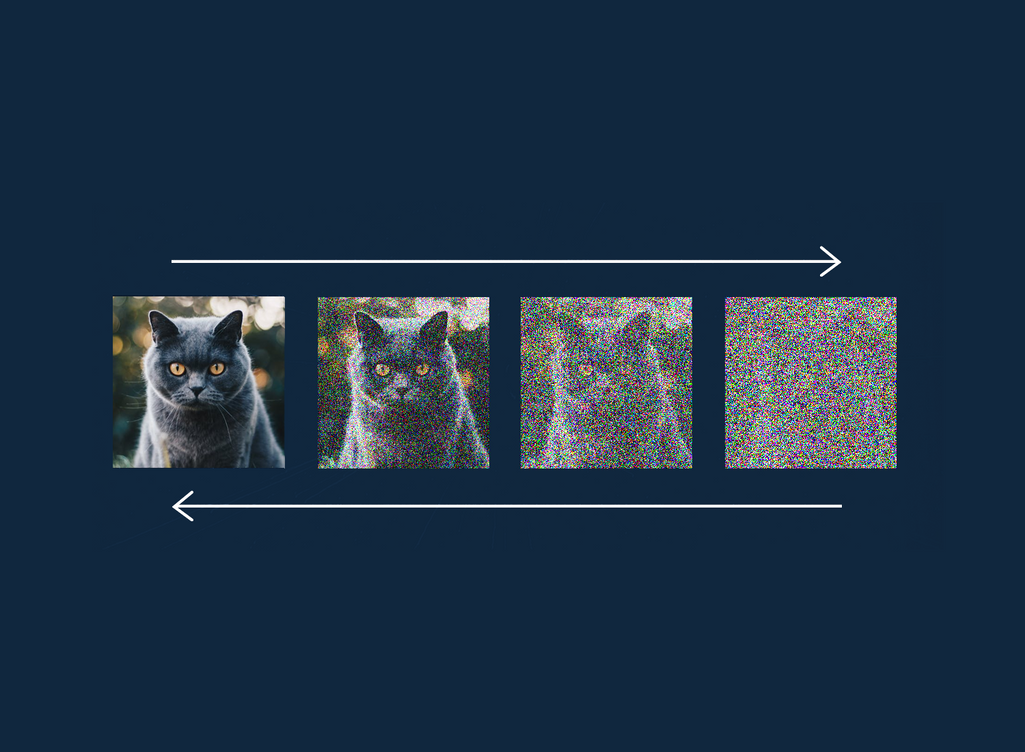

Diffusion models represent the most popular image generation method based on neural nets. The name comes from diffusion, the movement of molecules from a high concentration region to a low concentration region. Inspired by that, the logic of the model is to gradually turn an input image into noise during training and then learn to reverse the process, restoring an image from noise. Today, diffusion models power DALL-E and Stable Diffusion while also being used for other tasks, e.g., upscaling low-resolution images.
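
The "image to noise" half of the process can be sketched as follows. The constant noise level `beta`, the number of steps, and the four-pixel "image" are assumptions for illustration; the learned denoising network that reverses the process is omitted.

```python
import math
import random

def add_noise(pixels, beta, rng):
    """One forward step: shrink the signal slightly and mix in Gaussian noise."""
    return [
        math.sqrt(1.0 - beta) * p + math.sqrt(beta) * rng.gauss(0.0, 1.0)
        for p in pixels
    ]

def forward_diffusion(pixels, steps, beta=0.05, seed=0):
    """Return the image at every step, from the original to a noisy version."""
    rng = random.Random(seed)
    trajectory = [list(pixels)]
    for _ in range(steps):
        trajectory.append(add_noise(trajectory[-1], beta, rng))
    return trajectory

# A hypothetical 4-pixel "image"; each step drifts further from the original.
trajectory = forward_diffusion([1.0, 1.0, 1.0, 1.0], steps=10)
print(trajectory[0], trajectory[-1])
```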

Machine learning project and team

The number of companies that want to use machine learning to streamline business operations is growing rapidly. With this interest, there must be at least some general understanding of how things work when it comes to building an ML project.

The overall ML project structure

While the scale and complexity of machine learning projects might differ, there's a common scenario for their implementation which involves the following stages:

Strategy planning is half the success when done properly. At this stage, company specialists engaged in business analytics and solution architecture map out the path of an ML project realization, set clear goals, and decide on a workload.

Dataset preparation is a labor-intensive stage involving data collection, selection, labeling, and feature engineering. This is the stage when data analysts and data scientists enter the game.

- Data collection is about finding relevant and useful data for further interpretation and analysis. A good rule of thumb here is to collect as much data as possible because it's difficult to predict which and how much data will bring the most value.

- Data comes in all shapes, and the task of the data selection and cleansing step is to single out those data attributes that will be relevant and useful for building a particular predictive model.

- Used in supervised learning, data labeling is the process of specifying target attributes in a dataset to train an algorithm. Basically, with data labeling an ML model is shown what characteristics of data to look at and learn.

- Feature engineering or data transformation is a process of converting raw data into features that best describe the underlying problem to a model.
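
To give a feel for the feature engineering step, here is a small sketch that converts one raw record into model-ready features. The record fields (`income`, `debt`, `signup_date`, `plan`) and the chosen features are hypothetical.

```python
from datetime import date

def engineer_features(record):
    """Turn a raw record into numeric features a model can consume."""
    signup = date.fromisoformat(record["signup_date"])
    return {
        # Ratio feature derived from two raw attributes.
        "debt_to_income": record["debt"] / record["income"],
        # Date decomposed into parts that may carry signal.
        "signup_month": signup.month,
        "is_weekend_signup": int(signup.weekday() >= 5),
        # One-hot encoding of a categorical attribute.
        "plan_basic": int(record["plan"] == "basic"),
        "plan_premium": int(record["plan"] == "premium"),
    }

raw = {"income": 50000, "debt": 10000, "signup_date": "2024-03-16", "plan": "premium"}
features = engineer_features(raw)
print(features)
```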

Dataset splitting is the stage of dividing data into three parts: the dataset for training a model, the dataset for testing a model, and the dataset for its validation. The ratio of training and testing datasets is usually 80 to 20 percent respectively. The training set is then split again in the same proportion, with 20 percent making the validation set. This stage also requires experienced data scientists.
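
A three-way split can be sketched as shown below. It assumes the validation set is carved out of the data left after the test set is held back, which is one common convention; the function name, shares, and seed are illustrative.

```python
import random

def split_dataset(rows, test_share=0.2, validation_share=0.2, seed=42):
    """Shuffle the rows, hold out a test set, then hold out a validation set."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)

    n_test = int(len(shuffled) * test_share)
    test, remainder = shuffled[:n_test], shuffled[n_test:]

    n_validation = int(len(remainder) * validation_share)
    validation, train = remainder[:n_validation], remainder[n_validation:]
    return train, validation, test

train, validation, test = split_dataset(range(100))
print(len(train), len(validation), len(test))
```

With 100 rows, this yields 64 rows for training, 16 for validation, and 20 for testing.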

The modeling stage comes next, and it covers the processes of model training, assessment, testing, and further fine-tuning. As a rule, data scientists take care of modeling. They create several models and go with the one(s) providing the most accurate results.
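
Choosing among candidate models can be sketched as scoring each one on held-out data and keeping the most accurate. The candidates below are hypothetical one-rule models and the validation set is invented; real projects would compare trained models, often on several metrics.

```python
def accuracy(model, samples):
    """Share of samples for which the model's prediction matches the label."""
    correct = sum(1 for features, label in samples if model(features) == label)
    return correct / len(samples)

def select_best(models, validation_set):
    """Score every candidate and return the name of the most accurate one."""
    scores = {name: accuracy(model, validation_set) for name, model in models.items()}
    best = max(scores, key=scores.get)
    return best, scores

# Hypothetical candidates: simple threshold rules on a single number.
candidates = {
    "threshold_2.5": lambda x: int(x > 2.5),
    "threshold_4.5": lambda x: int(x > 4.5),
    "always_positive": lambda x: 1,
}
validation_set = [(1, 0), (2, 0), (3, 1), (4, 1), (5, 1)]

best, scores = select_best(candidates, validation_set)
print(best, scores)
```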

The model deployment stage begins after the most reliable ML model is picked. It covers the tasks related to putting a model into production, which are carried out by a machine learning engineer, a data engineer, or, if you operate smaller amounts of data, a data administrator.

Infrastructure in production

Once trained and put into production, ML models require a specific type of technical infrastructure. The machine learning pipeline is used for the management and automation of machine learning processes in production.

In the production environment, the ML system is triggered by data inputs coming from the application client. The model is also provided with additional features needed to make accurate predictions. These features come to the model from a dedicated database called a feature store. Before data from the feature store and application client gets to the model, it goes through preprocessing and feature extraction.
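
The request flow described above might look roughly like this. Everything here is a hypothetical stand-in: the in-memory `FEATURE_STORE`, the scaling constants in `preprocess`, and the weighted-sum `model` that takes the place of a trained one.

```python
# Hypothetical in-memory stand-in for a feature store keyed by user ID.
FEATURE_STORE = {
    "user_42": {"avg_order_value": 80.0, "orders_last_30d": 3},
}

def preprocess(features):
    """Scale raw values into comparable ranges (constants are assumptions)."""
    return [
        features["cart_value"] / 100.0,
        features["avg_order_value"] / 100.0,
        features["orders_last_30d"] / 10.0,
    ]

def model(vector):
    """Placeholder for a trained model: a fixed weighted sum."""
    weights = [0.5, 0.3, 0.2]
    return sum(w * x for w, x in zip(weights, vector))

def handle_request(request):
    """Merge client data with stored features, preprocess, then predict."""
    features = {**request["payload"], **FEATURE_STORE[request["user_id"]]}
    return model(preprocess(features))

score = handle_request({"user_id": "user_42", "payload": {"cart_value": 120.0}})
print(score)
```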

Another important part of the ML infrastructure in production is the ground-truth data storage ‒ the container for ground-truth data that is later compared to prediction data to evaluate accuracy with the help of monitoring tools. The orchestration instruments are used to operate the model and all the tasks related to its performance in production.

Once the monitoring metrics show a drop in prediction accuracy, the model enters the retraining phase, where a model builder retrains it with new data.
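
A monitoring check of this kind can be sketched as a comparison of logged predictions against ground truth. The function name and the 0.9 accuracy threshold are assumptions; real monitoring tools track more metrics over time.

```python
def needs_retraining(predictions, ground_truth, min_accuracy=0.9):
    """Compare predictions with ground truth and flag a drop in accuracy."""
    matches = sum(p == t for p, t in zip(predictions, ground_truth))
    accuracy = matches / len(ground_truth)
    return accuracy < min_accuracy, accuracy

# Three of four predictions match the ground truth, so accuracy is 0.75.
retrain, accuracy = needs_retraining([1, 0, 1, 1], [1, 0, 0, 1])
print(retrain, accuracy)
```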

The data science team and main roles

If you want to become a data-driven company and complete machine learning tasks with flying colors, you will need to build a qualified data science team. While there are quite a few roles within the data science ecosystem, we'll walk you through the most crucial ones.

Data scientists

ML engineers

Data analysts

Finding the right talent is only half the success, as any machine learning process relies on hardware and software as well.

Machine learning hardware

When it comes to training a model, the choice of the right hardware is vital too as some ML processes require a lot of computational power in place. Here's an overview of hardware based on the model training scenarios.

Scenario 1: You are training a simple machine learning model

If this is the case, you don't need to purchase expensive hardware. Since you deal with simple machine learning tasks, basically any laptop or desktop computer equipped with a CPU (Central Processing Unit) with a few cores (i5-i7) is a good choice. The CPUs can handle a set of complex instructions one by one.

Scenario 2: You are training a neural net or ensembling different models

These tasks are more intensive and hence require more powerful hardware. A GPU (Graphics Processing Unit) is considered a better choice in this case due to its capability of processing instructions in parallel. Consider a high-end gaming laptop or PC with at least 32GB of RAM and a good GPU (Nvidia or AMD).

Scenario 3: You are dealing with large scale machine learning tasks

If you are involved in some serious machine learning and have a high budget, opt for more powerful solutions such as a GPU cluster or TPUs, as they allow for faster model training. A TPU (Tensor Processing Unit) is a machine learning ASIC (application-specific integrated circuit) originally designed by Google. Nvidia also picked up the idea and presented EGX converged accelerators as a part of its AI platform.

Machine learning tools and datasets

When building and deploying ML models, data science teams work with machine learning software tools. Knowing what tools, frameworks, and libraries exist, as well as what challenges need to be solved, can help speed up project completion.

Popular machine learning software libraries

TensorFlow is one of the most widely-used toolkits for building flexible and scalable machine learning systems. Presented by Google in 2015, the open-source software supports ML projects that are built with NLP, computer vision, reinforcement learning, and deep learning of neural networks.

PyTorch is an open-source machine learning library for building neural networks. It was developed by the Meta AI research lab in 2016 and is widely adopted in the research and academic domains due to its Pythonic nature and dynamic computational graphs.

Python-based, scikit-learn is an efficient open-source machine learning library used for classification, regression, dimensionality reduction, clustering, and other purposes. Well-documented, scikit-learn is a good fit for beginners, providing quick ML model development.

Developed for data analytics and modeling, a free Python-based library under the name of Pandas is one more popular ML software choice.

NumPy is a basic package for numerical computing in Python. The software supports multidimensional arrays and matrices.

Machine learning platforms

Machine learning capabilities are also available as a service (MLaaS). There are lots of cloud-based platforms and solutions that facilitate tasks related to the creation, training, and deployment of machine learning models. If you are planning to move from words to actions and initiate your own ML project, it is worth paying attention to the major players in the MLaaS industry.

Amazon Machine Learning Services

Microsoft Azure Machine Learning Services

Google Cloud Machine Learning Services

All ML platforms offer APIs with already working models for image recognition, speech-to-text, video analysis, and much more. Although the services come at extra costs, they save users time and effort.

Open datasets for machine learning

Machine learning projects require suitable datasets. The idea here is simple: You either create datasets by collecting large amounts of data yourself or look for ready-made machine learning datasets, many of which are publicly available.

Datasets for machine learning can be found on different resources:

Huge dataset aggregators like DataPortals and OpenDataSoft contain lists of links to other data portals or form a collection of datasets from various open providers in one place. Usually, such catalogs present data portals in alphabetical order with tags on region or topic.

Individual online catalogs can provide users with topic-related datasets. For instance, there are separate data resources for scientific research purposes (Re3data, Harvard Dataverse), sets of open government data (Data.gov, Eurostat), datasets covering healthcare aspects (Medicare, World Health Organization), and other narrowly-focused catalogs.

Real-life applications of machine learning

Machine learning advances in leaps and bounds. Today we can witness technology developments that would have seemed unreal 20 years ago. From smart recommender systems to autonomous vehicles, it is machine learning that breathes life into these cool innovations. In this section, we'll walk you through some of the most popular real-life applications of machine learning.

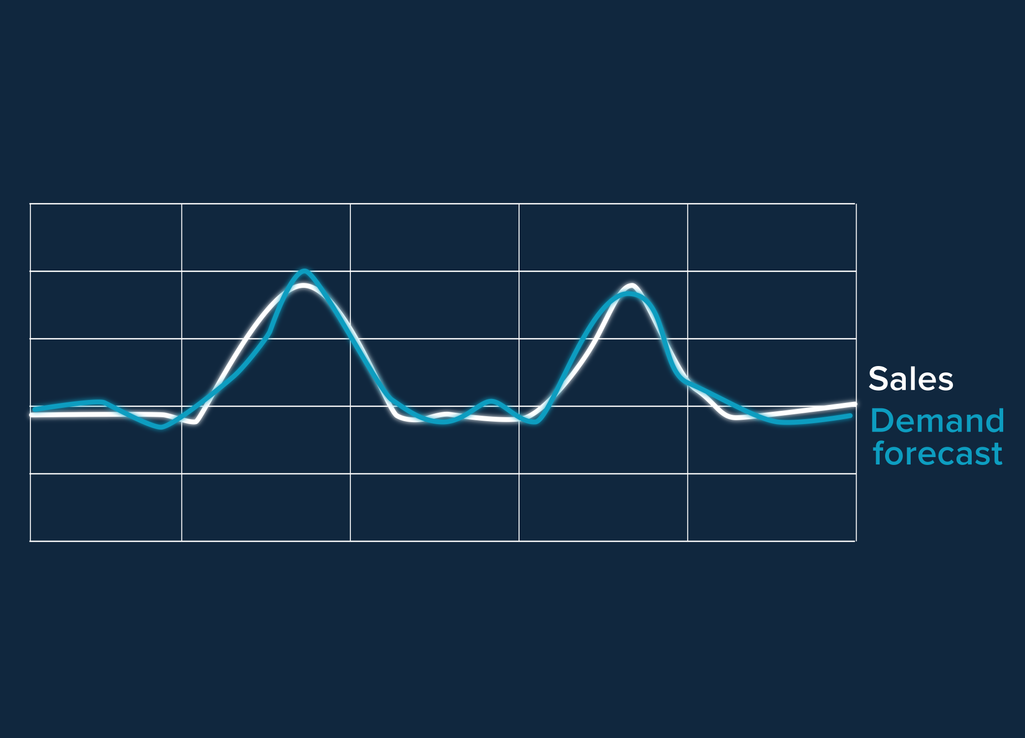

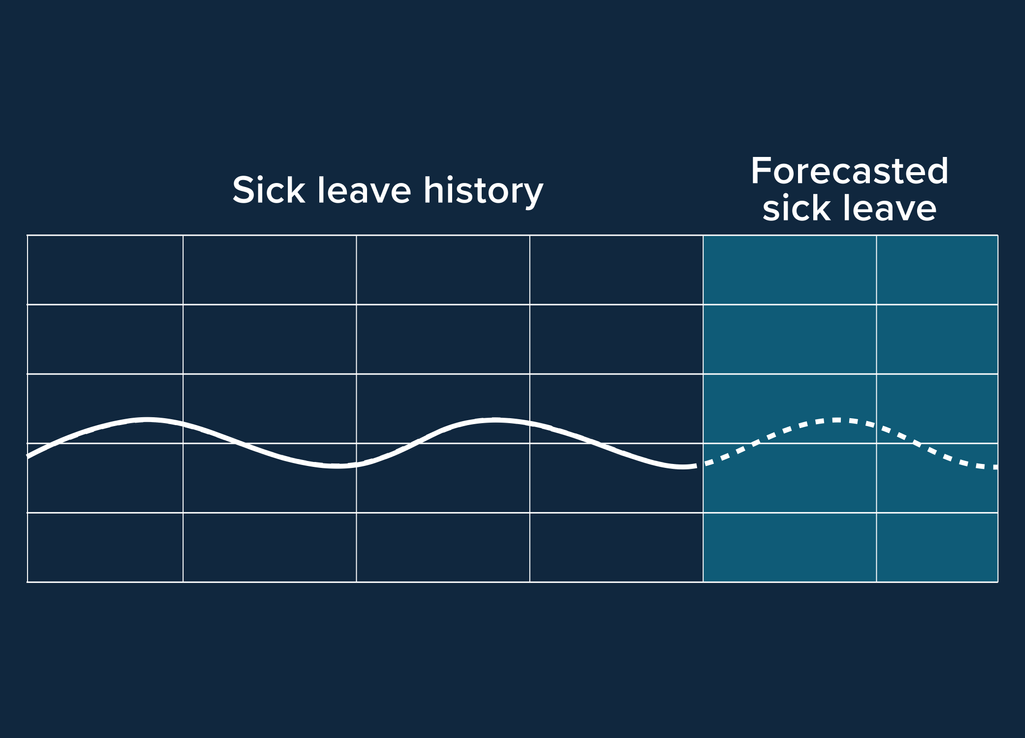

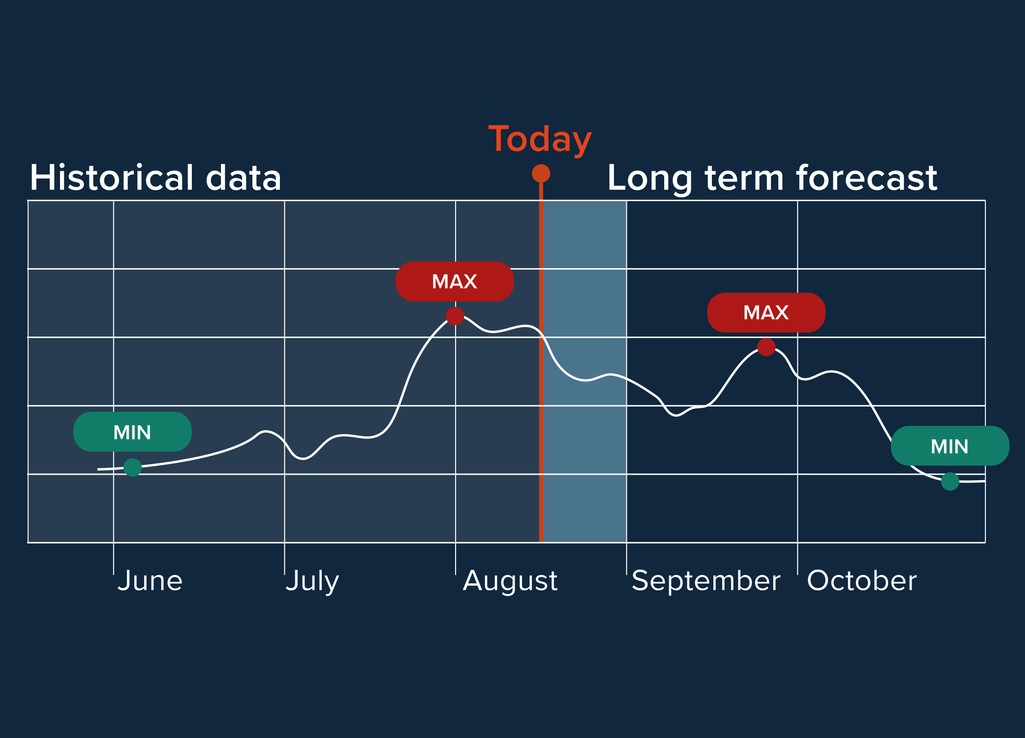

Time series forecasting in machine learning involves building models that predict future trends by analyzing past events over a sequence of time. Online shops may use time series forecasting to estimate the number of sales during the upcoming winter holidays based on historical sales data.

Demand forecasting is an approach used to estimate the probability of demand for a service or product in the future. Danone, for instance, managed to reduce its promotion forecast errors by 20 percent owing to the use of a demand forecasting ML model.

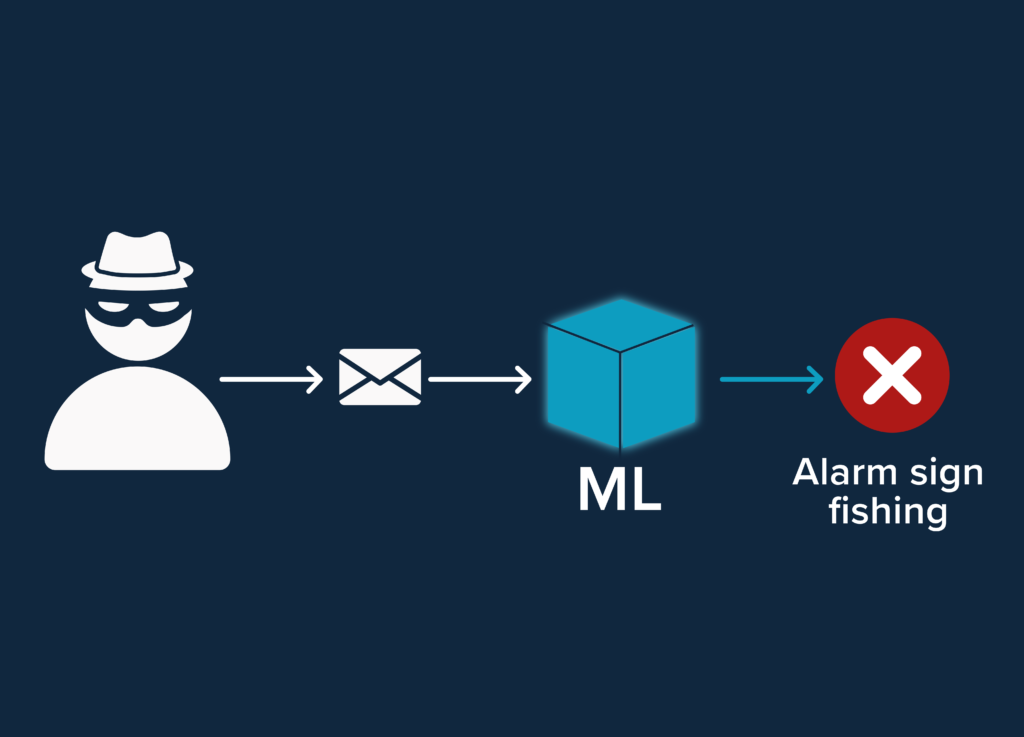

In cybersecurity, machine learning models can automatically detect, evaluate, and even respond to security incidents. For instance, ML algorithms can scrutinize email content and sender information to accurately identify and filter out phishing attempts, enhancing the security of communications.

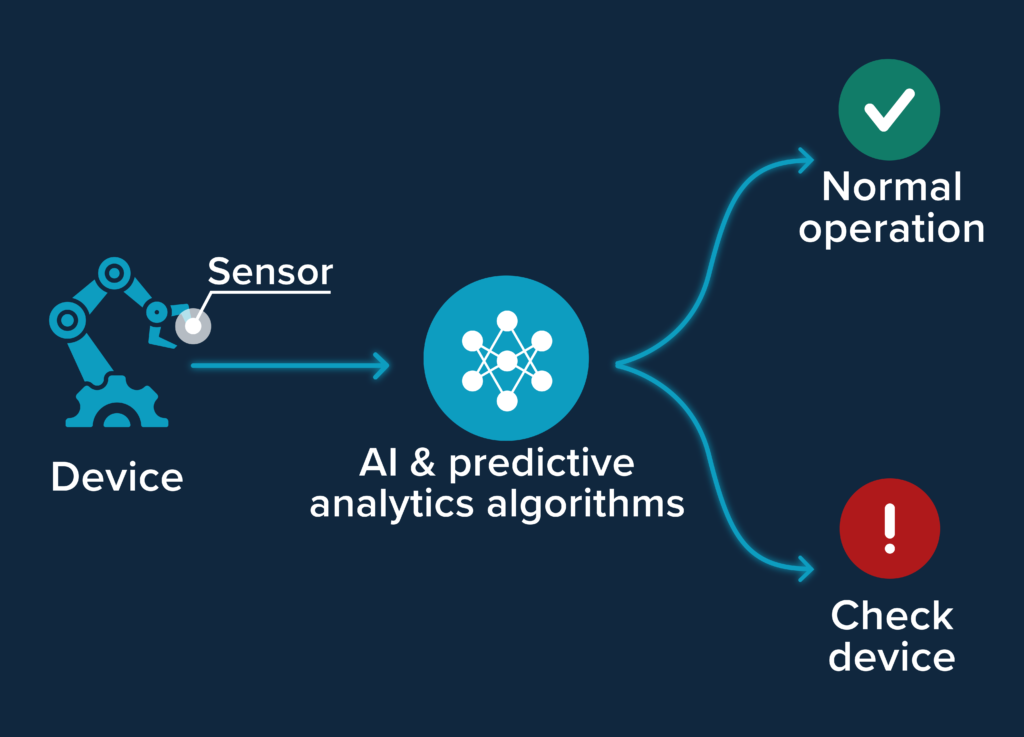

Predictive maintenance helps companies reduce downtime and lower costs for machinery maintenance operations. The application of predictive maintenance technology is shown by Infrabel ‒ Belgian railways ‒ which managed to automate condition monitoring of railway lines, tracks, and ties, and to increase staff safety as well.

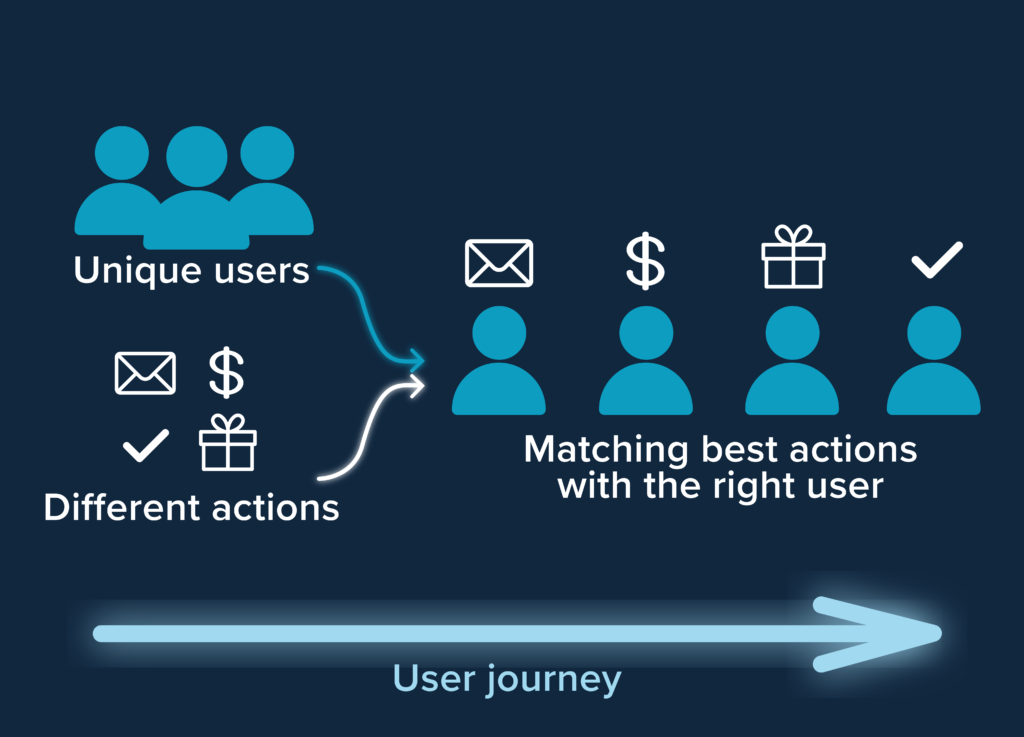

Next best action is a marketing approach that helps companies decide which is the best action to take regarding a specific customer or group of customers. By tracking which offerings and menu items customers are most interested in, Starbucks makes recommendations of the most popular flavours allowing their guests to customize drinks.

People analytics uses ML tools and collects metrics to analyze data related to employees and other HR challenges. IBM, for example, managed to reduce turnover for critical roles by 25 percent thanks to their on-time people analytics strategy implementation which was made possible with IBM’s Watson machine learning capabilities.

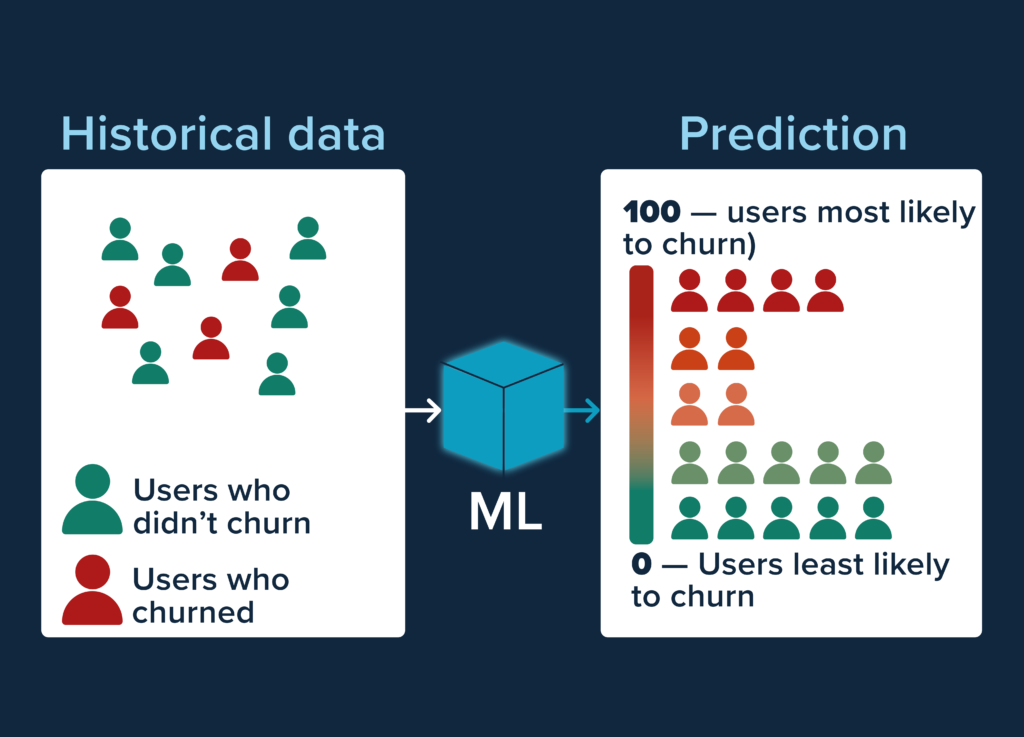

ML-driven systems are capable of predicting customer churn rates to help businesses prioritize their retention activities. Companies like HubSpot and Spotify leverage churn prediction models to study the behavior of their users and forecast which of them are likely to leave so that they can prevent it.

Companies of different scale and scope effectively use machine learning models to forecast prices on products or services for their business purposes. For example, some travel agencies can advise their customers, who care about the price, on the most advantageous time to grab the best flight offers.

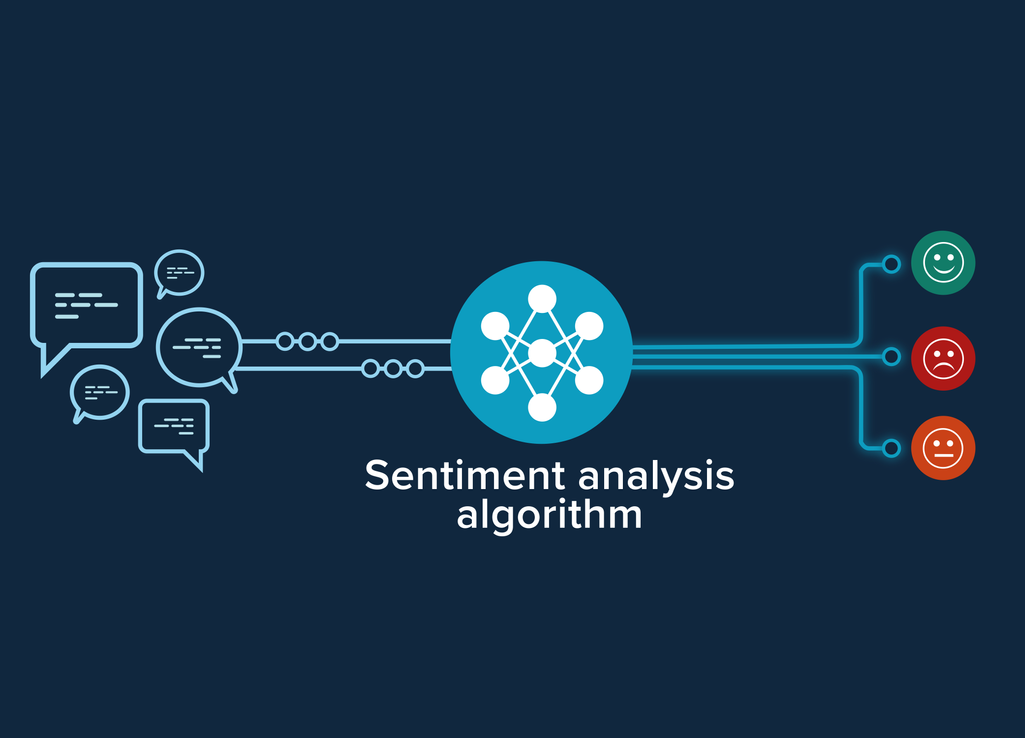

Sentiment analysis aims at extracting user opinions about a product or service by analyzing large volumes of data. KFC measures and analyzes public opinion about its marketing campaigns inspired by pop culture and memes and then creates more targeted advertisements to build a strong brand image.

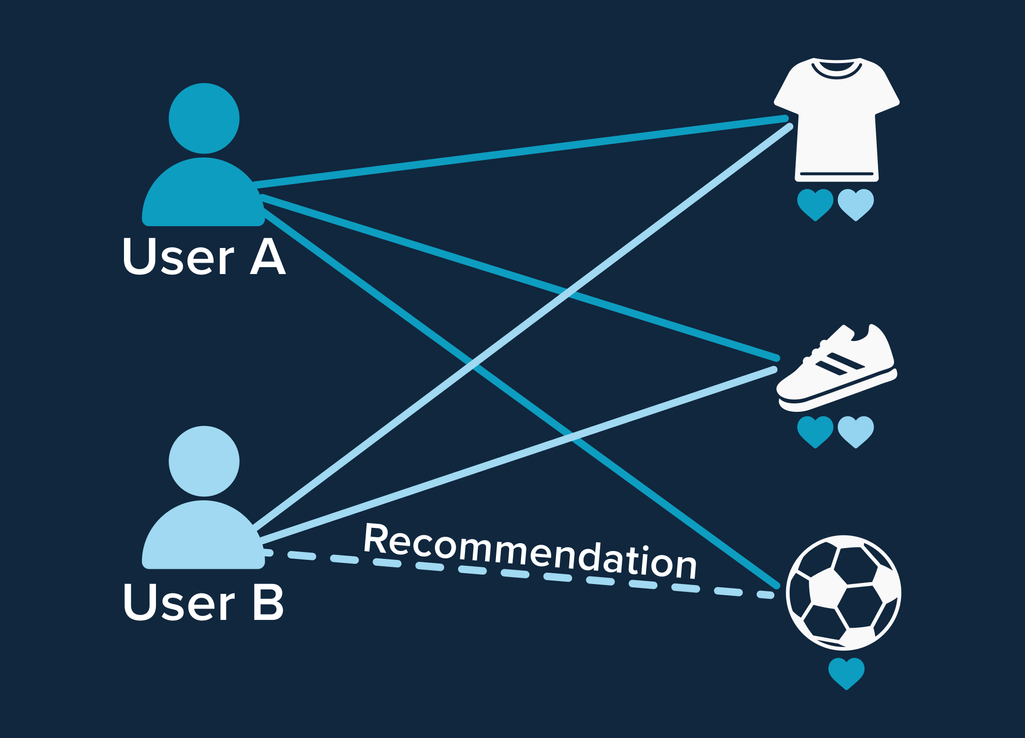

Personalization and recommendation

Driven by machine learning, recommender systems study the preferences of customers and help them make the right choices about services and products. Think of Netflix with their "Because you watched". The service takes advantage of machine learning to give offerings tailored to the needs of their customers.

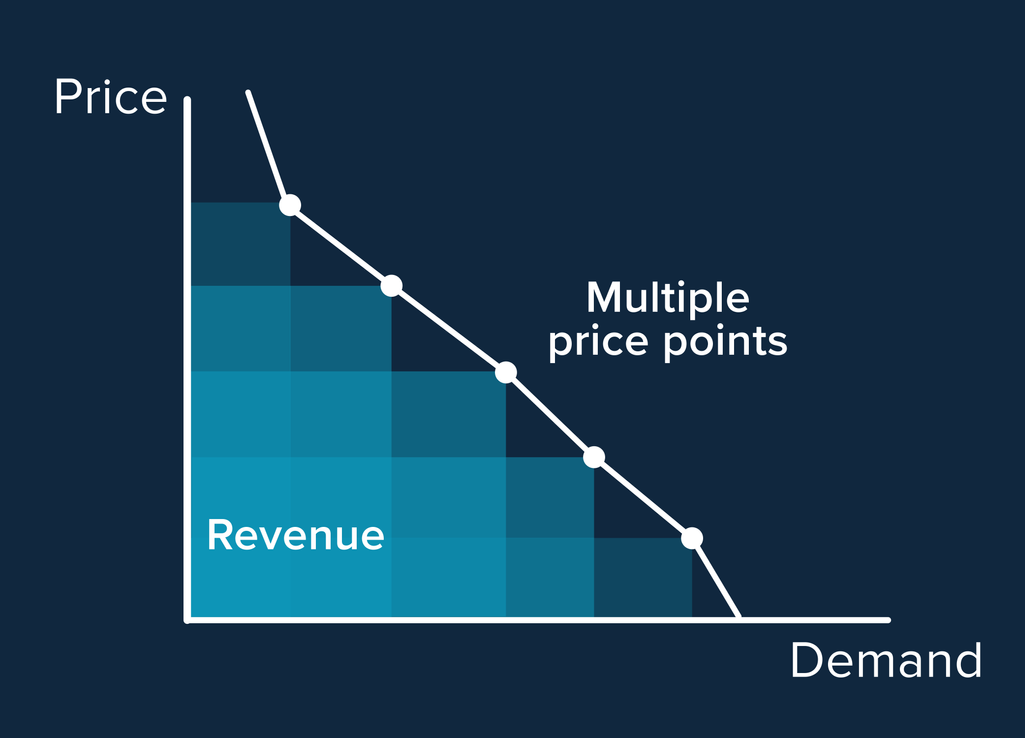

With ML, companies can leverage dynamic pricing: They can set a price on a service or product based on current supply and demand. Uber uses the dynamic pricing practice to predict the place and amount of requests they will receive at a given point in time.

Such image generation models as Midjourney, DALL-E, and Stable Diffusion are used for concept art, product design, video production, advertising, and creating other visual assets. Besides that, generative models help with upscaling low-resolution images and textures for game development. They’ve also become a new form of artistic expression.

The revolution in conversational AI after the release of ChatGPT has led to dozens of successful use cases, from asking the questions people used to google to idea brainstorming and helping writers. Businesses integrate conversational AI to answer customer requests and even as an alternative to traditional interfaces.

As you can see, businesses have plenty of ways to utilize machine learning to optimize workflows, increase revenues, and engage customers. Now let's take a closer look at how things work with machine learning in different industries.

Examples of machine learning across industries

Machine learning fosters pathways to growth whatever the industry. The thing is, you use ML-driven products daily without giving it much thought. Every time you look for something using Google Search or open up your email junk box to clear it or get personalized recommendations from Amazon, you stumble on machine learning. Find out what industries take advantage of machine learning and how they do that.

Healthcare

Finance

Insurance

Retail

eCommerce

Logistics

Hospitality

Airlines

Travel agencies

The future and challenges of machine learning

The AI and machine learning fields are growing bigger and more influential with each passing day. Two decades ago, things like virtual assistants, self-driving cars, and front-desk robots seemed somewhat unrealistic. Face it, if you had been told that your mobile phone would be able to recognize your voice and transform it into text, you wouldn’t have believed it. Right?

In fact, lots of scenarios that used to be only the subject of sci-fi have become a part of our everyday life ‒ applications driven by machine learning are already making the world a better place.

With all the machine learning advances, there's still room for development. And when we say "development", we don't mean a robot uprising against humans. Instead, we’ll talk about the real future of machine learning. Here are a few growing ML trends:

- With deep learning being actively hyped, deep reinforcement learning is predicted to get more attention in the upcoming years. This machine learning trend is a mix of deep neural networks and reinforcement learning approaches with the potential to solve complex problems across industries.

- Demand and time series forecasting will continue to be of great importance for businesses as more and more companies rely on various machine learning algorithms to make informed decisions.

- AI, machine learning, and deep learning technology will bring hyper-automation closer with systems being able to automatically learn from generated data and improve over time.

- Cybersecurity will keep adopting AI and machine learning capabilities to recognize suspicious activity patterns and identify possible threats at an earlier stage.

- Generative adversarial networks (GANs) will remain a hot trend. It is expected that GANs can soon be used to generate realistic images of suspect faces from police sketches.

Along with the growth opportunities, machine learning still faces some challenges such as high costs of developing and deploying ML models, difficulties with finding the right talent and reliable data, and issues concerning interpretability in machine learning.