
This idea is also important when working with generative AI models, whether they produce text, code, or images. If you're an engineer or a decision-maker at a company planning to add generative AI features to its applications, the prompts you use are crucial. A poorly crafted prompt can lead to unhelpful or wrong outputs. This makes understanding how to create good prompts, also known as prompt engineering, critical for your projects.

In this article, we'll explain the basics of prompt engineering, the different types and techniques, and why it's key for successfully integrating generative AI into your apps.

What is prompt engineering and why does it matter?

Prompt engineering is the practice of carefully crafting and optimizing questions or instructions to elicit specific, useful responses from generative AI models. It translates human intentions and business needs into instructions the model can act on, so that the system's output aligns closely with the desired outcomes.

Prompt engineering is used for different types of generative AI models:

- text-based models (e.g., ChatGPT),

- image generators (e.g., Midjourney), and

- code generators (e.g., Copilot).

How Generative AI Works

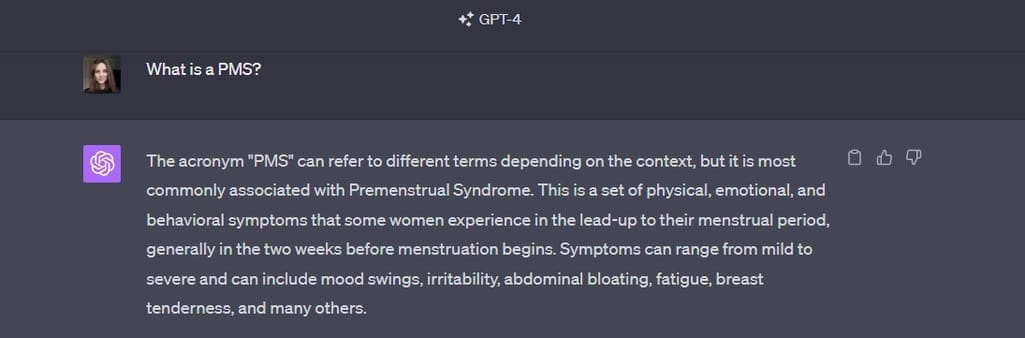

Let's consider a real-world example that could impact your business. Say you're a CTO or software engineer in the hospitality industry, and you're integrating a generative AI chatbot to answer staff queries about your hotel's Property Management System (PMS). If the prompts for the chatbot are poorly engineered, a question like “What is PMS?” may result in the AI giving a definition of premenstrual syndrome — not at all relevant or useful in your business context.

With a well-engineered prompt that provides the necessary industry context — such as "What is PMS in the travel industry?" — you can receive a targeted response about "hotel property management systems," which is the exact information you'd need.

In addition to delivering more accurate and relevant responses, good prompt engineering offers several other advantages.

Efficiency and speed. Effective prompting can expedite problem-solving, dramatically reducing the time and effort required to produce a useful result. This is particularly important for companies seeking to integrate generative AI into applications where time is of the essence.

Scalability. A single well-crafted prompt can be adapted across various scenarios, making the AI model more versatile and scalable. This is crucial for businesses that want to expand their AI capabilities without reinventing the wheel for each new application.

Customization. Prompt engineering enables you to tailor the AI's responses to specific business needs or user preferences, thereby providing a uniquely customized experience.

To get all the benefits mentioned above, organizations increasingly find it valuable to hire prompt engineers, who refine prompts so that queries yield specific, useful responses aligned with precise business objectives.

What is a prompt engineer? Role and responsibilities

A prompt engineer is a specialist positioned at the intersection of business needs and AI technology, in particular large language models (LLMs) such as GPT-4. They serve a dual role: they are linguists who understand the nuances and complexities of human language, and data scientists capable of analyzing and interpreting machine behaviors and responses.

Their primary responsibility is designing, testing, and optimizing prompts, translating business objectives into effective interactions with generative AI models. Utilizing a toolkit of techniques (which we will explain further), they craft prompts that consistently coax useful and specific responses from the AI. Prompt engineers also assess prompt effectiveness, using telemetry data to regularly update a specialized library of prompts tailored for diverse workflows.

This prompt library is like a shared toolbox. It has a bunch of tried-and-true prompts for all kinds of situations, making it easier for everyone in the company to find what they need. Prompt engineers sort these prompts by things like tone, domain, output produced, and other factors to make it easy to find and reuse existing prompts.
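To make the idea more concrete, here is a minimal Python sketch of such a library, assuming each prompt is stored as a template tagged with the tone, domain, and output type mentioned above. The class and field names (`PromptEntry`, `PromptLibrary`) and the sample prompts are hypothetical; a real library would more likely live in a database or a shared repository.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PromptEntry:
    """One reusable prompt plus the metadata used to find it again."""
    name: str
    template: str  # prompt text with {placeholders} filled in at use time
    tone: str
    domain: str
    output: str


class PromptLibrary:
    """A minimal in-memory prompt library, searchable by metadata."""

    def __init__(self) -> None:
        self._entries: List[PromptEntry] = []

    def add(self, entry: PromptEntry) -> None:
        self._entries.append(entry)

    def find(self, tone: Optional[str] = None, domain: Optional[str] = None,
             output: Optional[str] = None) -> List[PromptEntry]:
        """Return entries that match every filter the caller supplied."""
        return [
            e for e in self._entries
            if (tone is None or e.tone == tone)
            and (domain is None or e.domain == domain)
            and (output is None or e.output == output)
        ]


library = PromptLibrary()
library.add(PromptEntry(
    name="pms-faq",
    template=("You are a helpful hotel assistant. Answer this question about our "
              "property management system (PMS): {question}"),
    tone="friendly", domain="hospitality", output="answer",
))
library.add(PromptEntry(
    name="review-summary",
    template="Summarize the following guest review in two sentences: {review}",
    tone="neutral", domain="hospitality", output="summary",
))

# Reuse an existing prompt instead of writing a new one from scratch.
entry = library.find(domain="hospitality", output="summary")[0]
print(entry.template.format(review="The room was spacious, but the service was terrible."))
```

Filtering on exact metadata values keeps the sketch simple. A larger library might add free-text search or usage statistics pulled from telemetry.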

Many publications, including Time, have reported on the growing importance of prompt engineers across various sectors. Companies planning to integrate generative AI into a business application increasingly recognize the value of a prompt engineer.

Leading job platforms like Indeed and LinkedIn already have many prompt engineer positions. In the United States alone, job postings for this role run in the thousands, reflecting the growing demand. The salary for prompt engineers is also compelling, with a range that spans from $50,000 to over $150,000 per year, depending on experience and specialization.

Technical responsibilities

If you want to become a prompt engineer or are looking to hire one, here are the key technical responsibilities to be aware of.

Understanding of NLP. A deep knowledge of natural language processing algorithms and techniques is a must-have for this specialist.

Familiarity with LLMs. Experience with models like GPT-3.5, GPT-4, BERT, and others is critical to understanding their limitations as well as possibilities.

JSON and basic Python. Understanding how to work with JSON files and having a basic grasp of Python is required for system integration, particularly with models like GPT-3.5.

API knowledge. It's also essential for prompt engineers to know how to interact with APIs to be able to integrate generative AI models.
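To give a rough idea of the JSON side of this work, the Python sketch below builds a request body in the shape used by chat-style APIs such as OpenAI's Chat Completions endpoint (a model name plus a list of role-tagged messages) and then reads the reply out of a response. It makes no network call. The model name is only an example, and the response text is a hypothetical, trimmed sample rather than real API output.

```python
import json

# Request body: a model name plus a list of role-tagged messages.
request_body = {
    "model": "gpt-3.5-turbo",  # example model name
    "messages": [
        {"role": "system", "content": "You are a helpful hotel chatbot assistant."},
        {"role": "user", "content": "What is PMS in the travel industry?"},
    ],
}
payload = json.dumps(request_body)  # the string you would send as the HTTP request body

# Hypothetical response, trimmed to the fields this sketch reads.
raw_response = """
{"choices": [{"message": {"role": "assistant",
  "content": "In the travel industry, PMS stands for property management system."}}]}
"""


def extract_reply(response_text: str) -> str:
    """Parse the JSON response and return the assistant's message text."""
    data = json.loads(response_text)
    return data["choices"][0]["message"]["content"]


print(extract_reply(raw_response))
```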

To learn more, read our dedicated article about LLM API integration.


Data analysis and interpretation. A prompt engineer must be able to analyze model responses, recognize patterns, and make data-informed decisions to refine prompts.

Experimentation and iteration. Prompt engineers conduct A/B tests, track metrics, and optimize prompts based on real-world feedback and machine outputs.
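As an illustration of the metrics side of A/B testing, here is a minimal Python sketch that compares two prompt variants by the share of responses judged "useful." It uses a standard two-proportion z-test. The counts are hypothetical, and how you decide what counts as a success (human ratings, automated checks, user clicks) is up to your own evaluation setup.

```python
import math
from typing import Tuple


def compare_prompts(successes_a: int, trials_a: int,
                    successes_b: int, trials_b: int) -> Tuple[float, float, float]:
    """Return the success rates of two prompt variants and a two-proportion z-score."""
    rate_a = successes_a / trials_a
    rate_b = successes_b / trials_b
    # Pooled success rate, assuming both prompts actually perform the same.
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if std_err == 0:  # all successes or all failures: no measurable difference
        return rate_a, rate_b, 0.0
    return rate_a, rate_b, (rate_b - rate_a) / std_err


# Hypothetical counts of responses that reviewers marked as "useful".
rate_a, rate_b, z = compare_prompts(successes_a=120, trials_a=200,
                                    successes_b=150, trials_b=200)
print(f"Prompt A: {rate_a:.0%}, prompt B: {rate_b:.0%}, z = {z:.2f}")
# As a rule of thumb, |z| > 1.96 suggests a real difference at the 5% significance level.
```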

Nontechnical responsibilities

Tech responsibilities are only one side of the coin. A prompt engineer must possess the following non-tech skills.

Communication. This person must be able to articulate ideas clearly, collaborate with cross-functional teams, and gather user feedback for prompt refinement.

Ethical oversight. A prompt engineer ensures that prompts do not produce harmful or biased responses, in line with ethical AI practices.

Subject matter expertise. Depending on the application, having specialized knowledge in specific domains can be invaluable.

Creative problem-solving. This specialist thinks outside the conventional boundaries of AI-human interaction to innovate new solutions.

As you can see, a prompt engineer's role is multifaceted, requiring a blend of technical skills and the ability to translate business objectives into effective AI interactions. These experts are invaluable for successfully integrating generative AI capabilities. With this in mind, let's explore some foundational concepts of prompt engineering.

Core technical concepts to know in prompt engineering

Prompt engineering is not just about asking; it's about asking in the most effective way, which often demands a nuanced understanding of natural language processing (NLP) and large language models (LLMs). Let's go over the core concepts related to prompt engineering.

Natural language processing (NLP) is a specialized field within artificial intelligence (AI) that focuses on interactions between computers and human language. It enables machines to contextually analyze, understand, and respond to human language.

Large language models (LLMs) are an advanced subset of language models trained on extensive datasets to predict the likelihood of various word sequences. In simpler terms, they predict the next word (more precisely, the next token) in a sequence based on the words that come before it, using that context to produce meaningful text.
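The core idea of "predicting the next word from the words before it" can be shown with a toy bigram model in Python. This is only an analogy: real LLMs use neural networks over tokens and look at far more context than a single previous word. The tiny corpus is made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict


def train_bigrams(corpus: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probs(follows: Dict[str, Counter], word: str) -> Dict[str, float]:
    """Turn raw follow-up counts into probabilities for the next word."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


corpus = ("the guest booked the room . the guest paid the bill . "
          "the manager checked the room")
model = train_bigrams(corpus)
print(next_word_probs(model, "the"))  # "guest" and "room" are the likeliest continuations
```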

Transformers are the basis for many LLMs, including the well-known GPT series. They are a specific type of deep neural network optimized for handling sequential data like language. Transformers excel at capturing the contextual relationships between words in a sentence. Attention mechanisms within transformers allow the model to focus on different parts of the input text, helping it "pay attention" to the parts most relevant to the task at hand.
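To show what "paying attention" means numerically, here is a pure-Python sketch of scaled dot-product attention, softmax(Q·Kᵀ/√d)·V. It is deliberately simplified: real transformers first project each token through learned query, key, and value matrices and run many attention heads in parallel. Both steps are omitted here, and the toy vectors are arbitrary.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    output = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # share of attention each position receives
        # Output is the attention-weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, values))
                       for j in range(len(values[0]))])
    return output


# Three toy token vectors used as queries, keys, and values (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(tokens, tokens, tokens))
```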

Parameters are the model's variables learned from the training data. While prompt engineers typically don't adjust these, understanding what they are can help in comprehending why a model responds to a prompt in a certain way.

A token is a unit of text that the model reads. Tokens can be as short as a single character or as long as a whole word (e.g., "a" or "apple"), and less common words are often split into several subword tokens. LLMs have a maximum token limit (the context window), and understanding it is important for prompt engineering, especially for longer queries or data inputs.
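Here is a hedged Python sketch of a token-budget check. It relies on the commonly cited rule of thumb of roughly four characters per token for English text, which is only an approximation. For exact counts you would use the model's own tokenizer (for OpenAI models, the `tiktoken` library). The limit and reserve values are illustrative, not tied to any specific model.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; real tokenizers differ


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_context(prompt: str, context_limit: int, reserved_for_output: int) -> bool:
    """Check whether the prompt leaves enough room for the model's answer."""
    return estimate_tokens(prompt) + reserved_for_output <= context_limit


def truncate_to_budget(text: str, max_tokens: int) -> str:
    """Cut input data so its estimated size stays within max_tokens."""
    return text[: max_tokens * CHARS_PER_TOKEN]


report = "Hotel occupancy rose in the third quarter. " * 500  # long input data
print(estimate_tokens(report))
print(fits_context(report, context_limit=4096, reserved_for_output=500))
print(estimate_tokens(truncate_to_budget(report, 3000)))
```

Cutting text by characters is crude: it can split a sentence in half. Summarizing or chunking long inputs is usually the better option when the data matters.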

Multimodality refers to AI models that can understand, interpret, and generate various types of data, be it text, images, or code. Multimodal models are an emerging trend, and they expand the canvas on which prompt engineers can work, enabling you to craft prompts that generate diverse output forms depending on the model's capabilities.

Armed with these foundational concepts, you'll better understand the subsequent discussions on prompt engineering techniques and best practices.

What are prompts?

Prompts are precise blueprints for the output you expect from an AI model. They act as the intermediary language, translating human intent into tasks the AI can execute.

Key elements of a prompt

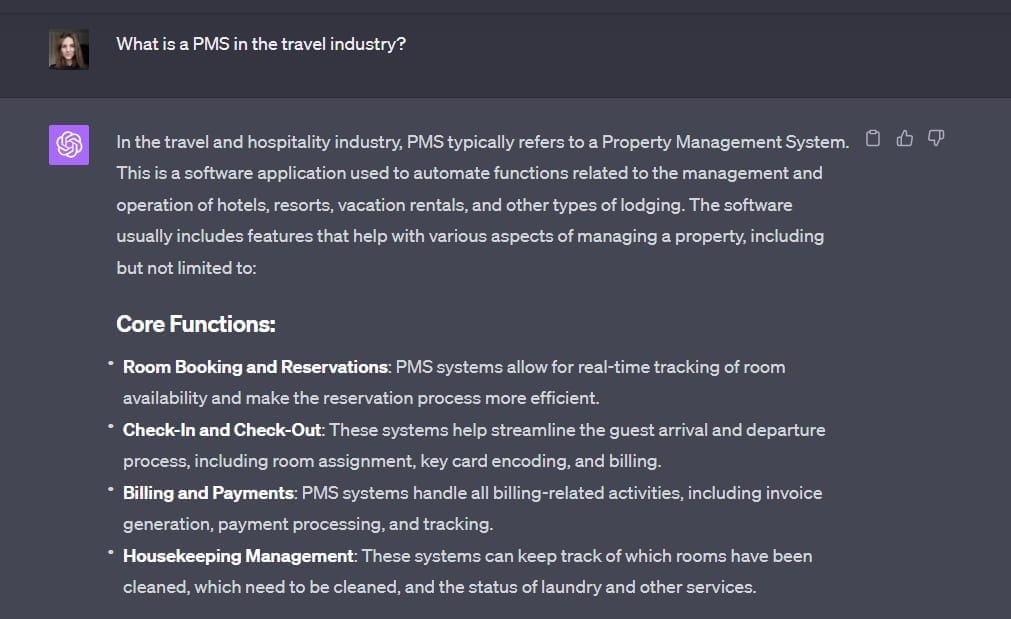

Let’s take one comprehensive prompt and explain the elements it consists of.

"Considering the recent research on climate change, summarize the main findings in the attached report and present your summary in a journalistic style."


Instruction. This is the core component of the prompt that tells the model what you expect it to do. As the most straightforward part of your prompt, the instruction should clearly outline the action you're asking the model to perform.

In our example prompt, the instruction is "summarize the main findings in the attached report."

Context. This element provides the background or setting where the action (instruction) should occur. It helps the model frame its response in a manner that is relevant to the scenario you have in mind. Providing context can make your prompt more effective by focusing the model on a particular subject matter or theme.

The contextual element in our prompt is "considering the recent research on climate change."

Input data is the specific piece of information you want the model to consider when generating its output. This could be a text snippet, a document, a set of numbers, or any other data point you want the model to process.

In our case, the input data is "the attached report," which the prompt references rather than quotes directly.

The output indicator tells the model the format or style you want the response in. This can be particularly useful in scenarios where the output format matters as much as the content.

In our example, the phrase "present your summary in a journalistic style" is the output indicator.
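The four elements can be assembled programmatically, which helps when the same prompt structure is reused with different data. The Python sketch below rebuilds the climate-report example from its parts. The function name, the fixed ordering, and the convention of appending the input data after a "Report:" label between triple quotes are all assumptions for illustration, not a required format.

```python
from typing import Optional


def build_prompt(instruction: str, context: Optional[str] = None,
                 input_data: Optional[str] = None,
                 output_indicator: Optional[str] = None) -> str:
    """Combine context, instruction, and output indicator, then append the input data."""
    sections = []
    if context:
        sections.append(context)
    sections.append(instruction)
    if output_indicator:
        sections.append(output_indicator)
    prompt = " ".join(sections)
    if input_data:
        # Keep the data clearly separated from the instructions.
        prompt += '\n\nReport:\n"""\n' + input_data + '\n"""'
    return prompt


prompt = build_prompt(
    context="Considering the recent research on climate change,",
    instruction="summarize the main findings in the attached report",
    output_indicator="and present your summary in a journalistic style.",
    input_data="<report text goes here>",
)
print(prompt)
```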

Weights in prompt engineering

The concept of weighting refers to adding extra emphasis or focus on specific parts of a prompt. This can help guide the AI model to concentrate on those elements, influencing the response type or generated content. The weights in prompts are not a standard feature across all models: Some platforms or tools might offer weights implemented as special syntax or symbols to indicate which terms should receive more attention.

Weighting is often discussed in the context of image generation because the models responsible for this task (like DALL-E or Midjourney) are more likely to produce significantly varied outputs based on slight tweaks to the prompt. However, the concept could theoretically be applied to other generative models, like text-based or code-based models, if the system allows it.

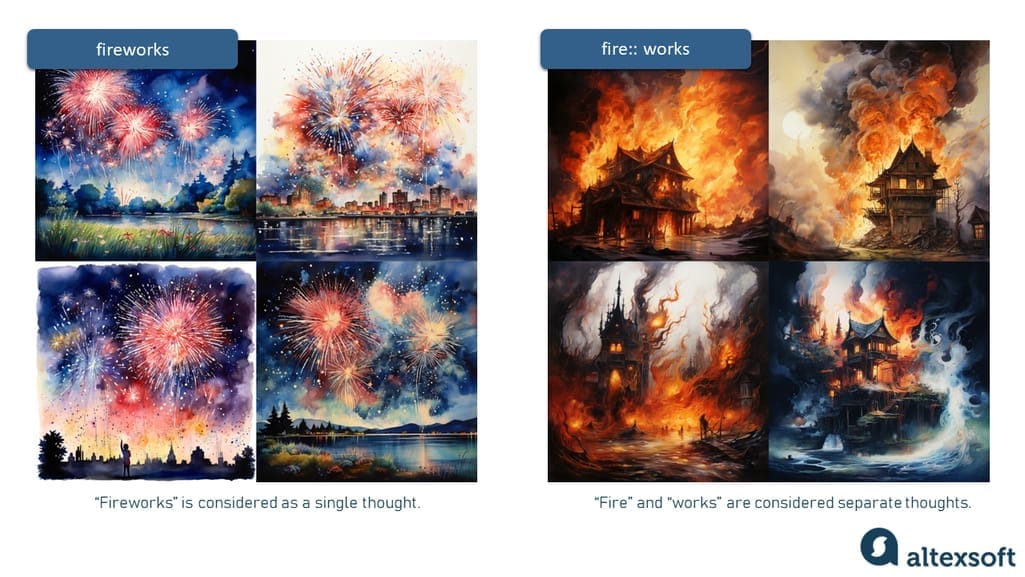

For example, using "fireworks" and "fire::works" as prompts for Midjourney brings about starkly different results. See for yourself.

We gave Midjourney two prompts, "fireworks, watercolor painting" and "fire::works, watercolor painting," and received two different outputs

- "fireworks" (without any weight) typically guides the model to generate an image featuring the bright, colorful aerial displays we associate with celebrations and events.

- "fire::works" tells Midjourney to treat "fire" and "works" as two separate concepts. Without the combined meaning of "fireworks," the model interprets "fire" on its own, so the generated image might feature scenes where fire is the main focus, such as houses engulfed in flames.

Adding "::" between "fire" and "works" breaks the compound word apart, which shifts the focus to "fire" and leads to a completely different visual representation. Midjourney also lets you put a number right after "::" to set the relative weight of the part before it: in "fire::2 works," for example, "fire" counts twice as much as "works."

This demonstrates how critical separators and weights can be in prompt engineering for image generators. By simply splitting a term or adjusting a weight, you can change the entire context and meaning of the image produced.
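To show how this kind of syntax can be read, here is a toy Python parser. It follows Midjourney's documented multi-prompt convention: "::" separates parts, and an optional number right after "::" (as in "fire::2 works") sets the weight of the part before it. Parts without an explicit number get a default weight of 1. This is a hypothetical illustration of the syntax, not Midjourney's actual implementation, and it ignores edge cases such as negative decimal weights written without a leading zero.

```python
import re
from typing import List, Tuple

WEIGHT = re.compile(r"\s*(-?\d+(?:\.\d+)?)")  # optional number right after "::"


def parse_weighted_prompt(prompt: str) -> List[Tuple[str, float]]:
    """Split a prompt on '::' into (text, weight) parts; the default weight is 1."""
    chunks = prompt.split("::")
    parts: List[Tuple[str, float]] = []
    text = chunks[0]
    for chunk in chunks[1:]:
        match = WEIGHT.match(chunk)
        if match:
            # A leading number is the weight of the part *before* this "::".
            weight, rest = float(match.group(1)), chunk[match.end():]
        else:
            weight, rest = 1.0, chunk
        parts.append((text.strip(), weight))
        text = rest
    if text.strip():
        parts.append((text.strip(), 1.0))
    return parts


for p in ["fireworks, watercolor painting",
          "fire::works, watercolor painting",
          "fire::2 works, watercolor painting"]:
    print(p, "->", parse_weighted_prompt(p))
```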

Prompting interfaces in practical application: OpenAI's playground

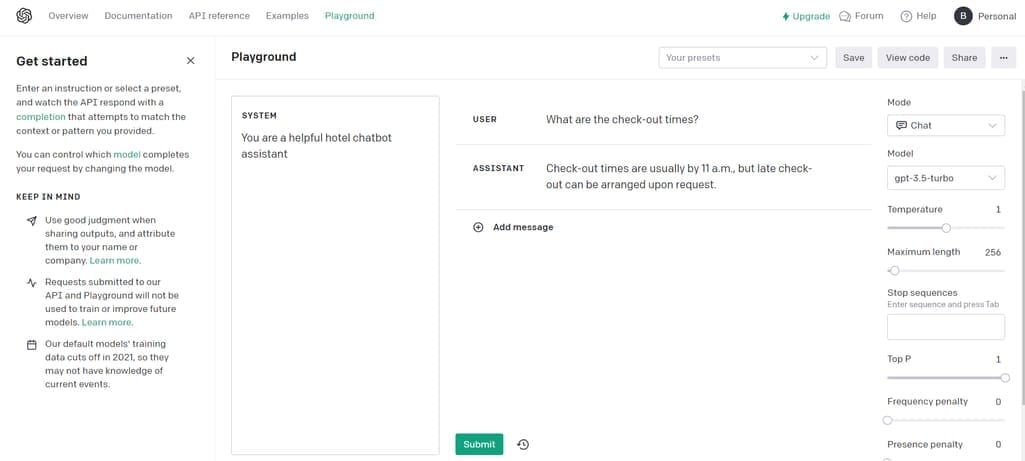

For those integrating generative AI models like ChatGPT into their applications, it's essential to understand the interface where prompting occurs. OpenAI's playground provides an environment for developers to test and optimize their prompts, where each message in a conversation is assigned one of three roles: system, user, or assistant.

OpenAI’s playground page

Role "system." This is where a prompt engineer sets the general guidelines for the conversation and defines the personality ChatGPT should adopt in its replies. For instance, a "system" message in a hotel management application could be: "You are a helpful hotel chatbot assistant," which steers the AI model toward hotel-related queries.

The role "user" represents the person or system interacting with the AI. In line with our hotel chatbot example, a "user" prompt might be: "What are the check-out times?"

The role "assistant" is the model's response to the "user" prompt. Following our example, the "assistant" could reply: "Check-out times are usually by 11 a.m., but late check-out can be arranged upon request."

These roles not only guide the conversation but also serve as an important framework for preparing datasets to fine-tune your AI model. Fine-tuning aims to adjust the model's behavior to better suit your specific application, such as focusing on hotel management queries in our example. According to OpenAI's guidelines for fine-tuning, you must provide at least 10 examples for the process. However, noticeable improvements are generally seen when using 50 to 100 training examples.
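In OpenAI's fine-tuning format for chat models, each training example is one JSON line containing a `messages` list built from these same roles. The Python sketch below writes such a JSONL dataset for the hotel chatbot and enforces the 10-example minimum mentioned above. The helper names are hypothetical, and the single Q&A pair is repeated only to satisfy the minimum in this demo. A real dataset needs distinct, reviewed examples, ideally 50 to 100 or more.

```python
import json
from typing import List, Tuple

SYSTEM_MESSAGE = "You are a helpful hotel chatbot assistant."
MIN_EXAMPLES = 10  # minimum number of examples stated in OpenAI's fine-tuning guidelines


def to_training_line(question: str, answer: str) -> str:
    """Serialize one system/user/assistant exchange as a single JSON line."""
    record = {"messages": [
        {"role": "system", "content": SYSTEM_MESSAGE},
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]}
    return json.dumps(record)


def build_dataset(pairs: List[Tuple[str, str]]) -> str:
    """Return JSONL text, refusing datasets below the minimum size."""
    if len(pairs) < MIN_EXAMPLES:
        raise ValueError(f"need at least {MIN_EXAMPLES} examples, got {len(pairs)}")
    return "\n".join(to_training_line(q, a) for q, a in pairs)


# Placeholder data: one pair duplicated only to reach the minimum for this demo.
pairs = [("What are the check-out times?",
          "Check-out times are usually by 11 a.m., but late check-out "
          "can be arranged upon request.")] * 10
jsonl = build_dataset(pairs)
print(jsonl.splitlines()[0])
```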

Having hands-on experience integrating ChatGPT into travel applications, we understand the nuances and challenges involved in this process. You can read our dedicated article for a more detailed look at our journey.

Now that we've discussed the basics and the practical aspects of crafting prompts, including the roles that dictate the conversational flow, let's delve into the more advanced techniques of prompt engineering that can help you fine-tune your interactions with AI.

AI prompt engineering techniques with examples

There are quite a few prompt types you can use to communicate with AI, from simple zero-shot prompting to more complex chain-of-thought techniques.

Yet, before diving into the various techniques for prompt engineering, it's crucial to understand some general best practices that can significantly influence the quality of outputs you receive. Here's a quick list to keep in mind.

- Clarity is key: Clear and concise prompts lead to better and more accurate results. The more specific you can be, the better the AI can understand your request.

- Avoid information overload: While it's tempting to include as much detail as possible, too much information can be counterproductive and might confuse the model.

- Use constraints: Adding constraints helps you narrow down the response to your needs. For example, specify the length or format of the output if that's important for your use case.

- Avoid leading and overly open-ended questions: Leading questions can bias the output, while open-ended questions may produce an overly broad or generic response. Aim for a balance.

- Iteration and fine-tuning are helpful: Regardless of your technique, iterative prompting and fine-tuning are often necessary steps to arrive at the desired output.

These tips are not exhaustive but serve as a good starting point. They can be applied across different techniques and combinations.

Zero-shot prompting

Zero-shot prompting is one of the most straightforward yet versatile techniques in prompt engineering. At its core, it involves giving the language model a single instruction, often phrased as a question or statement, without any examples of the desired output. The model then generates a response based on its training data, essentially "completing" your prompt in a manner that aligns with its understanding of language and context.

Zero-shot prompting is exceptionally useful for generating fast, on-the-fly responses to a broad range of queries.

Example. Suppose you need a sentiment analysis of a hotel review. You could use the following prompt.

“Extract the sentiment from the following review: ‘The room was spacious, but the service was terrible.’”

Even without any sentiment analysis examples in the prompt, the model can process this request and provide an answer such as "The review has a mixed sentiment: positive towards room space but negative towards the service."

While this may look like something obvious to a human, the fact that AI can do that is impressive.

One-shot prompting

One-shot prompting is a technique where a single example guides the AI model's output. This example can be a question-answer pair, a simple instruction, or a specific template. The aim is to align the model's response more closely with the user's specific intentions or desired format.

Example. Let's imagine you're working on a blog post about "sustainable travel." Your prompt could look like this:

"I give you the keyword 'sustainable travel,' and you write me blog post elements in this format:

Blog post H1 title:

Meta title:

Meta description:"

Upon receiving this one-shot prompt, the model would fill in the template, producing something like:

Blog post H1 title: Exploring the World Sustainably

Meta title: The New Age of Sustainable Travel

Meta description: A detailed look at how to make your travels more eco-friendly without sacrificing comfort or experience.

This technique allows for higher customization and is particularly useful when the output must conform to a specific style or format.

Few-shot prompting

Few-shot prompting is an extension of one-shot prompting where multiple examples are provided to guide the AI model's output. The concept is similar, but including several examples offers more contextual cues to the model. This enables the model to understand the user's requirements better, generating output that closely adheres to the given examples.

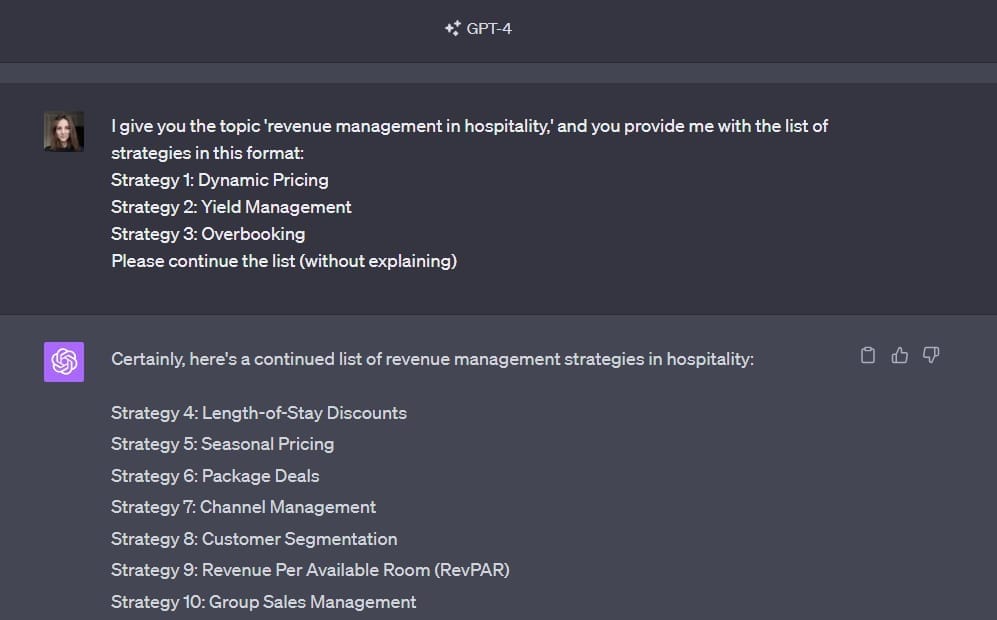

Example. Let's say you're working on a project related to "revenue management in hospitality," and you need a list of strategies.

Prompt: "I give you the topic 'revenue management in hospitality,' and you provide me with the list of strategies in this format:

Strategy 1: Dynamic Pricing

Strategy 2: Yield Management

Strategy 3: Overbooking

Please continue the list (without explaining)"

After receiving this prompt with multiple examples, the model might generate additional strategies as follows:

Few-shot prompting with ChatGPT

We asked ChatGPT this, and it easily listed the most common strategies like length of stay discounts, seasonal pricing, channel management, and RevPAR.

By providing several examples in a few-shot prompt, you increase the likelihood that the generated output will be more closely aligned with your desired format and content.
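Zero-, one-, and few-shot prompts differ mainly in how many examples sit between the instruction and the point where the model continues. The Python sketch below builds all three from the same hypothetical helper (`build_shot_prompt`), reusing the sentiment and revenue management prompts from this section. Joining the parts with blank lines is just one reasonable formatting choice.

```python
from typing import List


def build_shot_prompt(instruction: str, examples: List[str], closing: str = "") -> str:
    """Zero-shot when `examples` is empty, one-shot with one item, few-shot with more."""
    parts = [instruction]
    parts.extend(examples)  # each example shows the model the expected format
    if closing:
        parts.append(closing)
    return "\n\n".join(parts)


zero_shot = build_shot_prompt(
    "Extract the sentiment from the following review: "
    "'The room was spacious, but the service was terrible.'",
    [],
)

few_shot = build_shot_prompt(
    "I give you the topic 'revenue management in hospitality,' and you provide me "
    "with the list of strategies in this format:",
    ["Strategy 1: Dynamic Pricing",
     "Strategy 2: Yield Management",
     "Strategy 3: Overbooking"],
    "Please continue the list (without explaining)",
)
print(few_shot)
```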

Role-playing technique

The role-playing technique employs a unique approach to crafting prompts: instead of using examples or templates to guide the model's output, you assign a specific "role" or "persona" to the AI model. This often includes explicitly explaining the intended audience, the AI's role, and the goals of the interaction. The roles and goals offer contextual information that helps the model understand the purpose of the prompt and the tone or level of detail it should aim for in its response.

Example. Consider the prompt: "You are a prompt engineer, and you need to explain to a 6-year-old kid what your job is."

Here, the role is that of a "prompt engineer," and the audience is a "6-year-old kid." The goal is to explain what a prompt engineer does in a way that a 6-year-old can understand. Using the role-playing technique, you set up the context for the AI model, guiding it to generate an output tailored to the assigned role and audience.

Positive and negative prompting

Positive and negative prompting are techniques used to guide the model's output in specific directions. As the names suggest, positive prompting encourages the model to include certain types of content or responses, while negative prompting discourages it from including other specific types. This framing can be vital in controlling the direction and quality of the model’s output.

Example. Let's say you are a sustainability consultant and want the model to generate a list of recommendations for reducing an organization's carbon footprint.

Here’s what your positive prompt could look like:

"You are a sustainability expert. Generate a list of five feasible strategies for a small business to reduce its carbon footprint."

Building on the above scenario, you want to ensure the model doesn't suggest strategies that are too expensive or complicated for a small business. So you extend your initial prompt as follows:

"You are a sustainability expert. Generate a list of five feasible strategies for a small business to reduce its carbon footprint. Do not include suggestions that require an initial investment of more than $10,000 or specialized technical expertise."

Adding the negative prompt here filters out overly expensive or technical strategies, tailoring the model's output to your specific requirements.

Chain-of-thought prompting

Chain-of-thought prompting is a technique designed to guide the AI model through a logical reasoning process. Rather than just asking for an output, this strategy incorporates a "chain of thought" that demonstrates how to arrive at the correct answer step by step. This approach is especially valuable for tasks requiring critical thinking or problem-solving skills, like mathematical calculations or complex queries.

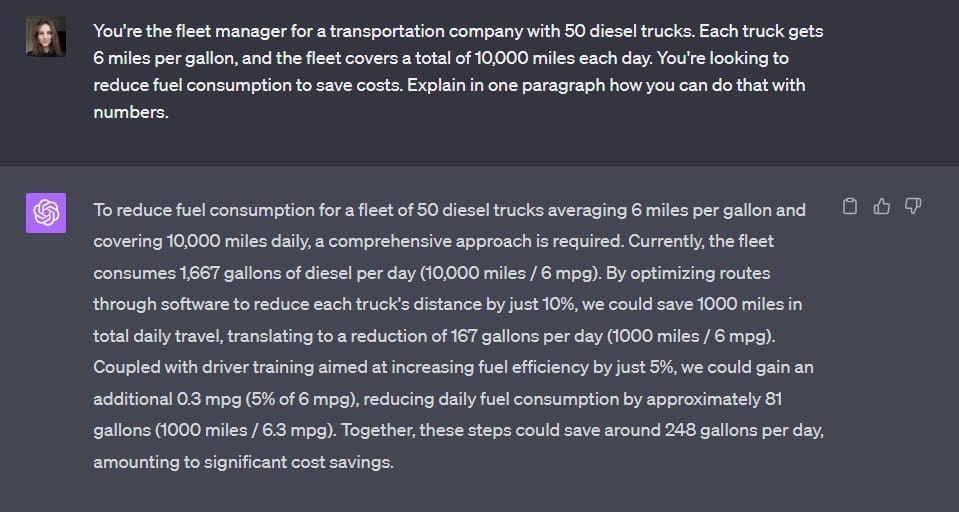

Example. Suppose you're in charge of optimizing fuel efficiency for a transportation company's fleet of trucks. You can employ chain-of-thought prompting to guide the model through this complex task as follows.

Prompt: "You're the fleet manager for a transportation company with 50 diesel trucks. Each truck gets 6 miles per gallon, and the fleet covers a total of 10,000 miles each day. You're looking to reduce fuel consumption to save costs. Let’s think step by step."

The response may look like this:

Chain-of-thought prompting with ChatGPT

By structuring the prompt as a chain of thought, you get not only a solution but also the reasoning steps the model laid out to reach it, producing a more complete and insightful output that is easier to check.
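Because the fleet scenario is arithmetic, you can check the model's reasoning yourself. The Python sketch below appends the step-by-step trigger to the original prompt and then works through the first steps a good answer should contain. The 10% efficiency improvement is a hypothetical assumption added for illustration and is not part of the original scenario.

```python
TRUCKS = 50
MILES_PER_GALLON = 6.0
FLEET_MILES_PER_DAY = 10_000

scenario = ("You're the fleet manager for a transportation company with 50 diesel trucks. "
            "Each truck gets 6 miles per gallon, and the fleet covers a total of 10,000 miles "
            "each day. You're looking to reduce fuel consumption to save costs.")
# The chain-of-thought trigger: ask the model to reason before answering.
cot_prompt = scenario + " Let's think step by step."

# The kind of step-by-step arithmetic a good answer should contain:
miles_per_truck = FLEET_MILES_PER_DAY / TRUCKS              # step 1: 200 miles per truck
gallons_per_day = FLEET_MILES_PER_DAY / MILES_PER_GALLON    # step 2: ~1,666.7 gallons per day
improved_mpg = MILES_PER_GALLON * 1.10                      # step 3 (hypothetical +10%): 6.6 mpg
improved_gallons = FLEET_MILES_PER_DAY / improved_mpg       # ~1,515.2 gallons per day
saved_per_day = gallons_per_day - improved_gallons          # ~151.5 gallons saved daily

print(f"{gallons_per_day:,.1f} gal/day now; {saved_per_day:,.1f} gal/day saved at {improved_mpg:.1f} mpg")
```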

Iterative prompting

Iterative prompting is a strategy that involves building on the model's previous outputs to refine, expand, or dig deeper into the initial answer. This approach enables you to break down complex questions or topics into smaller, more manageable parts, which can lead to more accurate and comprehensive results.

The key to this technique is paying close attention to the model's initial output. You can then create follow-up prompts to explore specific elements, inquire about subtopics, or ask for clarification. This approach is useful for projects that require in-depth research, planning, or layered responses.

Example. Your initial prompt might be:

"I am working on a project about fraud prevention in the travel industry. Please provide me with a general outline covering key aspects that should be addressed.”

Assume the model's output includes points like identity verification, secure payment gateways, and transaction monitoring.

Follow-up prompt 1: "Great, could you go into more detail about identity verification methods suitable for the travel industry?"

At this point, the model might elaborate on multifactor authentication, biometric scanning, and secure documentation checks.

Follow-up prompt 2: "Now, could you explain how transaction monitoring can be effectively implemented in the travel industry?"

The model could then discuss real-time monitoring, anomaly detection algorithms, and the role of machine learning in identifying suspicious activities.

This iterative approach allows you to go from a broad question to specific, actionable insights in a structured manner, making it particularly useful for complex topics like fraud prevention in the travel industry.
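In code, iterative prompting mostly comes down to keeping the conversation history, so that each follow-up is sent together with the model's earlier answers. The Python sketch below shows that loop using the fraud prevention prompts from this section. `ask_model` is a hypothetical stand-in for a real API call, and `fake_model` simply reports how much context it received.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def run_iterative_session(ask_model: Callable[[List[Message]], str],
                          prompts: List[str]) -> List[Message]:
    """Send prompts one at a time, keeping the full history so each follow-up
    builds on the model's earlier answers."""
    history: List[Message] = []
    for prompt in prompts:
        history.append({"role": "user", "content": prompt})
        reply = ask_model(history)  # the model sees all previous turns
        history.append({"role": "assistant", "content": reply})
    return history


def fake_model(history: List[Message]) -> str:
    """Stand-in for a real LLM call; reports how many messages it was given."""
    return f"(answer based on {len(history)} message(s) of context)"


session = run_iterative_session(fake_model, [
    "I am working on a project about fraud prevention in the travel industry. "
    "Please provide me with a general outline covering key aspects that should be addressed.",
    "Great, could you go into more detail about identity verification methods "
    "suitable for the travel industry?",
    "Now, could you explain how transaction monitoring can be effectively "
    "implemented in the travel industry?",
])
for message in session:
    print(message["role"], ":", message["content"][:60])
```

Sending the full history on every turn is simple, but it consumes tokens as the conversation grows. Long sessions may need older turns summarized or dropped.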

Model-guided prompting

Another useful approach is model-guided prompting, which flips the script by instructing the model to ask you for the details it needs to complete a given task. This approach minimizes guesswork and discourages the model from making things up.

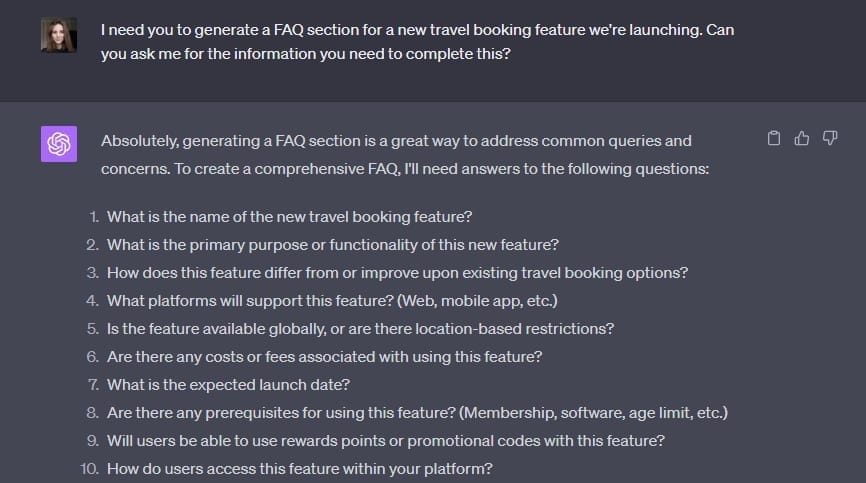

Example. Suppose you work in travel tech and you want an AI model to generate a FAQ section for a new travel booking feature on your platform. Instead of just asking the model to "create a FAQ for a new booking feature," which could result in generic or off-target questions and answers, you could prompt the AI as follows:

"I need you to generate a FAQ section for a new travel booking feature we're launching. Can you ask me for the information you need to complete this?"

ChatGPT might then ask you, "What is the name of the new travel booking feature?" and "What is the primary purpose or functionality of this new feature?" among other things.

Model-guided prompting with ChatGPT

By using model-guided prompting, you ensure that the resulting FAQ will be relevant, accurate, and tailored to your specific travel booking feature rather than relying on the model's assumptions.

We've outlined some of the key prompt types and techniques here. However, it's important to note that this is not an exhaustive list; many other techniques can be employed to get the most out of your model. Also, these techniques are not mutually exclusive and can often be combined for more effective or nuanced results. The prompt combination technique, for example, involves merging different instructions or questions into a single, multifaceted prompt to elicit a comprehensive answer from the AI.

Having discussed the essentials of prompt types, let's look at where prompt engineering is heading.

The future of prompt engineering

Prompt engineering is evolving beyond the techniques we've explored so far. A few emerging trends that might reshape the field include:

Automated Prompt Engineering (APE). The emergence of algorithms capable of customizing prompts for specific tasks or data sets could amplify the efficiency of interactions with LLMs. APE strives to reduce manual adjustments, accelerating the generation of meaningful and precise content from AI.

Real-time language translation. As machine translation capabilities improve, prompt engineering could become useful for instantaneous language translation services. This application could extend beyond text to real-time audio translation, facilitating seamless communication across linguistic barriers.

These progressive developments represent just a fraction of what's possible. As generative AI models become more advanced, the role of prompt engineering in ensuring smooth integration into existing systems and workflows will only increase.