As businesses collect more data every day, finding the right system to store and analyze it has become an important decision. Two common options are data lakes and data warehouses, each designed for different needs.

In this article, we’ll look at how data lakes and data warehouses differ in their architecture, use cases, costs, and trade-offs to help you decide which one fits your data strategy.

Read our article on Database vs Data Warehouse vs Data Lake vs Lakehouse to learn how these options compare.

Data warehouse and data lake explained

A data warehouse (DW) is a centralized system for storing structured data from different sources, such as databases, CRMs, or ERP systems, in a consistent, predefined format. It follows a schema-on-write model, meaning you must know in advance what kind of data you’ll analyze, what metric you’ll track, and how the data will be used. This makes it ideal for report generation and business intelligence (BI), but less flexible when analytics needs change over time. Some warehouses use the data vault architecture to organize information into hubs, links, and satellites to improve scalability and tracking.

Data warehouses are best suited for historical and transactional data—things like sales numbers, customer profiles, and financial records. They’re mainly used by data analysts and business teams who need accurate, consistent insights. Popular DW platforms include Snowflake, Amazon Redshift, Google BigQuery, and Microsoft Azure Synapse Analytics.

A data lake is a centralized repository where you dump all your data in its original form. From images and videos to logs, IoT data, or social media feeds, a data lake is built to take it all. It's essentially a place where you can keep any and all raw information, without having to define a schema in advance. This schema-on-read setup gives you more freedom—you can query and explore data however you want, from different angles, whenever a new question or idea comes up. This flexibility is why many modern data teams prefer an ELT (Extract, Load, Transform) approach, where data is loaded first and shaped later as needed, rather than predefined upfront.

Data lakes are often built on top of HDFS (Hadoop Distributed File System) or object storage systems such as Amazon S3, Azure Data Lake Storage, and Google Cloud Storage. For processing and analysis, tools like Apache Spark, Apache Flink, Google Dataflow or managed services like Databricks are typically used.

A new hybrid storage option has emerged over time: the data lakehouse. It keeps the flexibility of a lake but adds the structure, reliability, and speed of a warehouse. With a lakehouse, you can store all kinds of data and run analytics from the same place. Formats like Delta Lake (the open-source foundation behind Databricks Lakehouse Platform), Apache Iceberg, and Apache Hudi are key technologies that enable the lakehouse approach.

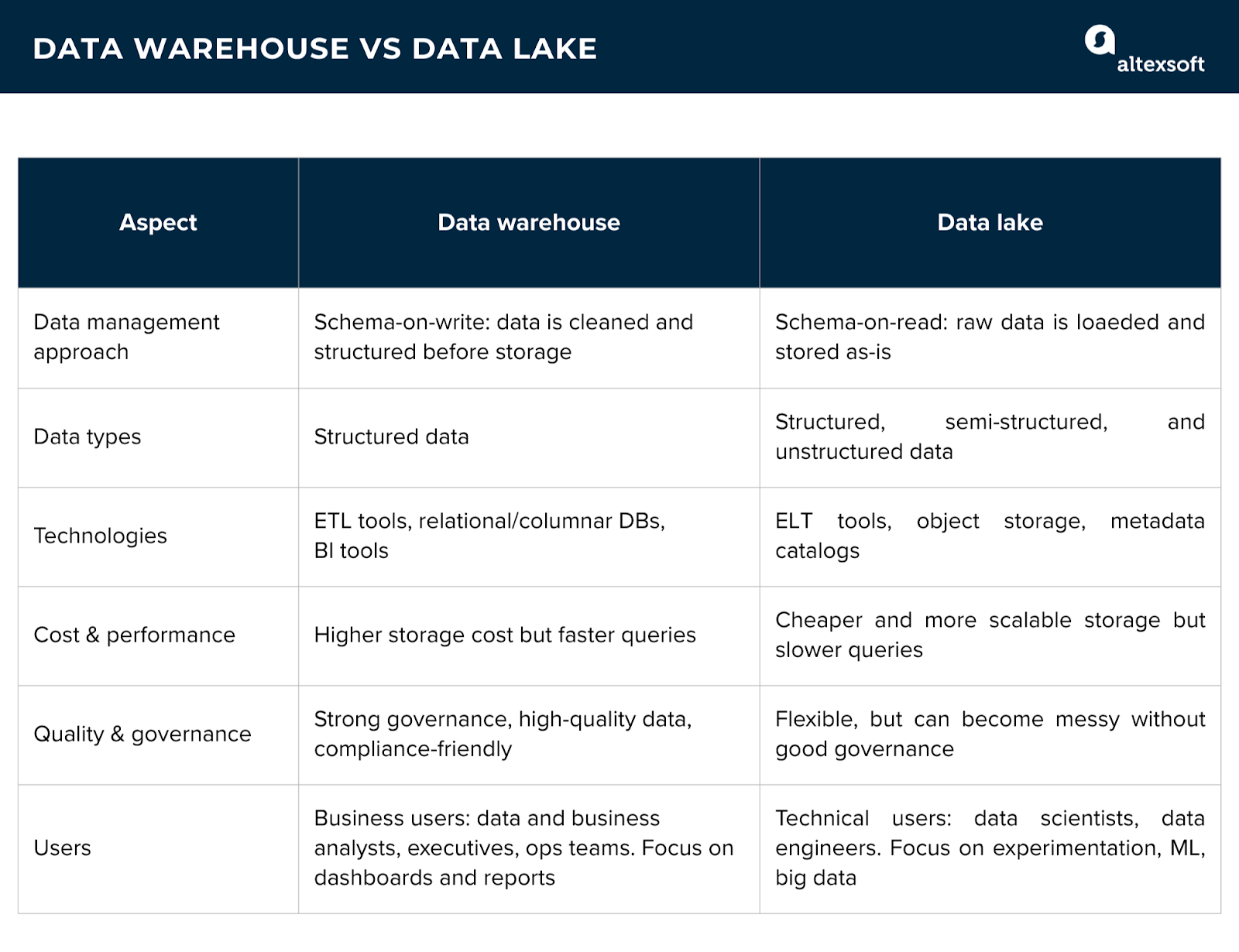

Key differences between a data lake and a data warehouse

Let’s take a closer, more thorough look at the differences between data lakes and warehouses.

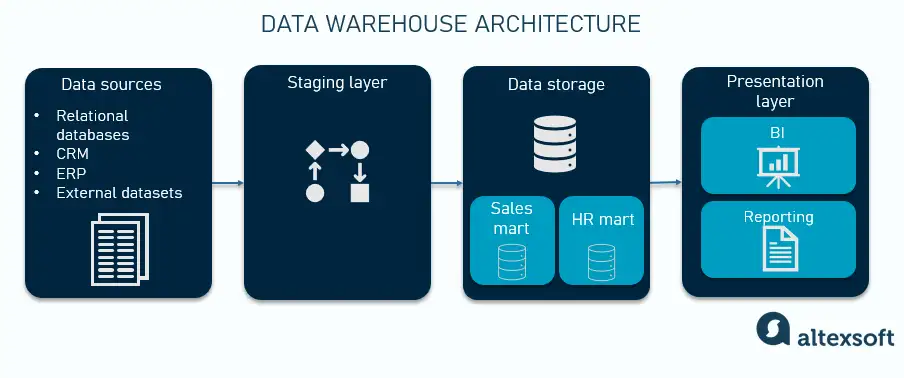

Architecture

A data warehouse organizes data before it’s stored, following a schema-on-write approach. It’s built for structured data, like transaction records, inventory tables, or CRM information. Some modern warehouses can handle semi-structured data, but many people still prefer data lakes for that.

Data is first pulled from different sources and moved to a staging layer where it’s cleaned and transformed to ensure consistency and quality. Then, it’s loaded into the warehouse according to a set schema. The entire workflow is known as Extract, Transform, Load (ETL).

Once stored in the warehouse, data is immediately ready for reporting, visualization, and analysis with BI tools. To make things easier for different teams, warehouses often include data marts—smaller sections that focus on specific areas like sales, finance, marketing, or operations.

This setup is predictable and easier to manage because every dataset follows clear rules. The trade-off is that it takes more work up front to get the data ready. However, once it's prepared, users get fast query performance, reliable results, and strong data governance.

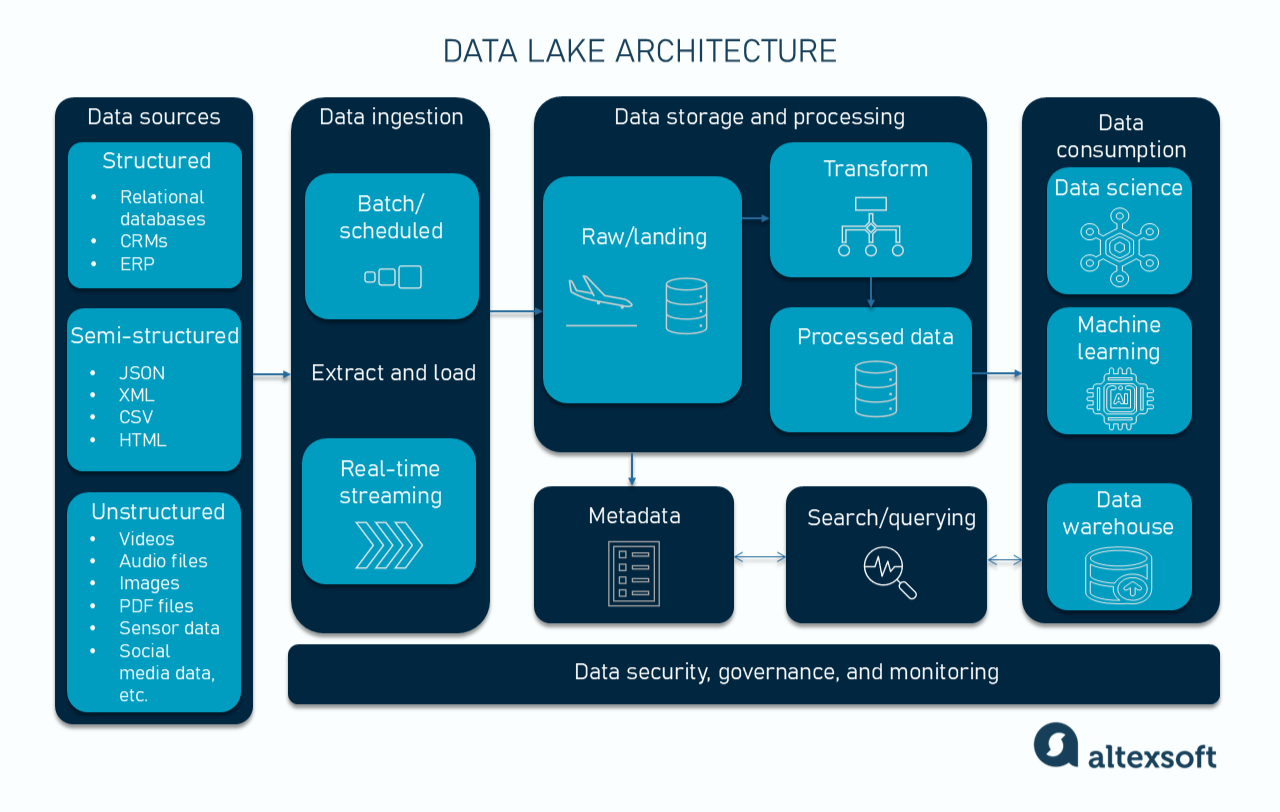

A data lake, by contrast, uses a schema-on-read approach. A lake doesn’t require a set format, so data is stored in its raw form. Organizations can collect and keep all kinds of data (structured, semi-structured, or unstructured), even if they aren’t sure how they’ll use it.

The architecture includes an ingestion layer to bring in data from multiple sources to scalable object storage (such as Amazon S3). The data integration happens via an Extract, Load, Transform (ELT) approach, where the schema is applied as needed, only after the data is brought into the repository.

A metadata catalog (such as AWS Glue, Unity Catalog, or Hive Metastore) helps classify, index, and manage this data, making it easier for data scientists and other stakeholders to discover and govern. The structure is applied only when the raw data is read or queried by the data warehouse through the ETL process.

At the consumption level, data engineers, analysts, and data scientists can query data directly in the lake using processing engines like Apache Spark, Presto, or Databricks. They can also connect visualization or BI tools to the lake through these engines, turning raw or semi-processed data into insights without moving it to another system first.

A data lake’s setup gives you more flexibility, but it also takes more engineering effort to manage and maintain. Also, it requires careful organization and strong metadata management practices to prevent the data from becoming messy or hard to use.

Processing approach

In a data warehouse, data is processed before it’s stored. It’s extracted, cleaned, and transformed to fit a set schema. This means the data in the warehouse is already structured, validated, and ready to use. Queries run faster and results are consistent, though it can take longer to enter new data.

In a data lake, data is processed after it’s stored. Raw data is loaded right away and structured later when needed. This makes it faster to add new data and handle different types of information. The trade-off is that analysts or data scientists usually spend more time cleaning and preparing the data before it can be used.

Storage cost and performance

Data lakes are usually cheaper to maintain and scale because they rely on object storage, which can hold big data at lower cost. They’re good for long-term storage or archiving, especially if you’re not running queries frequently. The downside is that query performance can be slower, since raw or unstructured data often needs additional processing when accessed.

Data warehouses, on the other hand, are more expensive because they involve pre-processing, indexing, and optimized storage formats. But they make queries much faster and more reliably, which is important for business intelligence and analytics. For regular reporting and dashboards, the overall cost per query can actually be lower because the data is already organized and ready to use.

Data quality and governance

Data warehouses follow strict rules for data quality, governance, and access control. Because data is cleaned and structured during the ETL process, users can trust it for accurate reporting and compliance. This makes warehouses a good choice for regulated industries, financial reporting, and other critical business analysis.

Data lakes' greater flexibility can make them harder to manage. Without proper metadata management and cataloging, a lake can quickly become a “data swamp.” Duplicates, missing values, or inconsistent formats can cause reliability issues unless there’s a robust governance framework in place.

User type

Data warehouses are built for business users—analysts, executives, and operations teams in need of access to structured, reliable data and visual dashboards. They rely on SQL-based tools to run queries and generate reports without writing code.

Data lakes are built for technical users—including data scientists, machine learning engineers, data engineers, and analysts who must experiment with large and complex datasets. These users often work with notebooks, programming languages (like Python), or machine learning frameworks to draw out insights, test models, and build predictive systems.

Combining data lakes and data warehouses

Data lakes and data warehouses offer various benefits, and the great thing is that you don’t have to choose only one—you can have the best of both worlds.

Many organizations use a hybrid approach where a data lake collects all raw data in one place, while a warehouse stores cleaned and processed data for reporting and business intelligence. This setup lets teams experiment freely in the lake without disrupting the warehouse, keeping analytics consistent and reliable.

Here are some real-world examples.

BMW Group

BMW Group uses a hybrid data architecture that brings together a data lake and a data warehouse on AWS. Its Cloud Data Hub collects over 10 terabytes of data each day from more than a million connected vehicles. This includes everything from speed and location to engine performance.

The data lake on Amazon S3 holds raw data, while services like Athena and SageMaker handle processing and analysis. This setup supports use cases like predictive maintenance and real-time performance monitoring. Teams utilize a central Data Portal to find and work with data easily, following a provider–consumer model that keeps things organized and accessible.

Uber Technologies

Uber’s data platform is built around a massive, centrally modeled data lake powered by Apache Hudi—an open-source project that Uber created and later contributed to the Apache Software Foundation.

In this setup, Uber’s raw data—such as trip logs, GPS data, driver earnings, and menu updates for Uber Eats—first flows into the data lake. The lake acts as the foundation for data engineering, machine learning, and analytics across teams. Using Hudi, Uber processes data incrementally instead of in large, time-consuming batches. This allows them to keep datasets fresh and up to date, which is crucial for real-time use cases, like ETA predictions, fraud detection, and pricing.

At the same time, Uber’s data warehouse layer is used for structured, business-ready information. Cleaned and modeled data from the lake is organized into dimensional tables for reporting and analysis. Teams across rides, delivery, and finance use this layer to generate consistent metrics and dashboards for decision-making.

Nasdaq

Nasdaq moved from an on-premises warehouse to Amazon Redshift for better performance and scalability. As trading data continued to grow, they added a data lake built on Amazon S3 to store raw financial information such as orders, trades, and quotes.

With this hybrid approach, Nasdaq now loads data up to five hours faster, runs queries 30% quicker, and can handle over 70 billion records per day.

Shopify

Shopify’s Production Engineering department formed a dedicated Lakehouse team with one goal: to make data access faster and simpler throughout the business.

This meant breaking down data silos that had formed across Hive, BigQuery, and custom in-house formats. The team standardized on Apache Iceberg as a single table format and used Trino for querying and data migration. After moving petabytes of data, including a 1.5 PB API log table, query times dropped from three hours to just under two minutes.

By unifying its data architecture, Shopify made analytics faster, more consistent, and easier to manage.

With a software engineering background, Nefe demystifies technology-specific topics—such as web development, cloud computing, and data science—for readers of all levels.

Want to write an article for our blog? Read our requirements and guidelines to become a contributor.