This post aims to compare the main data storage types side-by-side, explain each one's capabilities and limitations, and help you understand which best suits your goals. For more in-depth information on each type, you’re welcome to follow the links in the text.

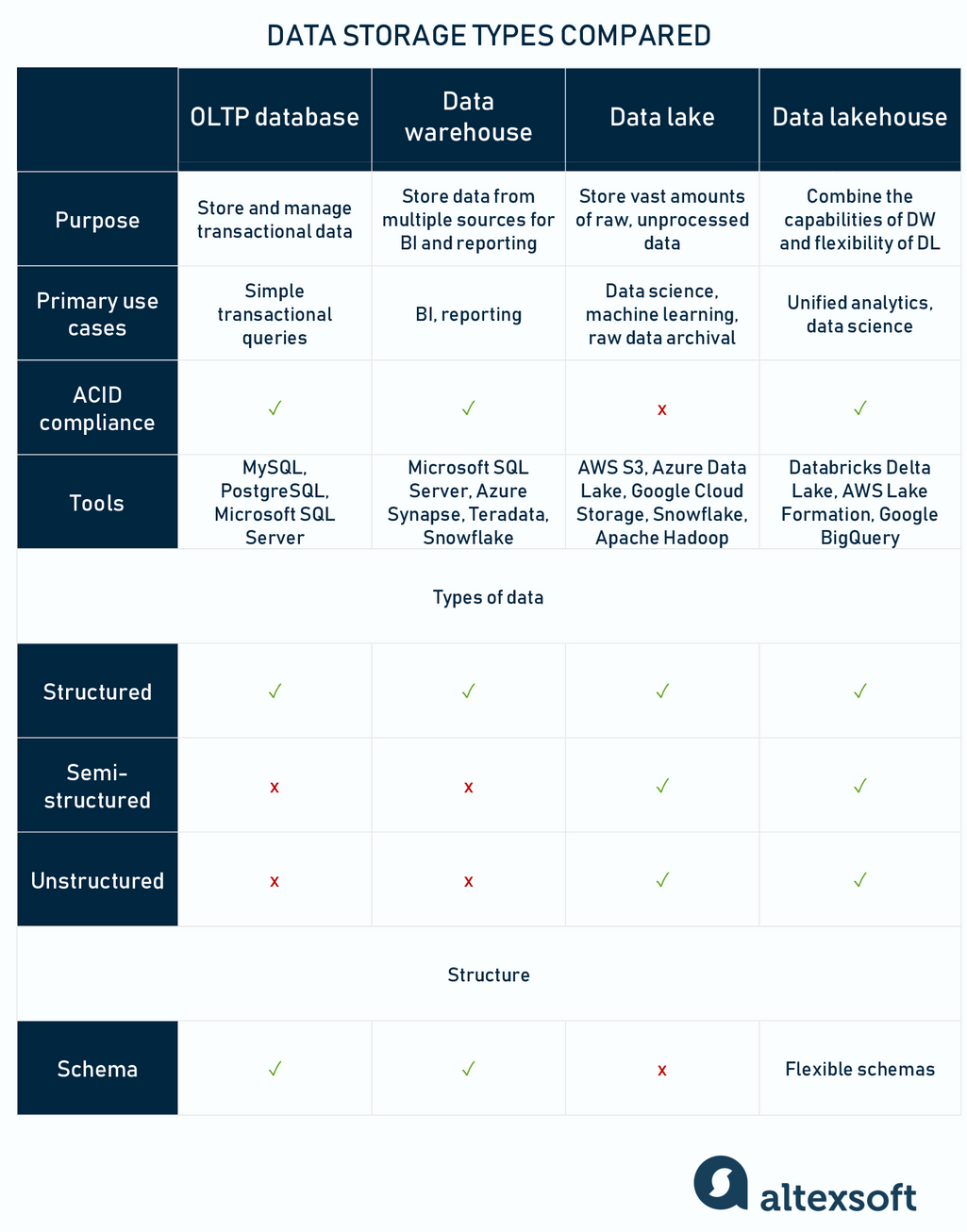

Main data storage types compared

To make things easier to understand, let’s imagine you run an eCommerce business and have to manage its data. Let’s assume your business transaction receipts, whether paper or electronic, are neatly filed and you’ve started an Excel spreadsheet—typical.

Seeing all the information in one table is convenient at first. But then you keep adding columns of customer details, products, brands, and suppliers. The rows of transactions just seem to multiply daily… At some point, you’re likely to find yourself struggling to manage what is now a bloated, clunky table.

It becomes way too hard to organize data or find what you need – let alone run any analytics. That’s when you need to optimize things.

OLTP database

A database (DB) is an organized collection of information stored electronically that can be easily retrieved and managed. And even though it might look like that spreadsheet table you used, it’s a whole different thing under the hood.

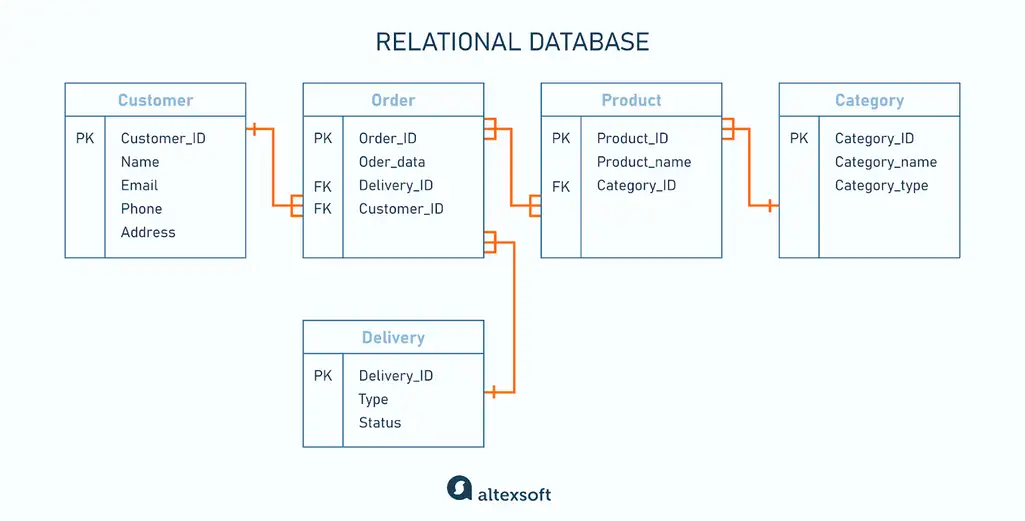

The thing is that, unlike a spreadsheet that stores and displays all the information in a single table, a database is actually a number of connected – related – tables. Which is why they're called relational databases.

Data model: fixed, normalized schema

Relational databases store structured data in tables with rows and columns with defined relationships between the tables. How the tables are related structurally is known as a schema.

For example, you have your main table with order information. But the customer details aren’t included in it. Instead, they are stored in a different table, so you only use the customer ID to record who you sold a specific item to. The same goes for products, categories, and deliveries – each with separate, related tables from which the system retrieves information.

Relational database schema

The connections between tables are maintained with the help of keys. The primary key (PK) is the unique ID of each record (table rows), while the foreign key (FK) is a reference to a primary key in a different table.

Databases allow you to organize everything efficiently and avoid redundancy. This process is called normalization. Breaking down large tables into smaller, related tables minimizes duplications, reduces space usage, and supports data integrity. Simply speaking, this keeps your data complete, consistent, and easier to manage.

Now, editing entries becomes a breeze. You don’t have to update, say, supplier contact details in all the tables. Instead, you change them once in just one table.

Another important thing worth mentioning is that relational databases support ACID.

- Atomicity ensures that if a process fails while writing data, the entire operation will be rolled back.

- Consistency relates to transactions maintaining all predefined rules and fitting into the schema.

- Isolation ensures that concurrent transactions do not interfere.

- Durability means that once a transaction has been committed, it will remain so, say, in case of power failure, crash, or other mishaps.

These key properties let you rest assured that database transactions are processed reliably, consistently, and safely.

Database management systems (DBMS) are software solutions that help manage databases and interact with the information they contain. Popular relational DBMSs are MySQL, MariaDB, PostgreSQL, and Microsoft SQL Server.

See that SQL in most names? All data storages that deal with structured data (including relational DBs) use SQL or Structured Query Language to access and manage information, hence the name.

We have a detailed overview of the main database management systems, so check it out for more information. You might also be interested in master data management.

Why use relational databases: store and manage transactional data

Relational DBs are perfect for OLTP – Online Transaction Processing. OLTP is about handling a large amount of day-to-day transactional data that changes often and must be quickly accessed or updated.

OLTP involves four basic operations: create, read, update, and delete (CRUD). Of course, those are done in the bowels of the database. Real-life user’s actions can include adding an item to the cart or updating customer details.

Unlike OLAP (Online Analytical Processing), OLTP isn’t used for in-depth analytics. Instead, it focuses on operational data—real-time, frequent, and small-scale.

What relational databases are suboptimal for: complex analytical queries

A relational database is the most widely used solution for storing operational data, recording sales transactions, and quickly retrieving any piece of information. Basically, they serve as a basis for any application in any industry that has to store data.

But as your analytics maturity grows and you want to know more about what’s going on in your company, databases become, well, ineffective. If you want to keep historical data and run an analytics query like tracking the dynamics of changes over time, relational DBs aren’t the best solution. While possible, keeping the history of your prices or other changing data points isn’t efficient for CRUD transactional operations.

But even if you use OLTP databases to run analytical queries on them, it may be way too slow.

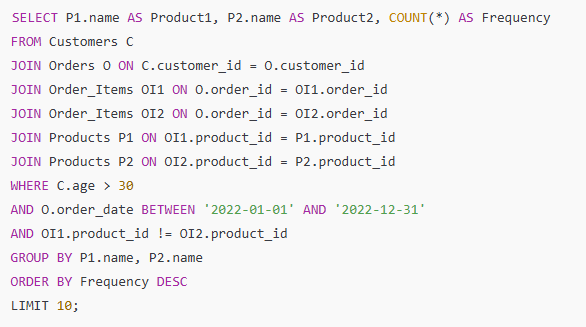

Let’s look at an example. Say, you want to find out which products were most frequently purchased together by customers over 30 years old in the past year. This would involve retrieving data from four related tables:

- Customers (contains customer details including customer ID, name, age, and location);

- Orders (includes records of each order, with an order ID, customer ID, order date, and total price);

- Order_Items (details what products were in each order, linking order IDs to product IDs and quantities); and

- Products (stores product details such as product ID, name, category, and price).

Now, the SQL query would look like this.

An example of an SQL query

So what's the problem here? What affects the database performance?

Many joins. As you can see, this query requires multiple joins (combining data from multiple tables) across four different tables. Each join increases the query execution time, especially as the amount of data grows.

Row-based storage. Relational DBs record and retrieve data row by row. So when a query accesses a row, it reads all the data for that record, even if only a few fields are needed. While there are a number of available optimizations, this still means slow processing, again, especially when dealing with large datasets.

So, if you want to drill deeper into your data, you need a warehouse.

Data warehouse

A data warehouse (DW) is a relational database for storing large volumes of structured data from multiple sources (OLTP databases, business management solutions, external datasets, flat files, etc.).

Unlike OLTP databases that are mainly optimized for transactional operations, data warehouses are designed for querying information for analytics purposes: tracking trends, defining patterns, and deriving actionable insights that support decision-making.

Let’s take a quick look under the hood and see how data warehouses work. That is, how do the numbers from your POS system get to that nice-looking dashboard with colorful graphs?

Data warehouse architecture: ETL and key layers

To give you that sought-after view into your operations, data has to go through the ETL pipeline. That stands for Extract, Transform, Load. So data is first extracted from various sources, then it’s transformed and prepared for analysis (cleaned, formatted, organized, etc.), and then loaded into the storage space.

Note that today, modern cloud-based warehouses also support the ELT process that’s typically inherent in data lakes. We’ll touch on it in the next section, but here, for the sake of simplicity, we’ll stick to the most common approach.

Okay, that might sound a bit too complex, but let’s look at the four layers of the DW architecture.

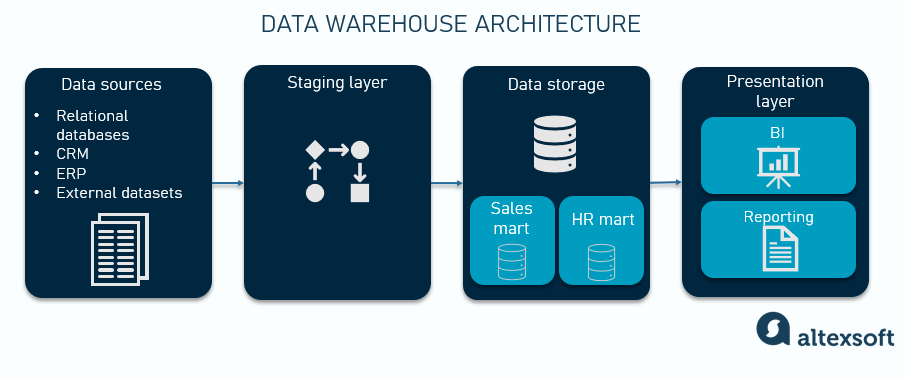

Data warehouse architecture

A data source layer is basically where data comes from.

A staging or transformation layer is a transitional area where data is prepared and organized for efficient storage and querying.

A storage layer (often referred to as the data warehouse itself) is where data is kept. This layer can include data marts – subsets of the WH built for the needs of a specific department, business area, or user group.

A presentation layer is where end-users such as business analysts can easily access stored information, usually via BI tools. Here, you can interact with data, run queries, create dashboards and visualizations, generate reports, etc.

Sounds pretty logical so far, doesn’t it?

Now, let’s zoom in a bit and look at how that DW is structured.

Data model: star, snowflake, and vault schemas with schema on write approach

In data warehouses, the structure is strictly predefined, meaning that it’s created before data comes in. This approach is called schema on write.

There are three main schema types used for DWs: star, snowflake, and vault.

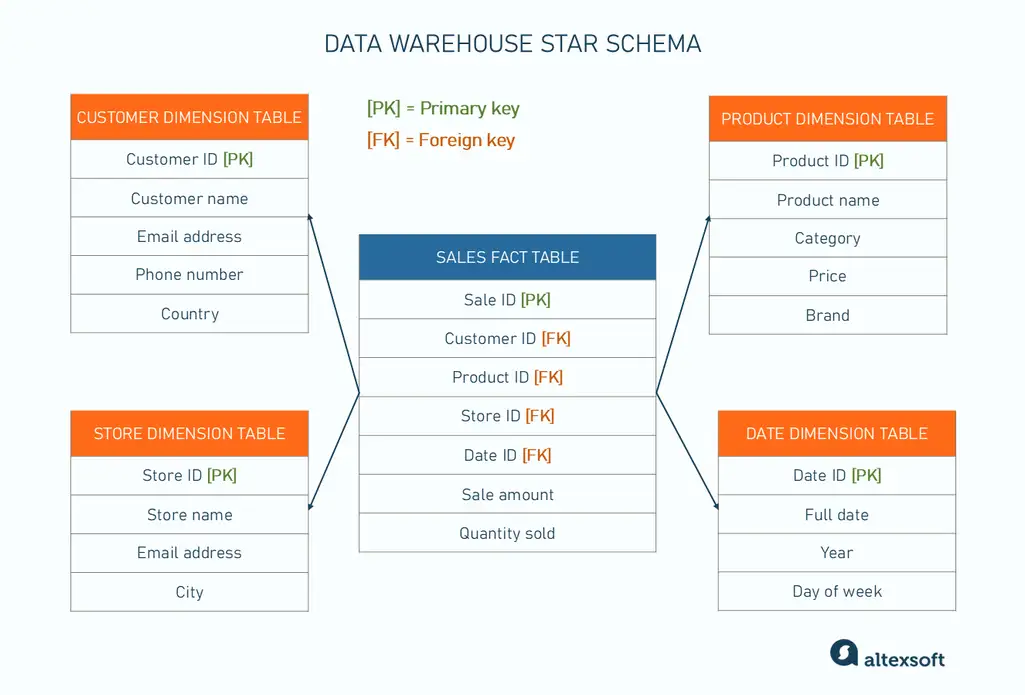

The star schema is the simplest and most widely used design. It centers around a fact table, which contains core data like sales or transactions.

The fact table connects to multiple dimension tables, such as customer, product, or date. These dimension tables provide descriptive details about our core data.

Star schema

See, warehouses use the same approach as relational databases, connecting tables through foreign keys. However, these DWs aren’t necessarily normalized, meaning that redundancy and duplications are possible. For example, instead of recording a date as a single entry, you can break it down by years, quarters, days of the week, etc.

Why is it better for analytics? You can explore your key metrics (e.g. sale amount) through the lenses of different dimensions with the minimum number of table joins.

It makes star-schema DWs faster and more efficient when retrieving information.

In addition, DWs normally read data by columns, as opposed to databases that are row-oriented. For analytics, you usually scan and aggregate data across many rows – but only a few columns. So DW’s columnar approach makes returning queries a lot faster since no unnecessary data is processed.

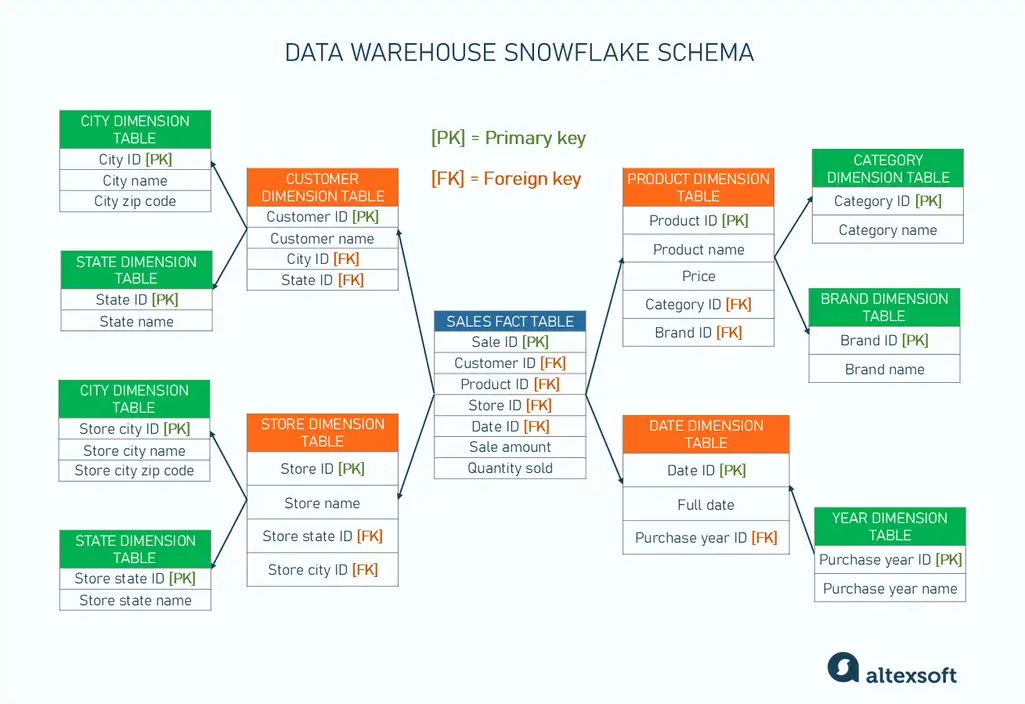

The snowflake schema is a bit more complex and detailed. Here, dimension tables are further broken down into smaller related tables. For example, a product table might be split into separate tables for categories and brands.

Snowflake schema

Such decomposition of dimension tables in the snowflake schema makes it more normalized than the star one. However, it’s still typically not as fully normalized as relational databases.

Such partial normalization reduces redundancy in dimension tables, which saves space but makes querying more complex due to more joins. So this approach balances space-efficiency and keeping data retrieval manageable.

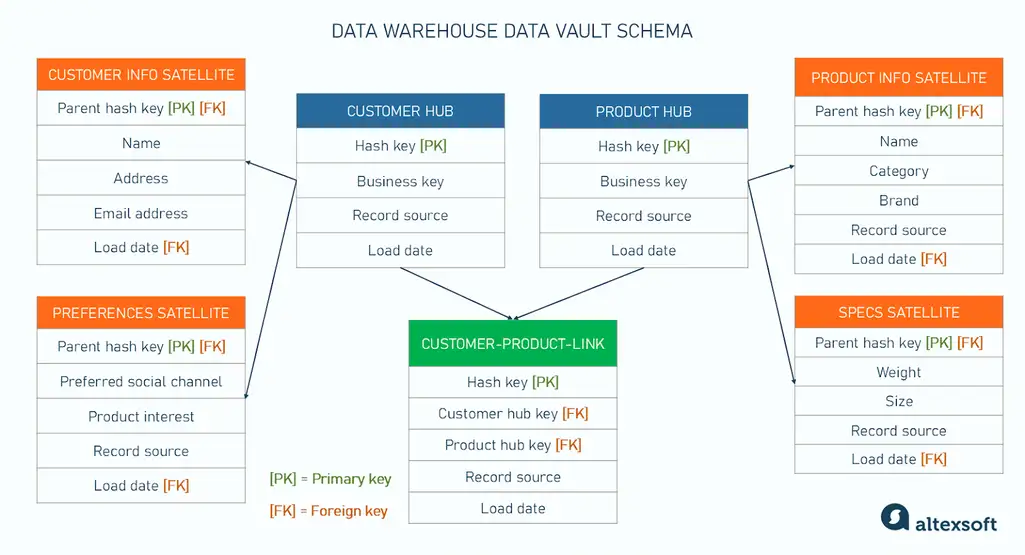

The data vault schema is designed for scalability and flexibility. It separates data into three components:

- hubs (core business entities like customers or products);

- links (relationships between entities, like orders linking customers and products); and

- satellites (detailed information about the entities or relationships).

Read more about data vault schema in our separate article.

Data vault schema

Each schema has its strengths and is suited to different needs. The star schema prioritizes simplicity and speed, the snowflake schema focuses on storage optimization, and the data vault schema is more adaptable and can better handle growing amounts of data.

As for data warehouse tools, the popular platforms are Google BigQuery, Azure Synapse, Amazon Redshift, Teradata, and Snowflake.

Read a complete overview of the main data warehouse tools in our detailed comparison article.

Why use data warehouses: store data from multiple sources for BI and reporting

A data warehouse allows you to get an in-depth view of your business operations and performance. They are excellent for BI and reporting, compiling information from different sources into digestible dashboards and detailed reports.

Also, data warehouses are designed to keep historical records and track changes over time. They enable complex queries and analyses on this data, such as, for example, defining a product's seasonal sales trends.

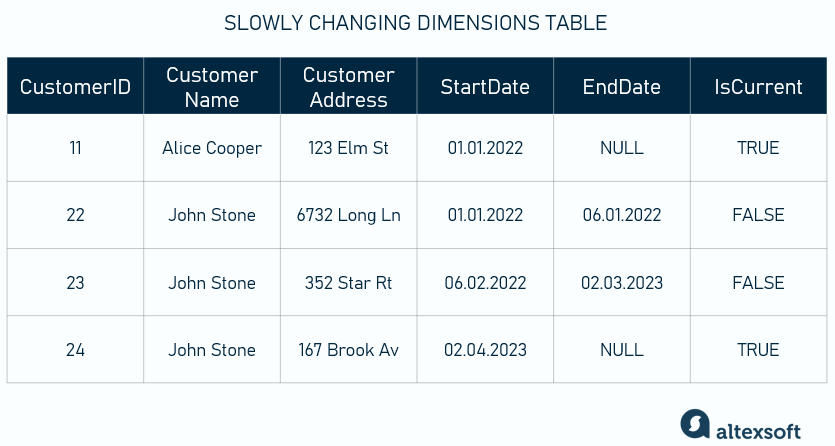

It’s done with the help of slowly changing dimensions (SCDs) tables. These refer to those dimensions whose attributes change over time, but the changes are rare and irregular. Some examples are customer contact details or product prices.

How can SCDs be tracked in a DW

There are several approaches to SCDs, for example, you can add rows, columns, or separate tables to keep historical data.

What data warehouses are suboptimal for: storing unstructured data, streaming analytics, ML

So now you can quickly calculate your sales volume by customer, identify low-stock items, or rate your best-selling products. And all your reports are now duck soup. What else might you need?

Well, data warehouses are really powerful, but they can’t be used for all purposes.

Structured data only. By its nature, a DW can’t handle unstructured data (e.g., images, social media posts) and semi-structured data (e.g. JSON). So if want to work with all data types, you need a different solution.

No real-time data processing. DWs are generally designed for batch processing, so they aren’t optimized for streaming data from, say, an IoT network. That said, they can be a part of the real-time analytics pipeline where streaming data is first loaded into the lake and then transformed, put into a warehouse, and analyzed.

Potentially limited datasets for machine learning. While DWs can be used for training machine learning models, there’s always a risk of having insufficient training data since you only load predefined data from certain sources. Sometimes, it’s just not enough for a comprehensive training dataset. So if you want to dive deep into data science, data lakes are generally a better solution.

To know more about training ML models, read about data collection and data preparation in our dedicated posts.

Data lake

A data lake (DL) is a storage system designed to hold vast amounts of raw, unprocessed data. That’s where big data comes into play. A DL supports structured, semi-structured, and unstructured formats, which makes it a versatile repository for in-depth analysis and machine learning pipelines.

In a retail business, you might have unstructured data of, for example, customer reviews, delivery route maps, posts from social media platforms, footage and images of your products – all that will sit in the data lake, waiting to be analyzed later.

While DWs usually contain essential operational data for BI and reporting, a DL is, well, like a jam-packed closet where you have everything you might need in the future, even if you don’t know how you’ll use it yet.

Data model: no fixed schema (schema on read approach)

Unlike relational DBs and DWs, data lakes don’t have a rigid schema-based model. Imagine that instead of putting your clothes neatly into separate drawers and shelves, you just toss everything in a big pile in your closet – together with shoes, bags, toys, and sometimes even tableware or food leftovers. That’s pretty much what a data lake looks like.

Data lakes are like some people's desktops. Source: r/mildlyinfuriating on Reddit

Such a flat data environment is called object storage. And this approach to organizing information is called schema on read meaning that data isn’t allocated in a predesigned structure (you can tell, right?!) – and the data model is applied upon retrieval.

That’s why data lakes, unlike DWs, are primarily built for the ELT processes when data is transformed after it’s loaded into the repository (extract, load, and only then transform). On one hand, it allows for more flexibility in storing a variety of formats, but on the other, it requires more effort in managing the storage and ensuring data quality.

You might be wondering how it is possible to find anything in such a mess. In data lakes, metadata – or data about data – helps locate the necessary information.

Metadata can include size, date, file format, tags, or any other contextual description of data you store – and it’s stored in a more organized and structured way. Using these metadata labels, you can find that old picture of your best-selling item.

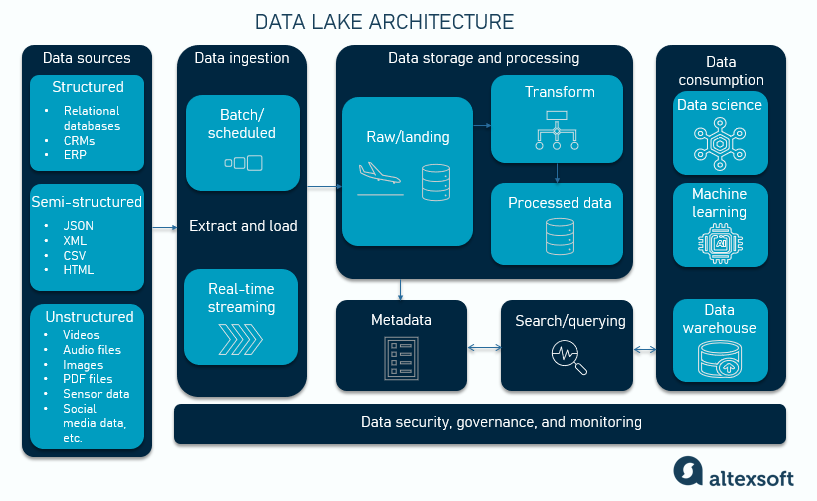

So let’s take a closer look at the common data lake architecture components.

Data lake architecture

Data lakes include more layers than data warehouses, though some of them are the same.

Data lake architecture

The data sources layer includes all the internal and external systems that information is gathered from.

A data ingestion layer is a transition stage responsible for smooth data flow (in batch or real-time modes) from the sources to the storage.

A data storage and processing layer has several zones or sections:

- where raw data is kept,

- where data is transformed or prepared for further use, and

- where prepared data sits post-transformation.

A metadata layer is that structured catalog of data descriptions that helps you find and retrieve the needed information from the storage. Metadata management tools automatically capture and categorize those descriptions into an organized inventory of data assets.

A query engine is a tool that basically allows users to access data in storage. It queries the metadata catalog, searches the repository, and retrieves exactly the requested information for further usage.

A data consumption layer is similar to the presentation layer in the DW. That’s where we mortal people can interact with data via BI tools.

An overarching layer of governance, security, monitoring, and stewardship is optional, though often implemented to enhance compliance and data usage efficiency.

Visit a separate post about data governance to better understand what it's about.

The popular data lake platforms are AWS S3, Azure Data Lake, Google Cloud Storage, Snowflake, and Apache Hadoop.

Why use data lakes: store vast amounts of raw, unprocessed data for further processing

As we said, a data lake is used for storing all sorts of data in massive volumes which makes it a useful tool in itself. You might not know how to use available information now, but at some point in the future, it has the potential to help you solve a business problem.

Also, if you want to build a machine learning pipeline, data lakes are a go-to solution. For example, you might want to get deeper insights into customer behavior, optimize internal operations, develop a recommender system, or predict demand trends. That’s when you’ll need massive volumes of diverse data to train your model with.

In addition, typically, data lakes include analytical sandboxes that are like separate playgrounds where data scientists can experiment with data without the risk of compromising the entire dataset.

What data lakes are suboptimal for: precise analytics

While data lakes unlock a whole sea of possibilities, there’s always a ‘but’.

Data lakes generally do not adhere to ACID properties, meaning that information in the storage can be incomplete or corrupted. That’s a limitation for precise analytics where the reliability of data operations is critical (e.g., BI and reporting).

That said, some modern solutions provide ACID guarantees.

Obviously, classic data lakes have limited data management functionality, given the diversity of data types stored. So a typical approach is building a two-tier architecture, i.e., a data lake for storing raw data + a data warehouse for keeping refined, structured information and running analytics. That led to the emergence of a new solution, blending the best of both worlds.

Data lakehouse

A data lakehouse combines the structured querying of a DW and DL’s flexibility with raw data. So instead of building a tangled bundle of multiple separate tools, you can now go with a single solution that serves all your needs. It’s cheaper, more organized, and more efficient.

Data model: flexible schemas

A data lakehouse allows both schema-on-write for some use cases and schema-on-read for others. That flexibility covers any purpose you might need: efficient querying of organized data, real-time analytics, and raw data storage.

Data lakehouses support the ACID principles, so now you can be sure that your datasets are reliable for analytics and reporting. Also, there’s a schema enforcement approach meaning that a predefined schema will be applied to data before storing it. It’s used together with a schema evolution approach which means that schemas are flexible and can dynamically adjust to changing data needs.

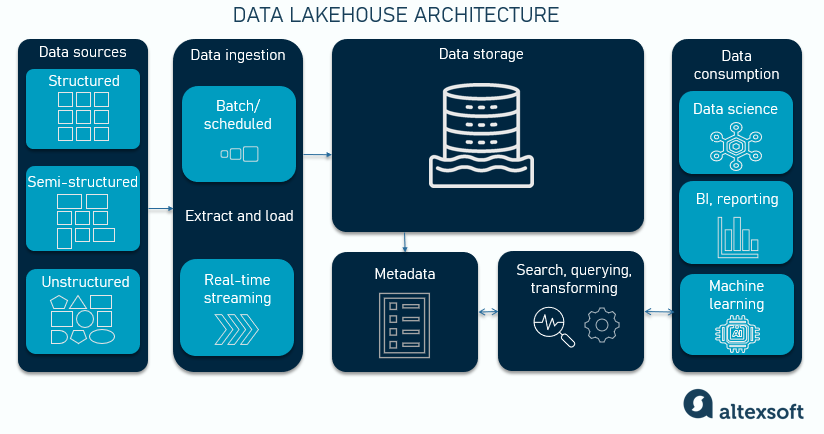

Data lakehouse architecture

The data lakehouse architecture is actually pretty similar to that of a data lake.

Data lakehouse architecture

The ingestion layer is about pulling data from those sources and delivering it to the storage layer. There, various protocols are used to unify batch and streaming data.

The storage layer is essentially a data lake with that flat, object storage approach we discussed above.

A metadata layer contains a detailed, structured catalog of metadata for all objects in the lake storage.

A query engine is a powerful tool capable of complex query execution. It handles all data types and formats and can process both SQL queries and ML tasks efficiently.

The UI layer includes user-facing tools where we can interact with data.

Some well-recognized brands in the world of data lakehouses are Databricks Delta Lake, AWS Lake Formation, Google BigQuery, and Cloudera Apache Iceberg.

Why use data lakehouses: сombine the structured querying capabilities of DWs with the flexibility of DLs

Data lakehouses allow you to run queries combining several data types (e.g., historical transactional data and real-time unstructured data) which was previously difficult to achieve.

For example, you can merge sales data with customer reviews or weblogs to analyze the correlation between revenue trends and underlying user behavior.

What data lakehouses are suboptimal for

So a lakehouse seems to be that long-sought magic bullet that solves all the problems of humanity, from people cutting in line to climate change. But is it really?

The key thing to remember is that this concept is fairly new, so the tooling, expertise, and best practices are only being developed. That said, we still don't know for sure whether the data lakehouse is more cost-efficient and high-performing in the long run, compared to a more typical DL+DW architecture.

As of today, the data lakehouse has proven its worth as a viable component of the modern data stack.

But to build the optimal data architecture that will best serve your development goals, you must clearly define your data sources, business needs, and available resources. And who knows – you might find out that Excel isn’t that bad after all 🙂

Maria is a curious researcher, passionate about discovering how technologies change the world. She started her career in logistics but has dedicated the last five years to exploring travel tech, large travel businesses, and product management best practices.

Want to write an article for our blog? Read our requirements and guidelines to become a contributor.