There's no denying it: ChatGPT, OpenAI's groundbreaking AI, is the talk of the tech world. This impressive Large Language Model is altering the landscape across many sectors, with the travel industry emerging as a prime example of its transformational power.

ChatGPT continues to reshape industries, redefining how we interact with technology. In this article, we'll uncover the mystery of this AI marvel by explaining its foundation in prompt engineering and showcasing its growing value in the travel sector.

We'll highlight our company's experience demonstrating how we've used ChatGPT within travel technology. This practical exploration can help spark your imagination on how this cutting-edge technology could redefine your field. Remember, we're still experimenting and learning more every day. So the results we share here are just what we've discovered so far.

If the technical details of large language models don't interest you, you can skip directly to the use cases section.

Large language models and transformers in a nutshell

Language models are a type of machine learning model trained to estimate the probability of a word sequence. They predict the next likely word from the surrounding text, in effect learning how humans use language.

For example, in the sentence: "John went to the store and bought some apples because he wanted to bake a [...]," a well-trained language model would anticipate that the missing word could be "pie." This is because the context provided by the earlier part of the sentence ("bought some apples") suggests that John might be preparing to make an apple pie. Of course, there are other possibilities, such as "cake" or "tart," but "pie" is an everyday culinary use for apples, making it a likely prediction.

Nowadays, language models are the backbone of natural language processing (NLP), the branch of AI that enables machines to analyze, understand, and generate human language. Trained primarily on extensive text datasets, they use learned patterns to predict the next word in a sentence and produce contextually appropriate, grammatically sound text.

Types of language models

Language models come in two main categories: statistical models and those based on deep neural networks.

Statistical language models use patterns in data to predict word sequences. The simplest probabilistic approach relies on n-grams: sequences of n consecutive words, where n is a number greater than zero. N-gram models come in different types, such as unigrams, bigrams, and trigrams, and each estimates the probability of a word given the words that precede it. The "n" in "n-gram" is the length of the word sequence the model counts, so the model uses the previous n-1 words as context. For instance, a bigram (2-gram) model looks at one previous word to predict the next one, while a trigram model looks at two. While these models are efficient, they fail to capture long-range dependencies between words.
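To make the "previous n-1 words" idea concrete, here is a minimal bigram model in Python trained on a tiny, made-up corpus. It estimates the probability of the next word from counts of which word followed the previous one. The `<s>` start-of-sentence marker and the function names are illustrative, and the sketch skips smoothing for unseen word pairs, so treat it as an illustration of the counting idea rather than a usable language model.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # "<s>" marks the sentence start so the first word also has a context.
        tokens = ["<s>"] + sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probabilities(counts, prev_word):
    """Estimate P(next | prev) by relative frequency (maximum likelihood)."""
    followers = counts.get(prev_word.lower(), Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()} if total else {}

# A hypothetical three-sentence training corpus.
corpus = [
    "she wanted to bake a pie",
    "he wanted to bake a pie",
    "they wanted to bake a cake",
]
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "a"))
```

Because "a" is followed by "pie" twice and by "cake" once, the model assigns "pie" a probability of 2/3 and "cake" 1/3. It only ever sees one word of context, which is exactly why such models miss long-range dependencies like "bought some apples" earlier in the sentence.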

Neural language models use deep neural networks to predict the likelihood of word sequences. They handle large vocabularies and deal with rare words by relying on distributed representations (embeddings), in which each word is represented as a dense vector of numbers. They capture context better than statistical models and can handle more complex language structures and longer-range word dependencies.

The neural architecture most relevant to this article is the transformer.

Transformers

Transformers are an advanced type of deep learning architecture. They excel at understanding context and interpreting meaning by analyzing the intricate relationships within sequential data, such as the words in a text string. As the name suggests, they can effectively transform one sequence into another, for example, a sentence in one language into its translation.

Distinct from Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) models, which process data sequentially, transformers analyze the entire sequence simultaneously. This attribute makes them parallelizable, accelerating the training process and enhancing efficiency.

Transformer architecture

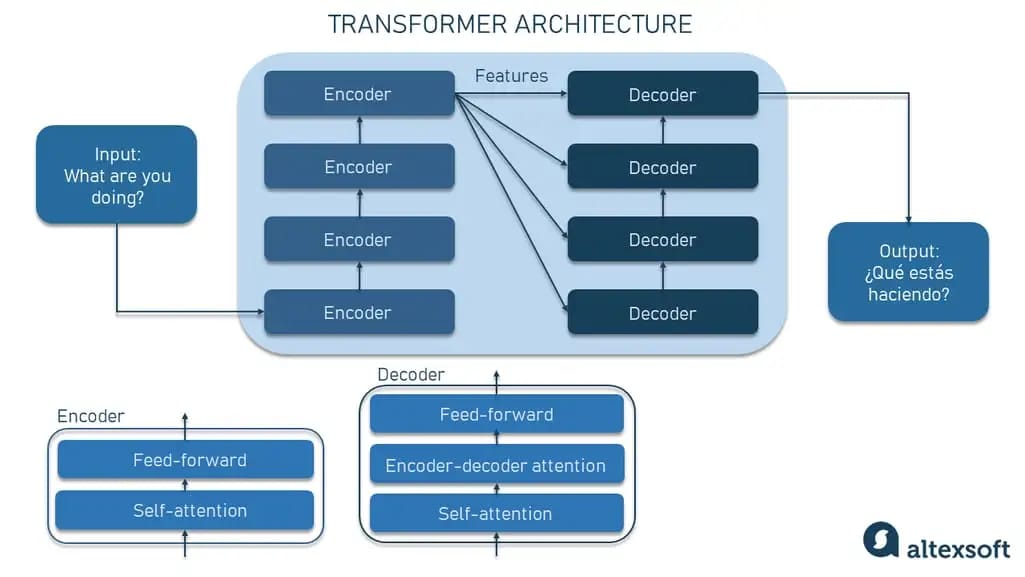

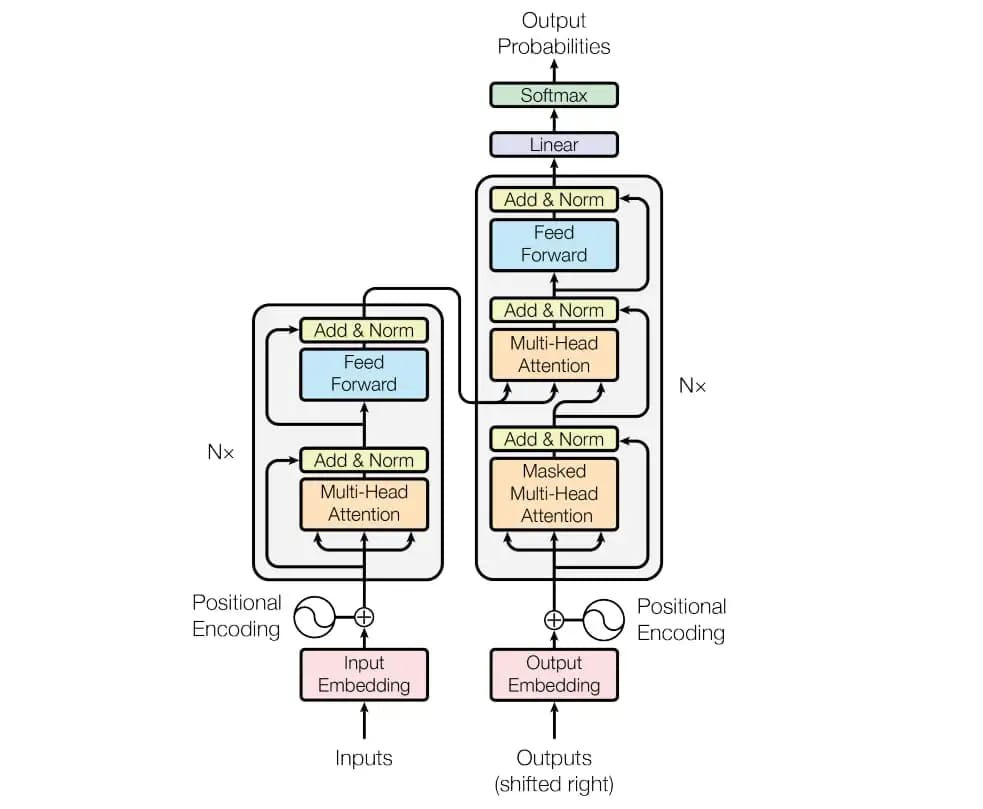

The critical components of transformer models include the encoder-decoder architecture and so-called attention mechanisms.

Encoder-decoder architecture. This dual structure is fundamental to transformer models. The encoder ingests an input sequence (usually text) and transforms it into vector representations, often called embeddings, that capture each word's meaning and its position in the sentence. The decoder receives these encoder outputs, combines them with the output generated so far, and produces the result. Both the encoder and the decoder consist of multiple identical layers, each containing a self-attention mechanism and a feed-forward neural network. Each decoder layer also contains an encoder-decoder attention mechanism, which lets it focus on the relevant parts of the encoder's output.
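How does position get into those vectors? The original transformer paper, "Attention Is All You Need," adds a sinusoidal positional encoding to each token embedding: dimension 2i of the encoding for position pos is sin(pos / 10000^(2i/d_model)), and dimension 2i+1 is the matching cosine. The plain-Python sketch below implements that formula; the 4-dimensional embedding is made up for illustration, and real models use hundreds or thousands of dimensions (GPT models, for their part, learn position embeddings instead of using this fixed formula).

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal positional encoding from "Attention Is All You Need".

    Even dimensions use sine, odd dimensions use cosine, and each pair of
    dimensions (2i, 2i+1) shares one frequency that shrinks as i grows.
    """
    encoding = []
    for dim in range(d_model):
        pair_index = dim // 2  # dims 2i and 2i+1 share the same frequency
        angle = position / (10000 ** (2 * pair_index / d_model))
        encoding.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
    return encoding

# Each token's input vector = its embedding + the encoding for its position.
embedding = [0.1, -0.2, 0.05, 0.3]  # hypothetical 4-dimensional token embedding
position = 3                         # the token is the 4th word in the sentence
model_input = [e + p for e, p in zip(embedding, positional_encoding(position, len(embedding)))]
```

Because the same word at a different position receives a different encoding, the encoder can tell "hotel near the beach" from "beach near the hotel" even though both contain the same words.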

Attention and self-attention mechanisms. The attention mechanism is the core of transformer systems. It empowers the model to concentrate on specific input sections while making predictions. By assigning weights to input elements corresponding to their predictive significance, the attention mechanism facilitates a weighted summary of the input, which guides the prediction generation.

Self-attention is a variant of the attention mechanism in which a sequence attends to itself: when building the representation of each word, the model weighs every other word in the same input. For example, in "The hotel was full because it was popular," self-attention helps the model link "it" to "hotel." Stacking many such layers lets the model capture increasingly complex relationships within the data.
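The transformer paper defines this weighting as scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, where queries (Q), keys (K), and values (V) are derived from the input vectors. The dependency-free Python sketch below computes it for a toy sequence. As a simplifying assumption, it uses the input vectors directly as queries, keys, and values; a real transformer first multiplies them by learned weight matrices and runs several such heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention over a list of token vectors.

    Simplification: queries, keys, and values are the input vectors
    themselves instead of learned projections W_Q x, W_K x, and W_V x.
    """
    d = len(x[0])
    outputs, all_weights = [], []
    for query in x:
        # Score this token against every token in the same sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        weights = softmax(scores)
        # The output is the attention-weighted sum of the value vectors.
        outputs.append([sum(w * value[j] for w, value in zip(weights, x)) for j in range(d)])
        all_weights.append(weights)
    return outputs, all_weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three hypothetical 2-d token vectors
out, weights = self_attention(tokens)
```

Each row of `weights` shows how much one token "looks at" every token in the sequence, including itself. Here the first token attends equally to itself and to the third token (both share its first component) and less to the second, which is the weighted summary the text above describes.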

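The transformer architecture runs several self-attention operations, called attention heads, in parallel (multi-head attention). Each head can focus on a different kind of relationship, which helps the model discern complex relationships between input and output sequences.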

The transformer-model architecture. Source: The “Attention is all you need” paper by Google

Regarding training, transformers utilize semi-supervised learning. Initially, they undergo unsupervised pretraining using a substantial unlabeled dataset to learn general patterns and relationships. Subsequently, they fine-tune through supervised training on a smaller, task-specific labeled dataset, boosting their performance on the assigned task. This two-stage training approach optimizes the utility and specificity of transformer models.

Read our dedicated article about large language models (LLMs) for more detailed information.

For now, we’ll focus on the best-known family of transformer models, the Generative Pre-trained Transformer (GPT), and specifically on its conversational offshoot, ChatGPT.

What is ChatGPT?

ChatGPT is an advanced AI chatbot that leverages the power of machine learning to understand and generate human-like text. Developed by OpenAI, an artificial intelligence research laboratory, and officially launched in November 2022, it's based on transformer architecture, specifically the Generative Pretrained Transformer (GPT) models. Over time, it has evolved significantly with the release of various versions, the latest being GPT-4 at the time of this writing.

The models behind ChatGPT have been trained on a large corpus of diverse Internet text. GPT-3, the predecessor of the GPT-3.5 models behind the original ChatGPT, has 175 billion parameters; OpenAI has not disclosed the parameter count of GPT-4. Each parameter is a learned numeric weight, and together they encode the patterns of language that the model uses to generate text responses.

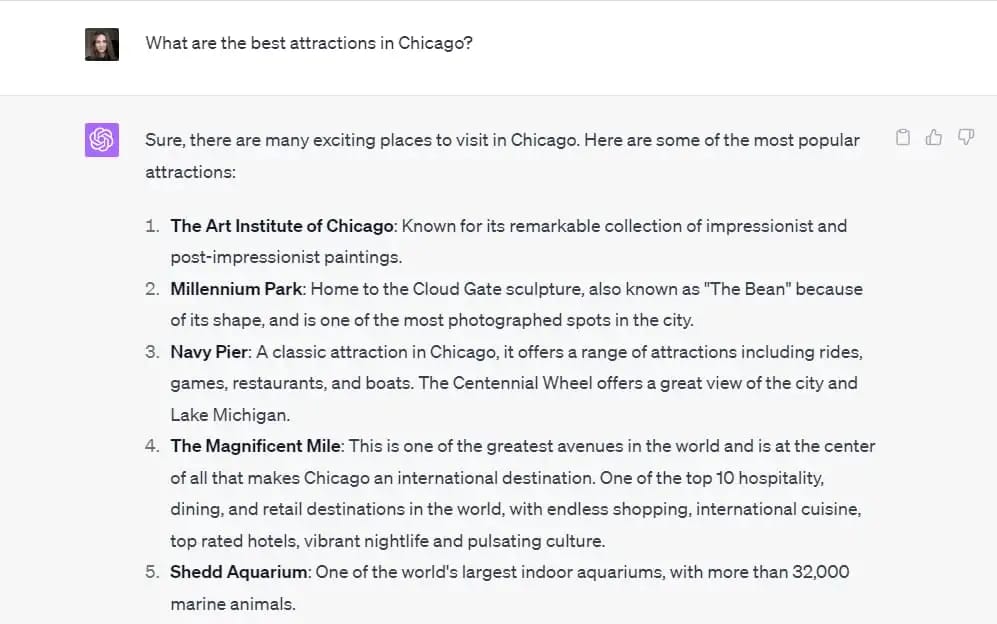

For example, you could ask ChatGPT about the best attractions in a specific city (say, Chicago), and it would respond with a list of them, including information on why each might be interesting.

ChatGPT can be a good travel guide suggesting places to visit in a particular area.

Modern chatbots like ChatGPT excel in conducting multi-turn conversations, a significant evolution from the older models that primarily handled single-turn interactions. In a single-turn conversation, the model responds to a prompt without considering any prior interaction — it's a one-time exchange, much like asking a standalone question and getting an answer. In contrast, multi-turn conversations involve back-and-forth exchanges where the model considers the history of the conversation to generate appropriate responses. ChatGPT, in its advanced design, accepts a sequence of messages as input and generates the following message in the sequence as output, preserving the context and maintaining the flow of the dialogue.
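In OpenAI's chat format, that sequence is a list of messages, each with a `role` (`system`, `user`, or `assistant`) and its `content`. The API does not remember earlier calls, so the application itself keeps the history and resends it with every request. The minimal Python sketch below shows one way to maintain that history; the helper name `add_turn` and the sample texts are illustrative, not part of any OpenAI library.

```python
VALID_ROLES = {"system", "user", "assistant"}

def add_turn(history, role, content):
    """Append one message to the conversation history and return the history."""
    if role not in VALID_ROLES:
        raise ValueError(f"unexpected role: {role}")
    history.append({"role": role, "content": content})
    return history

# The system message sets the assistant's behavior for the whole conversation.
conversation = [{"role": "system", "content": "You are a helpful travel assistant."}]
add_turn(conversation, "user", "What are the best attractions in Chicago?")
# The model's reply (placeholder text here) is stored so later turns keep context.
add_turn(conversation, "assistant", "Millennium Park, the Art Institute of Chicago, Navy Pier...")
# This follow-up only makes sense because the earlier turns are sent along with it.
add_turn(conversation, "user", "Which of those are free to visit?")
```

Sending the full `conversation` list with the last user message is what lets the model resolve "those" to the attractions it listed a turn earlier.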

The great news is that OpenAI has opened up its GPT models to the broader development and research community through its API, subject to its terms of use. This allows organizations and businesses to harness GPT in their own services and products as long as they operate within the guidelines set forth by OpenAI. The following section covers the process of integrating the ChatGPT API in more detail.

ChatGPT API integration

The common way of using ChatGPT is via its web interface, but you can also integrate its capabilities into your application through the API.

OpenAI's APIs enable developers to interact with models like GPT-4, GPT-3.5-Turbo, and others and can be used in multiple contexts, including the chat format and single-turn tasks. API integration requires some setup and understanding of the underlying technology, but it offers greater flexibility and control.

At the time of this article’s publication, the available models that can be accessed through APIs include:

- modern GPT-4 and GPT-3.5-Turbo, and

- legacy models like ada, babbage, curie, and davinci.

Each model offers unique features and capabilities, allowing developers to choose the best fit for their specific requirements.

Developers can use any programming language to interact with OpenAI's APIs. While Python is a popular choice due to OpenAI's Python client library, HTTP requests can be made using any other language, ensuring the APIs cater to diverse development needs.

Pricing for API usage (GPT-3.5 Turbo model) is set at $0.002 per 1,000 tokens. Here, "tokens" refer to units of text processed by the model, accounting for both the input and output sequences. At the moment, those who want to try GPT-4 API must join the waitlist.
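Because both input and output tokens count, the cost of a request is (prompt tokens + completion tokens) / 1,000 × price. The short Python sketch below applies the $0.002 rate quoted above to a hypothetical workload; the token counts and request volume are made-up figures, prices change over time, and newer models may bill input and output tokens at different rates.

```python
PRICE_PER_1K_TOKENS_USD = 0.002  # GPT-3.5 Turbo rate quoted above; check current pricing

def estimate_cost_usd(prompt_tokens, completion_tokens, price_per_1k=PRICE_PER_1K_TOKENS_USD):
    """Estimate request cost when input and output tokens share one rate."""
    return (prompt_tokens + completion_tokens) / 1000 * price_per_1k

# Hypothetical request: a 1,200-token prompt plus a 300-token answer.
cost = estimate_cost_usd(1200, 300)  # 1,500 tokens, about $0.003
monthly = cost * 100_000             # 100,000 such requests, about $300
```

Long prompts, such as instructions that include a full destination list, are resent with every call, so prompt length often dominates the bill.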

Also, it's pretty easy to integrate with OpenAI APIs since they are modern REST APIs with detailed API reference documentation that can be found on OpenAI's official website.

In our endeavors at AltexSoft, we've had the opportunity to incorporate ChatGPT functionalities into some projects. More on them further in the text.

Reflecting on the integration process, our Solution Architect, Alexey Taranets, shared his experience: "The wealth of documentation made integrating ChatGPT a straightforward task. We encountered no technical hurdles during the integration; it essentially boiled down to a single API endpoint. You just generate the API Key, and off you go, send requests.”
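To illustrate what "a single API endpoint" looks like in practice, the sketch below prepares a request to OpenAI's Chat Completions endpoint (`https://api.openai.com/v1/chat/completions`) using only Python's standard library instead of OpenAI's client library, so the raw request shape is visible. The API key is sent as a bearer token, the body names a model and a list of messages, and the reply text sits under `choices[0].message.content` in the JSON response. Retries, error handling, and the 30-second timeout are simplifications or assumptions, not recommended settings.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

def build_chat_request(messages, api_key, model="gpt-3.5-turbo"):
    """Prepare (but do not send) a POST request to the Chat Completions endpoint."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",  # the generated API key
            "Content-Type": "application/json",
        },
        method="POST",
    )

def send_chat_request(request, timeout=30):
    """Send the request and return the text of the model's first reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

A call would then look like `send_chat_request(build_chat_request(messages, os.environ["OPENAI_API_KEY"]))`, where `messages` is a list of role/content dictionaries and the key is read from an environment variable rather than hard-coded.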

The operative part of utilizing ChatGPT effectively lies in crafting the appropriate prompts. So let’s elaborate more on this.

Read our dedicated article about LLM API integration to learn more.

Prompt engineering

Prompts are, essentially, the means by which GPT models are programmed. By providing specific instructions or examples of a task completed successfully, users can direct the AI's outputs. The model picks up on patterns in the provided text and continues them in its response. This happens only within the current request, though: prompts don't retrain the model, so the instructions and examples must be sent again each time you want the same behavior.
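Supplying "examples of a task's successful completion" is often called few-shot prompting. In the chat format, each example can be written as a user message followed by the assistant answer you want, so the model sees the pattern before the real input. The Python sketch below builds such a message list; the classification task, its three categories, and the sample requests are hypothetical.

```python
def build_few_shot_messages(instruction, examples, new_input):
    """Encode a task as an instruction plus worked examples.

    Each (input, output) pair becomes a user message followed by the desired
    assistant reply; the model is expected to continue the same pattern.
    """
    messages = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": new_input})
    return messages

messages = build_few_shot_messages(
    "Classify the traveler's request as BEACH, CITY, or ADVENTURE. Answer with one word.",
    [
        ("Somewhere with white sand and calm water", "BEACH"),
        ("I want museums and great restaurants", "CITY"),
    ],
    "Looking for rafting and mountain hiking",
)
```

The examples fix both the vocabulary (three labels) and the format (one word), which makes the reply easy to handle in code.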

“When implementing a GPT model, we spent most of our time on refining the request to it,” confirms Alexey Taranets. “We essentially had to instruct ChatGPT to function as an OTA API handler. We'd say, 'Here's our list of destinations, and we want you to answer in this specific format. If you can't find results, respond in this alternative format.' So our main task was indeed crafting these request instructions."

Beyond the art of crafting effective prompts to extract the desired output from AI language models like ChatGPT, prompt engineering involves understanding how the model works, what limitations it has, and how to use its strengths.

Glib Zhebrakov, Head of the Center of Engineering Excellence Technologies at AltexSoft, shares some thoughts on writing prompts successfully: “There are three key aspects here. First, you shouldn’t expect your first prompt to work perfectly. Second, it may take some time, trial, and error to get the results you are looking for. And last but not least, keep iterating as much as needed.”

Another critical aspect here is chained prompting, a technique where multiple instructions or queries are issued to the model in a sequence to guide its outputs toward a particular desired outcome progressively.

The idea behind chained prompting is that sometimes a single instruction may not be sufficient for the model to produce the exact output you desire, especially when dealing with more complex queries or tasks. In such cases, you can break down your overall goal into smaller, manageable tasks and then feed these tasks to the model in a particular order to gradually steer the model towards the intended result.

For instance, suppose you want to get a summary of a complex scientific text from ChatGPT. A single prompt like "Summarize the text" might not yield a satisfactory result. Instead, you can use a chain of prompts:

- ask the model to identify the key points in the text,

- ask it to explain these points in simpler terms, and finally,

- ask it to combine these simplified points into a concise summary.
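The three-step chain above can be sketched in Python as a loop that sends one instruction at a time and keeps every answer in the conversation history, so each step builds on the previous one. The function `run_prompt_chain` is hypothetical, and `ask` stands for whatever function actually calls the model (for example, a wrapper around an API request); passing it in keeps the chaining logic separate from the network code.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

def run_prompt_chain(text: str, steps: List[str], ask: Callable[[List[Message]], str]) -> str:
    """Feed instructions one at a time, keeping every answer in the history.

    `ask` receives the full message list and returns the model's reply text.
    """
    history: List[Message] = [{"role": "user", "content": f"Here is the text:\n{text}"}]
    reply = ""
    for step in steps:
        history.append({"role": "user", "content": step})
        reply = ask(history)  # the model sees all earlier steps and answers
        history.append({"role": "assistant", "content": reply})
    return reply  # the answer to the final step, i.e., the summary

SUMMARY_CHAIN = [
    "Identify the key points in the text.",
    "Explain each of these points in simpler terms.",
    "Combine the simplified points into a concise summary.",
]
```

Calling `run_prompt_chain(scientific_text, SUMMARY_CHAIN, ask)` returns only the final summary, but the intermediate answers stay in the history, which is what lets the last step refer to "the simplified points."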

By mastering prompt engineering, you can turn the power of language models into exciting projects with many opportunities. Below, we cover some of the existing ones.

Popular travel industry use cases of implementing ChatGPT

The travel industry is rapidly harnessing the potential of AI models like ChatGPT to streamline operations, enhance customer experience, and unlock new avenues for growth. Let's delve into some of the most prevalent use cases of ChatGPT in travel to understand better how this innovative AI model is reshaping the industry.

Personalization and trip recommendations

Personalization and trip planning have become essential aspects of the travel experience, and ChatGPT is starting to play a pivotal role in both. It not only picks up on the preferences, moods, and behaviors users express in conversation to offer personalized travel recommendations but also assists with complex tasks such as trip planning.

Expedia, one of the world's leading online travel platforms, is a prime example of leveraging ChatGPT's capabilities for personalization and trip planning. Recently, Expedia integrated ChatGPT into its mobile application, launching a conversational trip-planning experience.

Upon opening the Expedia app, users are prompted: “Planning a new escape? Let’s chat. Explore trip ideas with ChatGPT.” It marks the onset of an open-ended conversation where users can get personalized recommendations on destinations, hotels, modes of transportation, and attractions based on their interactions with the chatbot.

Peter Kern, Vice Chairman and CEO of Expedia Group, commented in a press release, "By integrating ChatGPT into the Expedia app and combining it with our other AI-based shopping capabilities, like hotel comparison, price tracking for flights and trip collaboration tools, we can now offer travelers an even more intuitive way to build their perfect trip."

Also, Expedia has developed a plugin for ChatGPT, available along with other plugins on the OpenAI website. The plugin acts as a virtual travel agent capable of recommending flights and hotels.

Chatbots and customer service

In the travel industry, the role of chatbots and customer service has been elevated significantly with the introduction of models like ChatGPT. These advanced conversational AI systems transform how travel companies interact with their customers, providing instant responses to inquiries and offering personalized recommendations.

For instance, travel search engine KAYAK has taken advantage of ChatGPT's capabilities to augment its customer service experience. With the integration of ChatGPT, KAYAK has become a more efficient and responsive virtual travel assistant. Now, users can ask travel-related queries in natural language and receive tailored responses based on their specific criteria and KAYAK's extensive historical travel data.

Travel data analytics

Another fascinating use case of ChatGPT in the travel sector is data analytics. With its strong natural language capabilities, ChatGPT can help analyze large volumes of unstructured text such as customer reviews and social media feedback. This enables businesses to glean valuable insights into customer behavior and market trends that support strategic decision-making.

For instance, airlines and hotel chains can leverage ChatGPT to analyze customer feedback, thus identifying areas of improvement to enhance customer experience. Understanding preferences and sentiments in reviews also allows businesses to adapt their services accordingly, ensuring customer expectations are met and even surpassed.

From our position at the forefront of technology, we at AltexSoft couldn't overlook something as significant as ChatGPT. Read on for the details of our exploration.

AltexSoft’s expertise with ChatGPT

ChatGPT’s diverse applications extend well beyond chatbots and personalization, reaching into areas that might at first be surprising. At AltexSoft, we've had the opportunity to experiment with this AI model in a real-world setting. Our exploration aimed to tackle intricate problems, improve our current solutions, and better understand the technology's potential. In the following sections, we'll share how we applied ChatGPT to specific challenges and discuss the outcomes of these initiatives.

Migrating a travel web app's Node.js service to Java

In this section, we take a closer look at the practical application of ChatGPT in the realm of software development, particularly in the travel industry. The scenario unfolds around migrating a Node.js service to Java for our client's travel-oriented web application. Its primary function was to calculate the carbon footprint of hotels.

The AltexSoft team was operating with various microservices written in Java. However, one component of this ecosystem, inherited from a previous vendor, stood apart as it was written in Node.js. Looking ahead at the client's plan to introduce more features into the service, a clear opportunity arose: migrating this outlier to Java. Aligning all services to the same language was a strategic move for simplicity and consistency.

Glib Zhebrakov offers some valuable insights on this matter: “It's imperative to fully comprehend what you are asking from ChatGPT. If you can't understand a piece of code, say in C or C++, and ask ChatGPT to translate it to your language of preference, you're likely to encounter some obstacles. Often, the devil is in the details. Hence, recognizing these intricate points is crucial.”

Back to the case: the Node.js service was a typical web application with controllers, services, and a relational database, and its code was far from perfect. The team's migration strategy began with the database, which held around 20 tables. The tables were extracted manually, and then ChatGPT was used to generate the corresponding Java code: entities, migration scripts, the services layer, mappings, and data transfer objects (DTOs), which in Java are simple objects used to carry data between processes.

Given a “user” table, ChatGPT successfully generated a “user” entity, a Java class with simple properties inside. However, the model struggled with generating a Liquibase migration. Liquibase is a Java migration tool that takes XML or YAML files as input to migrate the database schema. ChatGPT produced something resembling a migration, but it used XML tags that don't exist in Liquibase.

After the prompts were adjusted to give more thorough explanations, ChatGPT produced a bash script for creating the appropriate directory. It also generated layered, Spring-based Java code, including services, repositories, and entities with fields corresponding to the table columns. This demonstrates how much the quality of prompts matters when interacting with ChatGPT: unclear or inadequate instructions yield unsatisfactory results.

Overall, by utilizing ChatGPT, AltexSoft managed to save about 30 percent of the developer's time, and more importantly, the risk of bugs or mistakes was mitigated. Furthermore, under tight deadlines, ChatGPT served as an invaluable tool to expedite the process.

Natural language search

In the realm of the hospitality industry, natural language search empowers users to leverage natural, everyday language to seek out their preferred travel experiences. This user-friendly approach significantly enhances customer interaction by offering more intuitive and human-like communication. Users can phrase their queries in their own words, such as "I'm looking for a tranquil beach retreat," "I'd like to explore iconic historical landmarks," or "Find me thrilling adventure destinations."

ChatGPT makes such search implementation incredibly dynamic. It allows platforms to understand and interpret real-world, natural language queries, profoundly transforming how users interact with the platform and leading to a more conversational and personal user experience.

At AltexSoft, we leveraged ChatGPT to innovate the customer experience in collaboration with one of our clients. Together, we built a travel platform for tour packages containing flights and hotels across various destinations. The platform was ripe for innovation to meet the escalating user expectations in the digital age.

To elevate the platform's capabilities, we harnessed the GPT-3.5-Turbo model via the OpenAI API. The challenge went beyond mere API integration: we needed to master prompt engineering, which involved giving the model precise instructions so that it would generate pertinent responses. After numerous iterations spanning two weeks, we refined a prompt that yielded the desired results.

Alexey Taranets, Solution Architect at AltexSoft, shared his insight on this innovation: "Imagine typing in a natural language request in the search box on the website - outlining what you wish to see, where you desire to travel, and so forth. ChatGPT, in turn, curates a list of top destinations specifically tailored to your query. The real charm of this system is its ability to comprehend non-specific instructions. You could simply say, 'I'd love somewhere warm with delectable cuisine, exciting adventure sports, and breathtaking scenery,' and the system grasps exactly what you're seeking."

Upon receiving a user's query, the GPT model scans through the list of destinations, picking the top three that best align with the user's request. It even suggests the optimal month for vacationing in each location. The derived data is then delivered in a CSV format.
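The exact prompt AltexSoft used isn't published here, so the Python sketch below only illustrates the kind of instructions described: restrict the model to the platform's own destination list, ask for the top three matches with the best month for each, require CSV output, and define a fallback answer when nothing matches. The wording, the function name, and the `NO_RESULTS` marker are all hypothetical.

```python
def build_search_prompt(destinations, user_query):
    """Build chat messages asking the model to act like a search back end.

    The model may only pick from our own destination list and must reply in
    CSV so that code, not a person, can read the answer.
    """
    system = (
        "You select travel destinations for a tour operator.\n"
        f"Allowed destinations: {', '.join(destinations)}.\n"
        "Pick the three destinations that best match the user's request and, "
        "for each, the best month to travel.\n"
        "Answer only with CSV lines in the form: destination,month\n"
        "If no destination matches, answer exactly: NO_RESULTS"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_query},
    ]

messages = build_search_prompt(
    ["Crete", "Tenerife", "Madeira", "Lapland"],  # hypothetical destination list
    "Somewhere warm with delicious food, adventure sports, and beautiful scenery",
)
```

Fixing both the answer format and the "no results" answer up front is what allows the reply to be parsed mechanically instead of being shown to the user as free text.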

To convert this data into actionable results, we designed a system that extracts the destination names and corresponding months from the GPT response. This information is then fed into a standard request form to search for matching tour packages. As a result, users receive a list of available holiday options per their natural language request.
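Here is a minimal sketch of that extraction step, assuming the model replies with `destination,month` CSV lines or a `NO_RESULTS` marker as in a prompt like the one sketched above. It uses Python's `csv` module, discards rows that name unknown destinations or invalid months (models can still drift from the requested format), and maps the rest onto a search form whose field names, `destination` and `departure_month`, are hypothetical.

```python
import csv
import io

MONTHS = {
    "january", "february", "march", "april", "may", "june", "july",
    "august", "september", "october", "november", "december",
}

def parse_destinations(reply, allowed_destinations, limit=3):
    """Turn the model's CSV reply into (destination, month) pairs."""
    if reply.strip() == "NO_RESULTS":
        return []
    allowed = {d.lower(): d for d in allowed_destinations}
    results = []
    for row in csv.reader(io.StringIO(reply.strip())):
        if len(row) != 2:
            continue  # skip blank or malformed lines
        destination, month = (cell.strip() for cell in row)
        # Keep only destinations we actually sell and real month names.
        if destination.lower() in allowed and month.lower() in MONTHS:
            results.append((allowed[destination.lower()], month.capitalize()))
    return results[:limit]

def to_search_requests(pairs):
    """Map each pair onto a hypothetical tour-package search form."""
    return [{"destination": d, "departure_month": m} for d, m in pairs]
```

For a reply such as `"Crete,June\nTenerife, october\nAtlantis,May"`, only Crete and Tenerife survive, because Atlantis is not on the platform's list; each surviving pair then becomes one standard search request.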

Hotel and room mapping

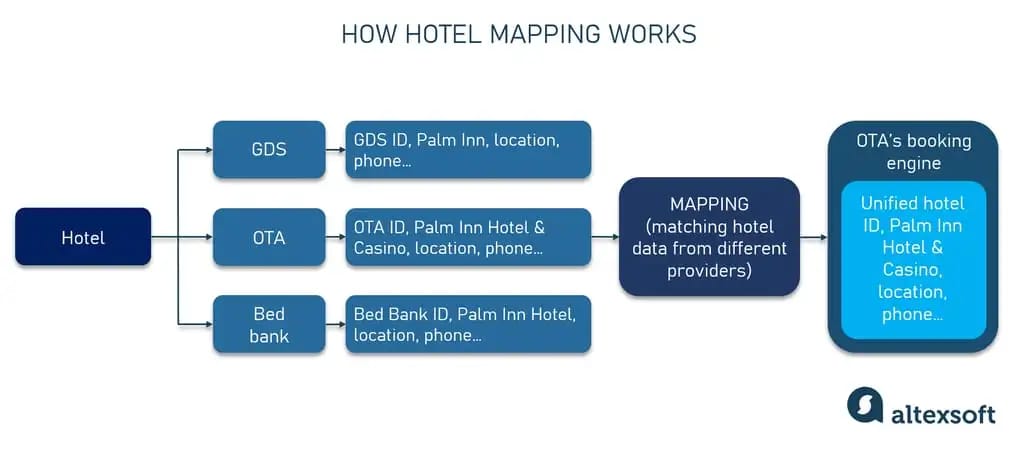

Hotel and room mapping are fundamental processes in the travel industry, particularly for resellers who source inventory from multiple suppliers. In this context, suppliers may list the same hotels and rooms but under different names and IDs, leading to potential overlaps. Therefore, the task of hotel and room mapping is to identify and rectify these overlaps, ensuring a consistent and accurate representation of the inventory.

At a high level, hotel mapping is all about matching the same property across various platforms. To illustrate this, consider a hotel listed as "The Ritz Carlton New York" in one system, "Ritz Carlton - New York" in another, and "New York Ritz Carlton" in yet another. While these names are obviously synonymous to a human, a computer might treat them as different entities. The objective of hotel mapping, thus, is to help computer systems recognize these listings as the same hotel.

How hotel mapping works.

The matching process involves a blend of algorithms and manual checks that consider key identifying details like hotel name, address, postal code, geolocation data, and other unique identifiers. The end goal is that, irrespective of the listed name, all entries point to the same hotel property.
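As a simple illustration of the algorithmic part, the Python sketch below treats two listings as the same property when their normalized names, postal codes, and coordinates agree. It is a rule-based heuristic written for this example, not AltexSoft's or any supplier's matching engine: the filler-word list, the 150-meter threshold, and the listing fields are assumptions, and production systems typically add fuzzy scoring and manual review.

```python
import math
import re

FILLER_WORDS = {"the", "hotel"}  # assumption: words that don't distinguish properties

def normalize_name(name):
    """Lowercase, drop punctuation and filler words, and sort the tokens."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return " ".join(sorted(t for t in tokens if t not in FILLER_WORDS))

def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters (haversine formula)."""
    r = 6_371_000  # mean Earth radius in meters
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def same_property(a, b, max_distance_m=150):
    """Treat two listings as one hotel if name, postal code, and location agree."""
    return (
        normalize_name(a["name"]) == normalize_name(b["name"])
        and a["postal_code"].replace(" ", "").upper() == b["postal_code"].replace(" ", "").upper()
        and distance_m(a["lat"], a["lon"], b["lat"], b["lon"]) <= max_distance_m
    )

# Hypothetical supplier listings with made-up postal codes and coordinates.
supplier_a = {"name": "The Ritz Carlton New York", "postal_code": "10019", "lat": 40.7650, "lon": -73.9760}
supplier_b = {"name": "Ritz Carlton - New York", "postal_code": "10019", "lat": 40.7651, "lon": -73.9761}
```

All three name variants from the example above normalize to "carlton new ritz york," and the two sample coordinates lie only a few meters apart, so `same_property(supplier_a, supplier_b)` returns `True`.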

When we go a level deeper, we encounter the realm of hotel room mapping. The principle here is similar, but the granularity is higher. The task is to standardize the room types of a specific hotel presented on different platforms for your system. Given that room descriptions can vary significantly across different systems (a "Deluxe King Room with City View" could be listed as "King Deluxe - City View" elsewhere), hotel room mapping is essential to prevent confusion and overbooking.
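A naive way to compare room descriptions is word overlap. The Python sketch below scores two descriptions by Jaccard similarity (shared words divided by all distinct words) after dropping a few filler words. It is a baseline written for illustration, with an assumed stopword list and a 0.6 threshold; it handles reordered wording like the "Deluxe King" example, but it fails when suppliers describe the same room with different vocabulary, which is the harder part of room mapping.

```python
import re

ROOM_FILLER_WORDS = {"room", "with", "the", "a"}  # assumption: non-distinguishing words

def room_tokens(description):
    """Split a room description into a set of meaningful lowercase words."""
    return {t for t in re.findall(r"[a-z0-9]+", description.lower()) if t not in ROOM_FILLER_WORDS}

def jaccard(a, b):
    """Share of words the two descriptions have in common (0.0 to 1.0)."""
    ta, tb = room_tokens(a), room_tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0

def best_match(description, candidates, threshold=0.6):
    """Return the most similar candidate room type, or None if nothing is close."""
    if not candidates:
        return None
    score, name = max((jaccard(description, c), c) for c in candidates)
    return name if score >= threshold else None

our_room_types = ["Deluxe King Room with City View", "Standard Twin Room"]  # hypothetical
```

With these inputs, `best_match("King Deluxe - City View", our_room_types)` returns "Deluxe King Room with City View," because both descriptions reduce to the same four words: deluxe, king, city, view.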

With the significance of these processes, the AltexSoft team sought to explore the potential of artificial intelligence in simplifying them. To do this, we leveraged ChatGPT in a real-world-like scenario.

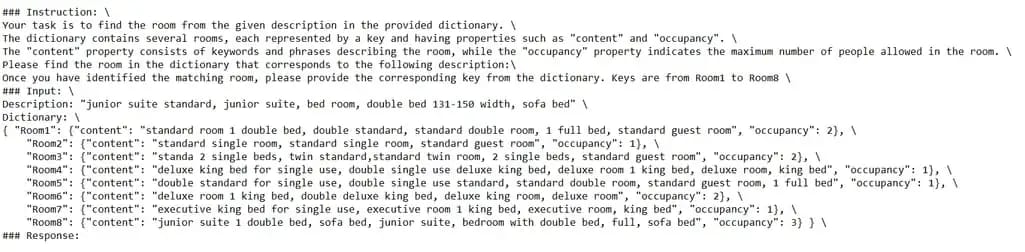

We sourced information about the Thistle Holborn Hotel from Hotelbeds and Sabre. We then manually compared room descriptions, identified matches, and compiled a unified dictionary of key phrases that could be used for room identification. ChatGPT was then tasked with matching room descriptions to the corresponding room keys in this dictionary, a setup designed to mimic the challenges of hotel room mapping. Each room key in the dictionary was linked to two properties, "content" and "occupancy." "Content" contained the keywords and phrases that characterized the room, while "occupancy" referred to the maximum number of people the room could accommodate.
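The Python sketch below shows one way such a task could be put to a chat model: serialize the dictionary as JSON, append the supplier's room description, ask for a single key, and accept the answer only if it is a known key. The two dictionary entries, their keywords and occupancy values, and the prompt wording are hypothetical; the prompt actually used in the experiment is the one pictured in this section and may differ.

```python
import json

# Hypothetical dictionary in the shape described above: each room key maps to
# descriptive keywords ("content") and maximum guests ("occupancy").
ROOM_DICTIONARY = {
    "Room1": {"content": ["standard", "double bed"], "occupancy": 2},
    "Room8": {"content": ["junior suite", "sofa bed", "double bed 131-150 width"], "occupancy": 3},
}

def build_room_mapping_prompt(description, dictionary):
    """Ask the model to pick exactly one room key for a supplier description."""
    return (
        "Here is a dictionary of room types in JSON. Each key has 'content' "
        "(keywords describing the room) and 'occupancy' (maximum guests).\n"
        f"{json.dumps(dictionary, indent=2)}\n\n"
        f"Room description: {description}\n"
        "Reply with the single dictionary key that best matches the description, "
        "or NONE if no key matches."
    )

def parse_room_key(reply, dictionary):
    """Accept the reply only if, after trimming, it is one of the known keys."""
    key = reply.strip().strip(".")
    return key if key in dictionary else None
```

Validating the reply against the dictionary keys means an off-format answer is treated as "no match" rather than silently mapped to the wrong room, which matters when the goal is preventing overbookings.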

The general prompt for Alpaca and ChatGPT written within the AltexSoft experiment.

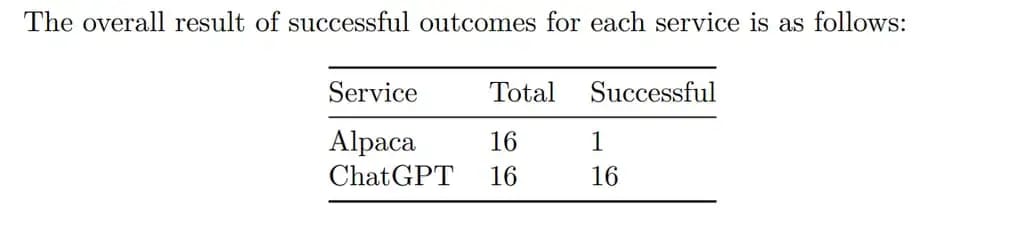

We benchmarked the performance of ChatGPT against another model, Alpaca, across eight room types with two distinct descriptions for each room from different booking platforms. We aimed to assess how effectively the models could normalize the descriptions and correctly identify the corresponding rooms.

In the first scenario, the description included elements like "junior suite standard, junior suite, bedroom, double bed 131-150 width, sofa bed." In our dictionary, this best matched Room8's content, and impressively, ChatGPT made the correct match. This success was repeated across the other rooms, with ChatGPT consistently making accurate matches, even when the room descriptions differed in their text and detail.

Alpaca ran into numerous issues. It often misidentified rooms, and its performance declined with long or sequential prompts. Additionally, Alpaca's input length was limited, which led to unpredictable behavior and strange results. It also took longer than ChatGPT to process queries.

The overall results of the GDS hotel data normalization experiment made by AltexSoft.

In contrast, ChatGPT displayed no such problems and processed queries more swiftly. In testing, ChatGPT identified the correct room in all 16 attempts (100 percent), whereas Alpaca managed only one correct match in the same 16 attempts.

This real-world-like scenario highlighted the potential of AI, specifically ChatGPT, in performing complex tasks such as hotel room mapping. By accurately interpreting and standardizing room descriptions, it demonstrated how it could help prevent overbookings and ensure customers get the room they expect. It also reaffirmed our belief at AltexSoft in the power of artificial intelligence to enhance operational efficiency and customer satisfaction in the hospitality industry.

Current limitations and the future of ChatGPT in travel

While ChatGPT offers many opportunities, it is essential to recognize its inherent limitations.

- The functionality of ChatGPT relies on training data, which means it can only generate responses based on its training and given prompts.

- Although it can produce remarkably accurate responses, it remains prone to errors or misleading information.

Despite these constraints, ChatGPT's potential to revolutionize the travel industry is immense and just starting to unfold. Its applications range from enhancing virtual tours of vacation destinations to promoting sustainable travel, providing tailored tourist suggestions, improving travel accessibility, and transforming transportation system management.

Here are a few prospects for ChatGPT that many people in the travel industry agree on.

Virtual guide tours. In the realm of virtual reality, ChatGPT can serve as a natural language assistant, guiding and informing users throughout these virtual experiences, allowing them to plan their journeys and make informed choices before booking their trips.

Responsible tourism. By focusing on sustainable tourism, this technology may encourage eco-friendly choices, such as recommending environmentally responsible accommodations or carbon-neutral travel alternatives.

Enhanced travel planning. ChatGPT also promises to make travel planning more accessible for people with disabilities. By enabling natural language interactions, it can empower individuals to plan and book their travels independently, ensuring that the joy of travel is accessible to everyone.

Regarding travel operations, ChatGPT can potentially optimize various transportation systems through its natural language interface, benefitting both customers and personnel. The scope of ChatGPT's impact on the travel industry is extensive and promising, presenting ample room for innovation and expansion. While the road ahead will undoubtedly be filled with excitement and challenges, one certainty remains: ChatGPT has the capacity to transform travel planning into a more efficient, customized, and eco-friendly process that is accessible to everyone.

This article belongs to a series showcasing our expertise. For a deeper dive into similar topics, we invite you to explore the related blog posts:

Hotel Price Prediction: Hands-On Experience of ADR Forecasting

Occupancy Rate Prediction: Building an ML Module to Analyze One of the Main Hospitality KPIs

Sabre API Integration: Hands-On Experience with a Leading GDS

Amadeus API Integration: Practice Guide on Getting the GDS Content

Sentiment Analysis in Hotel Reviews: Developing a Decision-Making Assistant for Travelers

Flight Price Predictor: Training Models to Pinpoint the Best Time for Booking

OTA Rates and Building Commission Engine: Best Practices and Lessons Learned

Audio Analysis With Machine Learning: Building AI-Fueled Sound Detection App

Computer Vision in Healthcare: Creating an AI Diagnostic Tool for Medical Image Analysis