In our modern world, there's hardly a business that doesn't need a communication channel with its customers. Here's the catch though: According to Meta (formerly Facebook), 64 percent of people would rather message than speak to a human call center agent on the phone. Besides that, customers want timely responses to whatever questions they have. So, it makes sense that more and more companies are looking for a way to implement conversational artificial intelligence (AI) technology to streamline these processes.

In this post, we'll focus on what conversational AI is, how it works, and what platforms exist to enable data scientists and machine learning engineers to implement this technology. So, if you are interested in building a conversational AI bot, this article is for you.

What is conversational AI and how is it different from traditional chatbots?

Conversational AI is the area of artificial intelligence concerned with letting people interact with software services through chat- or voice-enabled interfaces in a way that feels natural. In general, it is a set of technologies that work together to help chatbots and voice assistants process human language, understand intents, and formulate appropriate, timely responses in a human-like manner.

Simply put, it is an AI you can talk to via text or voice.

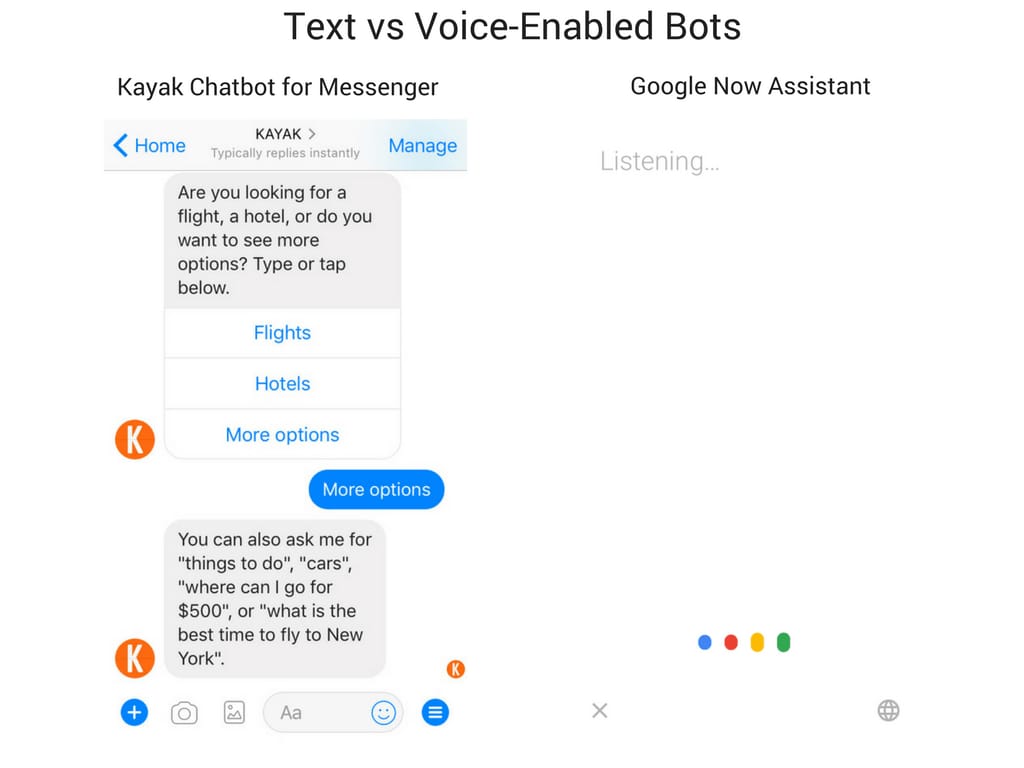

Text and voice-enabled conversational bots

For example, imagine you are in your comfy bed ready to fall asleep but the lights are still on. Instead of getting out of bed and switching off the lights manually, you can open an Alexa app on your phone and give a voice command like, “Alexa, bedroom lights off.”

Or you want to find out the opening hours of a clinic, check if you have symptoms of a certain disease, or make an appointment with a doctor. So, you go on the clinic’s website and have a textual conversation with a bot instead of calling on the phone and waiting for a human assistant to answer.

This brings us to the question of how conversational AI is different from rule-based chatbots.

Traditional or rule-based chatbots are software programs that rely on a series of predefined rules to mimic human conversation or perform other tasks through text messaging. They follow a rigid conditional formula — if X (condition) then Y (action). Such chatbots may use simpler or more complex rules, but they can’t answer questions outside of the defined scenario.

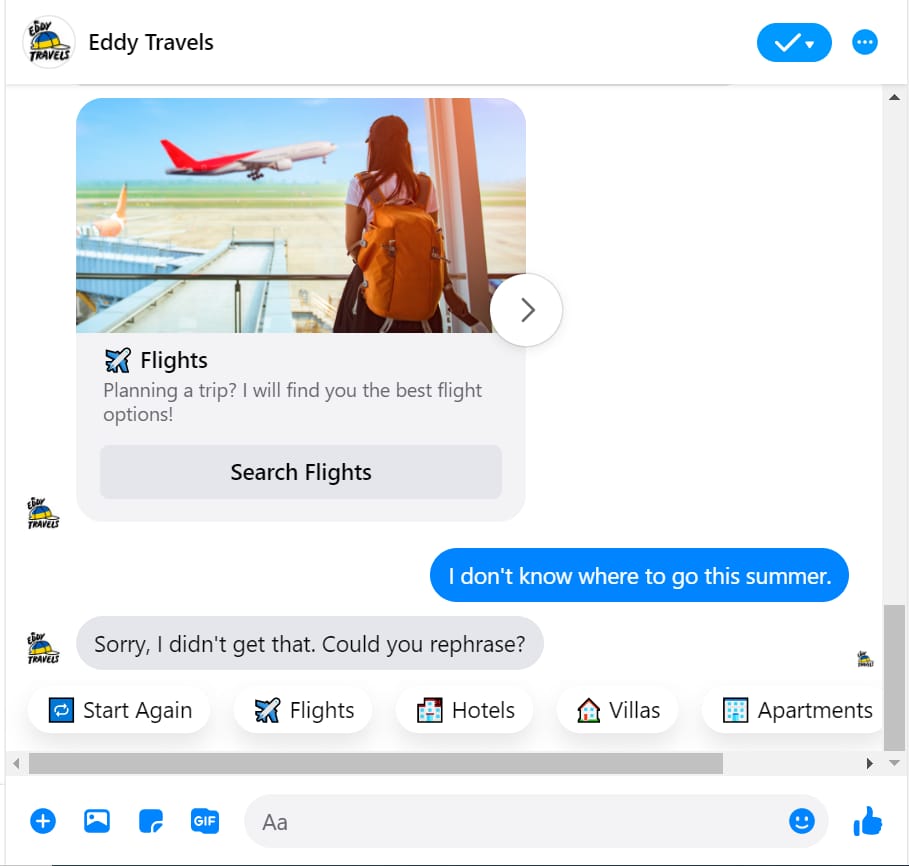

A common bot response to an unforeseen scenario

Also, such chatbots do not learn from interactions with a user: They only perform and work with the previously known scenarios you wrote for them.
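To make the "if X then Y" idea concrete, here is a minimal sketch of a rule-based bot. The rules, replies, and function name are hypothetical and only illustrate the pattern: anything outside the listed conditions falls through to a canned fallback.

```python
import re

# Hypothetical rules: each pattern (condition X) maps to a canned reply (action Y).
RULES = [
    (re.compile(r"\b(opening hours|open)\b", re.I), "We are open 9am to 5pm, Monday to Friday."),
    (re.compile(r"\b(appointment|book)\b", re.I), "Sure, which day works for you?"),
]
FALLBACK = "Sorry, I didn't understand that."


def rule_based_reply(message: str) -> str:
    """Return the reply of the first matching rule, or the fallback."""
    for pattern, reply in RULES:
        if pattern.search(message):
            return reply
    return FALLBACK


print(rule_based_reply("When are you open?"))           # matches the first rule
print(rule_based_reply("My knee hurts when it rains"))  # no rule matches, so the fallback is used
```

Nothing in this bot changes after deployment: covering a new scenario means someone has to write a new rule by hand.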

An AI-based chatbot is also software programmed to mimic human conversation. But unlike rule-based systems, these chatbots can improve over time through data and machine learning algorithms. Basically, AI chatbots can do two core things that differentiate them, namely:

- generalize: a bot can understand patterns and add context to a conversation, going beyond the defined script; and

- get better from human feedback: when a user provides additional information or corrects a bot’s mistakes, those corrections can be used as training data to improve the model.

So, a conversational AI assistant handles a variety of requests. Those requests may be satisfied with a simple question-and-answer format or may require an associated conversational flow.

Let’s dive deeper into core technologies that enable machines to do these awesome things.


Conversational AI key concepts and technologies

Human language is incredibly complex, and so is the task of building machines that can understand and use it. For example, there are many possible ways for a user to state that they need their password reset, from the simple "Reset my password" and "I forgot my password" to the more complex "I'm locked out of my email account" and "I can't sign into my account."

Our brains are wired to be good at understanding all of that, but computers are not. That’s why conversational AI systems need some help in the form of smart technologies to execute communication in a human-like manner. Or at least approach it.

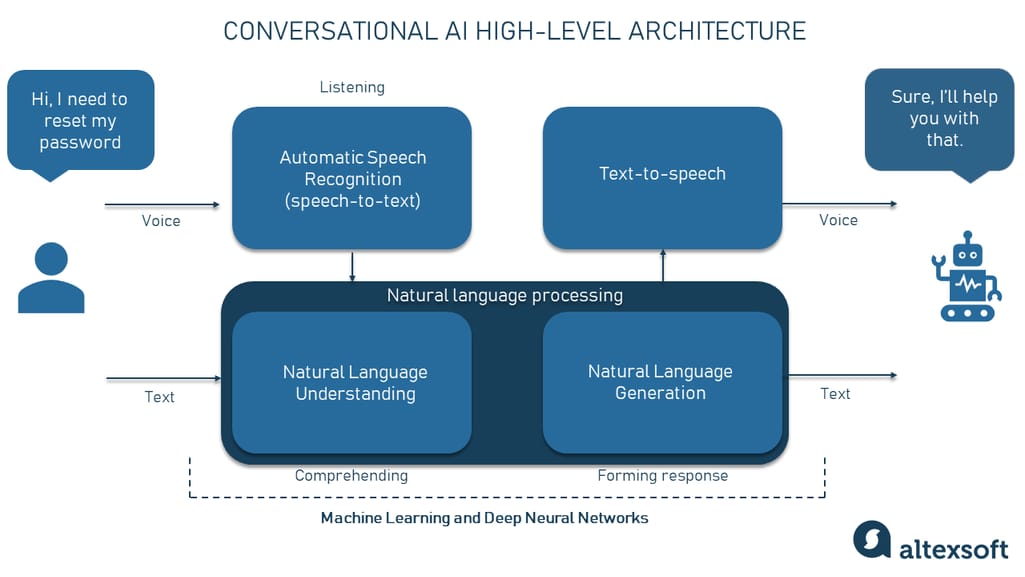

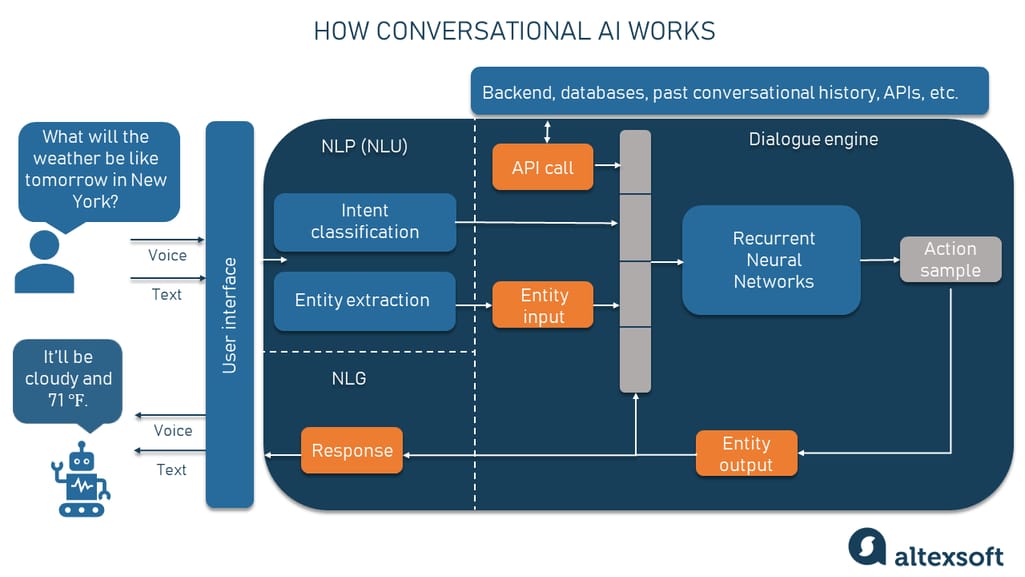

High-level architecture of conversational AI

As you can see from the image above, there are a lot of pieces of the tech puzzle involved. So, it’s worth reviewing the key concepts before we dive into how conversational AI works.

Automatic speech recognition (ASR) or speech-to-text is the conversion of speech audio waves into a textual representation of words. ASR is applied to analyze audio data and parse sound into language tokens that the system then converts into text. If the conversation is text-only, the system skips this component.

Machine learning (ML) is a field of knowledge that deals with creating various algorithms to train computer systems on data and enable them to make accurate predictions on new data inputs. ML emphasizes adjustments, retraining, and updating of algorithms based on previous experiences.

Deep learning (DL) is a specific approach within machine learning that utilizes neural networks to make predictions based on large amounts of data. Neural nets are a set of algorithms in which the input data goes through multiple processing layers of artificial neurons piled up on top of one another to provide the output. Deep learning enables computers to perform more complex functions like understanding human speech.
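As a rough illustration of input data passing through stacked layers of artificial neurons, here is a minimal forward pass in plain Python. The layer sizes, the tanh activation, and the random (untrained) weights are assumptions made for the sketch; a real network would learn its weights from data.

```python
import math
import random


def dense_layer(inputs, weights, biases):
    """One layer: each neuron computes tanh(weighted sum of its inputs + bias)."""
    return [
        math.tanh(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def random_layer(n_inputs, n_neurons, rng):
    """Create untrained weights and zero biases for one layer."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases


def forward(inputs, layers):
    """Pass the input through every layer in order; each output feeds the next layer."""
    activation = inputs
    for weights, biases in layers:
        activation = dense_layer(activation, weights, biases)
    return activation


rng = random.Random(0)  # fixed seed so the sketch is reproducible
layers = [random_layer(4, 3, rng), random_layer(3, 2, rng)]  # 4 inputs -> 3 neurons -> 2 outputs
output = forward([0.5, -0.2, 0.1, 0.9], layers)
print(output)
```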

Natural language processing or NLP is the heart and soul of any conversational AI system. It is a branch of artificial intelligence that enables machines to process and understand human language. NLP relies on linguistics, statistics, and machine learning to recognize human speech and text inputs and transform them into a machine-readable format.

There are two subfields in natural language processing:

- Natural language understanding (NLU), as the name suggests, is about understanding human language and recognizing the underlying intent. It uses syntactic and semantic analysis of text and speech to extract the meaning of what’s said or written.

- Natural language generation (NLG) is the process of creating a human language text response based on some data input.

Text-to-speech (TTS) is assistive software that takes text as an input, converts it into audio, and replies via this machine-generated voice. Basically, it reads digital text aloud.

With these concepts in mind, let’s look under the hood of a typical conversational AI architecture to see how everything works.

How conversational AI works

While conversational AI systems may be built differently, the architecture commonly comprises a few core elements that breathe life into what we know as intelligent assistants.

An example of a conversational AI pipeline

These components are:

- a user interface — a text or voice interface visible to users so that they can interact with an AI assistant;

- an input analyzer module — an NLU component that extracts meaning from a user’s natural language input and passes it further;

- a dialogue management module (dialogue engine) — an ML-powered module that manages dialogue state and coordinates building the assistant’s response to the user;

- an integrations module (optional) — an orchestrator that allows you to connect to third-party data via APIs; and

- an output module — a component that uses natural language generation to create a response.
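The components listed above can be sketched as a chain of stages that each enrich a shared turn object. Everything here is a simplified assumption: the `Turn` fields, the stage names, and the hardcoded results are placeholders for the real modules described in the following sections.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Turn:
    """State carried through one dialogue turn."""
    utterance: str
    intent: str = ""
    entities: dict = field(default_factory=dict)
    action: str = ""
    data: dict = field(default_factory=dict)
    response: str = ""


Stage = Callable[[Turn], Turn]


def analyze_input(turn: Turn) -> Turn:
    # Placeholder NLU: a real module would classify the intent and extract entities.
    turn.intent = "request_weather"
    turn.entities = {"time": "tomorrow", "location": "New York"}
    return turn


def manage_dialogue(turn: Turn) -> Turn:
    # Placeholder policy: choose the next action from the recognized intent.
    turn.action = "fetch_forecast" if turn.intent == "request_weather" else "fallback"
    return turn


def call_integration(turn: Turn) -> Turn:
    # Placeholder integration: canned data instead of a third-party API call.
    if turn.action == "fetch_forecast":
        turn.data = {"summary": "cloudy", "temp_f": 71}
    return turn


def generate_output(turn: Turn) -> Turn:
    # Placeholder NLG: fill a fixed sentence with the retrieved data.
    if turn.data:
        turn.response = f"It'll be {turn.data['summary']} and {turn.data['temp_f']}℉."
    else:
        turn.response = "Sorry, I can't help with that yet."
    return turn


STAGES = [analyze_input, manage_dialogue, call_integration, generate_output]


def run_pipeline(utterance: str, stages: list[Stage]) -> Turn:
    """Run one utterance through every stage in order."""
    turn = Turn(utterance=utterance)
    for stage in stages:
        turn = stage(turn)
    return turn


result = run_pipeline("What will the weather be like tomorrow in New York?", STAGES)
print(result.response)
```

Keeping each stage behind the same signature makes it possible to swap a stub for a trained model or a live API without touching the rest of the chain.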

So, let’s examine how these components can work in a single turn of dialogue with a user input that sounds like this, “What will the weather be like tomorrow in New York?”

User interface

Everything starts with a user’s input also known as an utterance, which is literally what the user says or types. In our case, this is the textual sentence, “What will the weather be like tomorrow in New York?” The utterance goes to the user interface of a conversational platform. This is where you can rely on your preferred messaging or voice platform, e.g., Facebook Messenger, Slack, Google Assistant, or even your own custom bot.

Input analyzer module

So, when the message is picked up by a system, it is sent to the input analyzer module where the NLU engine resides. The main idea here is to take the unstructured utterance in a simple human language and extract structured data that an assistant then can use to understand what the input is about.

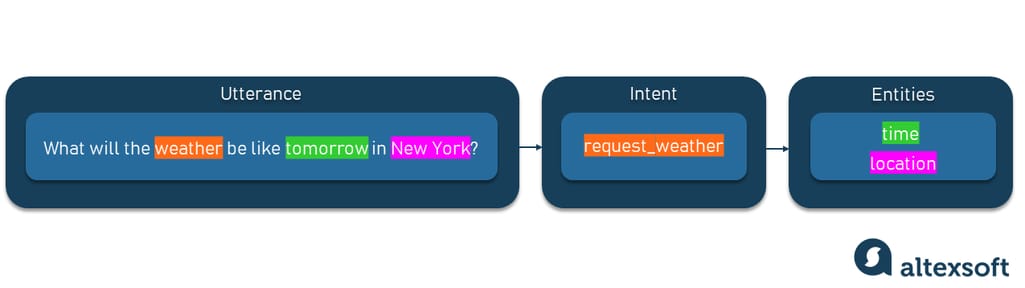

Utterance split into intent and entities

The user’s utterance is generally understood to contain two main components.

Intent is what the user wants to achieve. The intent is generally centered on a key verb but this verb may not be explicitly included in the utterance. In our example, the intent is “request weather.”

Entities are parameters or details that help an assistant be more specific about what action to take. Entities can be names, dates, times, company names, etc. Here, there are two entities: time (tomorrow) and location (New York).
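One way to picture the structured data an NLU engine hands over is a small record holding the intent and the entities with their positions in the text. The field names below are an assumption for illustration; each platform defines its own output schema.

```python
import json

utterance = "What will the weather be like tomorrow in New York?"


def entity(text: str, label: str, value: str) -> dict:
    """Describe one entity, locating its value inside the utterance."""
    start = text.index(value)
    return {"label": label, "value": value, "start": start, "end": start + len(value)}


# Hypothetical schema for the parsed result of a single utterance.
parsed = {
    "text": utterance,
    "intent": "request_weather",
    "entities": [
        entity(utterance, "time", "tomorrow"),
        entity(utterance, "location", "New York"),
    ],
}

print(json.dumps(parsed, indent=2))
```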

To apply structure to the unstructured text and extract intents and entities, the NLU component has two parts.

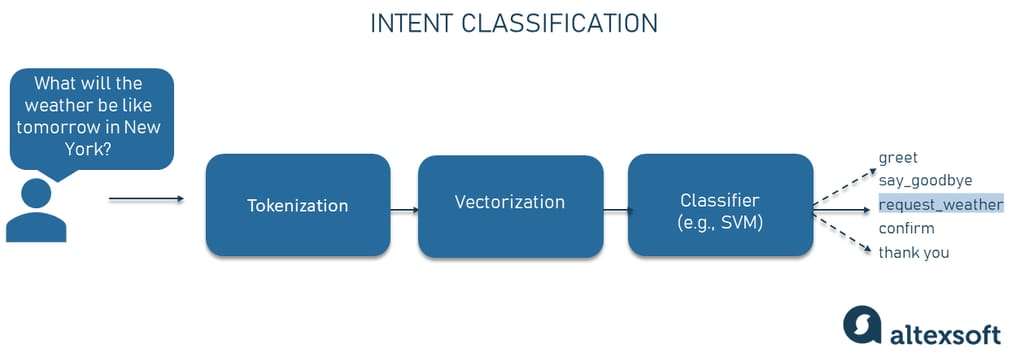

How intent classification works

Intent classification. It finds meaning that applies to the entire utterance. It commonly includes the following stages:

- tokenization — the process of defining the borders of units within the text, such as the beginning and end of words;

- word vectorization — the process of mapping words or phrases to corresponding vectors of numbers (generally speaking, converting words into numbers) to create a sentence representation; and

- intent classification — the process of predicting the label for the user input based on vectors using an ML model, for example, a supervised machine learning model called Support Vector Machine (SVM).
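The three stages above can be sketched with the standard library alone. Note the swap: instead of an SVM, this example uses a much simpler stand-in (bag-of-words vectors compared by cosine similarity against one summed vector per intent), and the training utterances are made up for the illustration.

```python
import math
import re
from collections import Counter

# Hypothetical training utterances, labeled by intent.
TRAINING_DATA = {
    "request_weather": [
        "what will the weather be like tomorrow",
        "is it going to rain today",
        "weather forecast for this weekend",
    ],
    "reset_password": [
        "reset my password",
        "i forgot my password",
        "i can't sign into my account",
    ],
}


def tokenize(text: str) -> list[str]:
    """Tokenization: split text into lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def vectorize(text: str) -> Counter:
    """Vectorization: a bag-of-words vector mapping each token to its count."""
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# "Training": sum the vectors of all example utterances for each intent.
CENTROIDS = {
    intent: sum((vectorize(u) for u in utterances), Counter())
    for intent, utterances in TRAINING_DATA.items()
}


def classify_intent(text: str) -> str:
    """Classification: pick the intent whose vector is most similar to the input."""
    vector = vectorize(text)
    return max(CENTROIDS, key=lambda intent: cosine(vector, CENTROIDS[intent]))


print(classify_intent("What will the weather be like tomorrow in New York?"))  # request_weather
```

A production system would typically replace the count vectors with learned embeddings and the similarity lookup with a trained classifier, but the tokenize, vectorize, classify sequence stays the same.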

Entity extraction. Another NLU component finds subsets of the utterance with a specific meaning. The entities are usually noun-based and may be extracted by machine learning, hardcoded rules, or both. Basically, the process is similar to how you determine an intent but with a few additional steps. In addition to tokenization, the model uses:

- Part of Speech tagging — the process of assigning a grammatical meaning to the words (whether it is a noun, a verb, an adjective, etc.);

- named entity recognition — the process of chunking the sentence and detecting the named entities such as person names, location names, company names, etc. from the text; and

- entity extraction — the process of mining the value and the label of the entity.

In our example, the extracted entity values are “tomorrow” and “New York,” and their labels are “time” and “location” respectively.
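Here is a minimal sketch of the hardcoded-rules route to entity extraction, using regular expressions over small lookup lists. The lists and label names are hypothetical, and the sketch skips part-of-speech tagging and statistical named entity recognition entirely.

```python
import re

# Hypothetical lookup lists; a real system would use far larger lists
# or a trained named entity recognition model.
ENTITY_PATTERNS = {
    "time": re.compile(r"\b(today|tomorrow|tonight)\b", re.I),
    "location": re.compile(r"\b(New York|London|Paris)\b", re.I),
}


def extract_entities(text: str) -> list[dict]:
    """Return each matched entity with its label, value, and character span."""
    entities = []
    for label, pattern in ENTITY_PATTERNS.items():
        for match in pattern.finditer(text):
            entities.append(
                {"label": label, "value": match.group(0), "start": match.start(), "end": match.end()}
            )
    return sorted(entities, key=lambda e: e["start"])


for found in extract_entities("What will the weather be like tomorrow in New York?"):
    print(found["label"], "->", found["value"])
```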

Dialogue management module

The extracted details that include intent and entities are then passed to the dialogue management module. It also uses the power of machine learning. This can be a simple algorithm like a decision tree or a more complex model like a deep neural network.

As an example, we’ll take recurrent neural networks (RNNs), which are often used in chatbots and text-to-speech technologies.

RNNs are a type of neural net with looped connections, meaning the output of a neuron at one step is fed back as an input at the next step. These nets can process sequential data and take the context of the whole piece of text into account, which makes them a good match for creating chatbots. Apart from intent and entity input, RNNs can be fed with corrected outputs and third-party information.
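The looped connection can be shown with a single recurrent cell written in plain Python. The weights below are illustrative and untrained, and the tanh update is the textbook simple-RNN form, assumed here for the sketch. The key point is that the hidden state from the previous step is an input to the current one.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, bias):
    """One recurrent step: h_t = tanh(W_xh * x_t + W_hh * h_prev + b)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_xh[j], x))
            + sum(w * hi for w, hi in zip(w_hh[j], h_prev))
            + bias[j]
        )
        for j in range(len(bias))
    ]


def run_rnn(sequence, w_xh, w_hh, bias):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = [0.0] * len(bias)
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, bias)
    return h


# Illustrative, untrained weights: 2 input features, 2 hidden units.
W_XH = [[0.5, -0.3], [0.1, 0.8]]
W_HH = [[0.2, 0.4], [-0.6, 0.1]]
BIAS = [0.0, 0.0]

seq_a = [[1.0, 0.0], [0.0, 1.0]]
seq_b = [[0.0, 1.0], [1.0, 0.0]]  # same inputs as seq_a, in a different order
print(run_rnn(seq_a, W_XH, W_HH, BIAS))
print(run_rnn(seq_b, W_XH, W_HH, BIAS))
```

The two sequences contain the same vectors but end in different hidden states, which is how a recurrent network can tell word order apart.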

So, this module of conversational AI takes the details about the input from the NLU model, considers the history of the conversation, and uses all this information to predict what an assistant should do, that is, how to respond at the specific stage of the conversation.
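To show the inputs and outputs of this step, here is a small dialogue manager that tracks state across turns. It uses a handwritten slot-filling policy as a stand-in for the ML model described above, and the slot schema and action names are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical schema: the details each intent needs before it can be fulfilled.
REQUIRED_SLOTS = {"request_weather": ["location", "time"]}


@dataclass
class DialogueState:
    """Tracks what the assistant knows so far in the conversation."""
    intent: str = ""
    slots: dict = field(default_factory=dict)
    history: list = field(default_factory=list)


def next_action(state: DialogueState, intent: str, entities: dict) -> str:
    """Update the state with the latest NLU result and choose the next action."""
    if intent:
        state.intent = intent
    state.slots.update(entities)
    missing = [s for s in REQUIRED_SLOTS.get(state.intent, []) if s not in state.slots]
    if state.intent not in REQUIRED_SLOTS:
        action = "fallback"
    elif missing:
        action = f"ask_{missing[0]}"
    else:
        action = "fetch_forecast"
    state.history.append(action)
    return action


state = DialogueState()
print(next_action(state, "request_weather", {"time": "tomorrow"}))  # location is still missing
print(next_action(state, "", {"location": "New York"}))             # all details are now known
```

The second call carries no intent of its own, so the manager relies on the conversation history to interpret "New York" as the answer to its earlier question.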

Integrations module

The architecture may optionally include integrations and connectors to the backend systems and databases. This is an orchestrator module that may call an API exposed by third-party services. In our example, this can be a weather forecasting service that will give relevant information about the weather in New York for a particular day.
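A sketch of how the orchestrator might wrap a backend call follows. No real weather API is used: `WeatherService` and `FakeWeatherService` are hypothetical names, and the in-memory fake stands in for whatever third-party service you would actually connect.

```python
from typing import Protocol


class WeatherService(Protocol):
    """Interface the orchestrator expects from any weather backend."""

    def forecast(self, location: str, day: str) -> dict: ...


class FakeWeatherService:
    """In-memory stand-in for a third-party API; returns canned data."""

    _DATA = {("New York", "tomorrow"): {"summary": "cloudy", "temp_f": 71}}

    def forecast(self, location: str, day: str) -> dict:
        try:
            return self._DATA[(location, day)]
        except KeyError:
            raise LookupError(f"No forecast for {location} ({day})") from None


def fetch_forecast(service: WeatherService, entities: dict) -> dict:
    """Orchestrator step: translate extracted entities into a backend call."""
    try:
        return {"ok": True, **service.forecast(entities["location"], entities["time"])}
    except LookupError as error:
        # Report the failure as data so the dialogue engine can respond gracefully.
        return {"ok": False, "error": str(error)}


print(fetch_forecast(FakeWeatherService(), {"location": "New York", "time": "tomorrow"}))
```

Coding against an interface rather than a specific client keeps the dialogue logic testable without network access.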

Output module

Once an assistant is able to create a response, it sends the details to the output module. The response has two parts: an action (performed by the orchestrator) and a text response. Providing an answer based on the recognized intent is the task of natural language generation. It interprets the received data, creates a narrative structure, combines words and parts of the sentences, and applies grammatical rules. The dialogue engine finally responds to the user via the user interface with an answer, “It’ll be cloudy and 71℉.”
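The simplest form of natural language generation is template filling, sketched below. The action names and templates are hypothetical; systems that build sentences with grammatical rules or generative models go well beyond this.

```python
from typing import Optional

# Hypothetical response templates keyed by the action chosen by the dialogue engine.
TEMPLATES = {
    "fetch_forecast": "It'll be {summary} and {temp_f}℉.",
    "ask_location": "Which city would you like the forecast for?",
}
DEFAULT_RESPONSE = "Sorry, I can't help with that yet."


def generate_response(action: str, data: Optional[dict] = None) -> str:
    """Pick the template for the action and fill it with the retrieved data."""
    template = TEMPLATES.get(action, DEFAULT_RESPONSE)
    return template.format(**(data or {}))


print(generate_response("fetch_forecast", {"summary": "cloudy", "temp_f": 71}))
print(generate_response("ask_location"))
```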

There is no fixed limit on the number of dialogue rounds. When these interactions are used to retrain the underlying models, conversational AI can get better over time at predicting user intents and providing more accurate and relevant responses.

It’s worth noting that depending on the system, you may see slightly different terminology than the one we used in our example.

Conversational AI examples across industries

Conversational AI systems have a lot of use cases in various fields since their primary goal is to facilitate communication and support of customers.

Conversational AI in healthcare

The healthcare industry can greatly benefit from using conversational AI as it helps patients understand their health problems and quickly directs them to the right medical professionals. It can also reduce the load on call centers and eliminate call drop-offs.

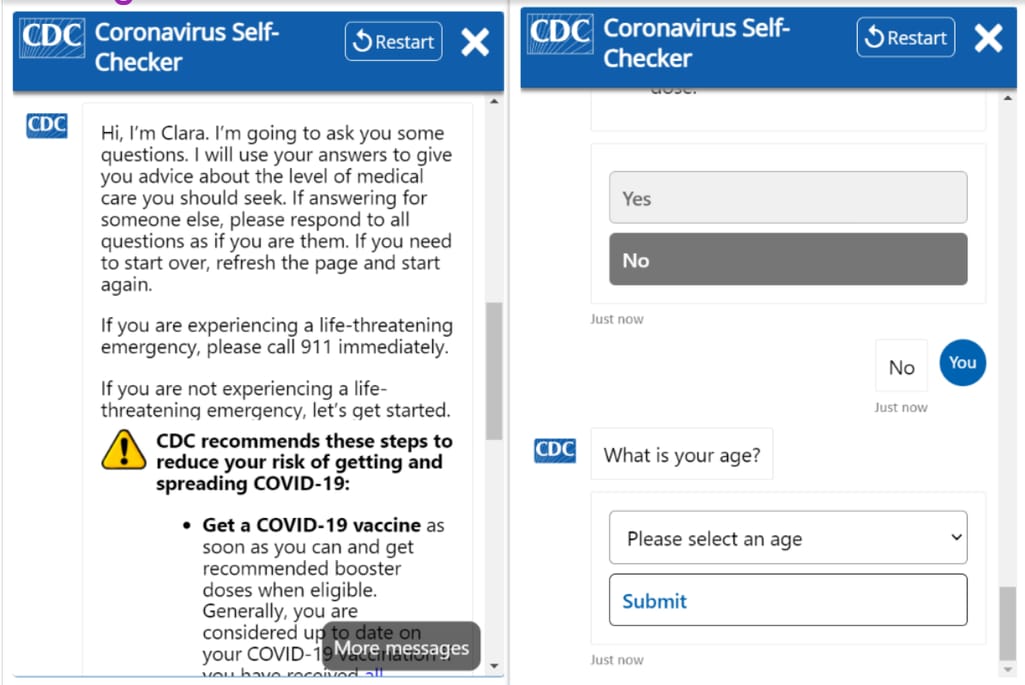

Clara — Coronavirus self-checker bot by US CDC

When COVID-19 hit, people around the world started searching for information about the disease and its symptoms. Emergency hotlines were flooded with phone calls, so plenty of people were left without any help. The US Centers for Disease Control and Prevention (CDC) decided to create its own conversational AI assistant called Clara to let everyone find out more about coronavirus, check their symptoms, and see whether they should be tested for COVID-19. The bot helped increase people's awareness of the disease and of what to do if a person thinks they have signs of the condition.

Conversational AI in travel

Conversational AI is the future of highly efficient traveling. Customers can communicate with chatbots to find inspiration on where to go on a vacation, complete hotel and airline bookings, and pay for it all.

Let’s take, for example, virtual host Edward from Edwardian Hotels London. This cool AI chatbot uses text messaging to provide hotel guests with personalized information and assistance. He can find the nearest vegetarian restaurant if you wish or point you to where the towels are in your room.

And there are a lot of other types of chatbots designed specifically for the travel and hospitality domain. You can learn more on the topic in our dedicated article explaining how to build a bot that travelers will love.

Conversational AI in banking

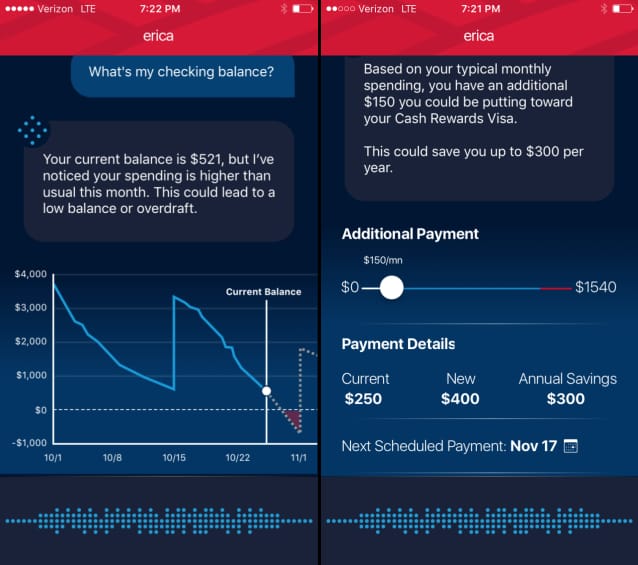

In 2018, Bank of America introduced its AI-powered virtual financial assistant named Erica.

Chatbot displaying account balance and offering advice. Source: ChatbotGuide.org

The smart banking bot helps customers with simple processes like viewing account statements, paying bills, receiving credit history updates, and seeking financial advice. During the third quarter of 2019, digital clients of Bank of America had logged into their accounts 2 million times and had made 138 million bill payments. By the year’s end, Erica was reported to have 19.5 million interactions and achieved a 90% efficiency in answering users’ questions.

Keep in mind that these are just three examples out of thousands. In reality, conversational AI applications can be found in every domain.

How to approach conversational AI: Platforms to consider

There are quite a few conversational AI platforms to help you bring your project to life. We’ll take a look at the most popular ones.

Please note: The off-the-shelf solutions we’re reviewing in this section are for general understanding of the market, not for promotional purposes.

IBM Watson Assistant

Pioneering the domain, IBM offers an AI platform called Watson Assistant that enables developers and business users to collaborate and build conversational solutions. It is feature-rich and integrates with various existing content sources and applications. IBM claims it is possible to create and launch a highly intelligent virtual agent in an hour without writing code.

Watson Assistant is known as a leading conversational AI platform for large enterprises. For example, Humana, one of the largest insurance providers in the US, fields thousands of routine health insurance queries per day with a voice-enabled, self-service conversational AI developed with Watson. This Voice Agent serves callers at one-third of the cost of Humana’s previous system and doubles the call containment rate.

Rasa

Rasa is an open-source framework for creating conversational AI. It originally consisted of two Python libraries, Rasa NLU and Rasa Core, which have since been merged into a single package. The NLU part enables an assistant to understand what a user means in terms of intent and to extract details from user input. The Core part is an ML-based dialogue management system that decides what the assistant should do next. Rasa allows you to build and run conversational AI locally, use your own data, and tune models to your specific use cases.

Google Dialogflow

Google also has a wide array of software services and prebuilt integrations in its catalog. Google’s Dialogflow is the primary service used for conversational AI.

At its core, Dialogflow is an NLU platform that helps design and integrate a conversational user interface into your mobile app, web application, device, bot, interactive voice response system, and so on. Dialogflow is set to analyze various user input types and provide responses through text or with synthetic speech.

The platform comes in two editions, each of which has its own agent type, user interface, API, client libraries, and documentation:

- Dialogflow CX is for building large and complex virtual agents; and

- Dialogflow ES is suitable for small and simple agents.

Dialogflow can also perform sentiment analysis of user inputs, which relies on Google’s Natural Language API, to identify whether their attitude is positive, negative, or neutral.

Amazon Lex

Amazon Lex is a fully managed artificial intelligence (AI) service with advanced NLU models to design, build, test, and deploy conversational interfaces in applications. It integrates easily with other AWS cloud-based services as well as external interfaces. Amazon Lex uses the same foundation as Alexa. Amazon’s Alexa is available on more than 100 million devices worldwide and is configurable with more than 100,000 Alexa skills. These skills are usually command interpreters, but you can use Lex to build conversational AIs as well.

Depending on the use case, it may be more sensible to build your own custom conversational AI system without relying on any of the existing solutions. Although this route is harder to implement, it is a good way to ensure that the end result meets all of your desired criteria.

Conversational AI challenges

If you are considering building a conversational AI system, there will be obstacles on your path you have to be ready to overcome.

Language complexity

First and foremost, language is hard. There are lots of different languages, each with its own grammar and syntax. In addition to that, those languages are packed with dialects, accents, sarcasm, and slang that take the complexity of understanding speech to a whole new level. Besides, there are also spelling errors and noise that have to be separated from important signals. These and other factors influence the communication between a human and a machine and are very difficult to deal with.
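Spelling errors are one of the more tractable parts of this problem. As a small sketch, the standard library's `difflib.get_close_matches` can map misspelled tokens onto a known vocabulary before the text reaches the NLU engine. The vocabulary and the 0.8 similarity cutoff are assumptions for the example.

```python
import difflib

# Hypothetical domain vocabulary the assistant cares about.
KNOWN_WORDS = ["weather", "tomorrow", "password", "reset", "forecast", "account"]


def correct_token(token: str, vocabulary=KNOWN_WORDS, cutoff: float = 0.8) -> str:
    """Replace a token with its closest vocabulary word, if one is similar enough."""
    matches = difflib.get_close_matches(token.lower(), vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else token


def normalize(text: str) -> str:
    """Correct each whitespace-separated token independently."""
    return " ".join(correct_token(token) for token in text.split())


print(normalize("wether forcast tomorow"))  # weather forecast tomorrow
```

This only handles near-miss typos in known words; slang, sarcasm, and dialects need far richer models.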

Security and privacy

To provide appropriate responses, your conversational AI needs a lot of data, which makes it prone to privacy and security breaches. Protecting your data and making your system compliant with all required security standards is a difficult yet mandatory task. Conversational AI applications must be designed to ensure the privacy of sensitive data.
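One common precaution is to mask sensitive values before conversation logs are stored or reused for training. The sketch below uses a few illustrative regular expressions; the patterns and labels are assumptions, they will miss many real-world formats, and they are no substitute for a proper compliance review.

```python
import re

# Illustrative patterns only. Order matters: card numbers are masked before
# the broader phone pattern gets a chance to match them.
REDACTION_PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace anything that looks like sensitive data with a placeholder label."""
    for label, pattern in REDACTION_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Email me at jane.doe@example.com or call +1 212 555 0100"))
print(redact("My card is 4111 1111 1111 1111, please update it"))
```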

Technology adoption

While it's not yet everybody's go-to, conversational AI is in use and growing in popularity as people get to know and understand it. There are still challenges to overcome before more people are comfortable using this technology for a wider variety of use cases. Education is important here: Help target audiences who are not familiar with the technology get to know it better.