Many reviews of the Pandas library contradict themselves. It’s easy to find articles that simultaneously praise and criticize Pandas’ documentation. While this doesn’t bother more experienced users much, beginners find it confusing. Without clarity on how the same features that make Pandas great for data analysis can also make it not so great, newcomers may feel put off.

The documentation is just one of several hot topics that need more light shed on them. As we tackle these contradictions, we'll mainly focus on laying out the pros and cons of using Pandas. We will also explore its data structures, applications in businesses, and alternatives.

What is Pandas in Python?

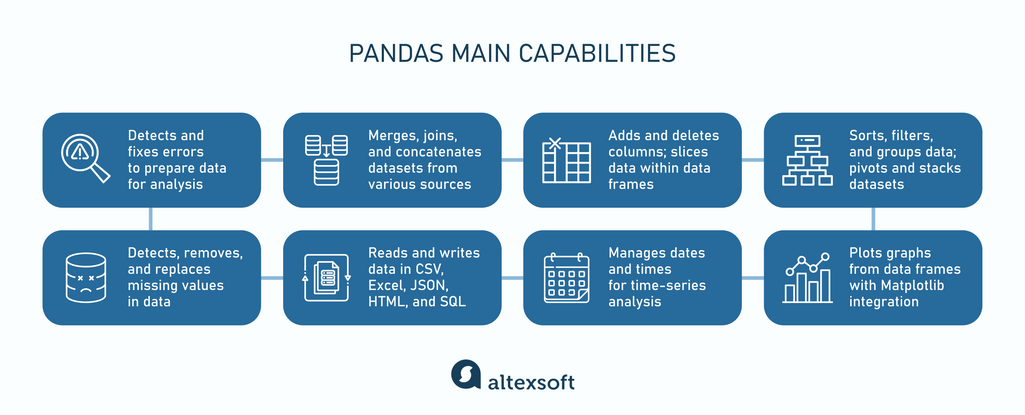

Pandas is an open-source Python library highly regarded for its data analysis and manipulation capabilities. It streamlines the processes of cleaning, modifying, modeling, and organizing data to enhance its comprehensibility and derive valuable insights.

Pandas functions as part of its broader uses in data manipulation

For instance, when handling sales data in a spreadsheet, Pandas enables practitioners to:

- filter data according to specific periods or products;

- calculate the total sales of each product; and

- modify the dataset by adding a new column to depict the percentage of total sales accounted for by each product.
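
Here is a minimal sketch of those three steps. It assumes a small, hypothetical sales table with `date`, `product`, and `amount` columns; the names and figures are invented for illustration.

```python
import pandas as pd

# Hypothetical sales records; column names and values are illustrative only.
sales = pd.DataFrame({
    "date": pd.to_datetime(["2024-01-05", "2024-01-20", "2024-02-03",
                            "2024-02-14", "2024-03-01"]),
    "product": ["Laptop", "Mouse", "Laptop", "Keyboard", "Mouse"],
    "amount": [1200.0, 25.0, 1100.0, 75.0, 30.0],
})

# 1. Filter by period (January and February 2024) or by product.
jan_feb = sales[(sales["date"] >= "2024-01-01") & (sales["date"] < "2024-03-01")]
laptops = sales[sales["product"] == "Laptop"]

# 2. Total sales per product (groupby sorts the product names).
totals = sales.groupby("product", as_index=False)["amount"].sum()

# 3. New column: each product's share of total sales, in percent.
totals["pct_of_total"] = totals["amount"] / totals["amount"].sum() * 100
print(totals)
```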

The name Pandas is catchy thanks to its association with the cute bear. But it’s really a portmanteau created by combining panel and data. Panel data, also called longitudinal data, tracks changes across different variables for the same subjects over multiple time periods.

Pandas 2.2.0: latest release

For over sixteen years, the Pandas library has seen consistent iterations, with the latest update, Pandas 2.2.0, rolled out on January 22, 2024. The release adds several enhancements that lean on the Apache Arrow ecosystem.

PyArrow upgrade. PyArrow-backed data types arrived in earlier releases and were expanded considerably in Pandas 2.0. Pandas 2.2.0 builds on them with new Series.list and Series.struct accessors, which enable more efficient processing of complex data types like lists and structs.

Apache ADBC driver support. Introduction of the ADBC driver made reading data from SQL databases into Pandas data structures faster and more efficient. It’s particularly beneficial for PostgreSQL and SQLite users.

case_when method. The new Series.case_when method, modeled on SQL's CASE WHEN, makes it easier to create new columns based on conditional logic.

Upgrade recommendations. The release notes include instructions for upgrading to Pandas 2.2. These help users take advantage of the latest improvements and prepare for future changes in Pandas 3.0.

Pandas use cases

Reportedly, around 220 companies, including giants like Facebook, Boeing, and Philips, have Pandas in their tech arsenals. Wondering how they use it? Let's dive into the main ways to make the most of the Pandas library.

Data cleaning and preparation. A ton of companies are buried under data from all sorts of places—think interactions with customers, financial transactions, sensor readings, and daily operations logs. They're turning to Pandas to get this data cleaned up and prepped for intense analysis. This includes dealing with missing values, axing duplicates, sifting through the data, and tweaking data types.
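
A minimal sketch of those cleaning steps on a tiny, invented transaction table. The column names and values are hypothetical.

```python
import pandas as pd

# Hypothetical raw export: a duplicated row, missing values,
# and amounts stored as text instead of numbers.
raw = pd.DataFrame({
    "order_id": [101, 102, 102, 103, 104],
    "customer": ["Ann", "Bob", "Bob", None, "Dee"],
    "amount": ["19.99", "5.00", "5.00", "12.50", None],
})

clean = (
    raw.drop_duplicates()                      # axe exact duplicate rows
       .dropna(subset=["customer"])            # drop rows with no customer
       .assign(amount=lambda d: pd.to_numeric(d["amount"]).fillna(0.0))  # text -> float, fill gaps
       .astype({"order_id": "int32"})          # tweak a column's data type
)
print(clean)
```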

Exploratory Data Analysis (EDA). EDA is a technique to explore and summarize the main traits of a dataset visually. It's super useful for getting a grip on what your data is really saying, identifying any outliers, and sparking new ideas. Pandas provides functions for summarizing data, computing descriptive statistics, and visualizing distributions. Companies are using these tools to sift through massive datasets fast, spot trends, and figure out where to take their strategies next.
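
A minimal EDA sketch on hypothetical daily order counts. `describe()` gives the descriptive statistics. The outlier rule used here, 1.5 times the interquartile range, is a common rule of thumb and not something Pandas prescribes.

```python
import pandas as pd

# Hypothetical daily order counts, including one obvious spike.
orders = pd.Series([52, 48, 50, 55, 47, 51, 49, 180, 53, 50], name="orders")

summary = orders.describe()              # count, mean, std, min, quartiles, max
q1, q3 = orders.quantile([0.25, 0.75])   # first and third quartiles
iqr = q3 - q1

# Flag values more than 1.5 * IQR beyond the quartiles.
outliers = orders[(orders < q1 - 1.5 * iqr) | (orders > q3 + 1.5 * iqr)]
print(summary)
print(outliers)
```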

Feature engineering for machine learning. Feature engineering is crucial for making accurate predictions. Pandas helps by allowing you to extract, modify, and select data properties or features to power machine learning models. Take recommendation systems, for instance, where nailing the right features determines how on-point your predictions are. Big techs like Netflix and Spotify rely on Pandas for slicing and dicing important individual characteristics to understand user preferences and suggest new movies or music they might enjoy.
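
The sketch below shows what extracting and aggregating features can look like for a recommendation-style task. It uses a hypothetical listening log and is not a description of how any particular company builds its features.

```python
import pandas as pd

# Hypothetical listening log; column names are illustrative assumptions.
log = pd.DataFrame({
    "user_id": [1, 1, 1, 2, 2, 3],
    "genre": ["rock", "jazz", "rock", "pop", "pop", "jazz"],
    "seconds_played": [200, 30, 180, 240, 210, 90],
    "played_at": pd.to_datetime([
        "2024-01-01 08:00", "2024-01-01 21:30", "2024-01-02 09:15",
        "2024-01-01 22:00", "2024-01-03 23:10", "2024-01-02 12:00",
    ]),
})

# Extract a new feature from a timestamp: the hour of day.
log["hour"] = log["played_at"].dt.hour

# Aggregate per user: total listening time and number of plays.
per_user = log.groupby("user_id").agg(
    total_seconds=("seconds_played", "sum"),
    plays=("genre", "count"),
)

# Genre preferences as each user's share of plays per genre.
genre_share = pd.crosstab(log["user_id"], log["genre"], normalize="index")

# One row per user, ready to feed into a model.
features = per_user.join(genre_share)
print(features)
```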

Financial analysis. Financial analysis is a critical aspect of economic research. Economists and analysts sift through data to uncover trends and assess the health of the economy across multiple sectors. They increasingly use Python and Pandas because these tools manage large datasets efficiently. Pandas provides numerous tools, including readers and writers for many file formats, that streamline accessing and altering data. As a result, financial analysts can reach important decisions more swiftly than in the past.
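
A minimal sketch of common financial calculations, using hypothetical closing prices for a single asset: daily returns, cumulative growth, and a moving average.

```python
import pandas as pd

# Hypothetical closing prices for one asset over five trading days.
prices = pd.Series(
    [100.0, 102.0, 101.0, 105.0, 107.1],
    index=pd.to_datetime(["2024-01-02", "2024-01-03", "2024-01-04",
                          "2024-01-05", "2024-01-08"]),
    name="close",
)

daily_return = prices.pct_change()            # p_t / p_(t-1) - 1; first value is NaN
cumulative = prices / prices.iloc[0] - 1      # growth relative to the first day
moving_avg = prices.rolling(window=3).mean()  # 3-day simple moving average

print(pd.DataFrame({"close": prices, "return": daily_return,
                    "cumulative": cumulative, "ma3": moving_avg}))
```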

Time series analysis. Sectors such as retail and manufacturing rely heavily on time series analysis to understand seasonal patterns, predict how demand will change over time, adjust inventory levels, and schedule production more effectively. Pandas supports different time-related concepts and offers comprehensive tools for handling time series data.
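
A minimal time series sketch. It builds two years of synthetic daily sales with an artificial December peak, then resamples them to months, averages them by calendar month to expose seasonality, and smooths the monthly totals with a rolling mean.

```python
import numpy as np
import pandas as pd

# Synthetic daily unit sales for 2022-2023 with a built-in December peak.
days = pd.date_range("2022-01-01", "2023-12-31", freq="D")
units = pd.Series(100 + np.where(days.month == 12, 50, 0), index=days)

monthly = units.resample("MS").sum()                # totals per month (month-start labels)
seasonal = units.groupby(units.index.month).mean()  # average daily units per calendar month
trend = monthly.rolling(window=3).mean()            # 3-month moving average

print(seasonal)
```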

Pandas DataFrames and Series explained

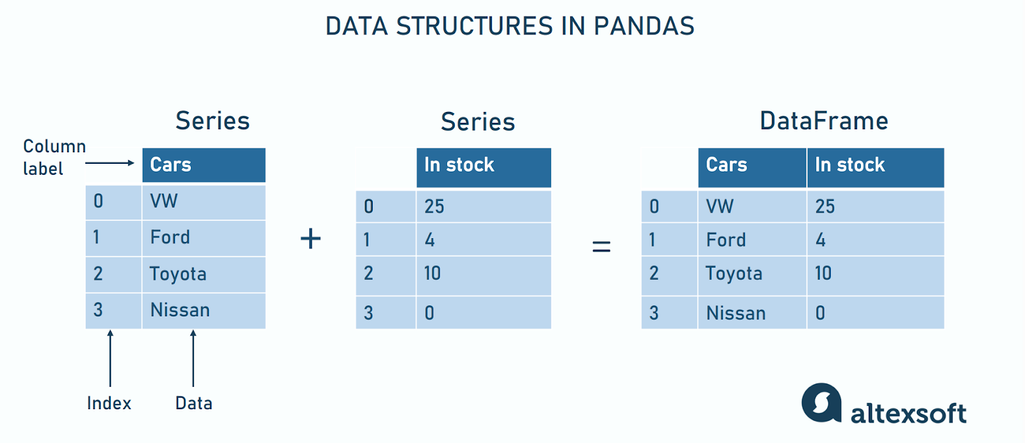

Pandas has two key data structures: DataFrames and Series. Let's break down their features and see how they tick.

Comparing Pandas DataFrames and Series

Dimensionality. A DataFrame is a two-dimensional, table-like structure, much like a spreadsheet. It holds different data types (heterogeneous), which means each column can have its own type. You can change its size by adding or removing rows and columns, and you can change the data inside it (mutable). A Series, on the other hand, could be considered a single column in a spreadsheet. Essentially, a Series is a one-dimensional array that can hold any type of data, but it has a single data type for all its values (homogeneous). It’s great for lists of prices or names.

Data structure. DataFrames can be thought of as a container for multiple Series objects that allows for the representation of tabular data with rows and columns. A Series represents a single column within a DataFrame. It does not have the concept of rows and columns on its own but rather a single array of data with indices.
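
A small illustration of these differences, using a made-up product table. It shows that selecting one column of a DataFrame returns a Series, and that the two structures differ in dimensions and in how they store dtypes.

```python
import pandas as pd

# A small, hypothetical product table: each column has its own dtype.
df = pd.DataFrame({
    "name": ["pen", "notebook", "stapler"],
    "price": [1.5, 3.25, 12.0],
    "in_stock": [True, False, True],
})

price = df["price"]          # selecting one column returns a Series
print(df.ndim, price.ndim)   # 2 1
print(df.dtypes)             # one dtype per column (heterogeneous table)
print(price.dtype)           # float64: a single dtype for the whole Series

df["qty"] = [10, 0, 4]       # DataFrames are size-mutable: add a column
df.loc[0, "price"] = 1.75    # ...and value-mutable: change a cell
```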

Data storage. Because it is a two-dimensional structure, a DataFrame is ideal for datasets where each row represents an observation and each column represents a variable. A Series keeps data in a linear format, which is suitable for storing a sequence of values or a single variable's data across different observations.

Index. A DataFrame has both row and column indices for more complex operations like selecting specific rows and columns, pivot tables, etc. A Series only has a single index, which corresponds to its rows. A Series index allows for fast lookups and alignment.
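
A short sketch of both kinds of indexing, with hypothetical labels. The DataFrame is addressed by row and column labels, and Series arithmetic matches values by index label rather than by position.

```python
import pandas as pd

# Hypothetical monthly figures labelled by row and column.
df = pd.DataFrame(
    {"revenue": [120, 95, 140], "cost": [80, 70, 90]},
    index=["Jan", "Feb", "Mar"],
)

print(df.loc["Feb", "cost"])                # row label + column label -> 70
print(df.loc[["Jan", "Mar"], ["revenue"]])  # several rows, one column

# A Series has a single index; arithmetic aligns on labels, not positions.
a = pd.Series([1, 2, 3], index=["x", "y", "z"])
b = pd.Series([10, 20, 30], index=["z", "y", "x"])
print(a + b)  # aligned by label: x=31, y=22, z=13
```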

Functionality. A DataFrame supports a wider range of operations for manipulating data, including adding or deleting columns, merging, joining, grouping by operations, and more. Series operations are generally limited to element-wise transformations and aggregations. Though powerful, the scope is narrower compared to a DataFrame.

Data input flexibility. Both DataFrame and Series accept a variety of data inputs including:

- ndarray: a NumPy array, either a regular array whose elements all share one data type (homogeneous) or a structured array whose fields can have different data types (heterogeneous).

- Iterable: any iterable object, like a list or a tuple.

- dict: a Python dictionary. Just as you look up words in a dictionary to find their meanings, in a Python dictionary you look up 'keys' to find their 'values'. The values in the dictionary can be Series, arrays, constants, dataclass instances, or list-like objects.

When you build a DataFrame from a dict, the keys become column labels, and each key maps to that column's data (a Series, array, constant, or dataclass instance). When you build a Series from a dict, the keys become the index labels and the values become the data points.
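
A minimal sketch of dict input for both structures, using made-up fruit data. It includes a constant value, which Pandas broadcasts to every row.

```python
import pandas as pd

data = {"apples": [3, 5], "pears": [2, 7]}
df = pd.DataFrame(data)                    # dict keys become column labels
s = pd.Series({"apples": 3, "pears": 2})   # dict keys become index labels

# A constant in the dict is broadcast to every row.
flags = pd.DataFrame({"fruit": ["apple", "pear"], "in_season": True})

print(df.columns.tolist())   # ['apples', 'pears']
print(s.index.tolist())      # ['apples', 'pears']
print(s["pears"])            # 2
```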

Data type specification (dtype). Both let you decide what type of data they should hold through the dtype parameter. If you don't specify one, Pandas infers the type from your input data.
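
A quick comparison of inferred and explicit dtypes. The values are arbitrary.

```python
import pandas as pd

inferred = pd.Series([1, 2, 3])                   # no dtype given: inferred as int64
explicit = pd.Series([1, 2, 3], dtype="float32")  # dtype requested explicitly
mixed = pd.Series([1, "two", 3.0])                # mixed values fall back to object
table = pd.DataFrame({"a": [1, 2], "b": [3, 4]}, dtype="float64")

print(inferred.dtype, explicit.dtype, mixed.dtype)  # int64 float32 object
print(table.dtypes.tolist())
```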

Naming. A DataFrame doesn't take a name because it's a big table meant to contain many columns of different data types. You can give a Series a name, which is handy if you turn it into a DataFrame column later (the name becomes the column label) or just want to keep track of what your data represents.
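
A tiny example of a Series name carrying over into a DataFrame column. The price data is hypothetical.

```python
import pandas as pd

prices = pd.Series([1.5, 3.25, 12.0], name="price")
frame = prices.to_frame()              # the Series name becomes the column label
renamed = prices.rename("unit_price")  # a scalar argument to rename() sets the name

print(frame.columns.tolist())  # ['price']
print(renamed.name)            # unit_price
```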

Copying behavior (copy). When creating either a DataFrame or a Series, you can choose to copy the input data (making it safe to change later without messing up your original data) or to use it directly (which can be faster but riskier). DataFrames copy by default when built from a dictionary (to keep things safe), but not when built from another DataFrame or a 2D array (to save time). For efficiency, Series don't copy their input unless you tell them to.
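
A minimal sketch of the safe option. With `copy=True`, edits to the Series never reach the source NumPy array. The `copy=False` line is left without any claim about its result, because whether memory is shared can differ between pandas versions, especially once Copy-on-Write is enabled.

```python
import numpy as np
import pandas as pd

arr = np.array([1.0, 2.0, 3.0])

safe = pd.Series(arr, copy=True)  # independent copy of the data
safe.iloc[0] = 99.0               # editing the Series...
print(arr[0])                     # ...leaves the original array untouched: 1.0

# With copy=False the Series may share memory with `arr`. That avoids a copy,
# but changes can leak between the two; the exact behavior depends on the
# pandas version and on whether Copy-on-Write is enabled.
fast = pd.Series(arr, copy=False)
```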

Pandas advantages

We've set forth a key goal for this discussion: to demystify the mixed opinions surrounding the pros and cons of using Pandas. Let's begin with the perks.

Provides flexible data structures

Pandas provides adaptable data structures with powerful tools for indexing, selecting, and manipulating data, which sidesteps the need for complex programming.

You can easily convert CSV, Excel, SPSS (Statistical Package for the Social Sciences) files and SQL databases into DataFrames. After analyzing your data and extracting insights, you have the option to export your DataFrames back into familiar formats, which makes it simpler to share your discoveries or incorporate them into other software.
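
A minimal round trip, sketched with an in-memory CSV instead of a real file. Pandas also ships `read_excel`, `read_spss`, and `read_sql`, but those need optional packages (for example openpyxl or pyreadstat) or a database connection, so they are left out here.

```python
import io

import pandas as pd

# An in-memory CSV stands in for a real file path such as "sales.csv".
csv_text = "product,units\nA,10\nB,4\n"
df = pd.read_csv(io.StringIO(csv_text))

df["units_x2"] = df["units"] * 2  # some analysis step

out = io.StringIO()
df.to_csv(out, index=False)       # export back to CSV without the index column
print(out.getvalue())
```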

Enhances performance with big datasets

Pandas handles large datasets well, as long as they fit in memory. It performs tasks such as sorting, filtering, and merging data swiftly through vectorized operations.

Unlike the conventional Python approach of processing data item by item in slow loops, Pandas applies each operation to an entire column at once and runs the underlying loop in optimized compiled code, largely through NumPy. This saves time and reduces the complexity of large-scale data projects.
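
The contrast can be sketched as follows, with an arbitrary 20 percent markup on a synthetic price column. Both versions produce the same numbers. Timing them, for example with Python's `timeit` module, typically shows the vectorized one to be much faster, though exact figures depend on the machine.

```python
import numpy as np
import pandas as pd

prices = pd.Series(np.arange(1, 100_001, dtype="float64"))

# Item by item: a Python-level loop touches every value separately.
with_tax_loop = pd.Series([p * 1.2 for p in prices])

# Vectorized: one expression over the whole column; the loop runs in compiled code.
with_tax_vec = prices * 1.2
```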

Smoothly integrates with other Python libraries

Pandas works in harmony with a broad spectrum of Python libraries, allowing users to draw on the strengths of each for diverse tasks. For example, its compatibility with NumPy enables the manipulation of numeric data within Pandas structures.
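
A small sketch of that interplay, with arbitrary numbers. NumPy functions accept Pandas objects directly, and `to_numpy()` hands a plain array to NumPy-only code.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"a": [1.0, 4.0, 9.0], "b": [16.0, 25.0, 36.0]})

roots = np.sqrt(df)              # NumPy ufuncs work on DataFrames and keep the labels
matrix = df.to_numpy()           # plain 2-D ndarray for NumPy-only routines
col_means = matrix.mean(axis=0)  # column means computed by NumPy
print(roots)
```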

It also aligns well with SciPy for scientific computing and scikit-learn for machine learning, serving as a connective tissue between these specialized libraries and streamlining the data science process for users at all levels of expertise.

Offers an intuitive and readable design

Pandas is widely acclaimed for its accessible syntax, which is the set of rules that govern how code must be structured for a computer to interpret and execute it accurately. When syntax is clear, expressive, and resembles natural language, it becomes approachable for a broader spectrum of individuals, not solely those with a background in programming and data science.

For instance, with Pandas, tasks such as selecting particular columns, filtering rows under specific conditions, merging different datasets, and applying functions across data are achievable through simple commands.
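
A minimal sketch of those four tasks on two hypothetical tables (orders and customers). Each task takes one line.

```python
import pandas as pd

orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4],
    "customer_id": [10, 20, 10, 30],
    "amount": [250.0, 40.0, 90.0, 310.0],
})
customers = pd.DataFrame({"customer_id": [10, 20, 30],
                          "region": ["EU", "US", "EU"]})

subset = orders[["order_id", "amount"]]             # select particular columns
big = orders[orders["amount"] > 100]                # filter rows by a condition
joined = orders.merge(customers, on="customer_id")  # merge two datasets
joined["amount_k"] = joined["amount"].apply(lambda x: x / 1000)  # apply a function
print(joined)
```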

Maintains comprehensive documentation for users

The developers of the Pandas library created extensive reference documentation for developers and analysts who want to understand how to use Pandas effectively. It covers everything from basic concepts and installation to advanced features and best practices. The documentation is also continually expanded, most recently for Pandas 2.2.0.

Comes with robust graphical support

Even though Pandas isn't a visualization library per se, it integrates with Matplotlib and Seaborn to offer a robust toolkit for visual data exploration. A few lines of code can produce histograms, scatter plots, or intricate time-series visualizations, aiding in identifying trends, outliers, or patterns in data.

Boasts a vibrant user community

A major strength of Pandas is its active and supportive community. With Python being used by over fifteen million developers, a good chunk of them likely rely on Pandas, making for one of the most vibrant communities on sites like Stack Overflow and GitHub.

At the time of this writing, there were over 285,000 questions tagged "Pandas" on Stack Overflow and about 138,000 Pandas-related repositories on GitHub.

These forums are great for asking questions, exchanging tips, and collaborating on projects. Such a large, engaged community also plays a role in driving the library's development forward through bug fixes, feature suggestions, and code contributions.

Streamlines data cleaning and wrangling

Data cleaning and wrangling constitute critical phases in the data preparation process for analysis. But they also consume a significant portion of a data scientist's efforts, sometimes as much as 80 percent. Pandas streamlines essential tasks in the detection and rectification of errors and inconsistencies in datasets to ensure their accuracy and dependability.
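
One common inconsistency is the same category spelled several ways. A minimal sketch of fixing that, using hypothetical survey answers:

```python
import pandas as pd

# Hypothetical survey answers with inconsistent spacing, case, and spelling.
country = pd.Series([" usa", "USA", "U.S.A.", "Germany ", "germany", None])

normalized = (
    country.str.strip()               # remove stray whitespace
           .str.upper()               # unify letter case
           .replace({"U.S.A.": "USA"})  # map known variants to one spelling
)
print(normalized.value_counts(dropna=False))
```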


Pandas disadvantages

Here, we'll explain how the very strengths of this tool can sometimes double as its weaknesses.

Steep learning curve

Pandas is easy to use because it's intuitive and mimics Excel in some ways. It lets you manipulate data through simple commands. But it's also tricky because it has lots of functions and ways to do things.

As you dive deeper, you'll run into more complex operations that require understanding its nuances. So, for many, the learning curve is steep: straightforward to start, challenging to master.
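
Here is a small illustration of "lots of ways to do things", using a made-up table. The three selections below return the same result. The first pattern, chained indexing, is fine for reading but is a classic source of `SettingWithCopyWarning` when used for assignment in pandas 2.x. Subtleties like this make Pandas harder to master.

```python
import pandas as pd

df = pd.DataFrame({"city": ["Oslo", "Rome", "Lima"], "temp": [3, 18, 22]})

# Three equivalent ways to get the cities warmer than 10 degrees:
a = df[df["temp"] > 10]["city"]      # boolean mask, then column (chained indexing)
b = df.loc[df["temp"] > 10, "city"]  # .loc with a mask and a column label
c = df.query("temp > 10")["city"]    # string expression
```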

Poor documentation structure

Pandas' documentation is extensive as it covers a wide range of functionalities and use cases. It’s ranked "poor" by users who find it hard to navigate or lacking in practical examples for specific problems.

There's a wealth of information available, but not everyone finds it accessible or user-friendly, especially when trying to apply the concepts to real-world scenarios.

API inconsistencies

As Pandas has evolved, it’s accumulated some inconsistencies in its API (Application Programming Interface), which can lead to user confusion. Inconsistencies might manifest in different naming conventions, parameter names, or behavior across similar functions.

For example, the syntax for merging DataFrames may differ subtly from the syntax for concatenating them, despite the operations being related. Such discrepancies can make the library less intuitive and require users to frequently consult the documentation to ensure they are using functions correctly.
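
A minimal sketch of that difference, using two hypothetical quarterly tables. `merge` is a DataFrame method (also available as `pd.merge`) that matches rows on key columns through `on=`. `pd.concat` is a top-level function that takes a list of objects and stacks them along an `axis`.

```python
import pandas as pd

q1 = pd.DataFrame({"store": ["A", "B"], "sales": [100, 80]})
q2 = pd.DataFrame({"store": ["A", "B"], "sales": [120, 90]})

# merge: match rows on a key column; clashing column names get suffixes.
side_by_side = q1.merge(q2, on="store", suffixes=("_q1", "_q2"))

# concat: stack along an axis (rows by default); there is no `on=` parameter.
stacked = pd.concat([q1, q2], keys=["q1", "q2"])
print(side_by_side)
print(stacked)
```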

Incompatibility with massive datasets and unstructured data

Pandas isn't a one-size-fits-all solution for all data types. It does handle large datasets, but it keeps all data in memory, so once you pass roughly 2 to 3 GB, operations can start to drag or clog your memory. So, while Pandas can deal with big data, massive datasets might not give you the best performance. For those kinds of tasks, you'll need to look for more efficient alternatives.
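
Two standard Pandas workarounds help when data gets close to the memory limit, though neither replaces an out-of-core tool. The sketch below uses a synthetic in-memory CSV in place of a genuinely large file. The first workaround reads the file in chunks and keeps only running totals. The second stores repeated strings as the category dtype.

```python
import io

import pandas as pd

# Synthetic CSV standing in for a file too large to load comfortably.
csv_text = "region,amount\n" + "north,1\nsouth,2\n" * 50_000

# 1. Stream the file in chunks and keep only running totals in memory.
totals = {}
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=20_000):
    for region, amount in chunk.groupby("region")["amount"].sum().items():
        totals[region] = totals.get(region, 0) + int(amount)

# 2. Repeated strings use far less memory as the category dtype.
df = pd.read_csv(io.StringIO(csv_text))
as_object = df["region"].astype(object).memory_usage(deep=True)
as_category = df["region"].astype("category").memory_usage(deep=True)
print(totals, as_object, as_category)
```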

Another way to think of it: Pandas rocks when it comes to dealing with neat, tabular data, but it's not your best bet for formats such as images, audio, or unstructured text. It is optimized for tables, so trying to wrangle unstructured data with Pandas could end up being more trouble than it's worth, potentially slowing you down or boxing you in.

Dependency on other Python libraries

Pandas integrates well with other libraries, like NumPy, Matplotlib, and SciPy. However, for very complex tasks or specialized analyses, these additional tools become necessary, which some view as a dependency issue. But this is also arguably a perspective problem rather than a real one: integration for versatility versus dependency for advanced tasks.

Pandas vs NumPy, PySpark, and other alternatives

You can replace or combine Pandas with other tools. To understand when to do this, let's compare them in specific cases.

How NumPy, PySpark, Dask, Modin, Vaex, R libraries stack up against Pandas

NumPy: For numerical computing

NumPy is the fundamental package for scientific computing in Python. It is applicable when you need heavy lifting in numerical computing as it speeds up operations with its array magic, slicing through data faster than Pandas for certain tasks.

If you compare NumPy vs Pandas, the former is more lightweight and packs a punch for array operations, making it efficient for high-level mathematical functions that operate on arrays and matrices. NumPy doesn't eat up excess memory, so it's great when you're running tight on resources. However, it falls short when handling non-numerical data types and lacks the convenient data manipulation tools that Pandas offers.

PySpark: For distributed big data processing

PySpark is the Python API for Apache Spark, an open-source framework and set of libraries for crunching big data on the fly. It is one of the most efficient Python libraries for handling big data. PySpark’s made for speed and scales up easily. It makes light work of massive datasets that would bog down Pandas.

PySpark brings the power of distributed computing to your doorstep so you can churn through data across multiple machines. This means you can tackle big data projects that Pandas can’t. However, it’s heavyweight compared to Pandas.

It requires more setup and has a steeper learning curve, so it's definitely not the best fit for small-scale data wrangling, where Pandas' simplicity and ease of use win out. But when you're eyeing projects with voluminous datasets, such as analyzing web-scale data or running complex algorithms over large clusters, PySpark is preferred.

Dask: For parallel computing

Dask is a Python library used to break down big data into manageable chunks, making it easier to process without choking up your computer. Pandas is meant to run on a single system and has trouble with huge datasets that don't fit in memory, whereas Dask is designed for parallel and distributed computing and can scale out workloads across numerous machines.

Nonetheless, it can be a bit trickier to get the hang of compared to Pandas, especially when it comes to the simplicity and directness Pandas offers for data manipulation.

Modin: For speedier data manipulation

Modin is a DataFrame library for datasets from 1MB to 1TB+. It comes into play when you want to supercharge your DataFrame operations. It's like putting a turbocharger on Pandas, speeding up data manipulation tasks by distributing them across all your CPU cores. Perhaps the best part is that it's compatible with the Pandas API, so you can switch over without having to learn a whole new library.

While it's great for turbocharging operations, Modin might still trip up on very specific or advanced Pandas functionalities that aren't fully optimized for parallel processing yet. Modin can be truly helpful when you're working with large datasets on a single machine and need to cut down on processing time without stepping into the more complex territory of distributed computing frameworks like Dask or Spark.

Vaex: For larger-than-memory DataFrames

Vaex is a high-performance Python library for lazy Out-of-Core DataFrames. These are DataFrames that handle data too big to fit in your computer's memory (RAM). Rather than load everything at once, they read and process data lazily, as needed, making it possible to work with huge datasets on a normal computer.

Vaex calculates statistics such as mean, sum, count, standard deviation, etc., on an N-dimensional grid for more than a billion (10^9) samples/rows per second. Vaex lets you sift through billions of rows almost instantly, a feat Pandas can't match. It enables you to explore and visualize massive tabular datasets.

On the flip side, it doesn't offer the same convenience or range of data manipulation functionality as Pandas.

R Libraries: For statistical analysis and visualization

R Libraries have a strong focus on statistical analysis, data modeling, and data visualization, making them a go-to for researchers and statisticians.

Compared to Pandas, they have a more extensive range of statistical methods and graphing options right out of the box. However, they might not be as straightforward for general data manipulation tasks. Functioning in memory, R is also not the best choice for big data projects.

How to get started with Pandas

Once you've grasped the fundamentals of Python, learning Pandas is straightforward. Here's a starting point for using the library.

Pandas documentation. The first and most comprehensive resource you should look into is the official Pandas documentation. It is very detailed and covers all the functionalities of the library, including tutorials and examples.

Practice platforms. Kaggle is not only a platform for data science competitions; it’s a place to learn and practice data science. It has a Notebooks section (formerly called "Kernels") where you can write and execute Python code in the browser, with many examples using Pandas on real datasets.

Communities. Stack Overflow is a Q&A community where you can ask questions and find answers to specific Pandas problems. Alternatively, there’s the Pandas GitHub repository, which could help if you’re interested in the development side of Pandas or want to contribute.


Books. Check out Python for Data Analysis by Wes McKinney, who is also the creator of Pandas. It’s useful for getting the hang of Pandas along with other tools in the Python data analysis ecosystem, such as IPython, NumPy, and Matplotlib. The book lays out interesting use cases and handy tips. Or you might want to go for Pandas for Everyone: Python Data Analysis by Daniel Y. Chen. It's beginner-friendly, makes data analysis with Pandas and Python easier to get into, and focuses on real-world examples.

Online courses and certification. DataCamp offers interactive Python courses on data analysis with Pandas. For example, Pandas Foundations and Data Manipulation with Pandas are good courses to start with. Likewise, Coursera provides several courses that teach Pandas for data science and analysis. The Python Data Science Toolbox part of the IBM Data Science Professional Certificate is another option.

This post is a part of our “The Good and the Bad” series. For more information about the pros and cons of the most popular technologies, see the other articles from the series:

The Good and the Bad of Terraform Infrastructure-as-Code Tool

The Good and the Bad of the Elasticsearch Search and Analytics Engine

The Good and the Bad of Kubernetes Container Orchestration

The Good and the Bad of Docker Containers

The Good and the Bad of Apache Airflow

The Good and the Bad of Apache Kafka Streaming Platform

The Good and the Bad of Hadoop Big Data Framework

The Good and the Bad of Snowflake

The Good and the Bad of C# Programming

The Good and the Bad of .Net Framework Programming

The Good and the Bad of Java Programming

The Good and the Bad of Swift Programming Language

The Good and the Bad of Angular Development

The Good and the Bad of TypeScript

The Good and the Bad of React Development

The Good and the Bad of React Native App Development

The Good and the Bad of Vue.js Framework Programming

The Good and the Bad of Node.js Web App Development

The Good and the Bad of Flutter App Development

The Good and the Bad of Xamarin Mobile Development

The Good and the Bad of Ionic Mobile Development

The Good and the Bad of Android App Development

The Good and the Bad of Katalon Studio Automation Testing Tool

The Good and the Bad of Selenium Test Automation Software

The Good and the Bad of Ranorex GUI Test Automation Tool

The Good and the Bad of the SAP Business Intelligence Platform

The Good and the Bad of Firebase Backend Services

The Good and the Bad of Serverless Architecture