The speech and voice recognition technology market has been booming lately, with its value expected to grow from $9.4 billion in 2022 to $28.1 billion by 2027. This surge highlights the growing demand for Automatic Speech Recognition (ASR) systems in various industries. As more businesses realize the benefits of ASR, it's crucial to understand how these systems work and how to approach the selection process.

This article will explain speech recognition, how it functions, and the most common use cases. It will also outline key factors to consider when selecting the right system, explain the testing process, and review five popular tools. We’ll provide practical advice and insights based on our experience in choosing and integrating an ASR system for our client—an agency specializing in formal meeting reporting.

What is speech recognition?

Speech recognition, also known as automatic speech recognition (ASR), computer speech recognition, or speech-to-text, refers to the AI-driven capability of a program to recognize and interpret human speech and convert it into written text.

This advancement simplifies many tasks by eliminating the need for manual typing. Speech recognition also creates opportunities for hands-free communication and improved accessibility of digital devices for individuals with mobility impairments.

Speech recognition vs. voice recognition

Speech recognition is often confused with voice recognition, so let’s clarify the difference.

Voice recognition focuses on identifying and distinguishing individual voices or speakers based on unique vocal characteristics, such as pitch and tone. It aims to recognize the speaker's identity rather than the specific spoken words. Voice recognition systems are commonly employed in security applications, where an individual's voice is a form of identification. These systems analyze the unique vocal patterns of a person's voice to verify their identity and grant access to secure facilities or to unlock a device.

Speech recognition’s primary purpose is to understand and transcribe spoken language rather than to identify individual voices. This technology has various applications, such as virtual assistants, dictation software, and automated transcription services. The next section will discuss the use cases of speech recognition in more detail.

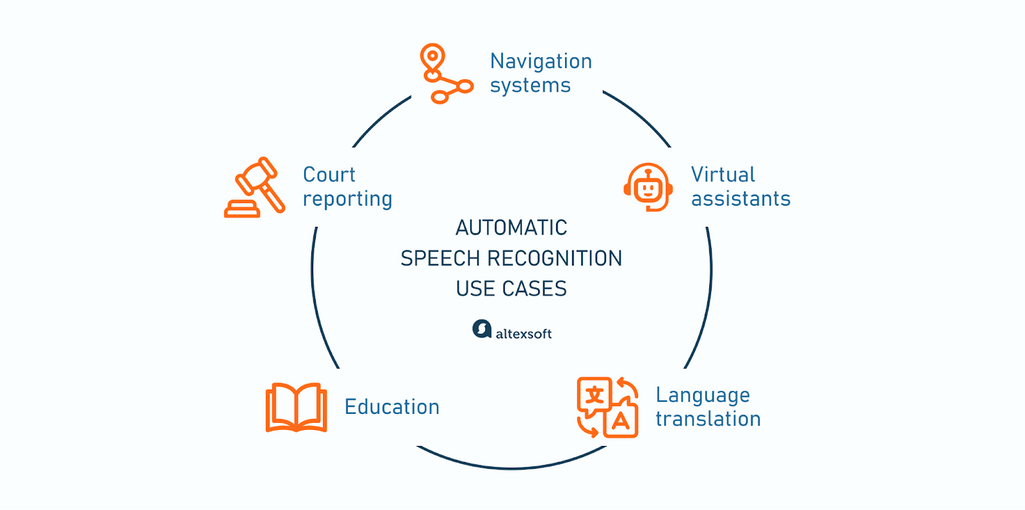

Speech recognition use cases

Speech recognition is used in various industries to save time and enhance convenience. Here are some of the most common use cases.

Automatic speech recognition use cases

Navigation systems. Speech recognition software enhances driver safety and convenience by enabling hands-free interaction with a vehicle. Drivers can use voice commands to control various functions without diverting their attention from the road or taking their hands off the wheel. For example, instead of manually inputting addresses or searching for locations on a touchscreen interface, drivers can simply speak their commands aloud.

Virtual assistants. Voice-activated personal assistants, such as Siri, Google Assistant, and Amazon Alexa, utilize speech-to-text technology to interpret spoken commands or user queries. For example, you may ask to find nearby restaurants, set reminders, call someone, or play a song. These virtual assistants streamline tasks and provide quick access to information, simplifying daily activities.

Language translation. ASR is an integral part of automated translation systems enabling people to effectively communicate across language barriers, whether in travel, business, or personal interactions. After ASR converts a user’s speech into text, the machine translation module analyzes the input to generate the translation. Then, the speech synthesis module takes the translated text and turns it into spoken words. Based on a corpus of speech data in the target language, the module matches the text with the right pronunciation and intonation, selecting appropriate waveforms (or spectrograms) from the database.

Education. When using speech recognition for language instruction, learners speak into a microphone, practicing pronunciation of words or phrases in the target language. The learning platform (e.g., Duolingo) then determines the accuracy of the learner's pronunciation, comparing it to the correct patterns stored in its database.

Court reporting. Traditionally, court reporters capture words spoken during proceedings with stenotype machines or shorthand methods (abbreviated writing techniques). Another approach is to make an audio record of hearings and convert it into text later on. ASR can aid in both scenarios, dramatically reducing manual efforts, cutting time and costs, and minimizing the probability of errors.

The problem of accuracy became especially acute during COVID-19, with the transition to virtual courtrooms. In traditional settings, court reporters not only listen to proceedings but also benefit from visual cues, such as speaker gestures or facial expressions, which enhance the understanding of words. However, in virtual environments like Zoom, these visual cues may be limited or absent, leaving stenographers to rely solely on auditory information and increasing the likelihood of mistakes in transcription.

Now that we've discussed ASR's applications and the problems it addresses, let’s talk about how this technology works.

How does speech recognition work?

Speech recognition systems analyze audio input, identifying phonetic patterns and linguistic structures to transcribe spoken words into written text. Let’s break down this process.

How speech recognition works

Recording audio inputs. Audio input is any sound that is captured by an audio recording device. When a person speaks into a microphone, the sound waves produced by their voice are considered audio input.

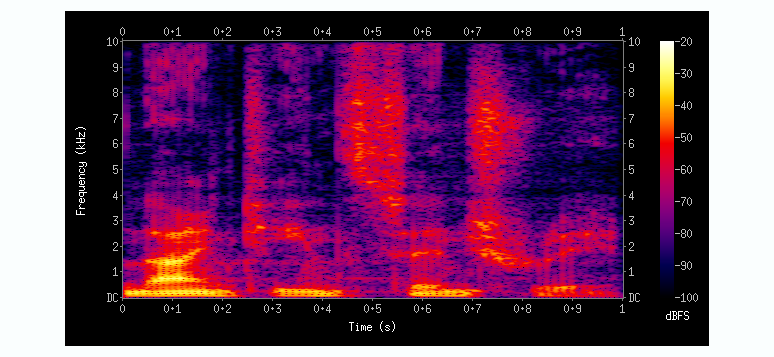

Digitizing acoustic signals. The captured sound is converted into a spectrogram, which is a visual representation of the frequencies. The spectrogram displays the intensity of each frequency as it changes over the duration of the sound.

An example of a spectrogram. Source: Wikipedia
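To make the digitizing step more concrete, here is a minimal sketch of how a spectrogram could be computed. It uses only the Python standard library and a naive discrete Fourier transform, which is far slower than the FFT routines real systems rely on. The sample rate, frame size, hop size, and the synthetic 1,000 Hz test tone are all assumptions chosen for illustration.

```python
import cmath
import math

SAMPLE_RATE = 8000   # samples per second (assumed for this example)
FRAME_SIZE = 64      # samples per analysis frame
HOP = 32             # step between consecutive frames

def dft_magnitudes(frame):
    """Return the magnitude of each frequency bin (first half of the DFT)."""
    n = len(frame)
    mags = []
    for k in range(n // 2):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc))
    return mags

def spectrogram(samples, frame_size=FRAME_SIZE, hop=HOP):
    """Split the signal into overlapping frames and compute one spectrum per frame."""
    # A Hann window softens frame edges and reduces spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / (frame_size - 1))
              for i in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + i] * window[i] for i in range(frame_size)]
        frames.append(dft_magnitudes(frame))
    return frames  # indexed as frames[time_index][frequency_bin]

# Synthetic input: a pure 1,000 Hz tone instead of recorded speech.
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(512)]
spec = spectrogram(tone)

# The strongest bin of the first frame reveals the dominant frequency.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_SIZE
print(peak_hz)  # 1000.0 for this test tone
```

Each row of `spec` is one time slice and each column is one frequency band, which is the same grid a spectrogram image displays with color standing in for intensity.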

Analyzing acoustic features and detecting phonemes in a spectrogram. Phonemes are units of sound that form the building blocks of speech. For example, the word "cat" consists of three phonemes: /k/, /æ/, and /t/. Each phoneme corresponds to a specific sound, and together, they create the auditory pattern that represents the word "cat." Speech recognition systems identify acoustic features associated with each phoneme by breaking the spectrogram into smaller segments.

Acoustic features are the measurable properties of sound that help differentiate one phoneme from another, such as frequency, duration, and intensity. For example, consider the pronunciation of the consonants "p" and "b." While both sounds are produced by closing the lips and then releasing them, they differ in their acoustic properties: "b" is voiced, meaning the vocal cords vibrate while it is produced, whereas "p" is voiceless.

By analyzing these acoustic features, the ASR system can accurately classify and recognize the specific phonemes in the audio input.

Decoding sequences of phonemes into written words. Speech recognition systems leverage Natural Language Processing (NLP) techniques to map sequences of phonemes into meaningful units, such as words, phrases, and sentences, eventually converting spoken words into written text.
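As a rough illustration of the decoding step, the toy sketch below maps a phoneme sequence to words with a greedy longest-match lookup in a tiny pronunciation dictionary. This is a deliberate simplification: production ASR systems score many competing hypotheses with probabilistic acoustic and language models. The `LEXICON` contents and the `decode` function are hypothetical and exist only for this example.

```python
# Hypothetical pronunciation dictionary: phoneme tuples mapped to words.
LEXICON = {
    ("k", "æ", "t"): "cat",
    ("s", "æ", "t"): "sat",
    ("ð", "ə"): "the",
}

def decode(phonemes):
    """Greedily match the longest known phoneme sequence at each position."""
    words = []
    max_len = max(len(key) for key in LEXICON)
    i = 0
    while i < len(phonemes):
        for size in range(min(max_len, len(phonemes) - i), 0, -1):
            word = LEXICON.get(tuple(phonemes[i:i + size]))
            if word:
                words.append(word)
                i += size
                break
        else:
            # No dictionary entry starts here: mark it and move on.
            words.append("<unk>")
            i += 1
    return " ".join(words)

print(decode(["ð", "ə", "k", "æ", "t", "s", "æ", "t"]))  # the cat sat
```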

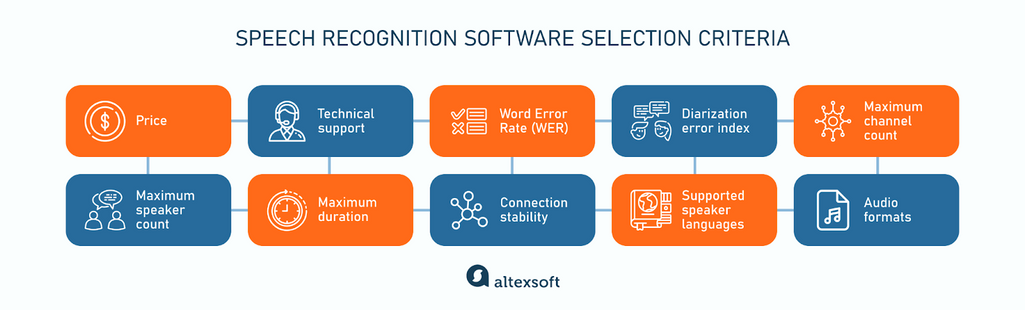

How to choose speech recognition software

As speech recognition software continues to revolutionize industries worldwide, organizations must navigate various options to find the best fit for their requirements. This section explores ten selection criteria that decision-makers should consider.

Speech recognition software selection criteria

Price is one of the most critical considerations, directly impacting the solution's cost-effectiveness. Businesses must invest in software that aligns with their budget while meeting their speech recognition needs. Pricing models may include subscription-based plans, pay-per-use, or one-time licensing fees.

Customer support (service level agreements [SLAs] and responsive technical support) should align with the organization's operational needs and expectations for ongoing assistance and troubleshooting. For example, it is typically harder to contact the support teams of more prominent vendors like AWS and Google, so keep that in mind. Some companies offer customer support as an additional service and charge for it separately.

Word Error Rate (WER) evaluates the accuracy of ASR systems by comparing the transcribed text to a reference transcript of what was actually said. It adds up the substituted, deleted, and inserted words and divides the total by the number of words in the reference, so a lower average WER indicates higher accuracy. This factor was of paramount importance for our client since transcripts from their meetings are used for reports, references, and other documentation.
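For readers who want to measure WER themselves, here is a minimal sketch based on word-level edit distance. It assumes simple whitespace tokenization and lowercasing; real evaluations usually also normalize punctuation and numbers. The function name and the sample sentences are illustrative.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the processed part of ref and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)

# One substitution ("the" -> "a") and one deletion ("report") over 5 reference words.
print(word_error_rate("please approve the budget report", "please approve a budget"))  # 0.4
```

Because insertions count as errors, WER can exceed 100% when the hypothesis is much longer than the reference.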

Diarization is the ability to distinguish between different speakers in an audio recording. The Diarization Error Rate (DER) measures the share of speech time that is attributed to the wrong speaker, missed, or falsely detected as speech. A lower DER means that transcriptions more reliably attribute spoken words to the right speakers, which is essential for understanding context.
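The sketch below shows one simplified way to compute a diarization error score from frame-level labels. It assumes the audio has been cut into equal-length frames, that the system's speaker labels have already been mapped to the reference speakers, and that nobody talks over anyone else. Standard DER scoring also searches for the best speaker mapping and handles overlapping speech, which this example leaves out.

```python
def diarization_error_rate(reference, hypothesis):
    """Frame-level error share: (missed + false alarm + confusion) / reference speech frames.

    Each list holds one speaker label per frame, or None for silence.
    """
    if len(reference) != len(hypothesis):
        raise ValueError("both label sequences must cover the same frames")

    missed = false_alarm = confusion = speech_frames = 0
    for ref, hyp in zip(reference, hypothesis):
        if ref is not None:
            speech_frames += 1
            if hyp is None:
                missed += 1        # speech that the system did not detect
            elif hyp != ref:
                confusion += 1     # speech assigned to the wrong speaker
        elif hyp is not None:
            false_alarm += 1       # silence labeled as speech
    if speech_frames == 0:
        raise ValueError("reference contains no speech")
    return (missed + false_alarm + confusion) / speech_frames

reference = ["A", "A", "A", "B", "B", None, "B", "B"]
hypothesis = ["A", "A", "B", "B", "B", "B", None, "B"]
# One confusion, one false alarm, and one miss over 7 reference speech frames.
print(round(diarization_error_rate(reference, hypothesis), 3))  # 0.429
```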

Maximum speaker count is the number of distinct speakers a system can identify within a given audio recording. For instance, if twenty people talk during a recording, but the ASR tool identifies only ten speakers, then the tool's maximum speaker count is ten. It is an important consideration for businesses that handle recordings with multiple speakers, such as conference calls or meetings.

It's worth noting that some ASR systems may struggle with diarization as the number of speakers increases. For example, AltexSoft observed this issue when testing the AWS speech recognition service, noting a drop in diarization accuracy when more than five speakers were present. Therefore, when evaluating ASR systems, it's essential for businesses to consider both the maximum speaker count and its impact on diarization accuracy.

Maximum channel count is the capacity to identify and transcribe speech from distinct channels simultaneously. Think of a parliament session where each speaker has a dedicated microphone. These microphones capture individual audio streams, sending them to the ASR system as separate channels. An ASR tool can process a certain number of these streams simultaneously, recognizing and transcribing speech from each of the microphones.

This metric can vary depending on the audio format. For example, MP3 files typically hold at most two channels (mono or stereo).

Maximum duration limits how long the audio can be transcribed without interruptions after the connection is established. ASR systems require reconnection after a certain duration, which can vary widely—from as short as 5 minutes to several hours.

Connection stability is the ability of an ASR system to maintain consistent performance, particularly in the face of fluctuations in the audio input. Imagine a scenario where conversations suddenly become much faster. In this situation, a robust ASR system should adapt seamlessly to the increased speed, adjusting its transcription rate accordingly.

For example, the pace might rise from one sentence per second to two. The critical observation here is how the ASR system reacts to these fluctuations. Does it continue transcribing the audio accurately, does it start making more errors, or does it fail to proceed entirely? It's about ensuring the system remains reliable and responsive in dynamic audio environments.

Supported speaker languages is an important consideration for multinational businesses or those with diverse language needs. Companies should prioritize ASR software with comprehensive language support relevant to their operations to ensure accurate transcription and seamless integration into their workflows.

Audio formats are the specific file types that encode audio data. They determine how audio is stored, compressed, and transmitted, impacting transcription accuracy and compatibility with ASR systems. For example, if your organization primarily deals with WAV or MP3 files, you'll want an ASR system that supports these formats to ensure seamless integration.

Note that you have to conduct comprehensive testing to truly understand how the system behaves in terms of the aspects described above. There may be differences between the system's advertised capabilities and actual behavior during use. The following section will explore crucial considerations during the testing process.

Testing speech recognition software

Here are several factors to pay attention to when testing ASR software.

Speech rate. The speed and flow of speech in the audio input can impact the ASR system's performance. Testing should evaluate how well the system handles varying speech rates and whether it accurately transcribes rapid and deliberate speech.

Voice diversity. In scenarios where multiple speakers are present or if there are similarities in voices, the ASR system's ability to differentiate between speakers and accurately transcribe their speech becomes critical. You should assess whether the system correctly identifies speakers and assigns their speech segments. A combination of male and female speakers with diverse speech patterns helps assess the system's ability to transcribe speech from various voices accurately.

Phrase boundaries. ASR software’s ability to identify the start and end of phrases within the audio input is crucial. Testing should evaluate whether the system effectively segments the audio into meaningful phrases, avoiding errors such as cutting off or merging words improperly.

Timestamps. ASR systems should accurately timestamp each phrase regardless of fluctuations in Internet speed. It's important to verify that the software maintains accurate time synchronization even if there are connectivity issues. Testing involves verifying that timestamps precisely reflect the timing of speech events, allowing users to track when each phrase was spoken within the audio recording.

It’s important to check the system’s capability to work with buffered audio. If the Internet connection drops out, it's crucial for the system to accumulate audio in a buffer. Once the connection is restored, the buffered audio should be sent for processing. The ASR system must correct timestamps if necessary and return the transcribed text. Understanding the connection timeout is also key, as it indicates how long the ASR service will wait before terminating the connection if there's no activity.
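The buffering behavior described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the API of any particular ASR vendor: the `BufferedAudioSender` class, the `send` callback, and the fixed chunk duration are all hypothetical. The key idea is that each chunk is stamped with its position in the recording when it is captured, so timestamps stay correct even if the chunk is delivered late.

```python
from collections import deque

class BufferedAudioSender:
    """Queues audio chunks while offline and flushes them in order on reconnect."""

    def __init__(self, send, chunk_seconds: float):
        self._send = send                # hypothetical callable: send(offset_seconds, chunk)
        self._chunk_seconds = chunk_seconds
        self._buffer = deque()
        self._next_offset = 0.0          # position of the next chunk in the recording
        self.connected = True

    def push(self, chunk: bytes) -> None:
        # Stamp the chunk with its capture offset, not with the time it gets sent.
        self._buffer.append((self._next_offset, chunk))
        self._next_offset += self._chunk_seconds
        if self.connected:
            self.flush()

    def reconnect(self) -> None:
        self.connected = True
        self.flush()

    def flush(self) -> None:
        while self._buffer:
            offset, chunk = self._buffer[0]
            self._send(offset, chunk)    # if this raises, the chunk stays buffered
            self._buffer.popleft()

sent = []
sender = BufferedAudioSender(lambda offset, chunk: sent.append((offset, chunk)), chunk_seconds=0.5)
sender.push(b"first")
sender.connected = False                 # simulate a dropped connection
sender.push(b"second")
sender.push(b"third")
sender.reconnect()                       # buffered chunks go out with their original offsets
print(sent)  # [(0.0, b'first'), (0.5, b'second'), (1.0, b'third')]
```

When testing a real service, a harness like this makes it possible to check whether the returned timestamps match the capture offsets rather than the delayed arrival times.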

Specialized terminology. When testing ASR software, it's essential to evaluate its performance using texts relevant to the client's domain. Industries like travel, healthcare, and legal have specialized terminologies and jargon, increasing the chances of transcription errors if the ASR system isn't adept at recognizing these terms.

Testing across various scenarios allows organizations to evaluate the ASR system's performance under real-world conditions, identify potential issues, and assess the system's overall capabilities.

Speech recognition software overview

When selecting an ASR system, look for capabilities that meet your specific needs and conduct thorough testing to ensure satisfactory performance.

Evaluating ASR software across multiple dimensions provides a comprehensive understanding of its capabilities. You should estimate the overall benefits a vendor offers rather than solely focusing on individual standout features. This is how AltexSoft decided Rev AI was the right choice for our client.

Before we start with the ASR software overview, let’s clarify the difference between the three main methods of speech recognition: streaming, asynchronous, and synchronous.

Streaming speech recognition allows you to stream audio and receive a transcribed text in real time.

Asynchronous recognition involves sending pre-recorded audio data to the ASR system and receiving the results some time later, once all the audio has been processed.

With synchronous recognition, you get the transcribed text in chunks as the audio data is being processed, but with certain delays between each chunk. Unlike streaming, it doesn't provide immediate real-time results, and unlike asynchronous recognition, you don't have to wait for the entire audio file to be transcribed before receiving any output.

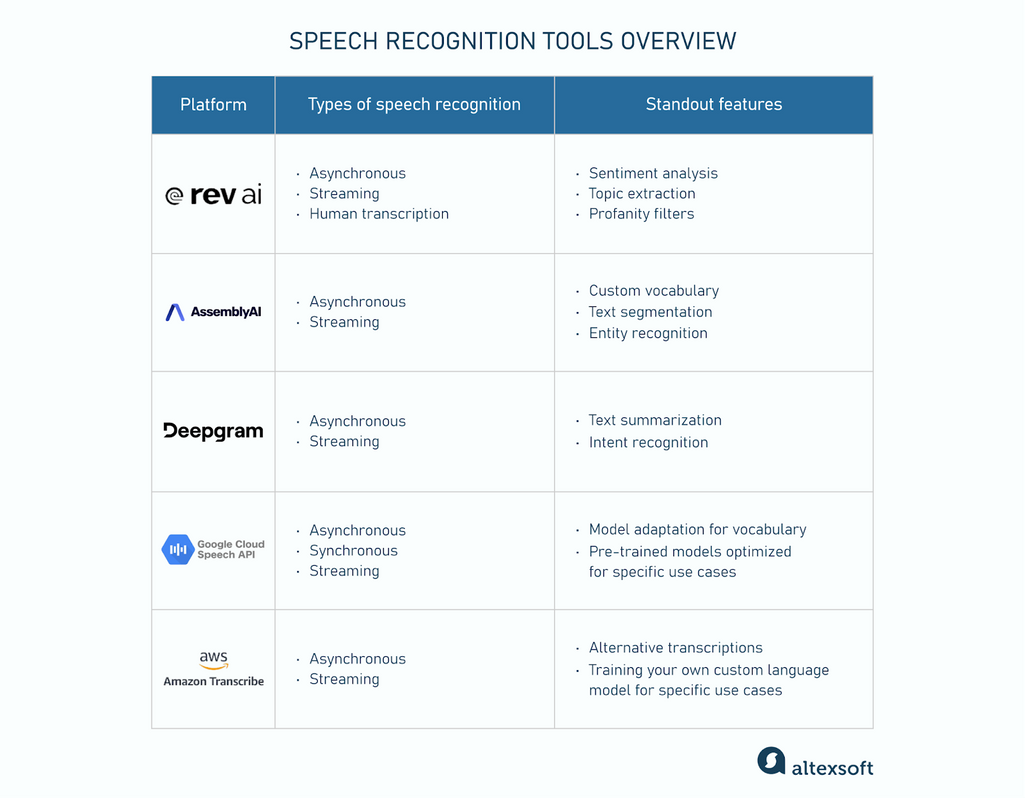

A general overview of speech recognition tools

This section will review five popular ASR systems, focusing on their standout features. Due to the complexity of pricing structures, we won't list the cost for each tool in the text, but we'll provide links to the official pricing pages.

Rev AI: Sentiment analysis, topic extraction, and profanity filters

Rev AI offers three speech-to-text solutions: asynchronous, streaming, and human transcription as an additional service. Asynchronous works in more than 58 languages, streaming is available in 9 languages, and human-created transcription has a turnaround time of approximately 24 hours and is available exclusively for English-language content.

Rev AI trained its speech models on more than three million hours of human-transcribed audio content. The tool offers the following features:

- sentiment analysis to get positive, negative, and neutral statements;

- topic extraction to identify key themes in the text; and

- a profanity filter to remove offensive words from the text.

Rev AI targets several niches, such as media and entertainment (live web or broadcast content), education (lectures, webinars, and events with real-time streaming), call centers (phone calls), and others with real-time meetings and events.

For pricing details, go to this page.

AssemblyAI: Custom vocabulary, text segmentation, and entity recognition

AssemblyAI offers asynchronous and streaming speech-to-text solutions. Their model has been trained on 12.5 million hours of multilingual audio data and supports over 99 languages. The tool has a few notable features:

- confidence score indicating the system's confidence level for each word in the transcription;

- automatically applying proper punctuation and casing; and

- custom vocabulary to incorporate specialized terminology, specify how certain words should be spelled, and choose whether to include disfluencies.

Additionally, AssemblyAI offers the Speech Understanding service, which includes features like removing sensitive data, text segmentation based on context, and entity recognition, such as the names of individuals, organizations, and other relevant subjects.

AssemblyAI has flexible pricing plans. Learn the details here.

Deepgram: Text summarization and intent recognition

Deepgram provides three solutions: Speech to Text (asynchronous and streaming), Audio Intelligence, and Text to Speech. Speech to Text includes three models:

- Nova (for those prioritizing affordability and speed),

- Whisper (with precise timestamps and high file size limits), and

- Custom (for enterprise scalability requirements).

Audio Intelligence specializes in summarization, topic detection, intent recognition, and sentiment analysis. Text to Speech converts written text into human-like voice, useful for real-time voicebots and conversational AI applications.

Deepgram operates in over 30 languages and has different prices for each solution.

Google: Model adaptation for vocabulary and pre-trained models optimized for specific use cases

Google’s Speech-to-Text feature works for asynchronous, synchronous, and streaming transcription. It uses Chirp, a speech model developed by Google Cloud that has been trained on millions of hours of audio and 28 billion sentences of text spanning over 100 languages.

The system supports 125 languages and lets users customize the software to recognize specific words or phrases more frequently than others. For example, you could bias the system towards transcribing "weather" over "whether."

Speech-to-Text allows users to select from a variety of pre-trained models optimized for different domains or use cases. For example, it offers an enhanced phone call model tailored to handle audio generated from telephony sources, such as phone calls recorded at an 8 kHz sampling rate. This model is tuned to recognize speech patterns commonly found in phone conversations, ensuring higher accuracy and better performance when dealing with such audio data.

Additionally, Speech-to-Text can transcribe speech even when the audio is distorted by background noise without the need for extra noise cancellation.

Visit this page to view the pricing table and learn what factors determine the costs.

AWS: Alternative transcriptions and training your own custom language model

Amazon Transcribe is an ASR service that supports over 100 languages and can do asynchronous and streaming transcription.

Amazon Transcribe lets users customize their outputs. It provides up to 10 alternative transcriptions for each sentence, allowing you to choose the best option. The feature is especially useful in cases where human reviewers need to verify or adjust transcriptions for accuracy.

You can build and train your own custom language model (CLM) for particular use cases. To do this, submit a corpus of text data that reflects the vocabulary and language patterns specific to your domain. This could include industry-specific terms, product names, or phrases commonly used in your organization. Users can leverage this feature to enhance speech recognition accuracy for specialized content across various domains.

Go to this page to learn the pricing details and view the monthly charges calculations.

Speech recognition software API integration

You can use ASR as a standalone tool or integrate it with your infrastructure. To choose which option is better for your business, consider the following factors.

Volume of data. If the business deals with a high volume or frequent influx of audio data that must be transcribed, integrating an ASR system into its infrastructure would be more efficient. In our case, our client processes a large volume of audio recordings from formal meetings.

Customization and integration needs. Businesses with specific customization requirements may benefit from integration, as it offers more flexibility to tailor the ASR functionality to meet the business's unique needs.

For example, due to confidentiality concerns for certain types of meetings, companies should anonymize speakers' real names in transcriptions and label them as "Speaker A" or "Speaker 1" instead of using actual names. Integration of the ASR system allows agencies to implement this customization, ensuring compliance.
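A customization like this can be quite small once the transcript is available in your own system. The sketch below assumes a hypothetical transcript structure, a list of dictionaries with `speaker` and `text` keys, and replaces each real name with a neutral label in order of first appearance. Actual ASR output formats differ by vendor.

```python
def anonymize_speakers(segments):
    """Replace real speaker names with 'Speaker N' labels, numbered by first appearance."""
    labels = {}
    anonymized = []
    for segment in segments:
        name = segment["speaker"]
        if name not in labels:
            labels[name] = f"Speaker {len(labels) + 1}"
        # Copy the segment so the original transcript is left untouched.
        anonymized.append({**segment, "speaker": labels[name]})
    return anonymized

transcript = [
    {"speaker": "Jane Doe", "text": "Let's begin."},
    {"speaker": "John Roe", "text": "I have a question."},
    {"speaker": "Jane Doe", "text": "Go ahead."},
]
for segment in anonymize_speakers(transcript):
    print(f'{segment["speaker"]}: {segment["text"]}')
```

Note that this only relabels the speaker field. Names mentioned inside the spoken text would need separate handling.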

Security and privacy considerations. If the audio data being transcribed contains sensitive or confidential information, businesses must keep the transcription process within their own infrastructure for enhanced security. Integrating an ASR system allows businesses to maintain control over data handling.

For our client, security and compliance with data protection regulations are paramount. There are legal obligations for the data associated with the meetings to be stored in the state. To comply with these requirements, we utilized a specific location cluster on AWS to ensure the data is stored within certain geographical boundaries.

Rev AI’s API integration into the client’s IT infrastructure relies on web sockets, which enable real-time, two-way communication between the client’s system and the server. The client-side architecture involves a browser-based interface with built-in microphone support. Users interact with the interface, and audio data is captured in real-time via browser APIs.

So the client sends audio data to the server in real time via a web socket connection. On the backend side, a proxy server forwards the audio data to Rev AI. The ASR system processes the audio data and sends transcriptions back to the server. Finally, the server passes the transcriptions to the client (again, through the web socket connection), completing the communication loop.
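The relay pattern described above can be simulated with a short sketch. To keep it self-contained, it replaces the real web socket connections with in-memory `asyncio` queues and uses a fake ASR coroutine instead of Rev AI, so none of the names below reflect the actual Rev AI API or our production code. It only shows how a proxy can forward audio upstream and transcripts downstream at the same time.

```python
import asyncio

async def fake_asr(audio_in: asyncio.Queue, text_out: asyncio.Queue) -> None:
    """Stand-in for the external ASR service: 'transcribes' each audio chunk."""
    while (chunk := await audio_in.get()) is not None:
        await text_out.put(f"transcript of {len(chunk)} bytes")
    await text_out.put(None)  # signal the end of the stream

async def pump(source: asyncio.Queue, destination: asyncio.Queue) -> None:
    """Forward items from one queue to another until the end marker (None)."""
    while True:
        item = await source.get()
        await destination.put(item)
        if item is None:
            break

async def proxy(client_audio, asr_audio, asr_text, client_text) -> None:
    """Relay audio to the ASR service and transcripts back to the client concurrently."""
    await asyncio.gather(pump(client_audio, asr_audio), pump(asr_text, client_text))

async def run_session(chunks):
    client_audio, asr_audio, asr_text, client_text = (asyncio.Queue() for _ in range(4))
    asr_task = asyncio.create_task(fake_asr(asr_audio, asr_text))
    proxy_task = asyncio.create_task(proxy(client_audio, asr_audio, asr_text, client_text))

    # The "browser" sends microphone chunks, then an end marker.
    for chunk in chunks:
        await client_audio.put(chunk)
    await client_audio.put(None)

    # The "browser" reads transcripts as they come back through the proxy.
    received = []
    while (text := await client_text.get()) is not None:
        received.append(text)
    await asyncio.gather(asr_task, proxy_task)
    return received

print(asyncio.run(run_session([b"ab", b"cde"])))
```

In a real deployment, each queue would be a web socket connection, and the proxy would also be the natural place to add authentication, buffering, and logging.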

AltexSoft decided to use AWS as its cloud provider for infrastructure services because the client’s DevOps team already works with AWS. This decision ensures alignment between development and operational processes. Additionally, AWS offers the ability to specify the regionality of resources, ensuring compliance with regulatory requirements.