Autonomous vehicles have been five years away for the last 15 years.

Imagine you’re driving down the highway and, as you pass the 18-wheeler on your right, you notice the driver’s seat is empty. Will you be freaked out or excited?

With the rapid technological advancements in the past few years, this reality isn’t that far away – or is it? The situation is very ambiguous. While high-tech companies are working hard to advance the era of autonomous trucks, drivers wonder if they will lose their jobs to robotic big rigs, and logistics managers look for opportunities to capitalize on the new technology.

As driverless vehicles begin to roll out on roads worldwide, let’s explore how they operate, the impact they’re poised to make on logistics and transportation, and the challenges they face in a world still adjusting to their presence.

What are self-driving trucks?

Self-driving trucks, also known as autonomous trucks or driverless trucks, are heavy goods vehicles equipped with advanced technologies that allow them to navigate and operate without human intervention.

These trucks use a combination of sensors, cameras, GPS, radar, and artificial intelligence (AI) to perceive their environment and make decisions in real time (we’ll describe these technologies in more detail in the next section).

Depending on the degree or level of automation, the original equipment manufacturers (OEMs) like Volvo or PACCAR partner with component providers and software companies to source and integrate the technologies into vehicles. For example, Daimler uses digital maps from HERE, the automated parking system from Bosch, self-driving technology from Torc, and so on.

But before we explore the technicalities, let’s take a step back and look at how it all began.

Evolution of autonomous vehicles

Though some would argue that Leonardo da Vinci was the first to design a self-propelled cart back in the 15th century, serious exploration of autonomous vehicle (AV) technology began in the 1980s and 1990s. Those early experiments focused on using cameras and sensors to help vehicles navigate and understand their environment.

The Pentagon, through its Defense Advanced Research Projects Agency (DARPA), was one of the entities supporting research into self-driving technologies. Its DARPA Challenges of 2004-2007 were an important milestone in the evolution of autonomous ground vehicles (AGVs). These competitions were held to test AV capabilities and accelerate their development.

Though no car managed to complete the planned desert route in 2004, by 2007 the technology had improved substantially. Vehicles successfully completed a 60-mile urban course, showcasing the potential of self-driving technology in complex environments.

In the 2010s, rapid progress in artificial intelligence, machine learning, and sensor technology spurred both giants like Google and various startups to develop and test autonomous trucks, adapting car automation for larger vehicles.

In the late 2010s and into the 2020s, regulatory frameworks started evolving to accommodate autonomous trucks on public roads. Retailers like Walmart have begun incorporating fully automated vehicles into their logistics networks, signaling a shift towards broader deployment. Speaking of automation levels, let’s briefly define what they are.

Automation levels

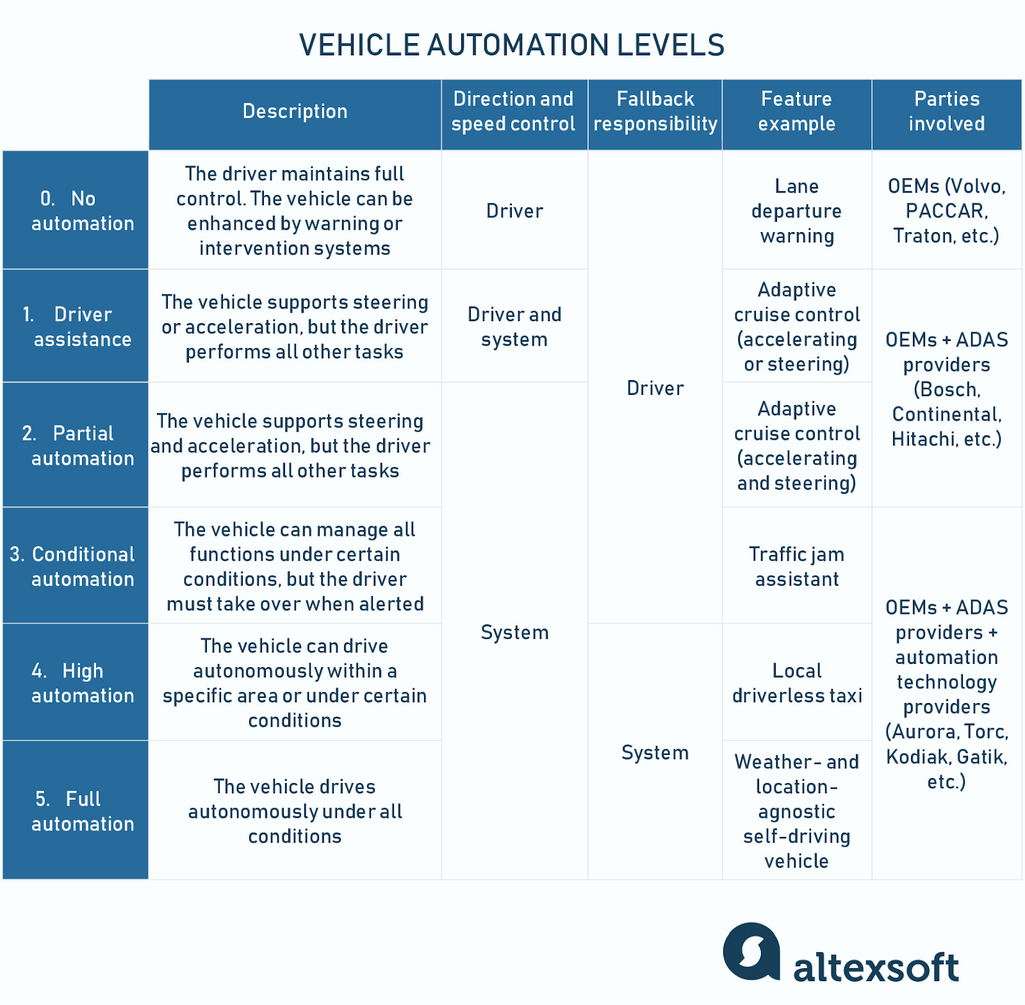

The Society of Automotive Engineers (SAE) defines the levels of automation for vehicles, including self-driving trucks. These levels range from Level 0 (no automation) to Level 5 (full automation) and describe the extent to which a vehicle can take over driving tasks from a human operator.


Level 0: No automation. At this level, the human driver controls all major systems. The vehicle can be equipped with sensors that enable the system to issue warnings and intervene, but there’s no sustained vehicle control.

Level 1: Driver assistance. Vehicles have a driver assistance system to support steering or acceleration. Adaptive cruise control is an example where the car can adjust speed, but the driver maintains steering control. Other features usually included are emergency brake assist, lane keeping, etc.

Level 2: Partial automation. Here, the vehicle must have an advanced driver assistance system (ADAS) that automates at least two functions (typically acceleration and steering), but the driver must always remain engaged with the driving task and monitor the environment.

Some well-known Level 2 automation examples are Tesla’s Autopilot and GM Cadillac's Super Cruise. In the trucking world, there’s Daimler Trucks North America (DTNA), which added partial automation features to its Freightliner Cascadia, and Mercedes-Benz, which includes the Active Drive Assist system in its Actros trucks.


While at levels 0 to 2 the OEMs mainly work with ADAS providers such as Bosch or Continental, more advanced levels require more sophisticated, AI-based technologies, so specialized tech companies are usually involved (e.g., Aurora, Kodiak, Torc, etc.).

Level 3: Conditional automation. The vehicle can manage all safety-critical functions under certain conditions, but the driver is expected to take over when alerted. In some scenarios, like traffic jams, the vehicle controls most aspects of driving without human intervention.

Level 4: High automation. Vehicles at this level can operate in self-driving mode within a specific geographic area or under certain conditions without expecting a human driver to take over. However, human input may be required outside of these conditions or areas.

Level 5: Full automation. At Level 5, the vehicle is fully autonomous and requires no human attention. It can drive in all conditions and environments and may not even include steering wheels or driver controls.
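To make the distinction between the levels easier to scan, here is a minimal Python sketch that encodes them as a lookup structure. The class and function names are hypothetical and chosen only for illustration; the two helper functions simply restate the descriptions above (the driver monitors at Levels 0 to 2 and acts as a fallback at Level 3).

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving automation levels as described in this section."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_monitor(level: SAELevel) -> bool:
    """Levels 0-2: the human stays engaged and monitors the environment."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def driver_is_fallback(level: SAELevel) -> bool:
    """Level 3: the human is expected to take over when alerted."""
    return level == SAELevel.CONDITIONAL_AUTOMATION


for level in SAELevel:
    print(level.value, level.name, driver_must_monitor(level), driver_is_fallback(level))
```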

The most advanced autonomous trucks are anticipated to handle everything from highway driving to parking and even navigating to loading docks entirely on their own. But though some robotaxi models already flirt with higher automation levels, as of today, Level 2 is the highest commercially available for both cars and trucks.

Now, let’s look at the technologies that will help make self-driving possible.

Technologies behind autonomous trucks

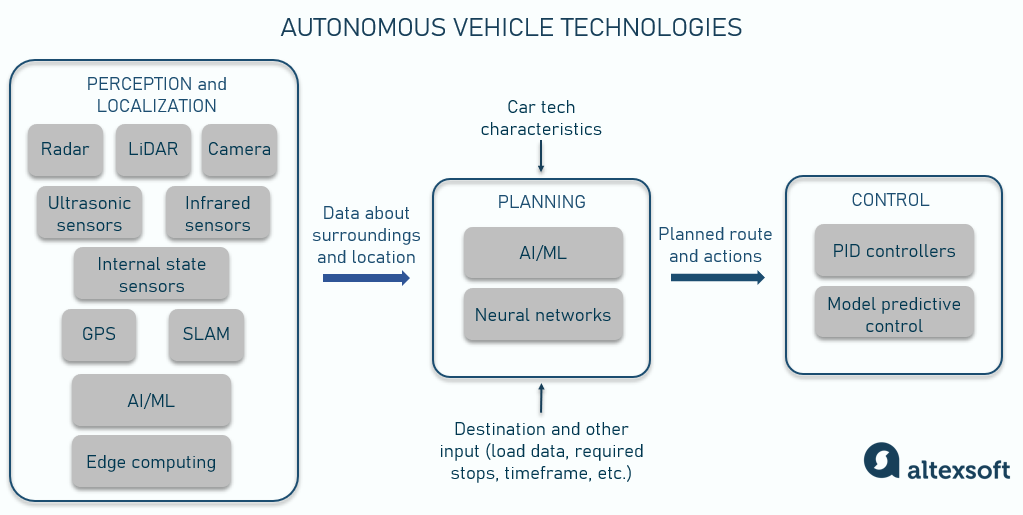

Needless to say, the tech architecture of any AV comprises an intricate blend of sophisticated hardware and software. Though there are several different approaches to AV architecture, the most common one includes four main components: perception, localization, planning, and control.

Autonomous driving technologies

Perception and localization: sensors, cameras, and GPS

First and foremost, AVs must collect real-time data from their environment to create a holistic model of the vehicle and the surrounding objects, including the road, other vehicles, signs, pedestrians, etc. So AVs are equipped with an array of sensors that continuously scan the road and monitor traffic conditions.

Cameras capture visual data about nearby objects (vehicles, pedestrians, road signs, etc.) and detect lane markings. Cameras also help estimate how far away objects are by analyzing changes between multiple images, though they aren’t as precise as LiDAR in measuring distance.

LiDAR (Light Detection and Ranging) is a more advanced technology that uses lasers to measure distances. Long- and short-range LiDAR sensors send out multiple laser beams to objects at fast speeds and then measure how long it takes for the light to bounce back. This information helps create detailed 3D maps of the surroundings.

LiDAR’s main advantages over cameras are higher accuracy and better performance in low-light conditions, though it is still affected by environmental factors such as heavy rain, fog, or dust.
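The time-of-flight idea behind LiDAR boils down to one line of arithmetic: the pulse travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below is only an illustration of that formula; the function name is hypothetical, and real sensors add calibration and noise handling that isn't shown here.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second, in vacuum


def lidar_distance_m(round_trip_time_s: float) -> float:
    """Distance to an object given the time a laser pulse took to return."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# A pulse that comes back after 1 microsecond hit something roughly 150 m away.
print(round(lidar_distance_m(1e-6), 1))  # 149.9
```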

Radar is similar to LiDAR, but instead of lasers, it uses radio waves to detect the distance and speed of surrounding objects. Radar sensors are especially good at sensing other vehicles, even in poor weather conditions or at night, but they are less precise and have a limited field of view.

Cameras, LiDAR, and radar are often used in combination, as each technology has its strengths and weaknesses.

Some other types of perception devices that enable driverless operations are ultrasonic sensors (good for short-range detection, help with parking and close proximity maneuvers) and infrared sensors (useful for night vision capabilities).

In addition, diverse internal state sensors continuously monitor the vehicle’s condition, such as wheel load, battery life, fuel amount, motor health, etc.

Read more about how sensors work together in our separate post dedicated to the Internet of Things (IoT) architecture.

Alongside this variety of sensors, so-called vehicle-to-everything (V2X) technology allows AVs to interact with the surrounding environment. This includes communication with

- other vehicles (V2V, vehicle-to-vehicle),

- infrastructure (V2I, vehicle-to-infrastructure),

- pedestrians (V2P, vehicle-to-pedestrian), and

- the network (V2N, vehicle-to-network).

Sensor fusion is the process of combining data from multiple sensors to create a comprehensive understanding of the environment. Not only must the system detect the objects around the vehicle, but it must also identify them and predict how they are likely to behave.
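As a simplified illustration of the fusion idea, the sketch below combines distance estimates from several sensors by weighting each one by how much it can be trusted (inverse-variance weighting). This is a textbook technique chosen for illustration, not necessarily what any particular AV developer uses; the function name and all sensor values are made up, and object identification and behavior prediction are out of scope here.

```python
def fuse_ranges(measurements):
    """Fuse (distance_m, variance_m2) pairs with inverse-variance weighting.

    Returns the fused distance and the variance of the fused estimate.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    # A sensor with a small variance (high precision) gets a large weight.
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, measurements)) / total_weight
    return fused, 1.0 / total_weight


# Hypothetical readings of the same vehicle ahead: LiDAR, radar, camera.
readings = [(50.0, 0.01), (50.6, 0.25), (52.0, 1.0)]
distance, variance = fuse_ranges(readings)
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f}")
```

Note that the fused variance is smaller than that of the best single sensor, which is the main reason for combining them.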

So all these sensors continuously collect massive amounts of data (up to 1TB per hour). Some of it is processed by the onboard computer for faster responses to dynamic driving environments, while a portion is sent to the cloud. Advanced AI and ML algorithms analyze incoming data, recognize patterns, and provide the output for further processes.

As for the localization aspect, to safely navigate, the vehicle must define its precise position at all times. For that, it requires a combination of several kinds of data:

- perceived sensor data about the surrounding environment,

- GPS/GNSS positional information received from satellite navigation systems, and

- high-definition maps.

The AV’s system matches positional and perceived data against the map data to determine its location. The perceived data is also used to update the map information so that maps always stay current.

However, the GPS-based localization approach fails when something blocks the satellite signal (e.g., tall buildings, canyons, dense forests, tunnels, etc.). So most AVs also rely on techniques such as SLAM (Simultaneous Localization and Mapping) to continuously update the vehicle's position relative to its surroundings based only on sensor data.
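To show why a vehicle can keep tracking its position when the satellite signal drops out, here is a deliberately simplified one-dimensional Kalman filter. It is not SLAM and not a production localization stack: it only predicts position from speed (dead reckoning) and corrects it with a GPS fix when one is available. The function name, noise values, and readings are all assumptions made for illustration.

```python
def kalman_step(position, variance, speed_mps, dt, gps_fix=None,
                process_noise=0.5, gps_noise=4.0):
    """One predict/update cycle of a 1D Kalman filter along the road."""
    # Predict: move forward using speed; uncertainty grows with every step.
    position = position + speed_mps * dt
    variance = variance + process_noise

    # Update: only possible when a GPS fix is available (not in a tunnel).
    if gps_fix is not None:
        gain = variance / (variance + gps_noise)
        position = position + gain * (gps_fix - position)
        variance = (1.0 - gain) * variance
    return position, variance


position, variance = 0.0, 1.0
# None marks seconds in which the satellite signal is blocked.
for fix in [25.3, None, None, 99.0]:
    position, variance = kalman_step(position, variance, 25.0, 1.0, fix)
    print(f"position={position:.1f} m, variance={variance:.2f}")
```

Running it shows the variance creeping up during the outage and shrinking again once a fix returns, which is the gap that sensor-based techniques such as SLAM are meant to cover.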

Planning: neural networks

Once the vehicle has a comprehensive understanding of its environment and its location within it, the planning component takes over to determine the best route to the destination. In addition to defining the course itself, it enables navigating around obstacles, obeying traffic rules, and making other decisions in real time.

This process requires sophisticated AI algorithms such as neural network models. Similar to a human brain, these models analyze past and real-time incoming data, evaluate multiple potential actions, and choose the safest and most efficient course (taking the car’s tech characteristics into account).
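The route-finding part of planning can be illustrated with a classical shortest-path search. To be clear, the sketch below uses Dijkstra's algorithm rather than the neural network models mentioned above, and it ignores obstacles and traffic rules entirely. The road graph, node names, and costs are invented for the example.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm over a dict of {node: {neighbor: cost}}."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, edge_cost in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + edge_cost, neighbor, path + [neighbor]))
    return float("inf"), []  # goal is unreachable


# Hypothetical road network; edge costs could be minutes of driving.
roads = {
    "depot": {"A": 4, "B": 2},
    "B": {"A": 1, "hub": 7},
    "A": {"hub": 3},
    "hub": {},
}
print(shortest_route(roads, "depot", "hub"))
```

In a real planner, the edge costs would reflect more than distance or time, for example safety margins or the truck's own technical characteristics.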

Control: PID and other control systems

After the system determines the required actions, the control module physically executes them. It adjusts the vehicle's steering, acceleration, and braking to follow the route safely and smoothly.

Technologies like PID controllers (Proportional, Integral, Derivative), model predictive control, and other advanced control algorithms ensure the vehicle adheres to the planned path and reacts to dynamic changes in the environment.
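For readers curious what a PID controller looks like in practice, here is a minimal sketch that holds a target speed. The class, the gains, and the toy vehicle model (speed changes in direct proportion to the command, with no drag or actuator limits) are all assumptions chosen to keep the example short, so the numbers aren't realistic for an actual truck.

```python
class PIDController:
    """Textbook PID: output = Kp*error + Ki*integral(error) + Kd*d(error)/dt."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self._integral = 0.0
        self._prev_error = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self._integral += error * dt
        # No derivative on the first call because there is no previous error yet.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        return self.kp * error + self.ki * self._integral + self.kd * derivative


def simulate_cruise(target_mps: float = 25.0, steps: int = 600, dt: float = 0.1) -> float:
    """Drive a toy vehicle model toward the target speed and return the final speed."""
    pid = PIDController(kp=0.5, ki=0.05, kd=0.05)
    speed = 0.0
    for _ in range(steps):
        acceleration = pid.update(target_mps, speed, dt)
        speed += acceleration * dt  # toy model: command acts directly as acceleration
    return speed


print(f"speed after 60 s: {simulate_cruise():.2f} m/s")
```

The proportional term reacts to the current error, the integral term removes any lingering offset, and the derivative term damps sudden changes. Model predictive control goes further by optimizing over a predicted future trajectory, which is beyond this sketch.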

Together, these four components integrate to form the core of an autonomous vehicle's architecture, enabling it to perform complex navigational tasks that would normally require human input. Each component is underpinned by layers of redundancy to enhance safety and reliability, ensuring that the vehicle can operate even if one component encounters issues.

There is one more tech component worth mentioning. Simulation and digital twin technology don’t directly impact the driving process, but they are crucial for teaching, training, and perfecting AV systems.

AV training: simulation and digital twins

Simulation helps test various scenarios and train the AV decision-making system to choose the best and safest course of action. For example, Aurora, one of the major autonomous truck developers, made the Aurora Driver perform 2.27 million unprotected left turns in simulation before even attempting one in the real world.

A digital twin is a more sophisticated approach that incorporates real-time data and can simulate multiple scenarios dynamically. In its essence, a digital twin is a virtual replica of a physical vehicle, including its systems and dynamics, which mirrors the real-world conditions.

Digital twins help monitor, analyze, and optimize an AV’s performance and maintenance. In the development phases, they help refine vehicle subsystems by comparing the virtual model's performance with actual field data. They can also be used for operational purposes, such as predicting system failures or optimizing energy consumption based on real-time data fed back from the vehicle’s sensors.

For example, Tesla creates a digital twin for every car to monitor its performance, predict maintenance needs, offer software updates, and so on.

Now that you understand how autonomous vehicles work, let’s discuss their potential impact on logistics and transportation. What are the benefits of driverless trucks – and the challenges they face as they enter the real world? And what should you – as a logistics or supply chain manager – expect from their adoption?

The impact of autonomous trucks on logistics: key benefits and adoption challenges

The adoption of autonomous trucks raises a range of concerns, primarily revolving around safety, job displacement, and regulatory issues. However, their development is also expected to deliver significant benefits. Let’s evaluate both sides.

Safety and reliability

According to FMCSA’s annual Large Truck and Bus Crash Facts (LTBCF) report, around half a million large truck crashes occur every year. Another FMCSA study reveals that driver-related reasons (such as inattentiveness or poor performance) account for 87 percent of truck crashes. Overall, driver distraction is a major risk factor.

Implementing autonomous systems can’t yet mitigate risks related to bad weather, flat tires, or equipment failure. But it can potentially help avoid accidents caused by driver fatigue and human error.

Multiple reports claim that autonomous vehicles are safer than human-driven ones. For example, Waymo states that AVs have an 85 percent lower crash rate involving injuries, while Cruise’s data showed their vehicles were involved in 53 percent fewer collisions than human-driven ones.

An independent study of collision types between automated and conventional vehicles also suggests that mitigating the human error factor leads to fewer crashes of most types:

AVs are 43 percent more likely to be involved in rear-end crashes. Further, AVs are 16 percent and 27 percent less likely to be involved in sideswipe/broadside and other types of collisions (head-on, hitting an object, etc.), respectively, when compared to conventional vehicles.

AVs don’t get tired, distracted, or impaired by substances both legal and illegal. Their sensors can perceive farther than human eyes, and the system can react faster than human brains.


While AVs show great results in controlled environments, one of the main concerns with driverless trucks is ensuring they can operate safely under various road conditions. Questions persist about their ability to handle unpredictable scenarios, such as severe weather or unexpected road obstructions. Additionally, the technology's reliability, including sensor and software performance, is questioned.

As of today, driverless vehicles aren’t perfect, but they are persistently trained to handle all sorts of road situations. All automakers put safety as their main priority. They continuously work on perfecting their systems so that all concerns are addressed before driverless trucks hit the road. For example, Aurora introduced a sensor cleaning solution to enable reliable operation in varying weather conditions and published details on how the truck is expected to behave in emergency situations.

Job displacement

Another widespread concern is the possibility of significant job losses in the trucking industry due to automation. Truck driving is a major source of employment in many regions, and the shift toward autonomous trucks threatens these jobs, potentially leading to economic distress and resistance from labor unions and workers.

However, since the industry experiences a massive shortage of drivers (in 2023, IRU reported over 3 million positions unfilled across 36 countries), autonomous trucks are expected to solve the existing problem rather than create a new one.

We see automated driving complementing people driving. And my expectation is that if you are a truck driver today, you will be able to retire a truck driver.

Plus, some studies refer to the high turnover rates in the trucking industry and suggest that even though the labor market will be affected as AVs get broad adoption, it will soon adjust to new job requirements (e.g., shifting from long-haul routes to local deliveries). Moreover, the development of AVs is expected to create additional jobs as specialists are needed to test, deploy, and maintain AVs.

Regulatory and legal framework

Regulations change every year, so stakeholders must monitor them continually to be aware of the latest updates. Currently, there is a lack of comprehensive regulatory frameworks governing the deployment and operation of autonomous trucks. Issues such as liability in the event of an accident, privacy concerns related to data collection, insurance-related questions, and cross-border regulatory inconsistencies need to be addressed.

In the US, autonomous vehicle operations are not regulated nationally. They are regulated state by state, and the laws vary. There are states that are conducting research on how to formulate regulations, and some states don’t have any laws on self-driving vehicles.

Efficiency and cost reduction

While long-haul drivers must comply with Hours of Service regulations and take time to rest, driverless trucks can operate 24/7 without the need for breaks (except for refueling, loading, and maintenance). So instead of the 500-600 miles a human driver covers on an average day, a driverless truck can complete a 1,200-mile route in one day. Not only can this reduce delivery times and increase the efficiency of transport operations, it also affects the bottom line of logistics companies.
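The arithmetic behind this comparison can be sketched in a few lines. The example assumes an average speed of 55 mph (our assumption) and uses the US Hours of Service limits of 11 driving hours followed by a 10-hour off-duty period for a solo driver. It ignores the mandatory 30-minute break, fueling, loading, and traffic, so treat the output as a rough estimate rather than a schedule.

```python
import math


def human_trip_hours(miles, avg_mph=55.0, max_drive_h=11.0, rest_h=10.0):
    """Elapsed hours for a solo driver, including mandatory off-duty periods."""
    drive_h = miles / avg_mph
    # One rest period is needed between each pair of consecutive driving shifts.
    rests = max(0, math.ceil(drive_h / max_drive_h) - 1)
    return drive_h + rests * rest_h


def driverless_trip_hours(miles, avg_mph=55.0):
    """Elapsed hours for a truck that doesn't need rest breaks."""
    return miles / avg_mph


route_miles = 1200
print(f"human driver: {human_trip_hours(route_miles):.1f} h")
print(f"driverless:   {driverless_trip_hours(route_miles):.1f} h")
```

Under these assumptions, one 11-hour shift covers about 605 miles, which is in line with the daily average mentioned above.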

Self-driving technologies are also expected to reduce labor costs associated with drivers – and those account for around 40 percent of operational expenses.

In addition, optimized driving practices can decrease fuel costs and cause less wear and tear on the truck. Research by the University of California San Diego and the AV developer TuSimple claims that autonomous trucks are 10 percent more efficient than the same models operated manually.

Lower fuel consumption can also contribute to a decrease in greenhouse gas emissions (though this reduction might be minimized by the increased share of truck freight transportation due to a potential shift from rail).

So even though the cost of autonomous trucks is still unknown, there are research papers (e.g., Cost-Effectiveness of Introducing Autonomous Trucks: From the Perspective of the Total Cost of Operation in Logistics) that evaluate various implementation scenarios and suggest AVs' cost-efficiency.

Public acceptance and other concerns

Below are several other controversies around driverless trucks that are worth mentioning.

Public perception. Public skepticism about the safety and reliability of autonomous trucks can hinder their adoption. Autonomous truck crashes in the news (like the ones with Waymo or TuSimple) generate criticism and mistrust.

On the other hand, improved traffic flow might be a potential benefit of AVs as they can communicate with each other and road infrastructure to maintain optimal speed and spacing, reducing traffic congestion. This idea might reassure people tired of the constant traffic chaos.

So it’s crucial to show all the aspects of new technology to the public and nurture a positive attitude through transparent testing results, safety records, and demonstrable benefits.

Ethical implications. Autonomous trucks raise ethical questions, such as decision-making in critical situations where human life may be at risk. The trolley problem is age-old, and it’s not easy for people to make a decision – so how can we teach a robot? Some suggest training AVs to make an impartial decision that causes the least impact, with no discrimination based on age, gender, or other parameters. But of course, there’s still no universal solution.

Data privacy and cybersecurity. AVs exchange massive amounts of data over the Internet, so there’s a possibility of hacking or unauthorized access. Cyber threats include remote control of the vehicle, stealing sensitive information (driver/passenger/load data), etc. Ensuring the security of data collected and shared is paramount.

Infrastructure and investment. Significant investment is needed to develop the necessary infrastructure to support autonomous trucks, including communication systems, road modifications, fueling stations, etc. The high initial costs and need for widespread infrastructural changes pose considerable challenges.

Addressing these challenges requires collaborative efforts among technology developers, regulators, and stakeholders in the trucking industry to create a balanced approach that maximizes benefits while mitigating risks.

Despite the various concerns around autonomous vehicles, auto visionaries are heavily investing in their development, counting on significant returns. So let’s examine the current state of the trucking industry in relation to driverless operations.

Driverless trucks in logistics: Current real-world implementations

A number of companies have begun extensively testing self-driving trucks for cargo delivery. Some of the biggest names in the industry today are Aurora, Gatik, Torc, Applied Intuition, Waabi, Plus.ai, TuSimple, and Kodiak. Let’s see where they are at.

Aurora Innovation, one of the most ambitious autonomous technology developers, plans to launch fully driverless operations (Level 4-5) in Texas by the end of 2024. Its autonomous trucks have already been hauling freight for FedEx, Uber Freight, Werner, Schneider, Hirschbach, and more, but with a human behind the wheel.

As it prepares for a full-scale commercial launch, Aurora reveals that its autonomous system, Aurora Driver, will integrate with a customer’s transportation management system to schedule loads, share tracking data, and manage other delivery aspects.

Gatik became the first company worldwide to operate daily commercial deliveries without a human in the driver’s seat (Level 4). In 2021, it started moving freight for Walmart on short-haul routes, and in 2022, it partnered with Loblaw, a Canadian supermarket chain. Today, it also works with big names such as Tyson Foods, Kroger, Pitney Bowes, and so on.

Gatik aims to fill the “middle mile” niche – routes of 20-30 miles between distribution hubs and retail locations. While its autonomous trucks still run with a safety driver aboard, the company plans to deploy completely driverless operations at a commercial scale in late 2024-2025.

Kodiak Robotics focuses on long-haul deliveries and, besides carrying freight for commercial customers like IKEA or Martin Brower, it works with the US Department of Defense to develop AGVs for the military.

Other companies in the US and worldwide are at a similar stage, testing their autonomous trucks on highways with a safety driver onboard – and planning mass production and operation scaling in the near future.

Some innovators even design trucks with no driver cabin. Source: Einride

However, despite many automakers’ optimism and anticipation of soon-to-happen changes, several companies that had been working on developing their autonomous trucks for years (e.g., Waymo, Embark, and TuSimple) shut down their projects in 2023-2024. The reasons vary, but it’s clear that the road to driverless truck adoption isn’t as easy as it might seem.

Future prospects of driverless trucks

Automation penetrates all industries, and transportation is no exception. Airplanes fly on autopilot, driverless trains operate in the metro systems of many cities, robotaxis drive people around, and self-steering ships keep their course just as well as humans do. The use of AVs in agriculture, mining, defense, manufacturing, and other sectors is being studied, and it’s just a matter of time before smart systems replace people in heavy, dangerous jobs.

Intelligent trucks with a certain level of autonomy are already on the roads, with tech advancements making them smarter and safer every day. However, the adoption of fully driverless rigs will most likely be gradual to build up public trust, improve technology, and assess cost-effectiveness.

Also, they would probably be allowed at first only on fixed hub-to-hub routes via highways and/or solely in platooning mode. For example, Deloitte created a model that suggests that driverless truck implementation will start from highway lanes in the US Sun Belt states – where driving conditions are most favorable – and progressively expand to northern states and smaller roads.

Other early use cases would include implementing autonomous yard hoppers or terminal drayage trucks that operate in closed, controlled environments.

As technology continues to evolve, we can anticipate a future where autonomous trucks seamlessly integrate into our transportation networks. And if – or when – the adoption of commercial self-driving trucks becomes widespread, it will most likely lead to a rethinking of logistics strategies, warehouse locations, and the overall structure of supply chains. And that’s what you might want to start thinking about today.

Maria is a curious researcher, passionate about discovering how technologies change the world. She started her career in logistics but has dedicated the last five years to exploring travel tech, large travel businesses, and product management best practices.
