“AI is technology’s most important priority, and health care is its most urgent application,” said Microsoft CEO Satya Nadella, announcing the company’s new acquisition. Nuance, acquired for $19.7 billion (Microsoft’s biggest purchase since LinkedIn), provides niche AI products for clinical voice transcription that are used in 77 percent of US hospitals. Its deep learning natural language processing technology is considered best in class for relieving the clinical documentation burden that drives clinician burnout, one of the main problems in healthcare technology.

Microsoft’s move says a lot about the company’s (and the healthcare industry’s) priorities. AI offers many life-saving opportunities, and healthcare organizations are prepared to embrace them. In the US, EHR adoption in clinics is close to 100 percent, and strict interoperability policies are in place. Ongoing research is set to fill the remaining gaps. And since natural language processing is one of the fastest-growing AI fields in medicine, we wanted to talk about available applications, emerging trends, and ways to prepare for NLP adoption.

What is Natural Language Processing?

Natural language processing (NLP) is a branch of AI that uses linguistics, statistics, and machine learning to give computers the ability to understand human language, both spoken and written. NLP-powered systems can derive meaning from what’s said or written, with all the complexities and nuances of natural narrative text. This allows machines to extract value even from unstructured data.

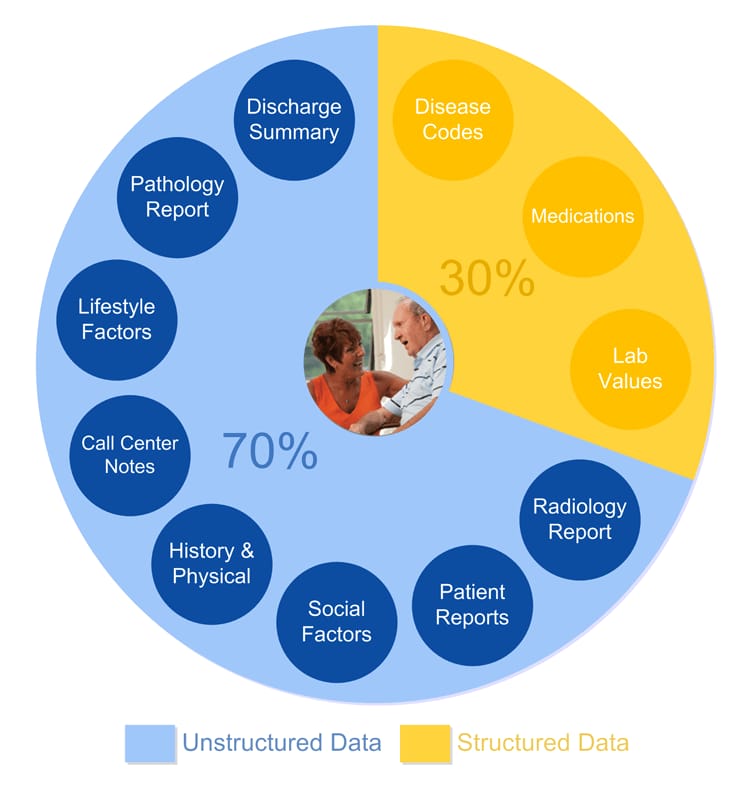

Healthcare organizations generate a lot of text data.

Some of it is structured, or organized into specific EHR fields. For example, a patient’s name, age, and gender, their lab values, or financial information are stored in a database according to a predefined schema. This structure allows physicians and other software systems to easily locate, share, and analyze the data; in other words, to make use of it.

But a lot of data (by various estimates, 70 to 80 percent of all clinical data) remains unstructured, kept in text reports, clinical notes, observations, and other narrative text. Unstructured data is unavoidable, yet extremely valuable. Hospital staff can transform it into structured data manually, but that’s rarely a priority in a medical setting. So whenever physicians need information from text documents, they have to dig through stacks of them by hand. This adds to an already heavy administrative load and, in an emergency, can lead to setbacks and delays in care.

The many healthcare factors hidden in unstructured data Source: Linguamatics

Most modern NLP applications use state-of-the-art deep learning methods. You can read more about NLP types and approaches in our dedicated article. What you need to know here is that deep learning models (deep neural networks) can understand and analyze data with minimal preprocessing, and they can be trained to perform a range of NLP tasks on their own. These tasks include:

- Text classification -- categorizing unstructured data. For example, organizing patient application forms by urgency or pinpointing fraudulent claims.

- Information extraction -- retrieving valuable information from unstructured data. Say, the system can tag data from patient history, discharge summary, or call center reports and then structure them in an EHR according to a schema.

- Language modeling -- understanding spoken text and producing natural-sounding text. The software can accurately transcribe physician notes and then summarize them or further classify and extract data.
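To make information extraction concrete, here is a minimal rule-based sketch in Python. It is a stand-in for the trained deep learning models described above, not a picture of how those models work internally: the regular expressions, the `VisitRecord` schema, and its field names are hypothetical, chosen only to show free text being mapped onto fixed EHR-style fields.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VisitRecord:
    """Hypothetical target schema for a few structured EHR fields."""
    blood_pressure: Optional[str] = None
    heart_rate: Optional[int] = None
    medications: list[str] = field(default_factory=list)


# Hypothetical patterns; real clinical notes vary far more than this.
BP_PATTERN = re.compile(r"\bBP\s*:?\s*(\d{2,3}/\d{2,3})", re.IGNORECASE)
HR_PATTERN = re.compile(r"\b(?:HR|heart rate)\s*:?\s*(\d{2,3})", re.IGNORECASE)
MED_PATTERN = re.compile(r"\b(?:started on|continue|takes)\s+([a-z]+)", re.IGNORECASE)


def extract_fields(note: str) -> VisitRecord:
    """Map a free-text note onto the structured VisitRecord schema."""
    record = VisitRecord()
    if (m := BP_PATTERN.search(note)):
        record.blood_pressure = m.group(1)
    if (m := HR_PATTERN.search(note)):
        record.heart_rate = int(m.group(1))
    # Every medication mentioned after a cue phrase, in order of appearance.
    record.medications = [m.group(1).lower() for m in MED_PATTERN.finditer(note)]
    return record
```

For a note like "BP 142/90, HR 78. Started on lisinopril; continue metformin." this fills in the blood pressure, the heart rate, and both medications. Hand-written patterns like these break quickly on real clinical text, which is the gap trained models are meant to fill.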

How can NLP benefit healthcare organizations?

Natural language processing offers ways to tackle several problems that physicians, patients, and clinics face.

Alleviate clinician burnout. Physician productivity and motivation suffer from the glut of repetitive administrative tasks that force doctors to spend extra hours at the computer instead of with patients. This problem even has a name: the EHR burden. NLP offers several solutions to help doctors, ranging from speech-to-text transcription to simplified clinical documentation management.

Streamline administrative processes. Administrative tasks such as prior authorization with a patient’s health plan and claims processing contribute to the burnout mentioned above and to high billing and insurance-related (BIR) costs. By recent estimates, BIR expenses for healthcare providers range from $20 for a primary care visit to $215 for an inpatient surgical procedure. NLP-powered systems can automate many steps of claim filing and reduce turnaround time.

Enhance clinical decision support. Physicians have used software to make informed care decisions for years. Typically installed as part of an EHR, clinical decision support systems (CDSSs) provide helpful prompts on drug selection, diagnostics, and other actions by automatically checking knowledge bases against patient records. In some cases, using a CDSS is even mandatory: for example, when ordering certain expensive tests, clinicians must consult a CDSS, or the service provider won’t be reimbursed. However useful, CDSSs are mostly limited to processing structured data. NLP can feed a CDSS far more information from sources it couldn’t use otherwise and power predictive analytics.

Improve patient interaction and engagement. Patients’ engagement in their own care is one of the key factors in successful health outcomes, so healthcare providers must actively encourage patients to educate themselves, take part in decision-making, and reach out to their physicians when needed. NLP has many applications here: communicating with patients (via automated calls and chatbots), improving their health comprehension (by estimating their health literacy profiles and replacing medical jargon with plain language), and offering simple ways to share their health information, for example, through voice messages.
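Replacing jargon is easier to manage when you can check the result. A widely used, if rough, readability measure is the Flesch-Kincaid grade level, which estimates the US school grade needed to understand a text: 0.39 × (words / sentences) + 11.8 × (syllables / words) − 15.59. The Python sketch below scores patient-facing text with it. It measures the text, not the patient’s health literacy, and the vowel-group syllable counter is our own crude heuristic (dictionary-based counters are more accurate).

```python
import re


def count_syllables(word: str) -> int:
    """Crude heuristic: count groups of consecutive vowels, minimum one."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent "e" (as in "have") usually does not add a syllable.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level; returns 0.0 for empty text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)
```

With this heuristic, "The patient presents with hypertension and hyperlipidemia." scores around grade 16, while "You have high blood pressure and high cholesterol." scores around grade 5, which is the kind of drop jargon replacement aims for.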

Let’s look at use cases ready for adoption and list some commercially available, HIPAA-compliant tools.

Medical transcription

Transcription of recorded notes is nothing new in the medical field. For decades, stenographers, nurses, and other healthcare staff transcribed voice-recorded reports dictated by physicians, edited them, and stored them in healthcare records, first on paper, then in digital format.

Today, the demand for human transcriptionists is dropping as physicians switch to dictation software. Such tools are generally good at capturing what is said, but they have their limitations:

- The dictated text has to be reviewed and edited, especially if the tool lacks a large database of medical terms and is prone to errors.

- Physicians have to record the summaries of patient visits, rather than the whole conversation, thus doing extra mental work.

- This is still unstructured data, so someone must extract valuable information from the recordings.

AI advancements and promised benefits

These shortcomings present a great opportunity for NLP. AI-powered speech recognition software replaces many documentation tasks with an automated workflow. It listens to the natural conversation between patient and doctor, picks out only the medically relevant information, summarizes it into notes, and sends it to the appropriate parts of an EHR. The algorithms can also adapt to differences in accents and pronunciation.
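The workflow described above (listen, keep what is clinically relevant, file it in the right part of the record) can be sketched as a small pipeline. This toy Python version assumes speaker-labeled transcript segments are already available, and it uses a hypothetical keyword table to sort them into SOAP-note sections; commercial tools use trained models for every one of these steps.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str  # e.g. "doctor" or "patient", assumed to come from speaker diarization
    text: str


# Hypothetical cue phrases for each SOAP section, checked in this order.
SECTION_CUES = {
    "assessment": ("diagnosis", "likely", "consistent with"),
    "plan": ("prescribe", "start", "follow up", "order"),
    "objective": ("blood pressure", "temperature", "exam shows"),
    "subjective": ("i feel", "i have", "hurts", "since"),
}


def route_segments(segments: list[Segment]) -> dict[str, list[str]]:
    """Drop small talk and file each relevant segment under one SOAP section."""
    note: dict[str, list[str]] = {section: [] for section in SECTION_CUES}
    for seg in segments:
        lowered = seg.text.lower()
        for section, cues in SECTION_CUES.items():
            if any(cue in lowered for cue in cues):
                note[section].append(f"{seg.speaker}: {seg.text}")
                break  # the first matching section wins
        # Segments that match no cue (greetings, small talk) are discarded.
    return note
```

Greetings match no cue and are dropped, which mirrors the "only medically relevant information" step. Plain substring matching is deliberately naive here: the cue "order" would also fire on "disorder", one reason real products learn these decisions instead.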

According to reports from numerous clinics, doctors using AI-based tools noticed better and more complete documentation, improved workflows, and even cost savings on medical transcription.

Available solutions

Nuance Dragon. Dragon by Nuance is a leading transcription tool for clinics that promises to save 2 hours per clinician per shift and reduce documentation time by 45 percent. Its main product is Dragon Medical One, a cloud-based system compatible with all leading EHRs that starts listening on a voice command and provides benchmarking insights. Physicians can use their phones as microphones, turning any workstation into a listening device.

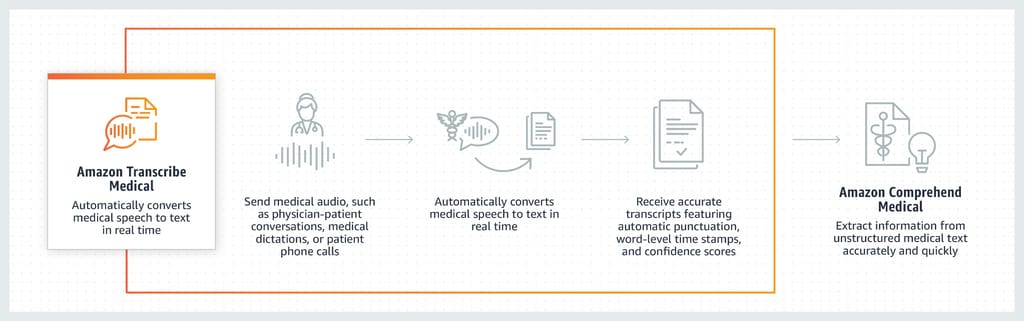

Amazon Transcribe Medical. Tech giants such as Google, Amazon, Microsoft, and IBM offer platforms for building your own speech recognition ML solutions. The first HIPAA-eligible service of this kind was Amazon Transcribe Medical, which provides an API for building custom speech-to-text solutions. The transcribed text can then be sent to Amazon Comprehend Medical, which extracts valuable data that can be routed to the appropriate sections of an EHR.
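For the build-your-own route, the two Amazon services chain together roughly as sketched below with the `boto3` SDK for Python. `start_medical_transcription_job` and `detect_entities_v2` are real boto3 client methods, but the job name, S3 locations, and the choice of `PRIMARYCARE`/`CONVERSATION` are placeholder assumptions. Fetching the finished transcript from the output bucket is omitted, and the AWS calls need credentials and a properly configured HIPAA-eligible account.

```python
from collections import defaultdict


def transcription_job_request(job_name: str, audio_uri: str, output_bucket: str) -> dict:
    """Keyword arguments for Transcribe Medical's start_medical_transcription_job."""
    return {
        "MedicalTranscriptionJobName": job_name,
        "LanguageCode": "en-US",  # Transcribe Medical works with US English
        "Media": {"MediaFileUri": audio_uri},
        "OutputBucketName": output_bucket,
        "Specialty": "PRIMARYCARE",
        "Type": "CONVERSATION",  # doctor-patient dialogue rather than DICTATION
    }


def group_entities(response: dict, min_score: float = 0.5) -> dict[str, list[str]]:
    """Group Comprehend Medical entities by category, dropping low-confidence hits."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for entity in response.get("Entities", []):
        if entity["Score"] >= min_score:
            grouped[entity["Category"]].append(entity["Text"])
    return dict(grouped)


def start_transcription(job_name: str, audio_uri: str, output_bucket: str) -> dict:
    """Kick off an asynchronous transcription job (requires AWS credentials)."""
    import boto3  # imported lazily so the pure helpers above work without boto3

    client = boto3.client("transcribe")
    return client.start_medical_transcription_job(
        **transcription_job_request(job_name, audio_uri, output_bucket)
    )


def extract_entities(transcript_text: str) -> dict[str, list[str]]:
    """Send already-transcribed text to Comprehend Medical (requires AWS credentials)."""
    import boto3

    client = boto3.client("comprehendmedical")
    return group_entities(client.detect_entities_v2(Text=transcript_text))
```

Keeping request building and response parsing in plain functions makes them easy to test without touching AWS; the grouped categories (for example, `MEDICATION` or `MEDICAL_CONDITION`) are what you would then map onto EHR fields.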

Transcribing process by Amazon

DeepScribe. Offering more sophisticated features than Dragon, DeepScribe works in the background rather than waiting for a wake word and can distinguish between speakers, which makes it a great choice for recording doctor-patient sessions. It integrates with a limited number of EHRs but has its own telemedicine platform.

Computer-assisted coding

Medical coding is the process of extracting billable information from a medical record and translating it into the standardized codes used for medical billing. Traditionally, this was handled by a professional medical coder, who had to know enough about the procedure or at least be able to consult the provider who performed it. Poor communication or unfamiliarity with regularly updated code sets leads to errors, and errors mean the provider doesn’t get paid.

With ICD-11, the forthcoming update to the medical classification codes, taking effect in January 2022, many organizations are looking to automate the adoption of new codes. That’s where computer-assisted coding steps in.

AI advancements and promised benefits

Computer-assisted coding (CAC) software can be either rule-based or AI-based. Rule-based systems work like keyword search tools with predefined parameters, so expert coders need to write new rules whenever the code set is updated (for example, the ICD-10-CM update that goes live in October 2021 comes with 490 new codes) and account for countless language patterns.
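A rule-based CAC engine can be as simple as a phrase-to-code lookup with a few guard rules, which also shows why it needs constant upkeep: every new code or phrasing means another hand-written entry. The Python rule table below is a hypothetical, tiny subset. E11.9, I10, and R07.9 are real ICD-10-CM codes, but production systems hold thousands of rules and far more careful negation handling than this sketch.

```python
import re

# Hypothetical rule table: trigger phrase -> ICD-10-CM code.
RULES = {
    "type 2 diabetes": "E11.9",  # Type 2 diabetes mellitus without complications
    "hypertension": "I10",       # Essential (primary) hypertension
    "chest pain": "R07.9",       # Chest pain, unspecified
}
NEGATIONS = ("no ", "denies ", "negative for ")


def code_note(note: str) -> list[tuple[str, str]]:
    """Return (phrase, code) pairs for rule matches that are not negated."""
    results = []
    # Work sentence by sentence so a negation only affects its own sentence.
    for sentence in re.split(r"[.;]\s*", note.lower()):
        for phrase, code in RULES.items():
            idx = sentence.find(phrase)
            if idx == -1:
                continue
            # Crude: any negation cue earlier in the sentence cancels the match.
            if any(neg in sentence[:idx] for neg in NEGATIONS):
                continue  # e.g. "denies chest pain"
            results.append((phrase, code))
    return results
```

For "History of hypertension and type 2 diabetes. Denies chest pain." this returns E11.9 and I10 but skips R07.9. A note saying "no fever but chest pain" would wrongly lose the chest pain code, the kind of language pattern that keeps rule writers busy.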

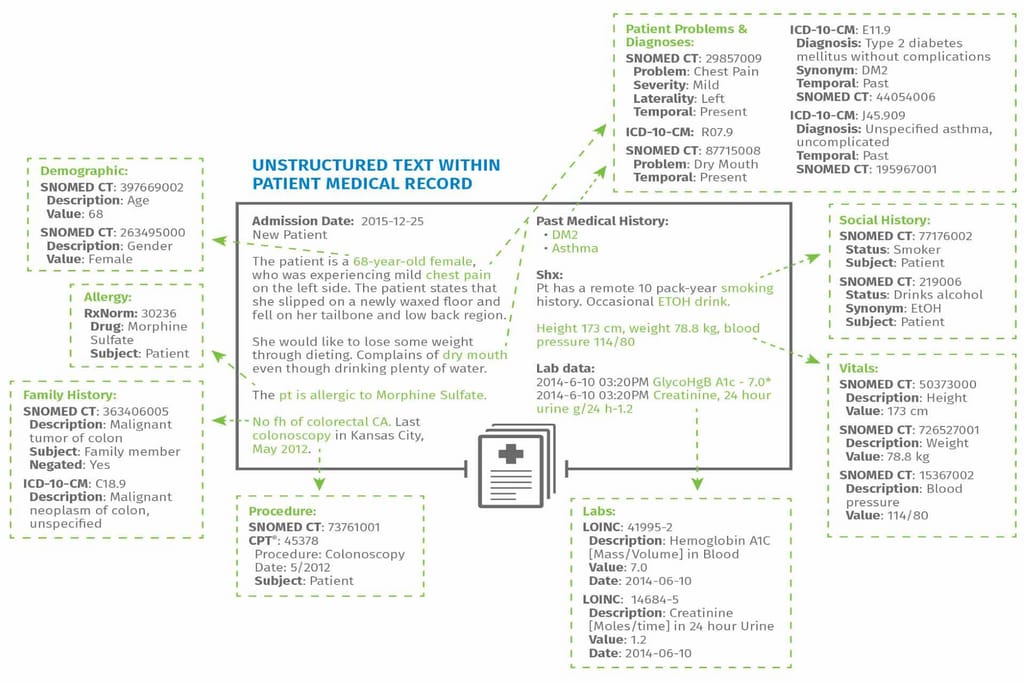

Value hidden in unstructured data Source: Wolters Kluwer

Alternatively, CAC powered by machine learning, or even by a deep learning engine, finds patterns without human intervention and generates its own rules. You simply feed the system admission notes, and it returns highlighted and coded phrases, often taking the context into account as well.

While AI-based CAC is showing excellent results, it’s currently used in a hybrid model: it assists human coders with routine tasks, freeing up their time for the gray areas that often come up in medical coding.
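The ML approach learns phrase-to-code associations from previously coded notes instead of hand-written rules, and the hybrid model follows naturally: predictions below a confidence threshold go to a human coder. The Python sketch below swaps in a from-scratch multinomial naive Bayes classifier, far simpler than the deep learning engines vendors use; the training snippets and the 0.8 threshold are illustrative assumptions.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class NaiveBayesCoder:
    """Multinomial naive Bayes over note tokens, with Laplace (add-one) smoothing."""

    def fit(self, notes: list[str], codes: list[str]) -> "NaiveBayesCoder":
        self.word_counts = defaultdict(Counter)  # code -> token frequencies
        self.code_counts = Counter(codes)        # code -> number of training notes
        for note, code in zip(notes, codes):
            self.word_counts[code].update(tokenize(note))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict_proba(self, note: str) -> dict[str, float]:
        total_notes = sum(self.code_counts.values())
        log_scores = {}
        for code, n_notes in self.code_counts.items():
            counts = self.word_counts[code]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_notes / total_notes)  # log prior
            for token in tokenize(note):
                if token in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[token] + 1) / denom)
            log_scores[code] = score
        # Turn log scores into probabilities (softmax, shifted for numerical stability).
        top = max(log_scores.values())
        exp_scores = {c: math.exp(s - top) for c, s in log_scores.items()}
        z = sum(exp_scores.values())
        return {c: v / z for c, v in exp_scores.items()}


def triage(model: NaiveBayesCoder, note: str, threshold: float = 0.8) -> tuple[str, float, str]:
    """Auto-accept confident codes; route everything else to a human coder."""
    code, p = max(model.predict_proba(note).items(), key=lambda item: item[1])
    return code, p, "auto" if p >= threshold else "human_review"


# Illustrative toy training data, not real coding history.
model = NaiveBayesCoder().fit(
    [
        "elevated blood pressure on repeat readings",
        "blood pressure remains high on lisinopril",
        "elevated a1c glucose poorly controlled on metformin",
        "fasting glucose high a1c above target",
    ],
    ["I10", "I10", "E11.9", "E11.9"],
)
```

On this toy data, "blood pressure high again" is coded I10 with enough confidence to be accepted automatically, while a vague snippet like "elevated on" still leans toward I10 but falls below the threshold and goes to human review, which is the division of labor the hybrid model describes.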

Available solutions

3M 360 Encompass. Integrated with 3M’s Coding and Reimbursement system, Encompass provides multiple reporting capabilities with KPIs (potentially preventable readmissions and complications, patient safety indicators, etc.) and analytics out of the box. The tool’s NLP decisions also follow the principles of explainable AI, meaning that coders can see the evidence behind each suggestion and make adjustments.

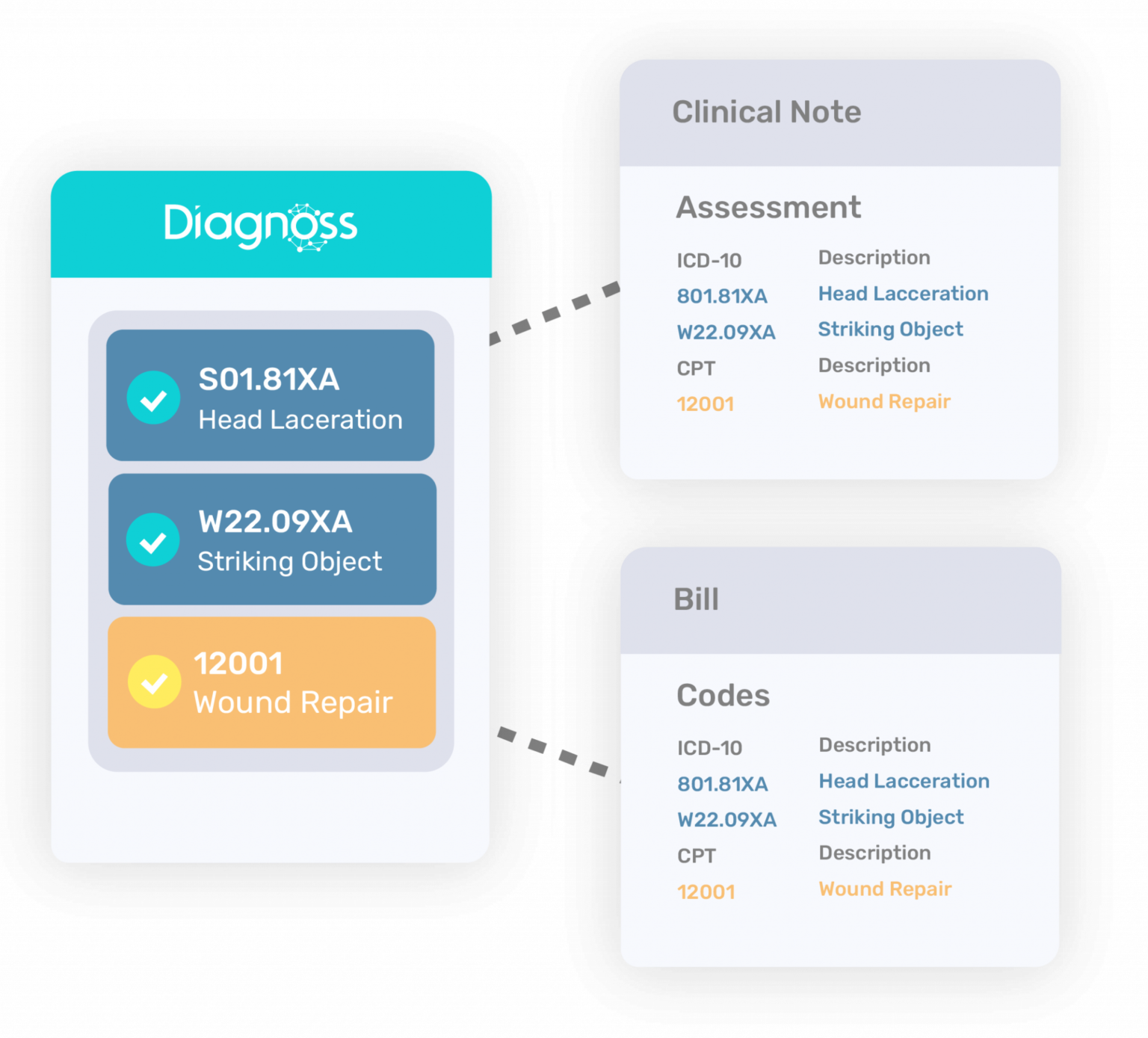

Diagnoss. Diagnoss positions itself as an EHR assistant that helps with both documentation and coding, though its functionality is similar to its competitors’. Additionally, the company can develop custom solutions, offers easy integration via API, and even provides outsourced coders.

Diagnoss helping to apply billing codes

Semantic Health. Designed to integrate with any EHR through a standard FHIR interface, Semantic Health has two tools: SH Coding and SH Auditing. They read clinical documentation as it’s created and apply coding and auditing in real time. The product can be deployed either in the company’s HIPAA-compliant cloud environment or on premises.
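Reading documentation through a standard FHIR interface typically means working with resources such as `DocumentReference`, whose `content[].attachment` can carry the note inline as base64-encoded `data` alongside a `contentType`. The Python sketch below decodes plain-text attachments from such a resource. It is a generic FHIR illustration, not Semantic Health’s integration, and it skips attachments that point to a `url` instead of embedding the text.

```python
import base64


def note_texts(document_reference: dict) -> list[str]:
    """Decode inline plain-text attachments from a FHIR DocumentReference resource."""
    if document_reference.get("resourceType") != "DocumentReference":
        raise ValueError("expected a DocumentReference resource")
    texts = []
    for content in document_reference.get("content", []):
        attachment = content.get("attachment", {})
        # Only inline text is handled; "text/plain; charset=utf-8" also passes this check.
        if attachment.get("contentType", "").startswith("text/plain") and "data" in attachment:
            texts.append(base64.b64decode(attachment["data"]).decode("utf-8"))
    return texts
```

The decoded text is what a coding or auditing engine would then analyze; in practice the resource would be fetched from the EHR’s FHIR server rather than built by hand.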

Determining patient outcomes

Sepsis and heart failure are among the most common and most expensive inpatient conditions. Identifying these cases correctly so that treatment can start sooner is a challenge, since many data points need to be monitored: patient demographics, medications, vital signs, medical problems, and social determinants of health (SDoH).

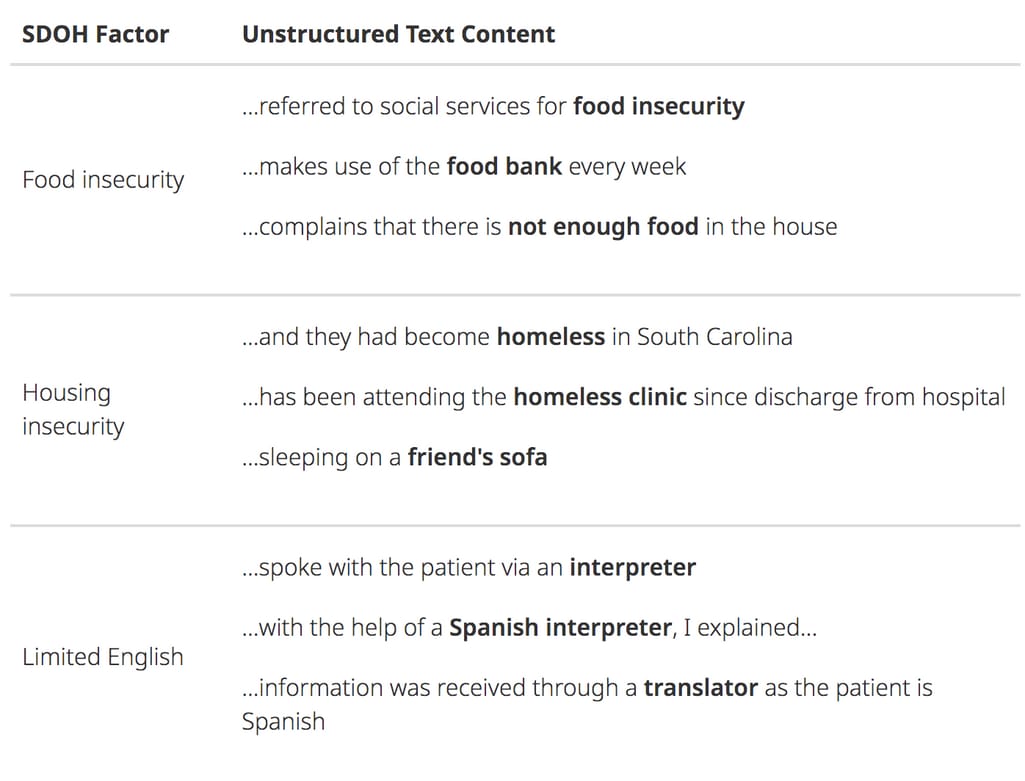

For example, social determinants of health such as economic stability, social context, education, environment, and other factors greatly affect life expectancy and the risk of chronic conditions such as heart disease and diabetes. A recent analysis of data by AmeriHealth Caritas found that Medicaid members who received SDoH screening experienced a 30 percent decline in hospital admissions. NLP can identify these factors by extracting key concepts from call center transcripts, clinical notes, and discharge reports.
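Extracting SDoH concepts comes down to two steps: spotting a mention and checking whether it actually applies to the patient. The Python sketch below pairs a tiny hypothetical lexicon with a NegEx-style rule (a negation cue within a few words before the mention cancels it). The categories, phrases, and cue list are illustrative assumptions, not the method used by Linguamatics or AmeriHealth Caritas.

```python
import re

# Hypothetical lexicon mapping SDoH categories to trigger phrases.
SDOH_LEXICON = {
    "housing_instability": ("homeless", "eviction", "shelter"),
    "food_insecurity": ("food bank", "skipping meals", "not enough food"),
    "transportation": ("no ride", "missed bus", "no car"),
}
# NegEx-style cues: a cue within WINDOW words before a mention negates it.
NEGATION_CUES = ("denies", "no longer", "not")
WINDOW = 3


def extract_sdoh(text: str) -> set[str]:
    """Return the SDoH categories that are mentioned and not negated."""
    found = set()
    for sentence in re.split(r"[.!?]", text.lower()):
        for category, phrases in SDOH_LEXICON.items():
            for phrase in phrases:
                idx = sentence.find(phrase)
                if idx == -1:
                    continue
                # Look only at the few words immediately before the mention.
                preceding = " ".join(sentence[:idx].split()[-WINDOW:])
                if not any(re.search(rf"\b{cue}\b", preceding) for cue in NEGATION_CUES):
                    found.add(category)
    return found
```

For "Patient was evicted last month and is staying at a shelter. Denies skipping meals. Missed bus to last appointment." this flags housing instability and transportation but not food insecurity. Note that "evicted" itself is missed because only "eviction" is listed, a small example of why lexicons give way to trained models.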

Unstructured data containing valuable SDoH information Source: Linguamatics

AI advancements and promised benefits

Numerous studies have shown that AI-powered predictions are accurate at forecasting mortality rates, the risk of emergency department visits, and health outcomes. Predictive analytics is also ripe for adoption, with many clinics already reaping the benefits of data analytics and reporting outstanding results. Many of those results are possible thanks to data derived through natural language processing.

NLP-extracted data also contributes to advancing precision medicine. Researchers at Washington University School of Medicine pull information about the diagnoses, treatments, and outcomes of patients with chronic diseases from EHRs to prepare personalized medical approaches. They consider medical data, along with SDoH data, crucial for developing precision medicine, and see accessing unstructured, narrative data as the biggest challenge.

Available solutions

Linguamatics. A popular provider of text mining systems, Linguamatics works both with healthcare providers, helping them identify at-risk patients, and with pharma giants like AstraZeneca, aiding their clinical research. The Linguamatics NLP platform works like a search engine: it scans multiple data sources to deliver summarized, structured answers to your queries. Its text mining models can be used for various applications, and the software can be deployed in the cloud, on premises, or in a hybrid setup.

John Snow Labs. Named after the Victorian physician who used data analysis to trace the source of the 1854 London cholera outbreak, the company offers Spark NLP, a library with 200+ pretrained models. It can extract specific characteristics from reports, such as the type and intensity of pain, symptoms, attempted home remedies, and more. It also enables real-time decision-making by forecasting bed demand, safe staffing levels, and hospital gridlock.

Emerging NLP applications

These are by no means all NLP applications. Below, we describe a few emerging use cases that are generating a lot of buzz and ongoing research.

Medical image captioning

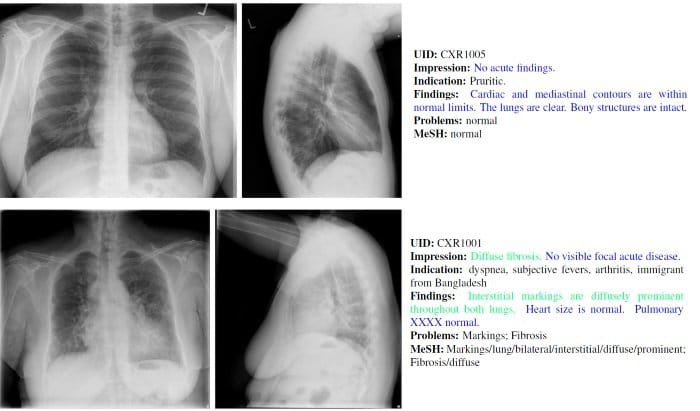

Image captioning is the task of generating a textual description of an image using natural language processing and computer vision. One of the more sophisticated AI tasks, image captioning relies on deep learning methods such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), like the one we used to build our computer vision prototype for x-ray scanning.

A sample from an x-ray images dataset with annotations Source: Augsburg University

Manual annotation is time-consuming and requires a lot of domain knowledge, which makes it even harder to build an automatic model that matches human accuracy. Yet such a solution would greatly speed up diagnosis, so the topic fascinates researchers, and some partly successful approaches already exist. One of the most promising is the attention mechanism, which you can read about in our article covering NLP basics.
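The core idea of attention in captioning is that each time the decoder generates a word, it re-weights the image regions produced by the CNN and focuses on the most relevant ones. Below is a minimal Python sketch of one such step using scaled dot-product scoring; published captioning models often use additive scoring instead, and we assume the region features have already been projected to the same size as the decoder state.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    top = max(scores)  # shift for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: list[float], regions: list[list[float]]) -> tuple[list[float], list[float]]:
    """One attention step over image regions.

    query:   decoder hidden state (what the model is about to describe)
    regions: one feature vector per image region, e.g. from a CNN feature map
    Returns the attention weights and the weighted context vector.
    """
    d = len(query)
    # Scaled dot-product scores: higher when a region aligns with the query.
    scores = [sum(q * r for q, r in zip(query, region)) / math.sqrt(d) for region in regions]
    weights = softmax(scores)
    # Context vector: regions blended according to their weights.
    context = [sum(w * region[i] for w, region in zip(weights, regions)) for i in range(d)]
    return weights, context
```

The context vector is fed to the decoder together with the previous word, and the weights double as a rough map of where the model was "looking" when it produced each word of the caption.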

Virtual patient assistant

Amazon Alexa, Siri, and Google Assistant are some of the first examples of NLP applications that come to mind. Yet even in everyday life, they’re known to make mistakes or misunderstand requests. So they’re not yet ready to be used in a medical setting, where the accuracy of information is critical.

In a 2018 study by Northeastern University, UConn, and Boston Medical Center, participants asked virtual assistants medical questions in their own words. The assistants were able to provide some form of answer for fewer than half of the tasks, and some of their replies were assessed as potentially harmful.

There’s more room for custom development in this area. For example, in 2019, Amazon unveiled a program for creating HIPAA-compliant Alexa skills, allowing organizations such as St. Joseph Health, Boston Children’s Hospital, Express Scripts, Cigna, and others to provide health tips, book appointments, and manage prescriptions.

Clinical trial matching

Patient recruitment is one of the main barriers in the clinical trial process. It is often the most expensive and time-consuming part of a trial, and when patients can’t be found in time, the trial simply doesn’t happen. NLP can change that, in two directions.

Interpreting trial criteria. A system can extract participation criteria from plain text and transform them into a coded query format, which can then be used to easily search a database for eligible people.

Generating questions. For people who search clinical trial databases themselves, an NLP engine can transform those criteria into questions (such as “What is your weight?”), allowing them to filter through thousands of trials to find the ones they’re eligible for.
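Both directions can be illustrated with one toy example. The Python sketch below assumes criteria written in a couple of fixed phrasings (real eligibility text is far messier, which is why research systems rely on trained models). It turns each criterion into a structured range predicate that can query patient records, and turns the same predicate into a question for self-screening. The patterns, field names, and question wording are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Criterion:
    field: str   # e.g. "age" or "bmi"
    low: float   # inclusive lower bound
    high: float  # inclusive upper bound


# Hypothetical phrasings mapped to structured field names.
PATTERNS = {
    "age": re.compile(r"aged?\s+(\d+)\s*(?:to|-)\s*(\d+)", re.IGNORECASE),
    "bmi": re.compile(
        r"bmi\s+(?:between\s+)?(\d+(?:\.\d+)?)\s*(?:and|to|-)\s*(\d+(?:\.\d+)?)", re.IGNORECASE
    ),
}
QUESTIONS = {"age": "How old are you?", "bmi": "What is your body mass index (BMI)?"}


def parse_criteria(text: str) -> list[Criterion]:
    """Interpreting trial criteria: plain text -> structured range predicates."""
    criteria = []
    for name, pattern in PATTERNS.items():
        if (m := pattern.search(text)):
            criteria.append(Criterion(name, float(m.group(1)), float(m.group(2))))
    return criteria


def matches(patient: dict, criteria: list[Criterion]) -> bool:
    """Coded query: eligible only if every criterion's field is present and in range."""
    return all(c.field in patient and c.low <= patient[c.field] <= c.high for c in criteria)


def questions(criteria: list[Criterion]) -> list[str]:
    """Generating questions for people who screen themselves."""
    return [QUESTIONS[c.field] for c in criteria]
```

From "Adults aged 18 to 65 with BMI between 25 and 40." the sketch derives two range criteria, which can filter a patient database or be shown to a self-screening visitor as two questions.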

IBM provides its AI-driven Clinical Trial Matching system to several institutions, most notably Mayo Clinic, which reported an 80 percent increase in enrollment. Other applications, however, are still at the research stage.

So, where does this leave you?

Healthcare NLP challenges and how to prepare for adoption

As you might have noticed, we tried to keep it real and not create the illusion that NLP is easy to adopt in your clinic. There aren’t many mature, ready-to-implement solutions today, but in just a few years more will be ready, so you might as well start preparing now. Here are a few challenges you will have to deal with first.

Medical vocabulary. Healthcare not only has its own terminology; doctors also often use jargon, abbreviations, and shortened phrases in their notes and speech. An NLP system must be trained on a dataset similar to the data it will process in production. This presents a problem when you acquire an off-the-shelf NLP system and complicates custom development.
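Ambiguous abbreviations show why training data has to resemble production data: "MS" can mean multiple sclerosis, mitral stenosis, or morphine sulfate depending on context. The minimal Python sketch below scores each expansion by how many of its cue words appear near the abbreviation. The sense inventory and cue words are hypothetical; real systems learn these associations from annotated notes written in the same setting where they will be deployed.

```python
import re

# Hypothetical sense inventory: abbreviation -> {expansion: context cue words}.
SENSES = {
    "ms": {
        "multiple sclerosis": {"relapse", "mri", "lesions", "neurology"},
        "mitral stenosis": {"murmur", "valve", "echo", "cardiology"},
        "morphine sulfate": {"mg", "pain", "iv", "dose"},
    },
}
WINDOW = 5  # words on each side of the abbreviation to inspect


def normalize(token: str) -> str:
    """Lowercase and strip digits and punctuation ("4mg," -> "mg")."""
    return re.sub(r"[^a-z]", "", token.lower())


def expand_abbreviations(note: str) -> str:
    """Replace known ambiguous abbreviations with the best-supported expansion."""
    tokens = note.split()
    out = []
    for i, token in enumerate(tokens):
        abbr = normalize(token)
        if abbr in SENSES:
            context = {normalize(t) for t in tokens[max(0, i - WINDOW): i + WINDOW + 1]}
            senses = SENSES[abbr]
            best = max(senses, key=lambda sense: len(senses[sense] & context))
            if senses[best] & context:  # keep the original token if no cue matched
                out.append(best)
                continue
        out.append(token)
    return " ".join(out)
```

"Echo shows MS with loud murmur" becomes "Echo shows mitral stenosis with loud murmur", while "Give MS 4 mg IV for pain" resolves to morphine sulfate. When no cue is present, the abbreviation is left untouched rather than guessed, a safer default in a clinical record.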

EHR limitations. An NLP system won’t be able to organize data if an EHR doesn’t have appropriate fields to put it in. So adopting a new data tool often requires extra work on your other applications.

High level of expertise. Someone has to drive your NLP adoption endeavor in terms of data architecture, analytics capabilities, and domain knowledge. If you don’t have a data science team on board, you will need to hire an AI expert to help you tackle the challenges.

Considering this, we want to present three NLP adoption paths you can take.

Pluck the low-hanging fruit

Look for solutions that integrate easily with your EHR, such as those from the providers mentioned above, and focus on just one task. For example, if it takes physicians the longest to find and review radiology reports, start structuring data in that area first.

Also, you don’t have to implement a whole predictive analytics module to help practitioners prioritize patients. By extracting a few parameters from patients’ health histories, you can give doctors the crucial information they need to make a decision.

Use text analysis APIs

The biggest NLP providers in the world -- Google, IBM, Microsoft, and Amazon -- offer high-level APIs that don’t require machine learning expertise to work with. All of them either have separate healthcare-focused services or documented use cases in this domain. While you would need a technology partner to make full use of them, this is a great approach for those who need more than off-the-shelf products offer but don’t intend to set up a full-blown AI operation.

Build custom NLP healthcare tool

This route is for organizations with the largest budgets and the highest ambitions. Such clinics usually enter a long-term partnership with a technology vendor, which lets them come to the table with specific requirements and build a solution around their needs. Here, you can be bold and create features beyond what commercially available products offer, putting yourself well ahead of the competition.

Maryna is a passionate writer with a talent for simplifying complex topics for readers of all backgrounds. With 7 years of experience writing about travel technology, she is well-versed in the field. Outside of her professional writing, she enjoys reading, video games, and fashion.
