Imagine walking through an art exhibition at the renowned Gagosian Gallery, where paintings seem to be a blend of surrealism and lifelike accuracy. One piece catches your eye: It depicts a child with wind-tossed hair staring at the viewer, evoking the feel of the Victorian era through its coloring and what appears to be a simple linen dress. But here’s the twist – these aren’t works of human hands but creations by DALL-E, an AI image generator.

Bennett Miller, Untitled, 2022-23. Pigment print of AI-generated image. Source: Gagosian

The exhibition, produced by film director Bennett Miller, pushes us to question the essence of creativity and authenticity as artificial intelligence (AI) starts to blur the lines between human art and machine generation. Interestingly, Miller has spent the last few years making a documentary about AI, during which he interviewed Sam Altman, the CEO of OpenAI — an American AI research laboratory. This connection led to Miller gaining early beta access to DALL-E, which he then used to create the artwork for the exhibition.

Now, this example throws us into an intriguing realm where image generation and creating visually rich content are at the forefront of AI's capabilities. Industries and creatives are increasingly tapping into AI for image creation, making it imperative to understand: How should one approach image generation through AI?

In this article, we delve into the mechanics, applications, and debates surrounding AI image generation, shedding light on how these technologies work, their potential benefits, and the ethical considerations they bring along.

Image generation explained

What is AI image generation?

AI image generators utilize trained artificial neural networks to create images from scratch. These generators have the capacity to create original, realistic visuals based on textual input provided in natural language. What makes them particularly remarkable is their ability to fuse styles, concepts, and attributes to fabricate artistic and contextually relevant imagery. This is made possible through Generative AI, a subset of artificial intelligence focused on content creation.

AI image generators are trained on large datasets of images. Through the training process, the algorithms learn different aspects and characteristics of the images within the datasets. As a result, they become capable of generating new images that bear similarities in style and content to those found in the training data.

There is a wide variety of AI image generators, each with its own unique capabilities. Notable among these are neural style transfer, which imposes one image's style onto another; Generative Adversarial Networks (GANs), which train a pair of competing neural networks to produce realistic images resembling those in the training dataset; and diffusion models, which generate images through a process inspired by the diffusion of particles, progressively transforming noise into structured images.

How AI image generators work: Introduction to the technologies behind AI image generation

In this section, we will examine the intricate workings of the standout AI image generators mentioned earlier, focusing on how these models are trained to create pictures.

Text understanding using NLP

AI image generators understand text prompts by translating the text into a machine-friendly form: numerical representations, or embeddings. This conversion is performed by a text encoder, such as the one in the Contrastive Language-Image Pre-training (CLIP) model used in diffusion models like DALL-E 2.

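This mechanism transforms the input text into high-dimensional vectors that capture the semantic meaning and context of the text. Individual coordinates rarely correspond to a single human-readable attribute; rather, the meaning is spread across the vector as a whole, so that prompts with similar meanings end up close together.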

Consider an example where a user inputs the text prompt "a red apple on a tree" to an image generator. The NLP model encodes this text into a numerical format that captures the various elements — "red," "apple," and "tree" — and the relationship between them. This numerical representation acts as a navigational map for the AI image generator.

During the image creation process, this map guides the generator through the vast space of possible images. It serves as a rulebook that tells the AI which components to incorporate into the image and how they should interact. In the given scenario, the generator would create an image with a red apple and a tree, positioning the apple on the tree, not next to it or beneath it.

This smart transformation from text to numerical representation, and eventually to images, enables AI image generators to interpret and visually represent text prompts.
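
To make the idea of an embedding more concrete, here is a deliberately simplified sketch. It is not how CLIP works: the hash-based `word_vector` function and the 16-dimensional size are assumptions made purely for illustration, and unlike a real text encoder, this toy ignores word order and the relationships between words. It only shows the general shape of the step: text goes in, a fixed-length vector comes out, and vectors can be compared numerically.

```python
import hashlib
import math

DIM = 16  # toy size (assumption); real text encoders use far more dimensions


def word_vector(word):
    """Map a word to a fixed pseudo-random vector derived from its hash."""
    digest = hashlib.sha256(word.lower().encode("utf-8")).digest()
    # Scale each byte from [0, 255] to [-1, 1].
    return [(byte / 255.0) * 2 - 1 for byte in digest[:DIM]]


def embed(prompt):
    """Sum the word vectors of a prompt and normalise to unit length."""
    total = [0.0] * DIM
    for word in prompt.split():
        for i, value in enumerate(word_vector(word)):
            total[i] += value
    norm = math.sqrt(sum(v * v for v in total)) or 1.0
    return [v / norm for v in total]


def cosine(a, b):
    """Cosine similarity of two unit-length vectors (their dot product)."""
    return sum(x * y for x, y in zip(a, b))


apple_on_tree = embed("a red apple on a tree")
apple_on_branch = embed("a red apple on a branch")
print(len(apple_on_tree))                       # length of the embedding
print(cosine(apple_on_tree, apple_on_branch))   # similarity score in [-1, 1]
```

In this toy, prompts that share most of their words will usually score close to 1. A trained encoder goes much further and places prompts with similar meanings near each other even when they share no words at all.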

Generative Adversarial Networks (GANs)

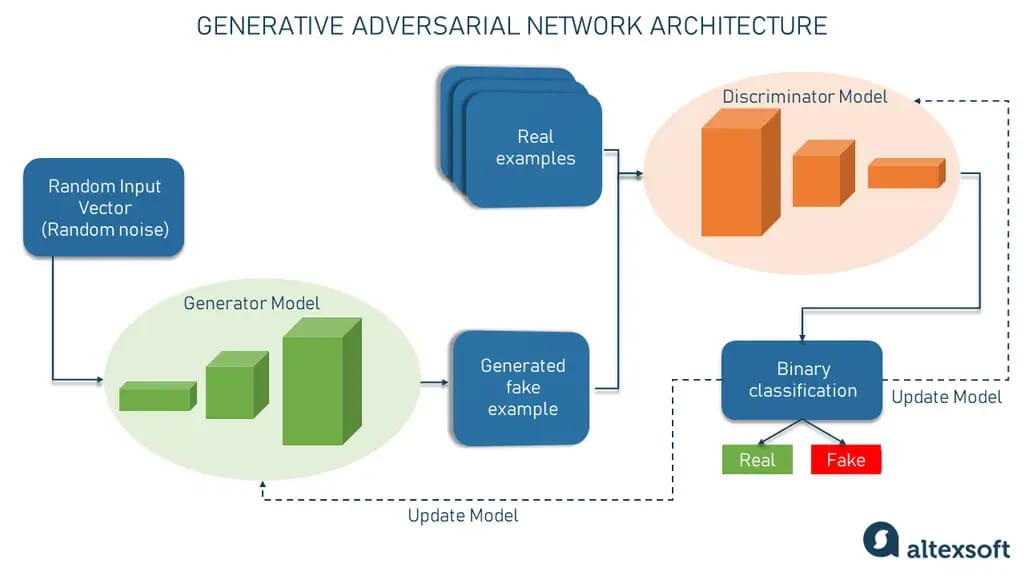

Generative Adversarial Networks, commonly called GANs, are a class of machine learning algorithms that harness the power of two competing neural networks – the generator and the discriminator. The term “adversarial” arises from the concept that these networks are pitted against each other in a contest that resembles a zero-sum game.

In 2014, GANs were introduced by Ian Goodfellow and his colleagues at the University of Montreal. Their groundbreaking work was published in a paper titled “Generative Adversarial Networks.” This innovation sparked a flurry of research and practical applications, making GANs one of the most widely used families of generative AI models.

How GANs work in a nutshell.

GAN architecture. GANs consist of two core components, known as sub-models:

- The generator neural network is responsible for generating fake samples. It takes a random input vector (a list of numbers sampled from a simple noise distribution) and transforms it into fake data.

- The discriminator neural network functions as a binary classifier. It takes a sample as input and determines whether it is real or produced by the generator.
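
The two roles can be sketched in a few lines of Python. This is only an illustration under strong assumptions: the "generator" is a single weighted sum and the "discriminator" is a single logistic unit, whereas real GANs use deep networks for both. The weights below are arbitrary placeholder values, not trained parameters.

```python
import math
import random


def generator(noise, weights):
    """Turn a random noise vector into a fake 'sample' (here, one number)."""
    return sum(w * z for w, z in zip(weights, noise))


def discriminator(sample, weight, bias):
    """Return the estimated probability that the sample is real."""
    return 1.0 / (1.0 + math.exp(-(weight * sample + bias)))


rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(4)]       # random input vector
fake = generator(noise, weights=[0.5, -0.2, 0.1, 0.3])
score = discriminator(fake, weight=1.0, bias=0.0)      # value between 0 and 1
print(fake, score)
```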

The adversarial game. The adversarial nature of GANs is derived from game theory. The generator aims to produce fake samples that are indistinguishable from real data, while the discriminator endeavors to accurately identify whether a sample is real or fake. This ongoing contest ensures that both networks are continually learning and improving.

In this simplified picture, whenever the discriminator accurately classifies a sample, it is deemed the winner, and the generator undergoes an update to enhance its performance. Conversely, if the generator successfully fools the discriminator, it is considered the winner, and the discriminator is updated. In practice, training typically alternates updates to both networks, with each one adjusted according to how well it did in the latest round.

The process is considered successful when the generator crafts a convincing sample that not only dupes the discriminator but is also difficult for humans to distinguish.

For the discriminator to effectively evaluate the images generated, it needs to have a reference for what authentic images look like, and this is where labeled data comes into play.

During training, the discriminator is fed with both real images (labeled as real) and images generated by the generator (labeled as fake). This labeled dataset is the "ground truth" that enables a feedback loop. The feedback loop helps the discriminator to learn how to distinguish real images from fake ones more effectively. Simultaneously, the generator receives feedback on how well it fooled the discriminator and uses this feedback to improve its image generation.
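
The feedback loop can be expressed as two loss functions. The sketch below assumes the common binary cross-entropy formulation and the "non-saturating" generator loss; other GAN variants use different objectives. The scores are made-up discriminator outputs, where a score is the estimated probability that an image is real.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for one prediction in (0, 1) and a 0/1 label."""
    eps = 1e-12  # guard against log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(real_scores, fake_scores):
    """Low when real images score near 1 and generated images score near 0."""
    real_term = sum(bce(s, 1.0) for s in real_scores) / len(real_scores)
    fake_term = sum(bce(s, 0.0) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Low when the discriminator mistakes generated images for real ones."""
    return sum(bce(s, 1.0) for s in fake_scores) / len(fake_scores)


# Discriminator is winning: fakes get low scores.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]), generator_loss([0.1, 0.05]))
# Generator is winning: fakes get high scores.
print(discriminator_loss([0.9, 0.95], [0.8, 0.9]), generator_loss([0.8, 0.9]))
```

Each network is updated to lower its own loss, and because lowering one tends to raise the other, the two keep pushing each other to improve.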

The game is never-ending: When the discriminator gets better at identifying fakes, the generator must produce more convincing samples to keep fooling it, and the cycle continues.

Diffusion Models

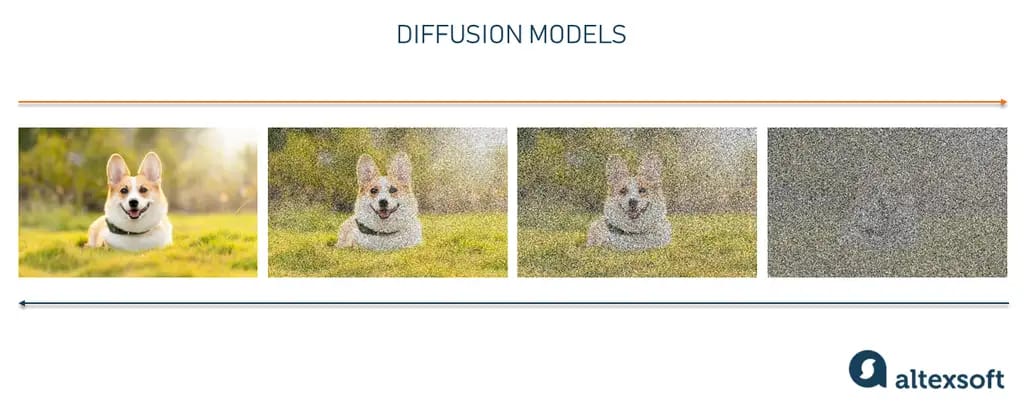

Diffusion models are a type of generative model in machine learning that create new data, such as images or sounds, by imitating the data they have been trained on. They accomplish this by applying a process similar to diffusion, hence the name. They progressively add noise to the data and then learn how to reverse it to create new, similar data.

Think of diffusion models as master chefs who learn to make dishes that taste just like the ones they've tried before. The chef tastes a dish, understands the ingredients, and then makes a new dish that tastes very similar. Similarly, diffusion models can generate data (like images) that are very much like the ones they’ve been trained on.

Diffusion models transitioning back and forth between data and noise.

Let’s take a look at the process in more detail.

Forward diffusion (Adding ingredients to a basic dish). In this stage, the model starts with an original piece of data, such as an image, and gradually adds random noise through a series of steps. This is done through a Markov chain, where at each step, the data is altered based on its state in the previous step. The noise that is added is called Gaussian noise, which is a common type of random noise.
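
A minimal sketch of the forward process follows. It assumes a linear noise schedule with 1,000 steps and noise levels rising from 0.0001 to 0.02, values that are common in the literature but are not specified in this article, and it treats an "image" as a short list of pixel values. It also uses the standard shortcut of jumping directly to step t instead of looping through every intermediate step.

```python
import math
import random


def linear_beta_schedule(steps, start=1e-4, end=0.02):
    """Per-step noise levels, growing linearly (assumed schedule)."""
    return [start + (end - start) * i / (steps - 1) for i in range(steps)]


def cumulative_signal(betas):
    """alpha_bar[t]: share of the original signal variance left after step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noisy_version(pixels, t, alpha_bars, rng):
    """Jump to step t: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise."""
    keep = math.sqrt(alpha_bars[t])
    spread = math.sqrt(1.0 - alpha_bars[t])
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_signal(betas)
rng = random.Random(0)
image = [0.8, -0.3, 0.5, 0.1]  # toy "image" with four pixel values

slightly_noisy = noisy_version(image, 10, alpha_bars, rng)
mostly_noise = noisy_version(image, 999, alpha_bars, rng)
print(alpha_bars[10], alpha_bars[999])
```

Early in the chain almost all of the original signal survives; by the last step the share of signal is tiny and the result is essentially pure Gaussian noise.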

Training (Understanding the tastes). Here, the model learns how the noise added during the forward diffusion alters the data. It maps out the journey from the original data to the noisy version. The aim is to master this journey so well that the model can effectively navigate it backward. The model learns to estimate the difference between the original data and the noisy versions at each step. The objective of training a diffusion model is to master the reverse process.

Reverse diffusion (Recreating the dish). Once the model is trained, it's time to reverse the process. It takes the noisy data and removes the noise step by step, retracing the forward journey in the opposite direction. By doing so, the model can produce new data that resembles the original.

Generating new data (Making a new dish). Finally, the model can use what it learned in the reverse diffusion process to create new data. It starts with random noise, which is like a messy bunch of pixels. Alongside, it takes in a text prompt that guides the model in shaping the noise.

The text prompt is like an instruction manual. It tells the model what the final image should look like. As the model iterates through the reverse diffusion steps, it gradually transforms this noise into an image while trying to ensure that the content of the generated image aligns with the text prompt. This is done by minimizing the difference between the features of the generated image and the features that would be expected based on the text prompt.
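
The reverse loop can be sketched as follows, with two loud caveats. First, there is no trained network here: the hypothetical `oracle_noise` function stands in for one by computing the exact noise for a "dataset" that contains a single number, `target`. Second, text conditioning is left out entirely. The update rule is the standard one from denoising diffusion models, and the schedule values are assumptions.

```python
import math
import random


def sample(target, steps=200, seed=0):
    """Run the reverse diffusion loop on a single number."""
    rng = random.Random(seed)
    # Assumed linear noise schedule from 1e-4 to 0.02.
    betas = [1e-4 + (0.02 - 1e-4) * i / (steps - 1) for i in range(steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)

    def oracle_noise(x_t, t):
        # Stand-in for the trained model: the exact noise contained in x_t
        # when the only possible clean value is `target`.
        return (x_t - math.sqrt(alpha_bars[t]) * target) / math.sqrt(1.0 - alpha_bars[t])

    x = rng.gauss(0.0, 1.0)  # start from pure random noise
    for t in reversed(range(steps)):
        eps = oracle_noise(x, t)
        # Remove the predicted noise for this step.
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps) / math.sqrt(alphas[t])
        # Add a little fresh noise, except at the final step.
        fresh = rng.gauss(0.0, 1.0) if t > 0 else 0.0
        x = mean + math.sqrt(betas[t]) * fresh
    return x


print(sample(3.0))
```

Because the oracle knows the answer, the loop ends on the target value. A real model only has a learned estimate of the noise, and the text prompt is fed into that estimate at every step so that the denoising is steered toward an image matching the description.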

This method of learning to add noise and then mastering how to reverse it is what makes diffusion models capable of generating realistic images, sounds, and other types of data.

Neural Style Transfer (NST)

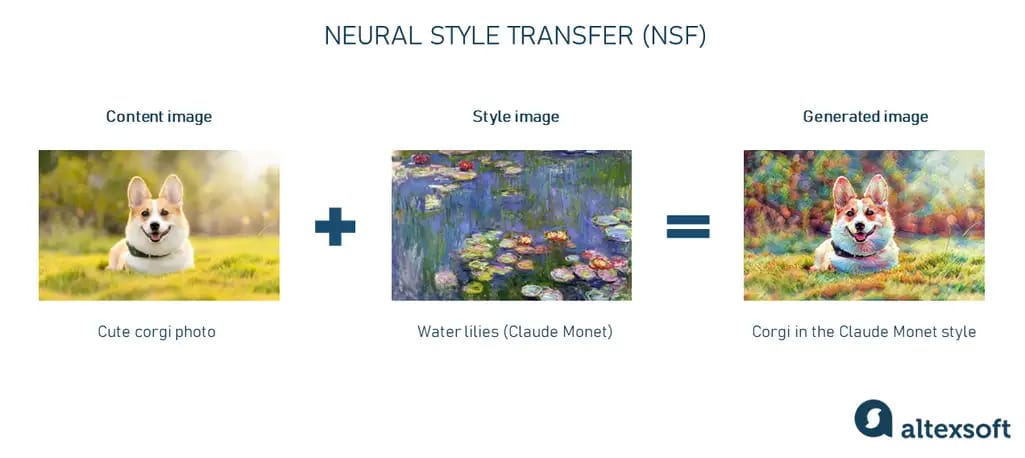

Neural Style Transfer (NST) is a deep learning application that fuses the content of one image with the style of another image to create a brand-new piece of art.

The high-level overview of Neural Style Transfer.

At a high level, NST uses a pretrained network to analyze visuals and employs additional measures to borrow the style from one image and apply it to another. This results in synthesizing a new image that brings together the desired features.

The process involves three core images.

- Content image — This is the image whose content you wish to retain.

- Style image — This one provides the artistic style you want to impose on the content image.

- Generated image — Initially, this could be a random image or a copy of the content image. This image is modified over time to blend the content of the content image with the style of the style image. It is the only variable that the algorithm actually changes through the process.

Delving into the mechanics, it’s worth mentioning that neural networks used in NST have layers of neurons. Layers that come first might detect edges and colors, but as you go deeper into the network, the layers combine these basic features to recognize more complex features, such as textures and shapes. NST cleverly uses these layers to isolate and manipulate content and style.

Content loss. When you want to retain the “content” of the original image, it means that you want the generated image to have recognizable features of the original image. Content loss is a measure of how much the content of the generated image differs from the content of the original content image. NST uses the activations of layers in the pretrained network to capture the main elements in the image and ensure that, in the generated content, these elements are similar to those in the original input.

Style loss. Style is more about the textures, colors, and patterns in the image. Style loss measures how much these patterns and textures differ between the generated image and the style image. NST attempts to match the textures and patterns across the layers between the style image and the generated image.

Total loss. NST combines the content loss and style loss into a single measure called the total loss. There's a balancing act here: If you focus too much on matching content, you might lose style, and vice versa. NST allows you to weigh how much you care about content versus style in the total loss. It then uses an optimization algorithm to change the pixels in the generated image to minimize this total loss.
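
The three losses can be sketched with plain Python lists. This is a simplified illustration: it assumes a single layer whose activations are given as a list of channels (each a list of values, one per image position), and it assumes the commonly used Gram matrix as the style statistic. The feature values and weights are made up; a real implementation would take activations from several layers of a pretrained network.

```python
def gram_matrix(features):
    """Channel-by-channel correlations, averaged over image positions."""
    n_positions = len(features[0])
    return [
        [sum(a * b for a, b in zip(row_i, row_j)) / n_positions for row_j in features]
        for row_i in features
    ]


def mean_squared_difference(a, b):
    """Average squared difference between two equally shaped nested lists."""
    flat_a = [v for row in a for v in row]
    flat_b = [v for row in b for v in row]
    return sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)) / len(flat_a)


def content_loss(generated, content):
    """Compare activations position by position."""
    return mean_squared_difference(generated, content)


def style_loss(generated, style):
    """Compare Gram matrices, which ignore where a pattern occurs."""
    return mean_squared_difference(gram_matrix(generated), gram_matrix(style))


def total_loss(generated, content, style, content_weight=1.0, style_weight=1000.0):
    """Weighted sum; the weights set the content-versus-style trade-off."""
    return (content_weight * content_loss(generated, content)
            + style_weight * style_loss(generated, style))


features = [[1.0, 0.0, 2.0, 0.0], [0.0, 1.0, 0.0, 2.0]]  # 2 channels, 4 positions
mirrored = [row[::-1] for row in features]                # same patterns, moved

print(content_loss(mirrored, features))  # 2.5: positions no longer line up
print(style_loss(mirrored, features))    # 0.0: the texture statistics are unchanged
```

The example shows why the two losses pull in different directions: mirroring the features changes where things are (content) without changing which patterns occur together (style).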

As the optimization progresses, the generated image takes the content and style from different images. The end result is an appealing blend of the two, often bearing a striking resemblance to a piece of art.

GANs, NST, and diffusion models are just a few AI image-generation technologies that have recently garnered attention. Many other sophisticated techniques are emerging in this fast-paced and evolving field as researchers continue to push the boundaries of what's possible with AI in image generation.

Let’s now move to the existing tools you can opt for to generate content.

Exploring popular AI image generators

In this section, we will overview the key text-to-image AI players that can generate incredible visuals based on the provided text prompts.

DALL-E 2

DALL-E is an AI image-generation technology developed by OpenAI. The name DALL-E is a fusion of Dalí and WALL-E, symbolizing the blend of art with AI, with Dalí referring to the surrealist artist Salvador Dalí and WALL-E referencing the endearing Pixar robot.

Overview and features. DALL-E 2, the evolved version of the original DALL-E, was released in April 2022 and is built on an advanced architecture that employs a diffusion model, integrating data from CLIP. Developed by OpenAI, CLIP (Contrastive Language-Image Pre-training) is a model that connects visual and textual representations and is good at matching images with text descriptions. DALL-E 2 relies on CLIP's text encoder to interpret natural language prompts, whereas its predecessor was built on a version of the GPT-3 architecture.

Technically, DALL-E 2 comprises two primary components: the Prior and the Decoder. The Prior is tasked with converting user input into a representation of an image by using text labels to create CLIP image embeddings that allow DALL-E 2 to understand and match the textual description with visual elements in the images it creates. The Decoder then takes these CLIP image embeddings and generates a corresponding image.

Compared to the original DALL-E, which used a Discrete Variational Auto-Encoder (dVAE), DALL-E 2 is more efficient and capable of generating images with four times the resolution. Additionally, it offers improved speed and flexibility in image sizes. Users also have the advantage of a wider range of image customization options, including specifying different artistic styles like pixel art or oil painting and utilizing outpainting to generate images as extensions of existing ones.

Price. As for the cost, DALL-E operates on a credit-based system. Users can purchase credits for as low as $15 per 115 credits, and each credit can be used for a single image generation, edit request, or variation request through DALL-E on OpenAI’s platform. Early adopters registered before April 6, 2023, are eligible for free credits.

Midjourney

Midjourney is an AI-driven text-to-picture service developed by the San Francisco-based research lab, Midjourney, Inc. This service empowers users to turn textual descriptions into images, catering to a diverse spectrum of art forms, from realistic portrayals to abstract compositions. Currently, access to Midjourney is exclusively via a Discord bot on their official Discord server. Users employ the ‘/imagine’ command, inputting textual prompts to generate images, which the bot subsequently returns.

Pictures of corgi cupcakes generated by Midjourney using the prompt “a cupcake made of a cute corgi dog, in cartoon style, draw.”

Overview and features. Midjourney’s AI is configured to favor the creation of visually appealing, painterly images. The algorithm inclines towards images that exhibit complementary colors, an artful balance of light and shadow, sharp details, and a composition characterized by pleasing symmetry or perspective.

It is based on a diffusion model, similar to DALL-E and Stable Diffusion, which turns random noise into artistic creations. As of March 15, 2023, Midjourney utilizes its V5 model, a significant upgrade from its V4 model, incorporating a novel AI architecture and codebase. Notably, Midjourney’s developers have not divulged details regarding their training models or source code.

Currently, the resolution of the images generated by Midjourney is relatively low, with the default size being 1,024 x 1,024 pixels at 72ppi. However, the upcoming Midjourney 6, expected to be released in July 2023, is anticipated to feature higher-resolution images, which would be more suitable for printing.

Pricing. Midjourney offers four distinct subscription plans catering to different user needs. The Basic Plan is priced at $10 per month, the Standard Plan at $30 per month, the Pro Plan at $60 per month, and the Mega Plan at $120 per month. Irrespective of the chosen plan, subscribers gain access to the member gallery, the Discord server, and terms for commercial usage, among other features.

Stable Diffusion

Stable Diffusion is a text-to-image generative AI model initially launched in 2022. It is the product of a collaboration between Stability AI, EleutherAI, and LAION. Along with the ability to create detailed and visually appealing images based on textual descriptions, it can perform tasks such as inpainting (filling in missing parts of images), outpainting (extending images), and image-to-image transformations.

Overview and features. Stable Diffusion utilizes the Latent Diffusion Model (LDM), a sophisticated way of generating images from text. It makes image creation a gradual process, much like "diffusion." It starts with random noise and gradually refines the image to align it with the textual description provided.
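
A quick back-of-the-envelope calculation shows why working in a latent space matters. The figures below are not from this article: they assume the commonly cited setup of the first Stable Diffusion release, with 512 x 512 RGB images compressed by a factor of 8 along each side into a latent with 4 channels.

```python
# Assumed sizes for the first Stable Diffusion release (not stated in this article).
pixel_values = 512 * 512 * 3   # height x width x RGB channels
latent_values = 64 * 64 * 4    # 512 / 8 = 64 per side, 4 latent channels

ratio = pixel_values / latent_values
print(pixel_values, latent_values, ratio)  # 786432 16384 48.0
```

Under these assumptions, each denoising step handles roughly 48 times fewer values than it would in pixel space, which is a large part of why the model can run on consumer-grade graphics cards.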

Initially, Stable Diffusion used a frozen CLIP ViT-L/14 text encoder, but its second version incorporates OpenCLIP, an open-source implementation of CLIP with a larger text encoder, to convert text into embeddings. This makes it capable of generating even more detailed images.

Another remarkable feature of Stable Diffusion is its open-source nature. This trait, along with its ease of use and the ability to operate on consumer-grade graphics cards, democratizes the image generation landscape, inviting participation and contribution from a broad audience.

Pricing. Stable Diffusion is competitively priced at $0.0023 per image. Additionally, there is a free trial available for newcomers who wish to explore the service. However, it is important to note that due to a large number of users, the service may sometimes experience server issues.
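
For a rough comparison, the per-image cost implied by the prices quoted in this article can be worked out as follows. This is only arithmetic on the figures above; it ignores free credits, trials, and any price changes since the time of writing.

```python
# Prices as quoted in this article.
dalle_cost_per_image = 15 / 115        # $15 buys 115 credits, one credit per image
stable_diffusion_cost_per_image = 0.0023

ratio = dalle_cost_per_image / stable_diffusion_cost_per_image
print(round(dalle_cost_per_image, 2))  # 0.13
print(round(ratio))                    # 57
```

In other words, at these list prices a DALL-E image costs about 13 cents, dozens of times more than a Stable Diffusion image.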

Popular applications and use cases of AI image generators

AI image generation technology has myriad applications. For instance, these tools can spur creativity among artists, serve as a valuable tool for educators, and accelerate the product design process by rapidly visualizing new designs.

Entertainment

In the entertainment industry, AI image generators create realistic environments and characters for video games and movies. This saves time and resources that would be used to manually create these elements.

One exceptional example is The Frost, a groundbreaking 12-minute movie in which AI generates every shot. It is one of the most impressive and bizarre examples of this burgeoning genre.

The AI-generated film called The Frost.

The Frost was created by the Waymark AI platform using a script written by Josh Rubin, an executive producer at the company who directed the film. Waymark fed the script to OpenAI’s image-making model DALL-E 2.

After some trial and error to achieve the desired style, DALL-E 2 was used to generate every single shot in the film. Subsequently, Waymark employed D-ID, an AI tool adept at adding movement to still images, to animate these shots, making eyes blink and lips move.

Marketing and advertising

In marketing and advertising, AI image generators can quickly produce campaign visuals. For instance, instead of organizing a photo shoot for a new product, marketers can use AI to generate high-quality images that can be used in promotional materials.

The magazine Cosmopolitan made a groundbreaking move in June 2022 by releasing a cover entirely created by artificial intelligence. The cover image was generated using DALL-E 2, an AI-powered image generator developed by OpenAI.

The Cosmopolitan magazine cover created by AI. Source: Cosmopolitan

The input given to DALL-E 2 for generating the image was, “A wide angle shot from below of a female astronaut with an athletic female body walking with swagger on Mars in an infinite universe, synthwave, digital art.” An intricate and futuristic illustration of a female astronaut on Mars was chosen as the official cover. Notably, this marked the first time an AI-generated image was used as the cover of a major magazine, showcasing the potential of AI in the creative industry.

Medical imaging

In the medical field, AI image generators play a crucial role in improving the quality of diagnostic images. For example, AI can be used to generate clearer and more detailed images of tissues and organs, which helps in making more accurate diagnoses.

In a study conducted by researchers from Germany and the United States, the capabilities of DALL-E 2 were explored in the medical field, specifically for generating and manipulating radiological images such as X-rays, CT scans, MRIs, and ultrasounds. The study revealed that DALL-E 2 was particularly proficient in creating realistic X-ray images from short text prompts and could even reconstruct missing elements in a radiological image. For instance, it could create a full-body radiograph from a single knee image. However, it struggled with generating images with pathological abnormalities and didn't perform as well in creating specific CT, MRI, or ultrasound images.

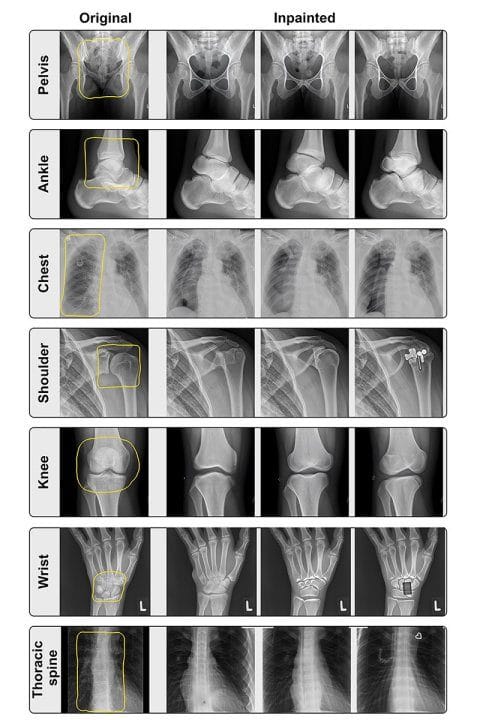

DALL-E 2 was used to reconstruct areas in X-ray images of various anatomical locations. In the experiment, sections of the original images, indicated by yellow borders, were erased before the remaining portions of the images were provided to DALL-E 2 for reconstruction. Source: Adams et al., Journal of Medical Internet Research 2023

The synthetic data generated by DALL-E 2 can potentially speed up the development of new deep-learning tools in radiology. They can also address privacy issues concerning data sharing between medical institutions.

These applications are just the tip of the iceberg. As AI image generation technology continues to evolve, it's expected to unlock even more possibilities across diverse sectors.

Limitations and controversies surrounding AI image generators

While AI image generators can create visually stunning and oftentimes hyperrealistic imagery, they bring several limitations and controversies along with the excitement.

Quality and authenticity issues

It’s not a secret that AI systems often struggle with producing images free from imperfections or representative of real-world diversity. The inability to generate flawless human faces, reliance on potentially biased pretrained datasets, and challenges in fine-tuning AI models are some of the critical hurdles in ensuring quality and authenticity.

Challenges in generating realistic human faces. Despite the remarkable progress, AI still faces challenges in generating human faces that are indistinguishable from real photographs. For instance, NVIDIA’s StyleGAN has been notorious for generating human faces with subtle imperfections like unnatural teeth alignments or earrings appearing only on one ear. DALL-E and Midjourney often depict human hands with extremely long fingers or add extra ones. Look at the picture below: Can you tell how many fingers there are on each hand?

This is how DALL-E 2 recreates human hands. Source: Science Focus

Dependency on training data. The authenticity and quality of AI-generated images heavily depend on the datasets used to train the models. As a result, any bias in the training data can carry over into the generated images.

For example, the Gender Shades project, led by Joy Buolamwini at the MIT Media Lab, assessed the accuracy of commercial AI gender classification systems across different skin tones and genders. The study exposed significant biases in systems from major companies like IBM, Microsoft, and Face++, revealing higher accuracy for lighter-skinned males compared to darker-skinned females. The stark contrast in error rates emphasized the need for more diverse training datasets to mitigate biases in AI models. This project has been instrumental in sparking a broader conversation on fairness, accountability, and transparency in AI systems.

Difficulty in fine-tuning. Achieving the desired level of detail and realism requires meticulous fine-tuning of model parameters, which can be complex and time-consuming. This is particularly evident in the medical field, where AI-generated images used for diagnosis need to have high precision.

Copyright and intellectual property issues

The deployment of AI-generated images raises significant ethical questions, especially when used in contexts that require authenticity and objectivity, such as journalism and historical documentation.

Resemblance to copyrighted material. AI-generated images might inadvertently resemble existing copyrighted material, leading to legal issues regarding infringement. In January 2023, three artists filed a lawsuit against top companies in the AI art generation space, including Stability AI, Midjourney, and DeviantArt, claiming that the companies were using copyrighted images to train their AI algorithms without their consent.

Ownership and rights. Determining who owns the rights to images created by AI is a gray area. The recent case where an AI-generated artwork won first place at the Colorado State Fair's fine arts competition exemplifies this. The artwork, submitted by Jason Allen, was created using the Midjourney program and AI Gigapixel.

Jason Allen's AI-created piece "Théâtre D’opéra Spatial" that clinched the top spot in the digital category at the Colorado State Fair. Source: The New York Times

Many artists argued that since AI generated the artwork, it shouldn’t have been considered original. This incident highlighted the challenges in determining ownership and eligibility of AI-generated art in traditional spaces.

The proliferation of deepfakes and misinformation

Creation of deceptive media. AI image generators can create deepfakes — realistic images or videos that depict events that never occurred. This has serious implications, as deepfakes can be used to spread misinformation or for malicious purposes.

Fake image of Donald Trump being arrested. Source: The Times

For instance, deepfake videos of politicians have been used to spread false information. In March 2023, AI-generated deepfake images depicting the fake arrest of former President Donald Trump spread across the internet. Created with Midjourney, the images showed Trump seemingly fleeing and being arrested by the NYPD. Eliot Higgins, founder of Bellingcat, shared these images on Twitter, while some users falsely claimed them to be real.

Detection challenges. Deepfakes are becoming increasingly sophisticated, making it difficult to distinguish them from authentic content. Social media platforms and news outlets often struggle to rapidly identify and remove deepfake content, which allows misinformation to spread. The Donald Trump arrest case illustrates this well.

Future: Will AI image generators replace human artists?

As AI image generation technology evolves, the question arises: Will these AI systems eventually supplant professional artists?

The succinct answer is likely no.

Although AI has made remarkable strides, it still lacks the nuanced creativity and emotion that human artists bring to their work. Furthermore, AI image generators are inherently limited by their reliance on text prompts. As noted by writer and artist Kevin Kelley in a recent interview, this constraint can be stifling: “...we’re using a conversational interface to try to make art, but there’s a lot of art that humans create that can’t be reduced to language. You can’t get there by using language. There’s the art that I’ve been trying to make, and I realize I’m never going to be able to make it with an AI because I need language to get there. There’re lots of things that we can’t get to with language.”

Therefore, it is more conceivable that AI will serve as a tool to assist and empower artists in their creative endeavors rather than replace them. It has the potential to enrich the artistic process by offering new avenues for exploration and facilitating the production of high-quality art.