Artificial intelligence is changing the way we design, test, deploy, and maintain software. However, many teams are still unsure where to apply it in their workflows. This article gives a practical overview of how AI fits into each stage of the product development process and which tools are available to build an AI-driven workflow.

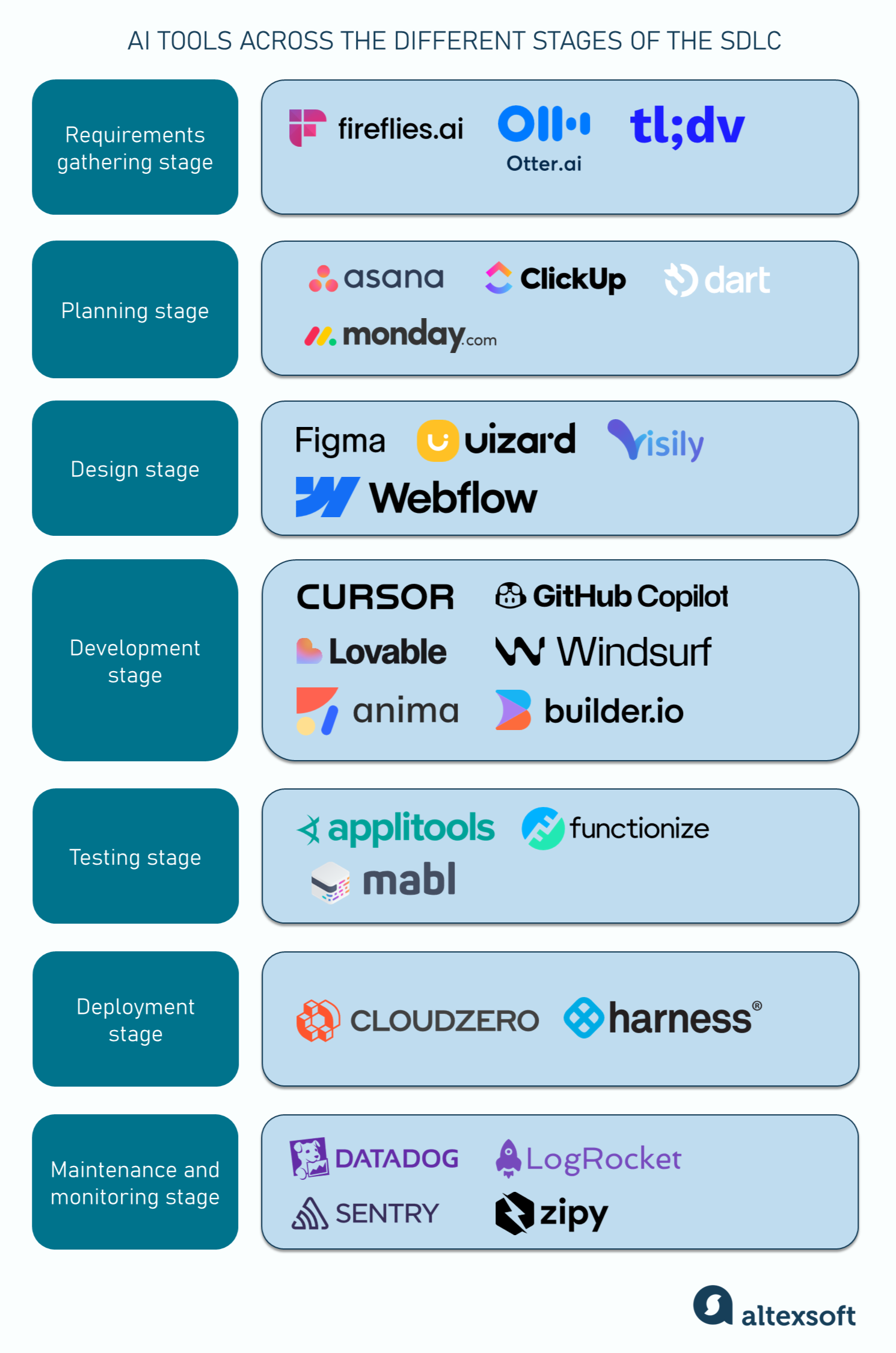

AI tools across the different stages of the SDLC

Requirements gathering stage

The requirements gathering phase guides the rest of the development process. It’s when various stakeholders come together to define what the product should do and who it’s for. Product managers and business analysts typically lead the efforts at this stage. They conduct discovery sessions, stakeholder interviews, and user research to understand business goals, end-user needs, and any technical or regulatory constraints that must be considered.

Capturing and analyzing conversations

Discovery sessions and interviews generate large amounts of unstructured data, such as emails, meeting transcripts, and survey responses. This data then has to be turned into a product requirements document (PRD) that captures functional and nonfunctional requirements, a business requirements document (BRD), and other technical documentation. AI can streamline this work.

Sometimes we receive requests for proposals (RFPs) as lengthy PDF documents. We use AI tools to extract and summarize the key requirements directly from those files, which significantly speeds up requirements gathering.

AI-powered transcription tools like Otter.ai, tl;dv, and Fireflies.ai can capture and transcribe meetings in real time. They use natural language processing (NLP), a branch of AI, to identify recurring themes, generate summaries, and flag key action points. Business analysts rely on these features to define detailed requirements and ensure alignment between teams.

Video conferencing and workspace platforms also offer built-in transcription: Zoom provides similar AI capabilities, and Microsoft Teams supports some of them. However, dedicated SaaS note-taking tools like the ones above offer more advanced capabilities, such as assigning specific meeting moments to team members and AI topic trackers.

To learn how AI can help with tasks ranging from streamlined task tracking to publishing release notes for hundreds of tickets in seconds, read our article on AI for project management, which also features insights from Eugene Ovdiyuk, a project manager at AltexSoft.

Structuring requirements

Once the raw data is collected, teams must convert it into structured requirements. AI helps in these instances, too.

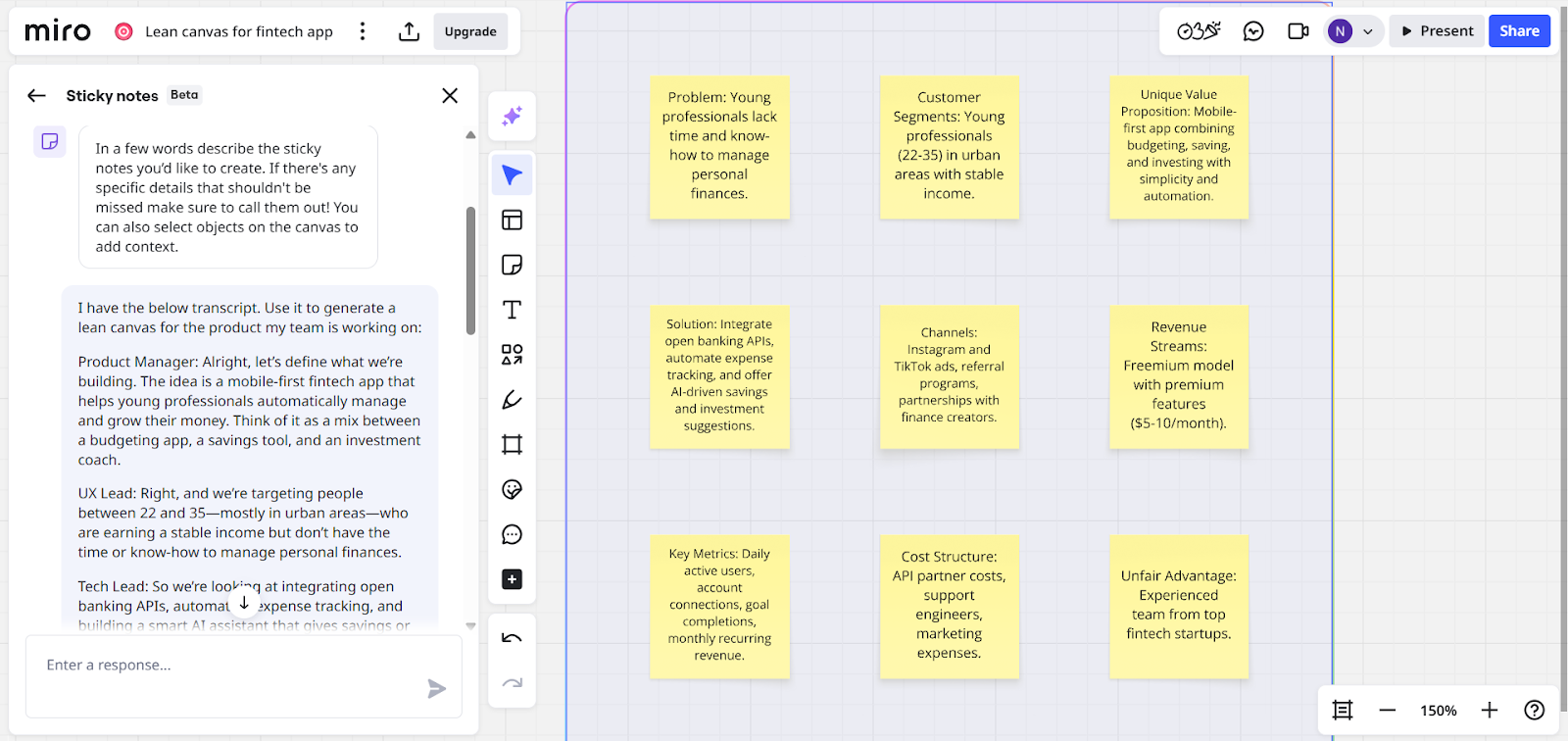

Lean canvases and business model canvases: Tools like Miro, Canvanizer, Jeda.ai, and Boardmix use AI to generate full canvases based on user prompts. These canvases help teams map out problems, customer segments, key activities, value propositions, and other elements.

Instead of manually filling out each section, teams can prompt the AI with meeting transcripts or summaries. The tool then analyzes the content and fills in each section of a lean or business model canvas. This helps frame ideas quickly without starting from scratch.

User stories and acceptance criteria: User stories describe what end users need or want to do with a product, while acceptance criteria define the conditions that must be met for a user story to be considered complete.

Tools like ClickUp, Jira, and StoriesOnBoard include AI features for generating user stories and acceptance criteria from bullet points, chat logs, summaries, or custom prompts, helping teams reduce manual work.
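When AI drafts stories, it helps to hold the output to a fixed shape so reviewers can spot gaps quickly. The sketch below uses a hypothetical structure and is not the data model of ClickUp, Jira, or StoriesOnBoard. It renders a story in the common "As a <role>, I want <goal>, so that <benefit>" template and attaches Given/When/Then acceptance criteria. It also flags stories that have no criteria, because such stories cannot be considered complete.

```python
from dataclasses import dataclass, field


@dataclass
class AcceptanceCriterion:
    """One Given/When/Then condition a story must satisfy."""
    given: str
    when: str
    then: str

    def render(self) -> str:
        return f"Given {self.given}, when {self.when}, then {self.then}."


@dataclass
class UserStory:
    """Hypothetical container for an AI-drafted user story."""
    role: str
    goal: str
    benefit: str
    criteria: list[AcceptanceCriterion] = field(default_factory=list)

    def render(self) -> str:
        return f"As a {self.role}, I want {self.goal}, so that {self.benefit}."

    def is_reviewable(self) -> bool:
        # A story without acceptance criteria has no definition of "done",
        # so it should go back to the analyst (or the AI) before planning.
        return bool(self.criteria)


story = UserStory(
    role="returning customer",
    goal="to reorder a previous purchase",
    benefit="I can check out faster",
    criteria=[
        AcceptanceCriterion(
            given="I am signed in and have past orders",
            when="I click Reorder on an order",
            then="its items are added to my cart",
        )
    ],
)

print(story.render())
for criterion in story.criteria:
    print(" -", criterion.render())
```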

Planning stage

This is where the project team takes the requirements gathered earlier and turns them into a clear product roadmap. The stage defines the project scope and goals, assesses its feasibility, creates and assigns tasks, sets timeframes, and more. It ensures everyone understands what needs to be done, when, and by whom.

Product managers, project managers, and business analysts usually lead this stage, working closely with technical teams and stakeholders to align expectations and prepare for execution. AI-driven project management tools are now changing how teams approach planning.

AI-driven product planning tools

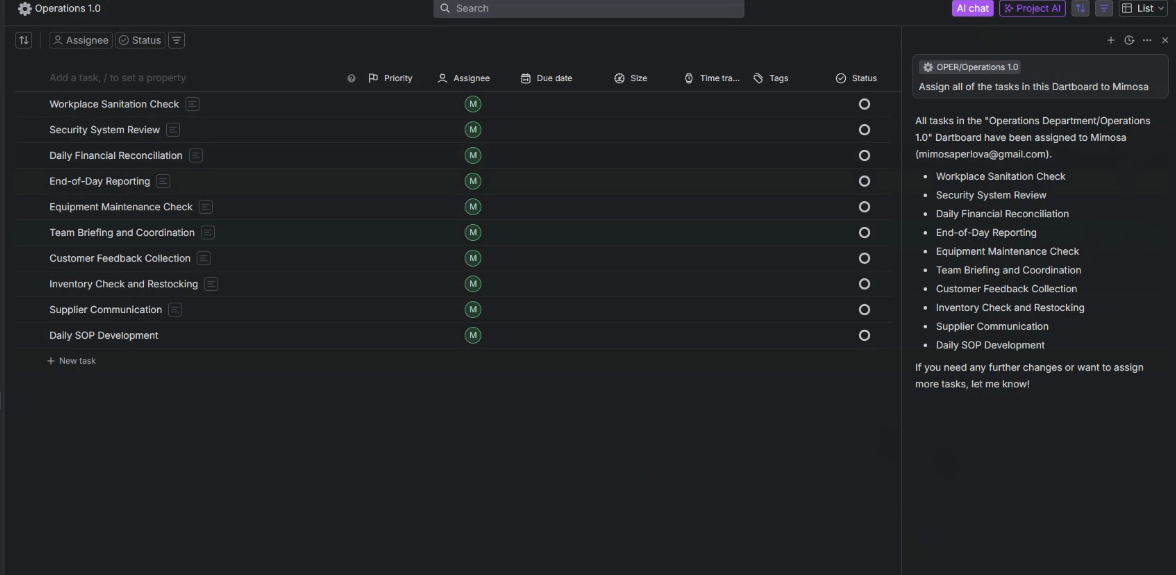

Asana, Dart, ClickUp, and Monday are among the tools that stand out in this space. Their AI systems streamline the full planning cycle, from assigning tasks and deadlines to tracking progress.

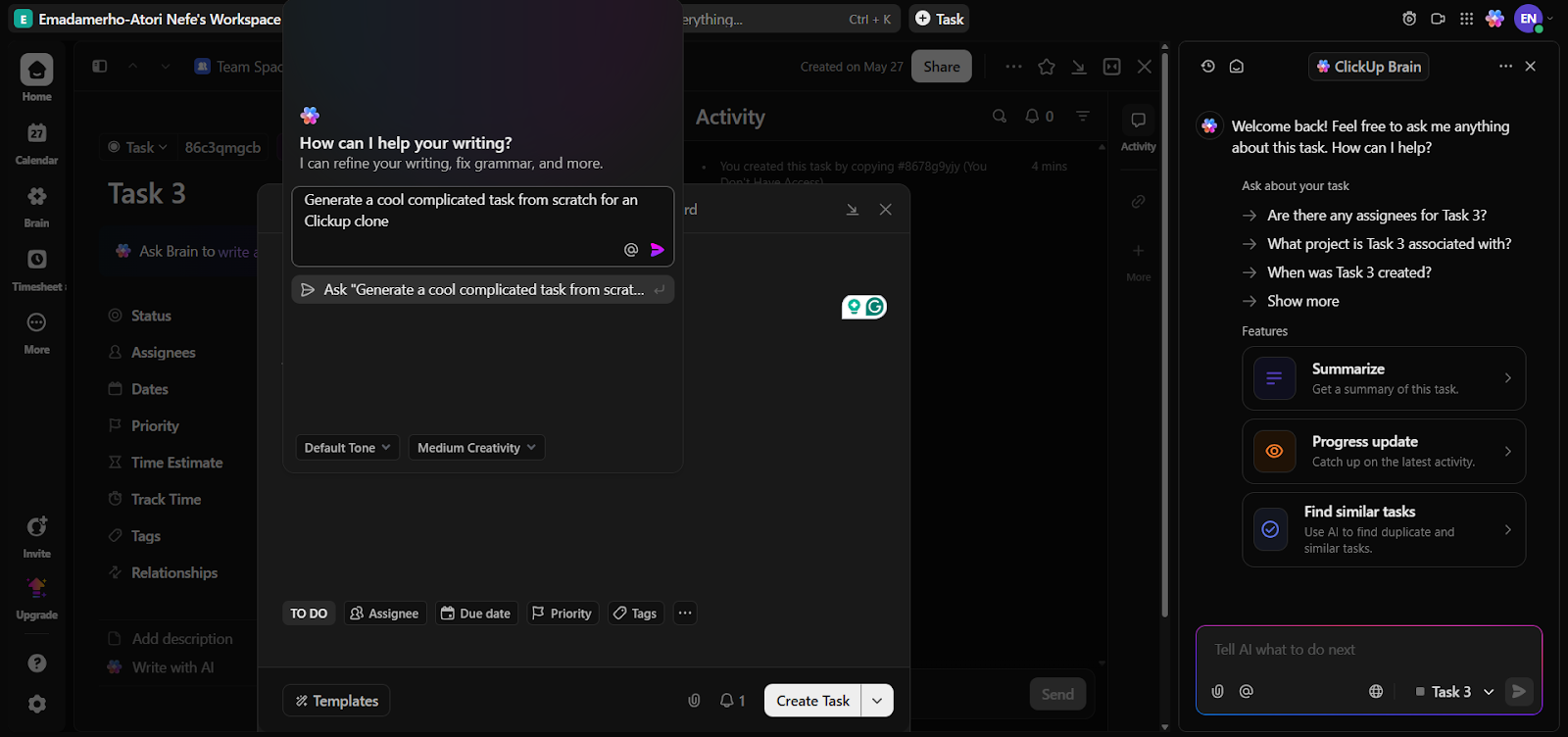

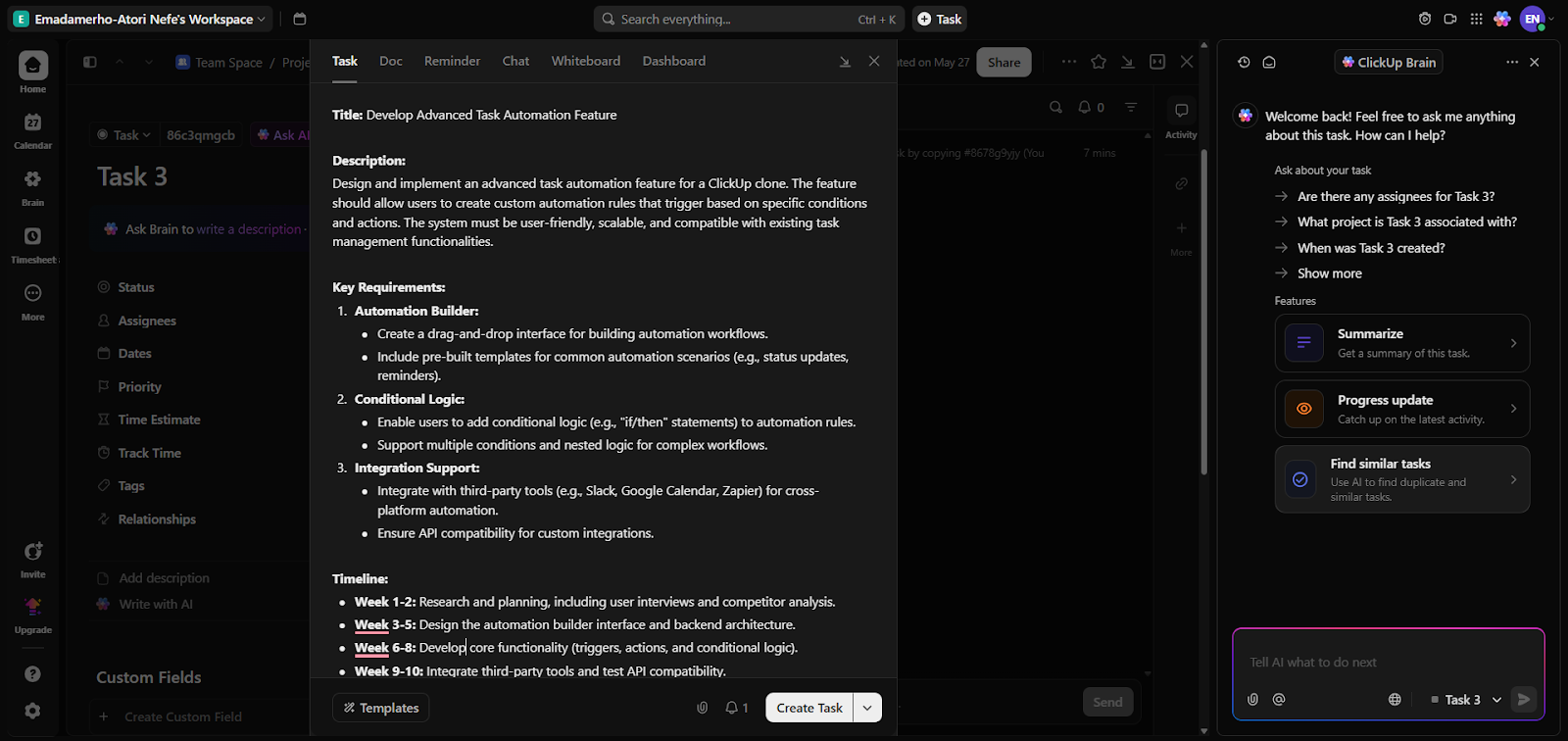

With ClickUp, users can prompt the AI to create a task from scratch. The system then generates everything needed for the task, including its description, requirements, timeline, priority, and expected deliverables.

ClickUp also supports subtask generation, where the AI breaks down main tasks into more manageable pieces. This is useful for organizing work more effectively and ensuring that complex tasks are clearly structured.

Dart also provides subtask generation. However, it takes things a step further by allowing users to automate task assignments with its AI system. Users can highlight specific team members and instruct the AI to assign tasks directly to them. This gives project leads control over task distribution while reducing manual work.

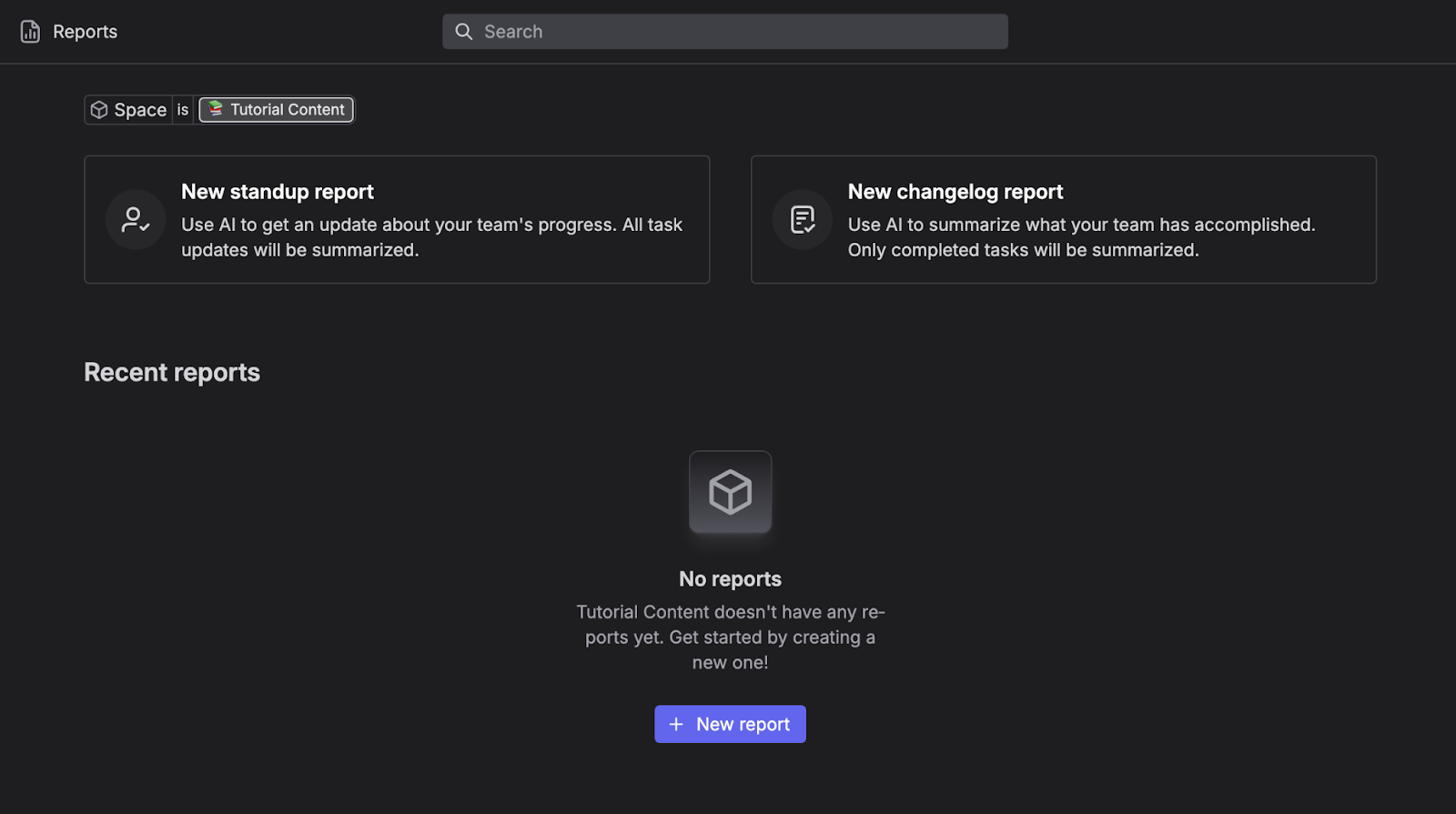

Both platforms also offer AI-powered report generation, which helps with continuous planning and task tracking. For example, Dart’s AI system can create two types of progress reports: changelogs and standups.

Changelog reports summarize only completed tasks across different projects, while standup reports compile relevant project changes, blockers, and updates. These reports make it easier for project leads to understand their team members’ progress without manually digging through each task or ticket.
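The sketch below makes the difference between the two report types concrete using a hypothetical task list. The `Task` fields and status values are assumptions made for illustration. This is not Dart's data model or its AI; it shows only the selection logic each report implies. A changelog draws on completed tasks alone, while a standup pulls in today's changes and any open blockers.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical task record; real tools expose richer fields."""
    project: str
    title: str
    status: str  # "done", "in_progress", or "todo"
    updated_today: bool = False
    blockers: list[str] = field(default_factory=list)


def changelog(tasks: list[Task]) -> list[str]:
    # Changelog: completed work only, tagged with its project.
    return [f"[{t.project}] {t.title}" for t in tasks if t.status == "done"]


def standup(tasks: list[Task]) -> dict[str, list[str]]:
    # Standup: anything that changed today, plus every open blocker.
    return {
        "updates": [f"{t.title} ({t.status})" for t in tasks if t.updated_today],
        "blockers": [f"{t.title}: {b}" for t in tasks for b in t.blockers],
    }


tasks = [
    Task("web", "Login page", "done", updated_today=True),
    Task("api", "Rate limiting", "in_progress", updated_today=True,
         blockers=["waiting on Redis access"]),
    Task("web", "Dark mode", "todo"),
]

print(changelog(tasks))
print(standup(tasks))
```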

In ClickUp, users can ask the AI for insights and updates on team members and daily tasks, as well as details on project timelines, leads, and more.

AI-influence estimates

Many teams now factor AI into their planning estimates to anticipate where it might boost productivity or reduce the time needed to complete a task.

Glib Zhebrakov, Head of Center of Engineering Excellence at AltexSoft, gives an example: "We extended an existing estimate template with a dropdown where we could select different levels of AI impact when implementing a certain feature. As a result, we shortened timelines."
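Glib doesn't describe the exact levels or percentages in the template, so the sketch below uses hypothetical ones to show the mechanics. Each feature keeps its baseline estimate, and the AI-impact level selected from the dropdown scales that estimate down. The reduction factors are invented placeholders and should be calibrated against a team's own historical data.

```python
from enum import Enum


class AIImpact(Enum):
    # Hypothetical dropdown values and time-reduction factors.
    NONE = 0.0
    LOW = 0.1
    MEDIUM = 0.25
    HIGH = 0.4


def adjusted_hours(baseline_hours: float, impact: AIImpact) -> float:
    """Scale a baseline estimate by the expected AI time savings."""
    return round(baseline_hours * (1 - impact.value), 1)


estimate = [
    # (feature, baseline hours, selected AI impact)
    ("CRUD endpoints", 40, AIImpact.HIGH),
    ("Payment integration", 60, AIImpact.LOW),
    ("Custom pricing engine", 80, AIImpact.NONE),
]

total = 0.0
for feature, hours, impact in estimate:
    adjusted = adjusted_hours(hours, impact)
    total += adjusted
    print(f"{feature:<22} {hours:>5} h -> {adjusted:>5} h ({impact.name})")
print(f"Total: {total} h")
```

Keeping the baseline separate from the AI adjustment lets a team compare both numbers against actuals later. This supports the gradual improvement in estimation described below.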

It’s also important to note that the impact of AI on planning and delivery timelines isn’t always immediately clear. For teams just starting to explore AI tools, it can be hard to predict exactly how much of a difference they will make in speeding up development or improving processes.

In many cases, the benefits become more obvious over time as teams gradually and consistently introduce AI into their workflows. As this adoption matures, their ability to estimate AI's effect on specific tasks improves, too.

Design stage

This stage defines the solution architecture, data models, system components, and UI/UX design, that is, how users will interact with the product. AI tools are especially helpful in speeding up user interface design.

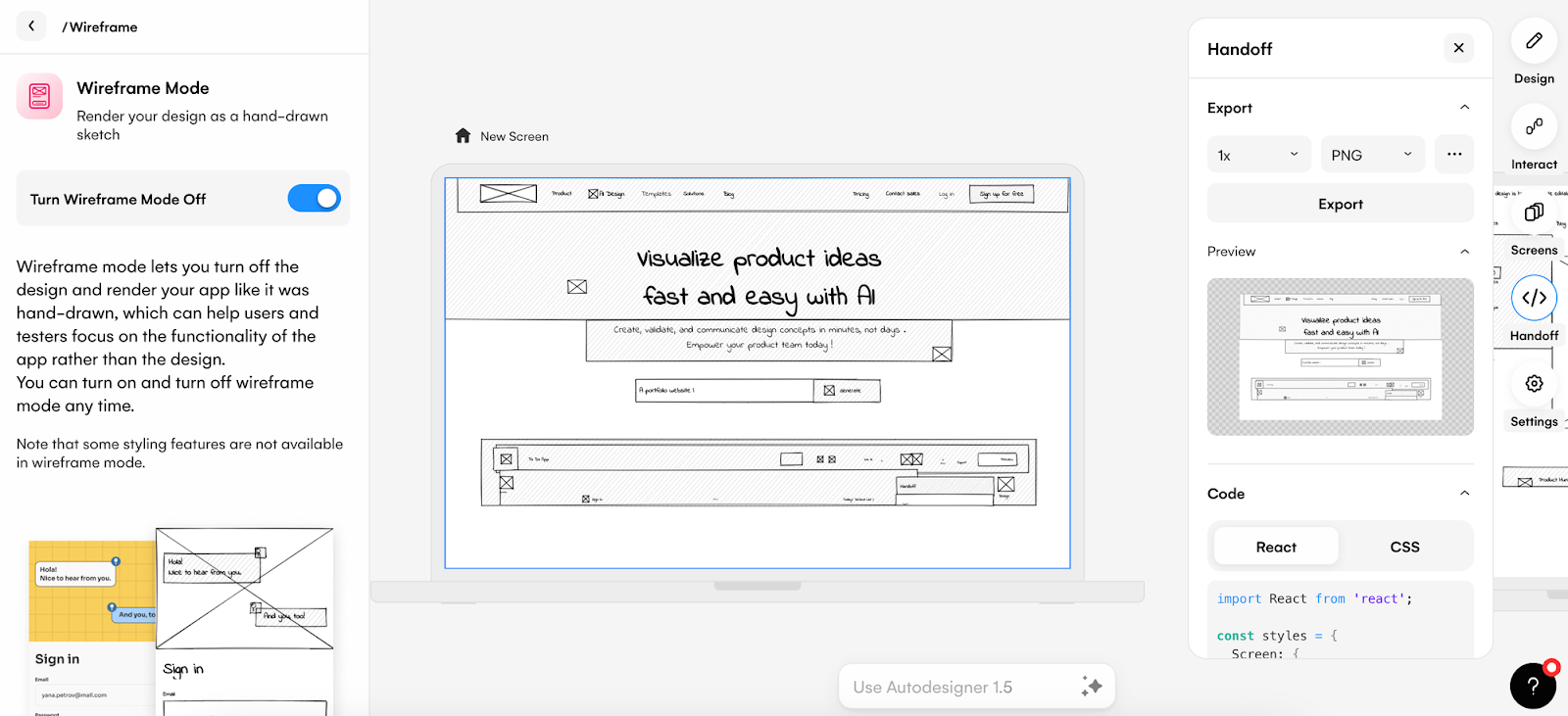

AI-assisted wireframing

Tools like Uizard convert rough, paper-based sketches of interfaces into digital, editable wireframes. This means designers can quickly go from an idea on paper to an interactive mockup.

Instead of relying on sketches, Visily’s AI tool lets users describe screens or components in plain text and then automatically generates a wireframe from that input.

UI design

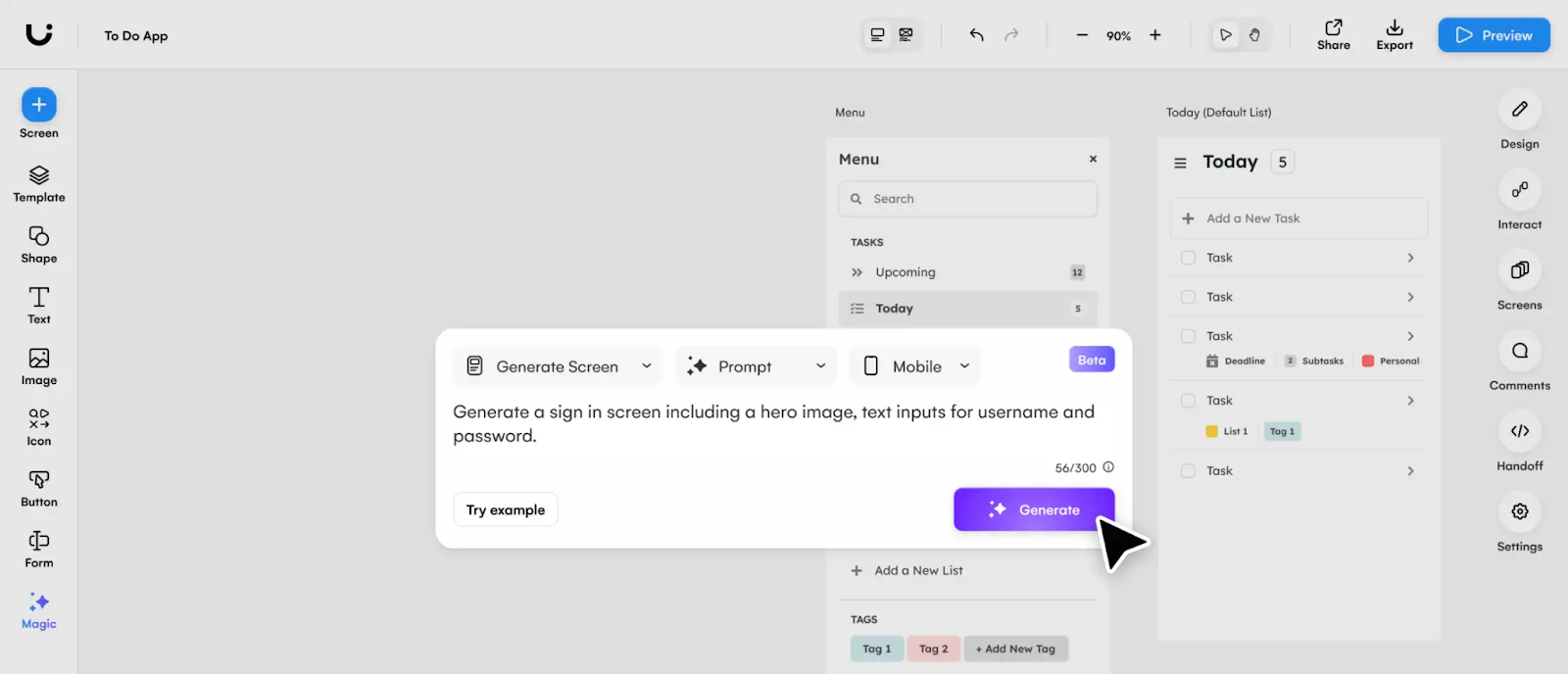

Wireframes are great, but you can’t go further without a full-fledged design. This is where UI generators are useful. The previously mentioned Uizard and Visily, along with Webflow and Figma, to name a few, let you move from a blank canvas to a design in a flash.

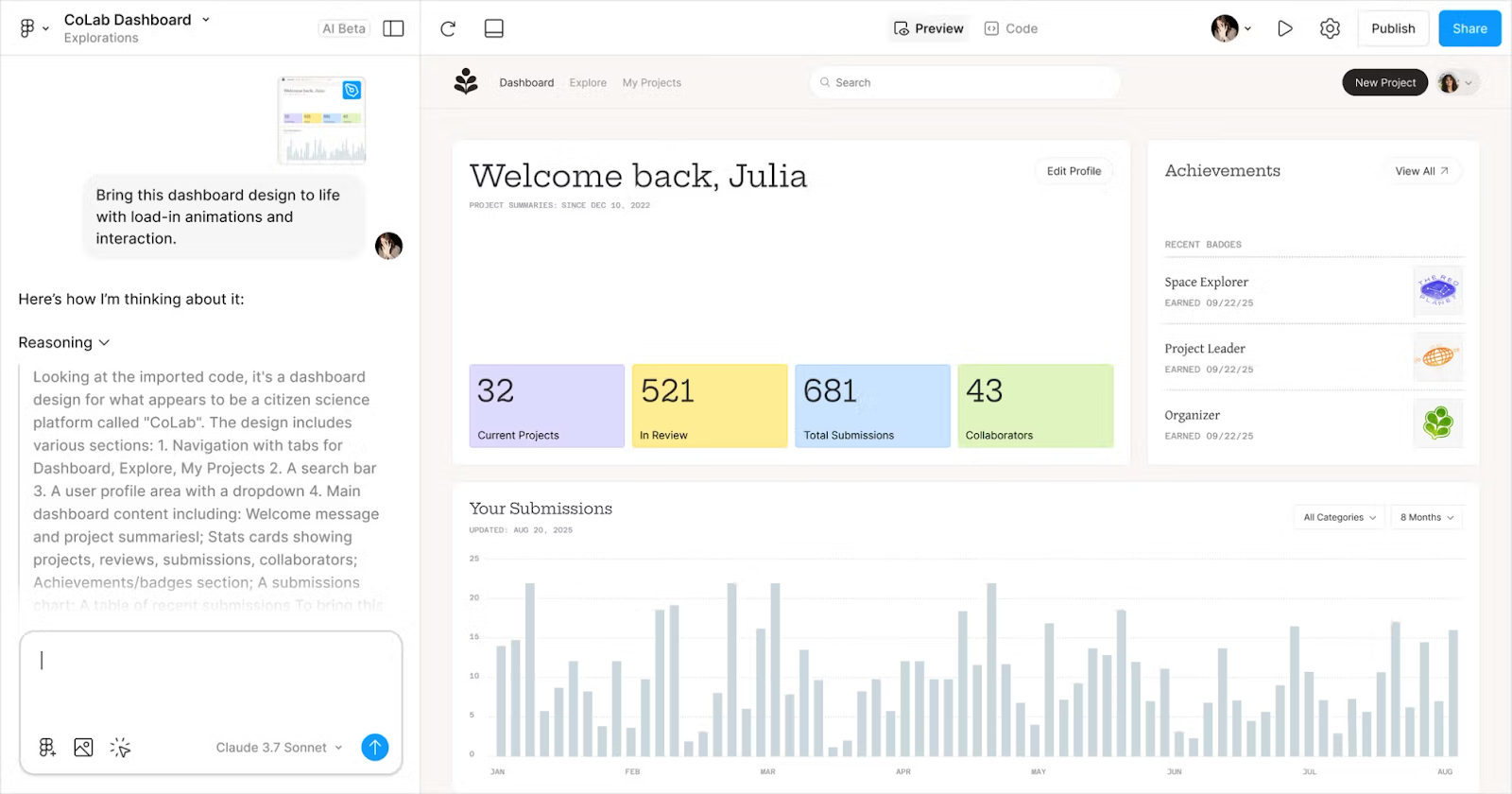

These tools have a chat interface where users can describe what they want, and the AI generates a visual representation accordingly. Human designers can then fine-tune it, tweak colors, adjust spacing, or apply brand styles. The software also supports producing designs from images. For example, Uizard turns screenshots into mockups in seconds.

AI system being prompted to generate a UI design. Source: Uizard

These platforms also include AI-powered theme generators, allowing users to simply describe the look and feel they want. They also let you “steal” themes from pictures, generate images, remove backgrounds, and make other edits.

Development stage

The development or implementation phase is where the software's design and requirements are translated into functional code. AI tools have become indispensable in this stage, offering capabilities that can accelerate coding and improve workflows if properly used.

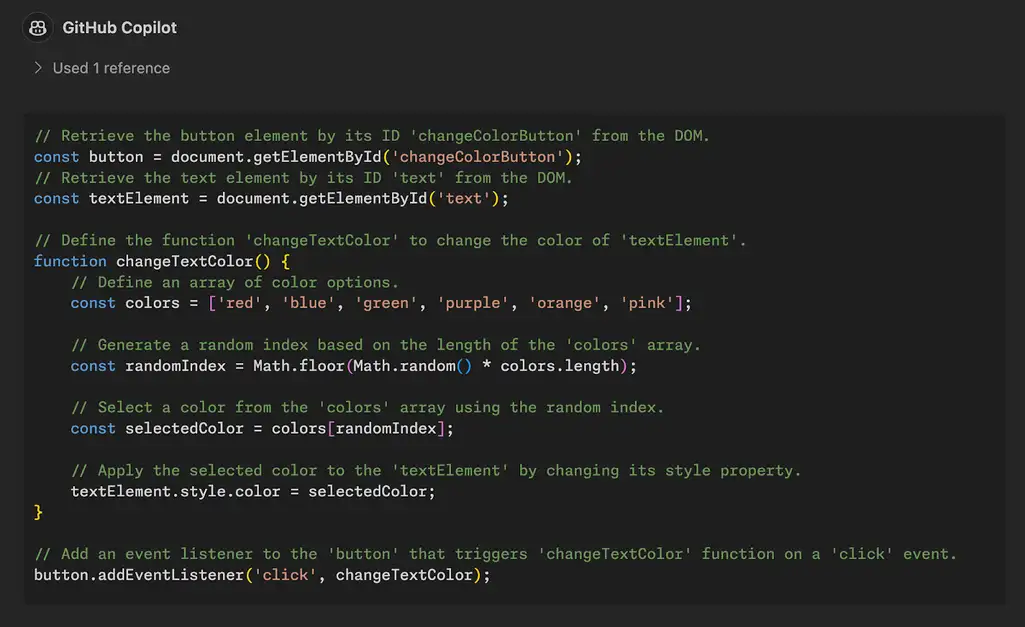

Code generation and autocompletion

Developers are increasingly integrating AI coding tools like GitHub Copilot, Windsurf, Lovable, and Cursor into their processes. These tools can suggest code snippets or generate entire websites and applications from natural language prompts. They’re available as IDE plugins, web-based chat platforms, or full-fledged code editors.

Most AI coding platforms are powered by popular large language models (LLMs)—GPT, Claude, and Gemini—which work under the hood to generate code. These models are trained on massive datasets of open-source codebases, documentation, and developer forums. This allows them to recognize common design patterns, best practices, and even the nuances of specific frameworks or libraries.

We explore various AI coding tools in detail in our dedicated article. Read it to learn more about their capabilities and how they compare.

That said, developers still have to guide the tools properly to get the best results. It’s also important to break tasks into smaller chunks and to review the AI’s output to ensure correctness and spot potentially hallucinated code. As Glib Zhebrakov pointed out, “When it comes to getting the best out of AI coding, context is king. When an LLM generates something for you, it first analyzes the context. So if you want to get a better result, you need to do some work to prepare that context.”

Many developers find that the more files a task spans, the less accurate the generated code becomes. Eke Kalu, Software Engineer at AltexSoft, shared his experience when working on large projects: “There are still challenges with context limitations. The model might only look at a single file, but in real-world projects, important information is often spread across many files that need to work together. So the model starts suggesting things that don’t make sense because it doesn’t see the full picture.” He added: “However, feeding too many files into the prompt reduces the accuracy of the model’s output. I’ve found that breaking the task into smaller chunks and limiting the number of files helps the model stay on track.”
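One way to apply Eke's advice is to rank candidate files by relevance and pass only the top few to the model. The sketch below uses naive keyword overlap as a stand-in for relevance. The scoring rule, the `max_files` limit, and the sample file contents are hypothetical, and AI IDEs use their own retrieval methods.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase identifier-like words, e.g. 'InvoiceService' -> 'invoiceservice'."""
    return {w.lower() for w in re.findall(r"[A-Za-z_]\w+", text)}


def select_context(task: str, files: dict[str, str], max_files: int = 3) -> list[str]:
    """Return up to max_files paths whose contents share the most words with the task."""
    task_words = tokens(task)
    scored = [(len(task_words & tokens(source)), path) for path, source in files.items()]
    # Highest overlap first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    # Drop files with no overlap at all rather than padding the prompt.
    return [path for score, path in scored[:max_files] if score > 0]


repo = {
    "billing/invoice.py": "class Invoice: def total(self): ...",
    "billing/tax.py": "def tax_rate(region): ...",
    "auth/login.py": "def login(user, password): ...",
    "ui/theme.py": "PRIMARY_COLOR = '#0044cc'",
}

print(select_context("Fix rounding in Invoice total when tax_rate changes", repo, max_files=2))
```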

Another factor that affects the output is the type of coding LLM you use, as different models give varying results. Gemini 2.5 Pro and Claude 4 currently lead the pack for coding-related tasks. That said, the AI model market is rapidly evolving, so it's important to stay updated on new developments. Most AI coding platforms let you switch between models, making it easier to compare their outputs and find the one that works best for your use case.

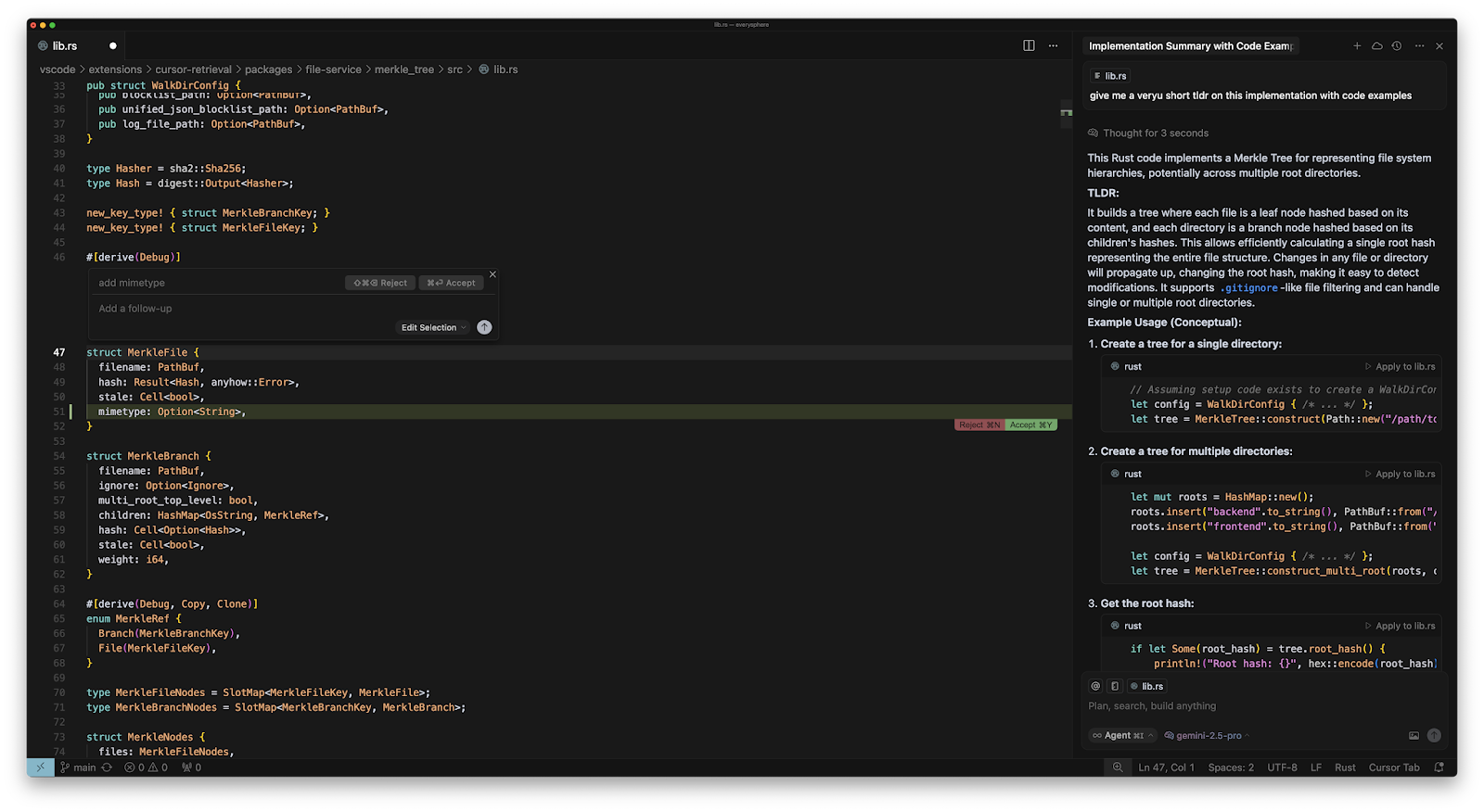

AltexSoft’s engineers tested various tools before choosing Cursor as our approved AI-powered IDE. Cursor outperformed alternatives like GitHub Copilot and Windsurf in both feature set and pace of improvement.

“Compared to Copilot, Cursor is more powerful, has a clearer product roadmap, and is advancing rapidly,” said Glib Zhebrakov. “Copilot is part of Microsoft and moving slower, while Cursor is a standalone business with strong incentives to innovate quickly. That momentum made a difference in our decision.”

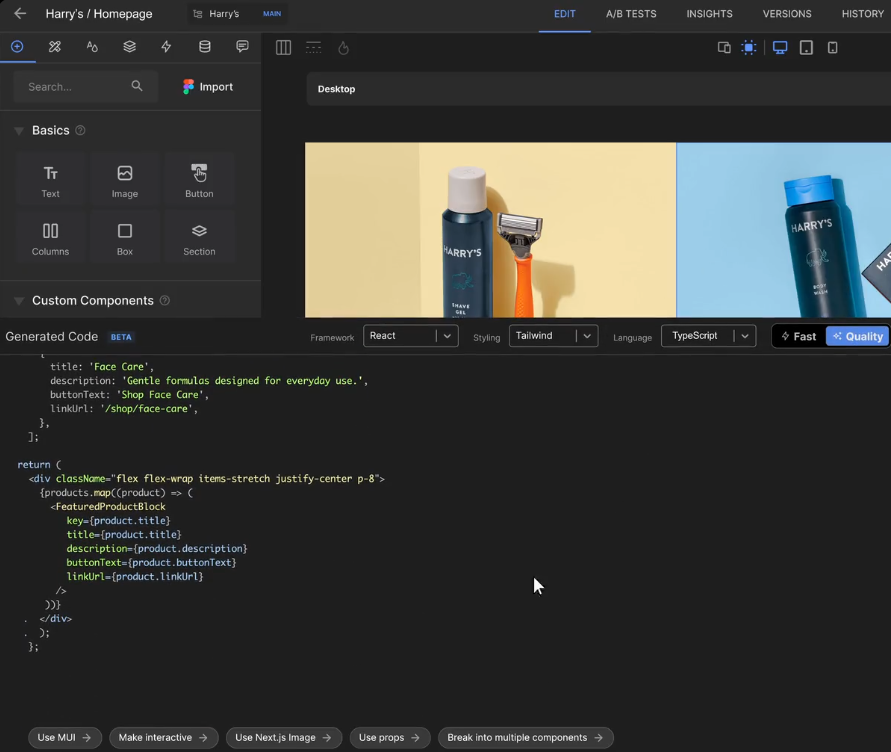

From design to frontend code

AI tools are also making it easier to go from design to frontend code with minimal effort. Platforms like Builder.io and Anima enable you to convert designs made with Figma and other tools into live apps. All you need to do is drop the link to your template or import the file—and the system will translate it into code, using your preferred JavaScript framework and styling library.

After the initial code is generated, you can use prompts to modify the output—for example, asking for layout changes or adding animations.

Thanks to AI tools, designers can now turn their ideas into working prototypes without writing code. Solutions like Figma Make are great for designers who want minimal interaction with code.

Users only need to share the designs with AI via a chat interface, guide it through conversations, and request edits. Once they’re satisfied, they can preview and publish the work as a live web app with its own URL.

As AI continues to blur the lines between design and frontend development, many in the industry are questioning what this means for the future of engineering roles. Ihor Pavlenko, AltexSoft’s Head of Operational Excellence, shared his thoughts on the matter: “Our designers no longer need to wait for developers to implement their work—they can take the design and build the frontend themselves. I believe that in the next few years, the role of the frontend engineer will fade. We’ll see more full-stack jobs emerge while designers increasingly take on frontend responsibilities.”

Still, Ihor doesn’t see this as the end of technical learning: “It still makes sense to learn JavaScript because it's needed for building applications. However, what really matters is having a solid grasp of the core web technologies and focusing on building new skills. That’s what makes someone effective, especially when working with AI.”

Debugging and code reviews

AI coding tools can also speed up debugging and code review, both of which are key for quality assurance. While many modern IDEs already provide built-in debugging features, AI takes it a step further by offering intelligent, real-time code analysis that understands context and can suggest targeted fixes.

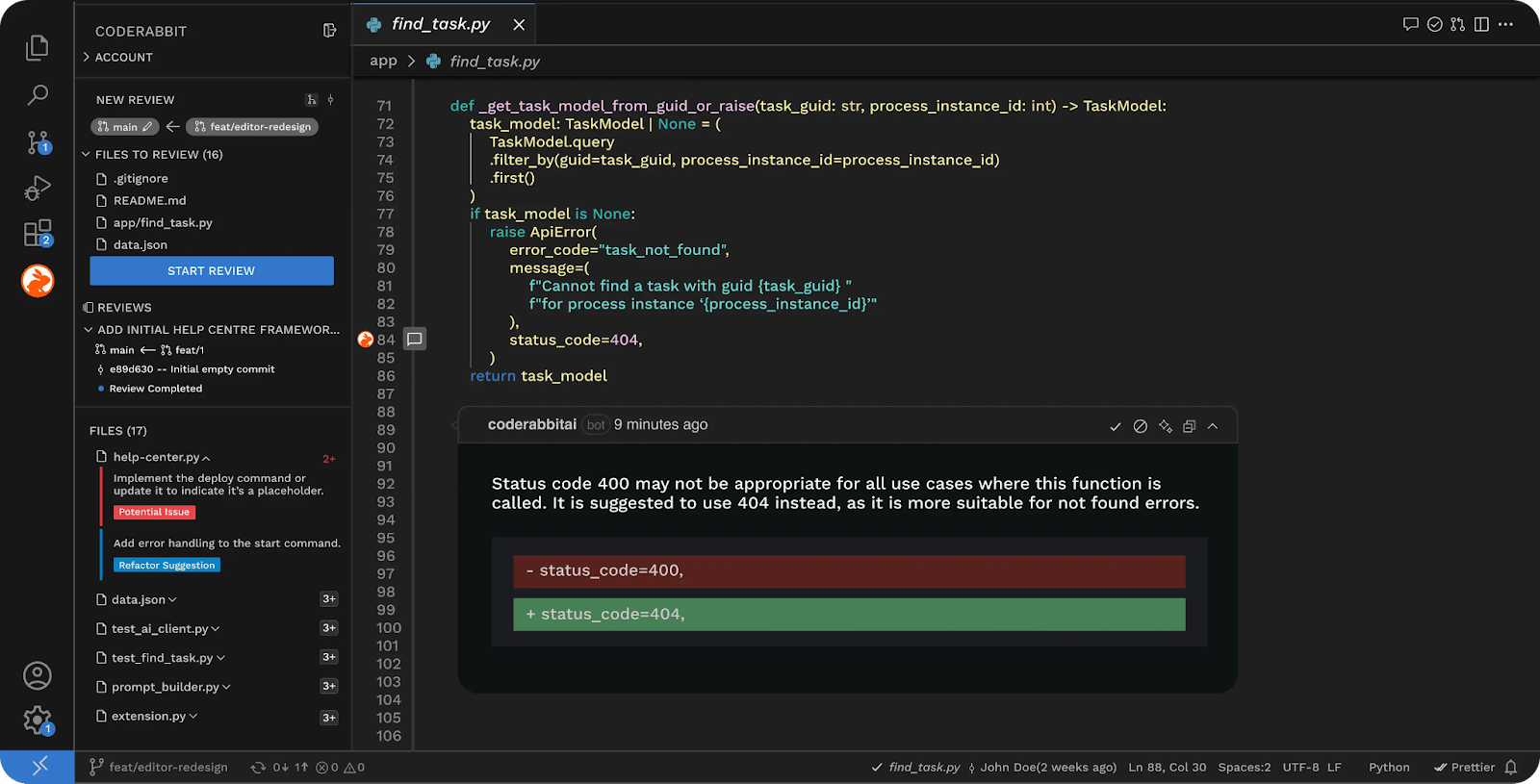

For instance, GitHub Copilot’s recent coding agent analyzes your codebase, spots issues, and suggests or implements fixes based on context. There’s also the CodeRabbit IDE extension, which performs line-by-line code reviews, either directly in the IDE or in pull requests. It flags issues like hallucinated code, missing unit tests, and logic errors before they reach production. When it spots a problem, it suggests fixes you can approve with a single click. You can also chat with the agent to request specific corrections or improvements. No matter your workflow, you stay in control of the final output and your codebase, all while reducing technical debt.

SonarQube, with its AI CodeFix feature, also supports debugging and quality assurance. It applies static code analysis to catch bugs and security vulnerabilities across multiple languages. The platform also offers an AI Code Assurance capability that performs deeper analysis on AI-generated code to check that it complies with industry best practices and standards.

Code documentation generation

Many developers don’t enjoy writing code documentation. Some even downplay its importance. That is, until weeks later, when they forget why certain decisions were made, which parts of the code are fragile, what was a temporary fix, and which areas carry technical debt.

You can prompt AI tools to create inline comments, docstrings, or full API references for a piece of code. An example is DocuWriter’s AI documentation generator, which produces clear, structured documentation based on the code’s logic and structure.
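As an illustration, here is the kind of output such tools aim for: a Google-style docstring that documents arguments, return value, and errors, and records the reasoning behind a non-obvious design choice. The function and its docstring were written by hand as a hypothetical example. They were not produced by DocuWriter.

```python
def split_amount(total_cents: int, parts: int) -> list[int]:
    """Split an amount into near-equal integer shares.

    Any remainder is distributed one cent at a time to the first shares,
    so the result always sums back to ``total_cents``.

    Args:
        total_cents: Amount to split, in cents. Must be non-negative.
        parts: Number of shares. Must be at least 1.

    Returns:
        A list of ``parts`` integers whose sum equals ``total_cents``.

    Raises:
        ValueError: If ``parts`` is less than 1 or ``total_cents`` is negative.
    """
    if parts < 1 or total_cents < 0:
        raise ValueError("parts must be >= 1 and total_cents must be >= 0")
    base, remainder = divmod(total_cents, parts)
    # The first `remainder` shares get one extra cent each.
    return [base + 1 if i < remainder else base for i in range(parts)]


print(split_amount(1000, 3))
```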

Many of these tools also integrate directly into IDEs, allowing you to highlight a function or code block and instantly generate or update documentation. This makes it easier to keep documentation up to date as code changes without breaking the development flow.

Testing stage

In the testing phase, the software is checked to ensure it works correctly and meets the required standards. This phase involves running tests to find bugs, verify that features work as expected, and ensure the software performs well under different conditions.

In most teams, testing is associated with the work of quality assurance (QA) engineers. They take a more comprehensive approach by simulating real-world usage, checking for edge cases, and verifying system behavior end-to-end.

However, developers also write and run integration and unit tests to confirm that their code works as intended.

For this article, we’re grouping both developer-led and QA-led testing activities under the testing phase and looking at how AI tools support each of them.

Developer-led testing activities

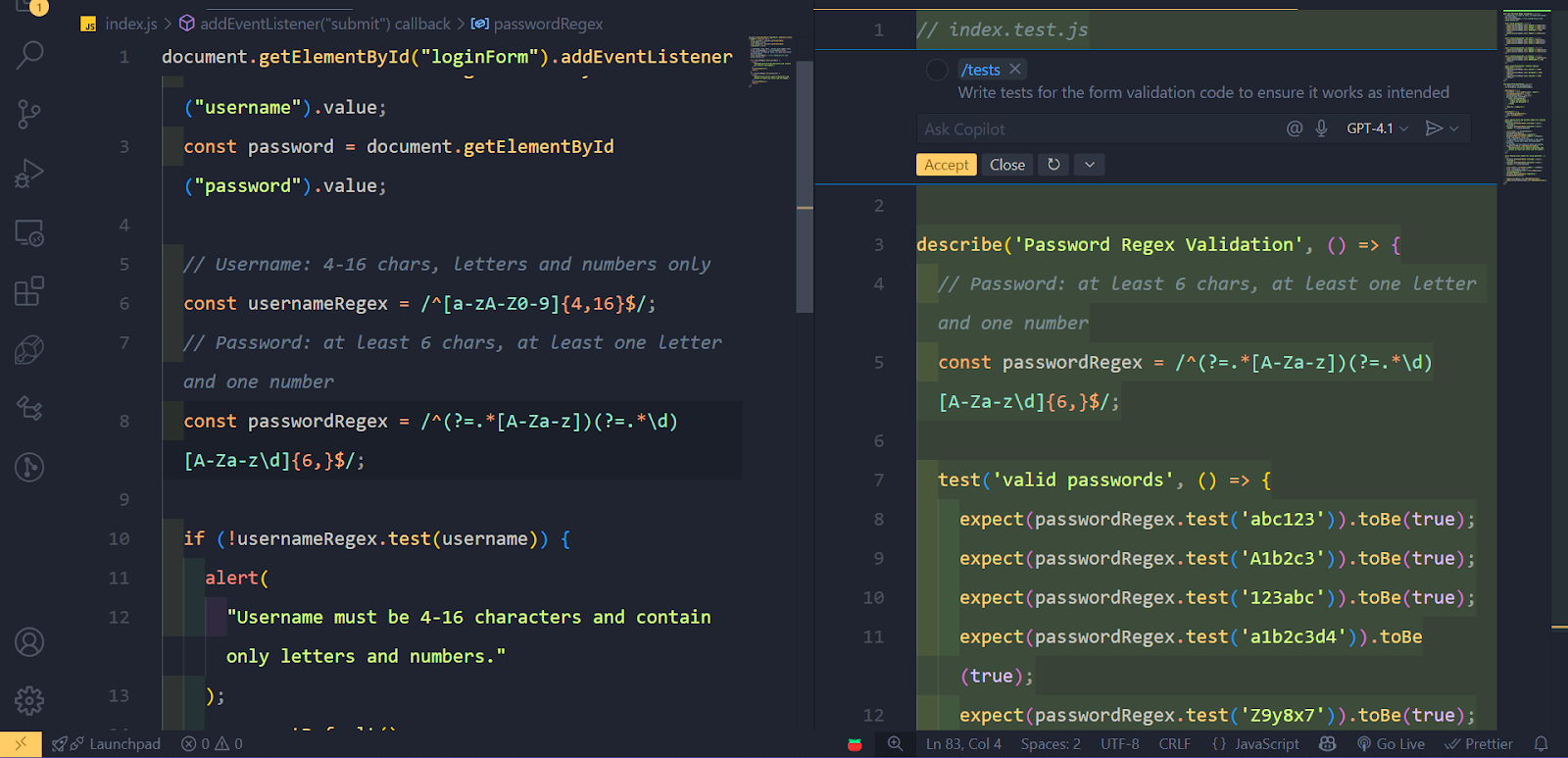

Writing tests for various aspects of a codebase is important but can quickly become repetitive. AI coding tools can speed things up, including GitHub Copilot and Cursor, which we discussed earlier, as well as Amazon Q Developer.

These tools help developers generate unit tests, suggest test cases, and even simulate test scenarios while coding. For instance, a developer can highlight a function and ask the tool to create relevant unit tests with frameworks like Jest or Mocha, or end-to-end tests with Playwright.

However, developers should be aware of potential issues when using AI for testing. As Eke Kalu pointed out from his own experience, "AI tools can be tricky. You might ask them to write a test, and they do. However, if the test keeps failing, instead of fixing the code, the tool changes the test to match the flawed code. This is a behavior you have to watch out for."
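The small illustration below shows the trap Eke describes, using a hypothetical `apply_discount` function. The test encodes the requirement, so when the test fails, the fix belongs in the code. The commented-out assertion shows the kind of AI edit to reject in review: it would make the test pass by building the bug into it.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount, rounded to cents."""
    # A buggy version might compute price - percent (treating 10% as $10).
    # The correct formula scales the price:
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_off(self):
        # Requirement: 10% off $50.00 is $45.00.
        self.assertEqual(apply_discount(50.00, 10), 45.00)
        # If this fails, fix apply_discount. Do NOT accept an AI edit like:
        #     self.assertEqual(apply_discount(50.00, 10), 40.00)
        # which would simply bake the bug into the test.

    def test_zero_percent_is_identity(self):
        self.assertEqual(apply_discount(19.99, 0), 19.99)


if __name__ == "__main__":
    # exit=False keeps the interpreter running after the tests finish.
    unittest.main(argv=["apply_discount_tests"], exit=False)
```

When reviewing an AI-proposed change, check whether the diff touches the assertions or the implementation. A diff that only loosens assertions is a red flag.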

QA-led testing activities

There are dedicated testing platforms with a range of AI-powered functionalities that help QA experts perform various tasks.

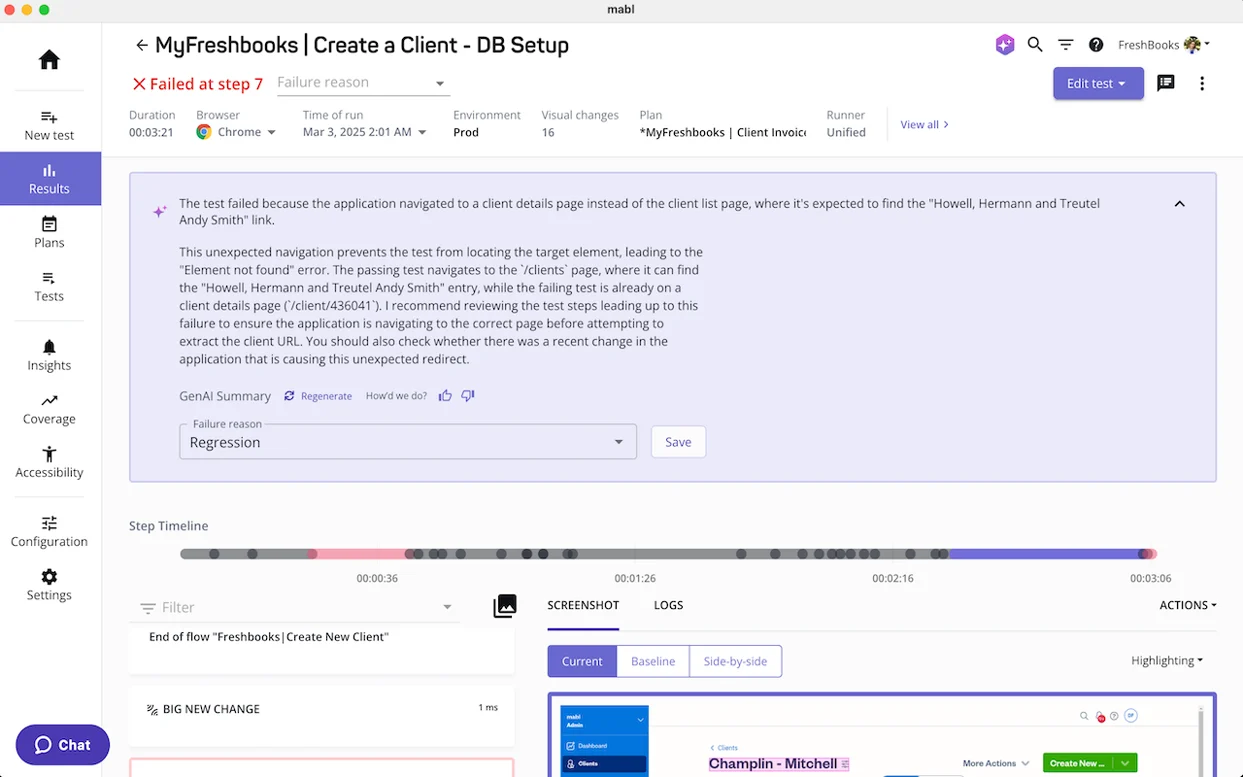

Automated test creation. Tools like Mabl and Functionize allow teams to generate tests from user stories and natural language descriptions.

Root cause analysis. When a test fails, it’s not always clear if the problem is due to a recent code change or an environment issue. AI-powered tools analyze test failures and code behavior to identify likely causes. For example, Functionize provides detailed AI-driven failure diagnostics. Mabl also generates summaries of test failures, enabling faster troubleshooting and smoother collaboration between departments.

Visual and accessibility testing. Platforms like Applitools use Visual AI to detect visual bugs like layout shifts, color contrast issues, or accessibility problems. It catches visual bugs that traditional tests and the human eye might miss.

Test execution and maintenance. Maintaining tests often takes more effort than writing them. Functionize, Rainforest QA, and similar tools address this problem by offering “self-healing” tests that automatically adjust to changes in the application’s interface.
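The internals of commercial self-healing features aren't described here. One plausible approach is to record several attributes of each element when a test is authored, then fall back to them when the primary locator breaks. The sketch below models a page as a list of dictionaries. The attributes, the overlap score, and the threshold of two matching attributes are all hypothetical, and none of it should be read as the implementation used by Functionize or Rainforest QA.

```python
from typing import Optional

Element = dict[str, str]


def find_element(page: list[Element], fingerprint: Element) -> tuple[Optional[Element], bool]:
    """Locate an element by id, falling back to its other recorded attributes.

    Returns (element, healed), where healed=True means the primary locator
    failed and a fallback match was used (worth flagging for human review).
    """
    # 1. Primary locator: exact id match.
    for el in page:
        if el.get("id") == fingerprint.get("id"):
            return el, False

    # 2. Fallback: count how many non-id attributes still match.
    def overlap(el: Element) -> int:
        return sum(1 for k, v in fingerprint.items() if k != "id" and el.get(k) == v)

    best = max(page, key=overlap, default=None)
    if best is not None and overlap(best) >= 2:  # hypothetical confidence threshold
        return best, True
    return None, False


recorded = {"id": "submit-btn", "tag": "button", "text": "Submit", "class": "primary"}

# After a redesign, the id changed but the other attributes did not.
page = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "secondary"},
    {"id": "btn-submit-v2", "tag": "button", "text": "Submit", "class": "primary"},
]

element, healed = find_element(page, recorded)
print(element["id"], healed)
```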

AI assertions and validations. Platforms like Mabl allow testers to write validation steps using natural language prompts. AI then checks that the app behaves correctly after each test step, improving test accuracy without requiring deep scripting knowledge.

Script generation. For more complex scenarios, platforms like Mabl and Testim support extending tests with reusable JavaScript code. Users can simply describe the test script they want, and the AI helps create it. This makes writing automated tests faster and more accessible, even for those who aren’t expert programmers.

Deployment stage

The deployment stage involves preparing the finished product (a website, web app, or software feature) for release. DevOps engineers and site reliability engineers are among the key team members at this stage. They ensure the product functions as intended in a live environment and is accessible to the end users.

There are various tasks at this stage where AI tools can play a role.

Automated pipeline generation

With Harness AI, users can chat directly with the system to generate CI/CD pipelines. Instead of manually writing YAML files or configuring pipeline steps, developers can simply describe what they need.

The AI uses context from the codebase—like the framework, language, and previous patterns—to understand the application and build a tailored pipeline. This conversational approach makes pipeline creation faster, reduces setup errors, and lowers the barrier for teams new to DevOps automation.

Log analysis and anomaly detection

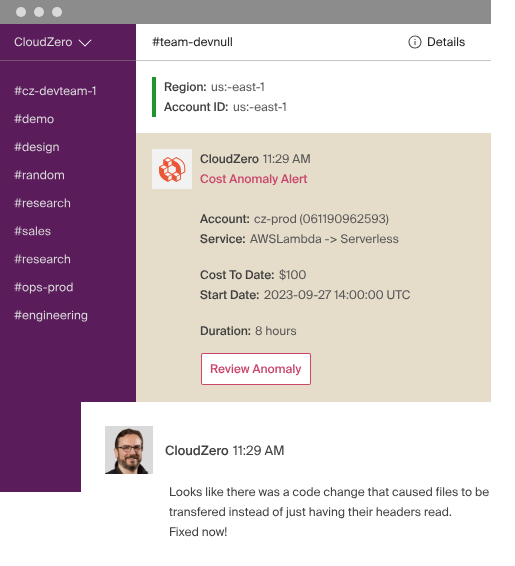

During the deployment stage, it's critical to catch unexpected behavior, performance issues, or cost anomalies before they affect end users. This is where AI-powered tools like CloudZero become valuable.

CloudZero uses AI to continuously analyze hourly cloud spend data. Instead of setting manual thresholds, it learns what "normal" looks like for your system and flags anomalies—sudden spikes in cost or unexpected usage patterns—in real time.
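CloudZero's actual models aren't described here. As a rough illustration of learning what "normal" looks like instead of using a fixed threshold, the sketch below flags an hourly cost as anomalous when it lies more than three standard deviations from the mean of the preceding 24 hours. This is a simple rolling z-score, not CloudZero's method. The window size, the 3-sigma cutoff, and the cost figures are hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(hourly_costs: list[float], window: int = 24, z_cutoff: float = 3.0) -> list[int]:
    """Return indexes of hours whose cost deviates sharply from the recent baseline."""
    anomalies = []
    for i in range(window, len(hourly_costs)):
        baseline = hourly_costs[i - window:i]  # "normal" learned from recent history
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if hourly_costs[i] != mu:
                anomalies.append(i)
            continue
        z = (hourly_costs[i] - mu) / sigma
        if abs(z) > z_cutoff:
            anomalies.append(i)
    return anomalies


# Two days of hourly spend (USD) with a mild daily cycle (busier 09:00-18:00)...
costs = [12.0 if 9 <= i % 24 < 18 else 10.0 for i in range(48)]
# ...followed by a sudden spike, e.g., a runaway job launched at hour 48.
costs.append(40.0)

print(find_anomalies(costs))
```

Because the baseline moves with the data, the regular daytime increase is treated as normal. A fixed threshold set just above the overnight level would have flagged it every day.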

When an anomaly is detected, CloudZero identifies which product, feature, team, or customer is behind the issue. This helps DevOps or SRE teams act quickly and precisely.

Monitoring and maintenance stage

This stage begins after deployment and continues as long as the application is in use. The goal is to keep the system stable, secure, and efficient. Teams monitor system health, track performance, fix bugs, apply updates, and respond to incidents.

Real-time application monitoring

A major AI use case at this stage is real-time monitoring. Platforms like Sentry and Datadog provide a range of monitoring capabilities that help teams detect and respond to issues as they occur in production.

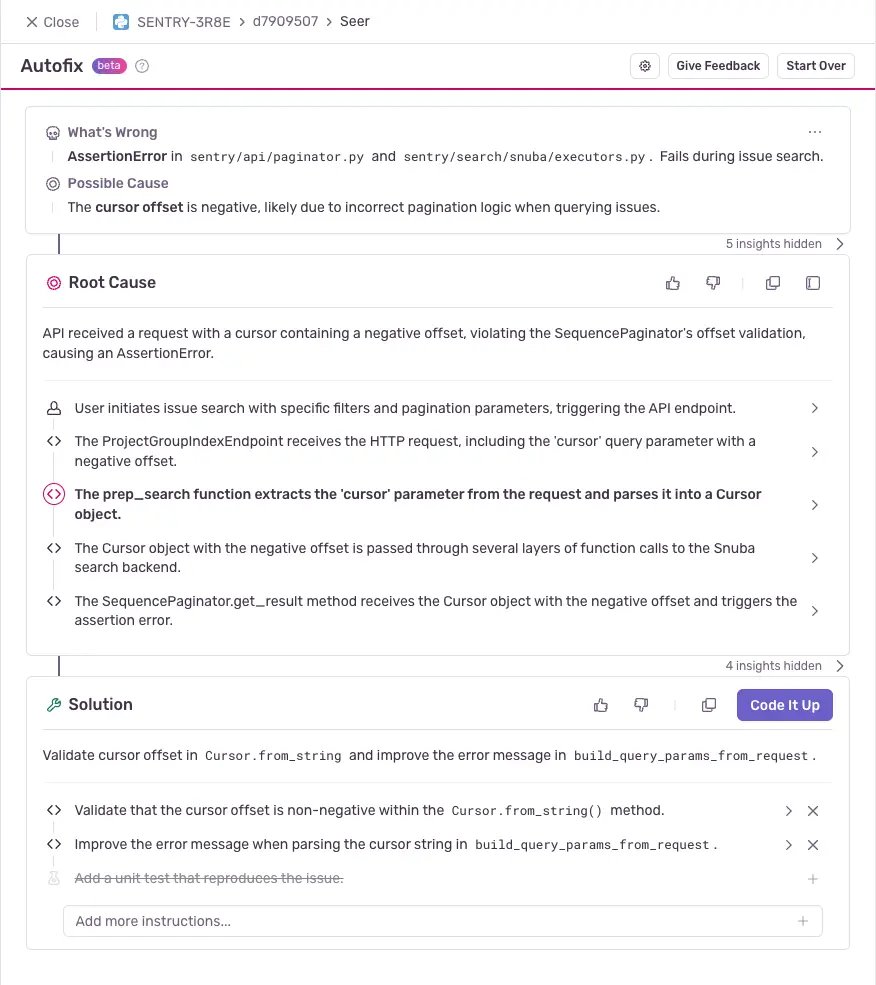

For example, Sentry offers a generative AI agent called Seer that analyzes vast amounts of data—error messages, traces, logs, etc.—and identifies the root cause of problems. Seer provides easy-to-understand summaries of complex issues, saving time spent digging through raw data. It can even suggest fixes or improvements based on patterns it detects in your code and application behavior.

User experience tracking

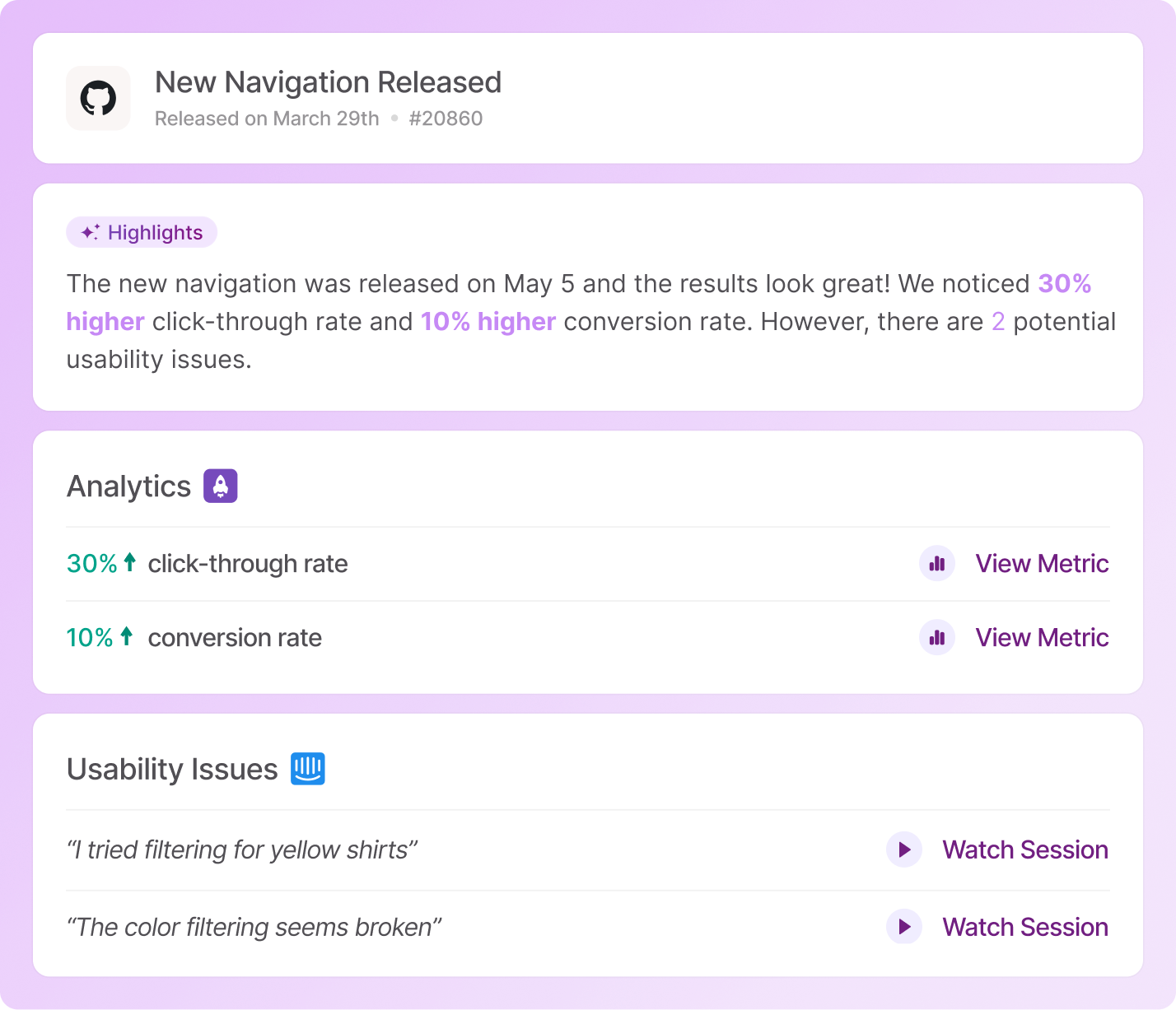

Another important aspect of monitoring is tracking the user experience. Tools like Zipy and Galileo AI (LogRocket’s AI assistant) help in this regard. Galileo analyzes user sessions in real time to spot where people struggle or get stuck. It looks for patterns and automatically highlights the most critical issues.

After every release, Galileo shows how users are interacting with new features, pointing out what’s working well and what’s causing frustration. It turns complex data into unambiguous summaries so product teams can quickly grasp the situation without needing to dig deep.

Best practices for integrating AI into the SDLC

If your team is looking to adopt AI-first workflows, it's important to do it in a structured and responsible way. These best practices can help you get started and scale effectively.

Understand prompt engineering and set guardrails

AI tools depend heavily on the quality of prompts they receive. Developers should learn to write clear, context-rich prompts to get accurate and useful responses. It's also important to define boundaries for what AI is allowed to do. This includes avoiding its use in production-critical code or security-sensitive areas without human oversight.

Train your team and plan for the transition

Train your developers, testers, and other team members on how to use AI tools properly. Without guidance, teams may underuse these tools or place too much trust in them.

Beyond training, organizations also need to plan for the transition process itself. For example, introducing a new AI-powered IDE, like Cursor, is often accompanied by friction, especially if your team is used to more established tools. As Glib and Ihor pointed out, switching to Cursor was a challenge for developers who were already comfortable with IntelliJ, a widely preferred and more mature IDE among backend developers.

That difference in familiarity can slow down adoption at first. So, accounting for the learning curve and offering support is key.

Break down work into smaller chunks

AI tools respond better to focused problems. Instead of pasting entire files or large code blocks, give the AI smaller, specific inputs such as a function or a class. This helps improve the quality of responses and makes it easier to verify the output.
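For Python code, the standard library's `ast` module offers one way to do this. You can parse a file and extract each top-level function or class as a separate snippet, then send each snippet to the AI tool on its own. This is a sketch: the sample module is hypothetical, and other languages need their own parsers.

```python
import ast


def split_into_chunks(source: str) -> dict[str, str]:
    """Map each top-level function/class name to its exact source text."""
    tree = ast.parse(source)
    chunks = {}
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            # get_source_segment returns the original text of the node.
            # Note: decorators sit above the node's first line and are not included.
            chunks[node.name] = ast.get_source_segment(source, node)
    return chunks


module = '''
import math

def area(r):
    return math.pi * r ** 2

class Circle:
    def __init__(self, r):
        self.r = r
'''

for name, code in split_into_chunks(module).items():
    print(f"--- {name} ---")
    print(code)
```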

Start with low-risk tasks

Begin by applying AI to tasks that are easy to verify and not business-critical. Good examples include:

- writing simple or repetitive code,

- generating unit tests, and

- creating technical documentation.

This approach helps the team build confidence while minimizing the chance of introducing critical errors early on.

Keep a human in the loop

Even with powerful AI integrations, human judgment remains key, especially when working on complex logic, performance tuning, or product-critical decisions.

For example, AI coding assistants can hallucinate or generate incorrect or insecure code. If developers copy and paste without reviewing the output, it can introduce bugs or security flaws. That’s why software engineers should always review AI-generated code and make sure it fits the project’s goals and standards.

Human oversight is needed throughout all stages of the software development lifecycle (SDLC). It ensures that AI acts as a helpful assistant, not an unchecked decision-maker.

Track and measure outcomes

It’s important to evaluate how AI tools influence each stage of the SDLC. This includes tracking improvements and spotting areas where they might create new issues.

For example, during requirements gathering, do AI-generated insights actually help clarify needs or cause confusion? In the development phase, does AI speed up coding or introduce more bugs that slow things down later? In testing, does it catch more edge cases or miss critical errors?

Introduce stage-specific metrics to track these effects. This could include time spent, error rates, rework caused by AI-generated output, or feedback from team members. Over time, this helps teams understand where AI is truly effective and where greater human involvement is required.
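A lightweight way to start is to log each AI-assisted work item with its stage, the time spent, and whether the output had to be reworked, then compare results per stage. The record fields and sample data below are hypothetical; this is only a sketch of the bookkeeping.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AIWorkItem:
    stage: str           # e.g., "requirements", "development", "testing"
    minutes_spent: int
    needed_rework: bool  # did a human have to substantially redo the AI output?


def stage_metrics(items: list[AIWorkItem]) -> dict[str, dict[str, float]]:
    """Summarize item count, average time, and rework rate for each SDLC stage."""
    grouped: dict[str, list[AIWorkItem]] = defaultdict(list)
    for item in items:
        grouped[item.stage].append(item)
    return {
        stage: {
            "items": len(group),
            "avg_minutes": sum(i.minutes_spent for i in group) / len(group),
            "rework_rate": sum(i.needed_rework for i in group) / len(group),
        }
        for stage, group in grouped.items()
    }


log = [
    AIWorkItem("development", 30, False),
    AIWorkItem("development", 50, True),
    AIWorkItem("testing", 20, False),
    AIWorkItem("testing", 40, False),
]

for stage, m in stage_metrics(log).items():
    print(f"{stage}: {m['items']} items, {m['avg_minutes']:.0f} min avg, "
          f"{m['rework_rate']:.0%} rework")
```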

Establish governance and usage policies

Create internal guidelines for using AI tools. These should cover things like:

- when AI-generated code must be reviewed;

- which stages of the workflow can include AI assistance;

- what type of data can be shared with AI systems; and

- when to exclude sensitive documents and codebases.

Teams using tools like Cursor often set policies for how suggestions are reviewed, when they can be committed, and how to handle proprietary or sensitive code. As Glib Zhebrakov explains: “You can instruct Cursor to ignore specific folders, files, or other parts of the codebase using a .cursorignore file. This allows you to exclude proprietary algorithms or sensitive information from being indexed by large language models.”
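To approximate what such ignore rules do, the sketch below filters a file list against a few exclusion patterns before anything is shared with an AI tool. It uses Python's `fnmatch.fnmatchcase`, which is simpler than the gitignore-style syntax that Cursor's ignore files follow. For example, it has no `!` negation, and `*` also matches `/`. Treat it as an illustration of the policy rather than a reimplementation. The patterns and paths are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: paths that must never reach an AI tool.
EXCLUDED_PATTERNS = [
    "secrets/*",
    "*.pem",
    "src/pricing_engine/*",  # proprietary algorithm
    ".env*",
]


def shareable(paths: list[str], patterns: list[str] = EXCLUDED_PATTERNS) -> list[str]:
    """Return only the paths that match none of the exclusion patterns."""
    return [p for p in paths if not any(fnmatchcase(p, pat) for pat in patterns)]


repo_files = [
    "src/app.py",
    "src/pricing_engine/model.py",
    "secrets/api_keys.json",
    "deploy/server.pem",
    ".env.production",
    "README.md",
]

print(shareable(repo_files))
```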