When you buy a pear, you can instantly evaluate its quality: the size and shape, ripeness, and the absence of visible bruising. But only as you take the first bite will you understand if the fruit is that good. Even a beautiful pear might taste sour or have a worm.

The same applies to almost any product, be it physical or digital. A website you find on the Internet may seem fine at first, but as you scroll down, go to another page, or send a contact request, it can start showing some design flaws and errors.

Yet, a sour pear won’t cause as much damage as a self-driving car with poor-quality autopilot software. A single mistake in an EHR system might put a patient’s life at risk. At the same time, website performance issues might cost the owner millions of dollars in revenue.

That is why we at AltexSoft put a premium on the quality of software we build for our clients. This paper will share our insights on the quality assurance and testing process, best practices, and preferred strategies.

1. Software quality assurance vs quality control vs testing

While to err is human, sometimes the cost of a mistake might be just too high. History knows many situations when software flaws caused billions of dollars in waste or even led to casualties: from Starbucks coffee shops forced to give away free drinks because of a register malfunction to the F-35 fighter jet unable to detect the targets because of a radar failure.


The concept of software quality is introduced to ensure the released program is safe and functions as expected. It can be defined as “the capability of a software product to satisfy stated and implied needs under specific conditions. Additionally, quality refers to the degree to which a product meets its stated requirements.”

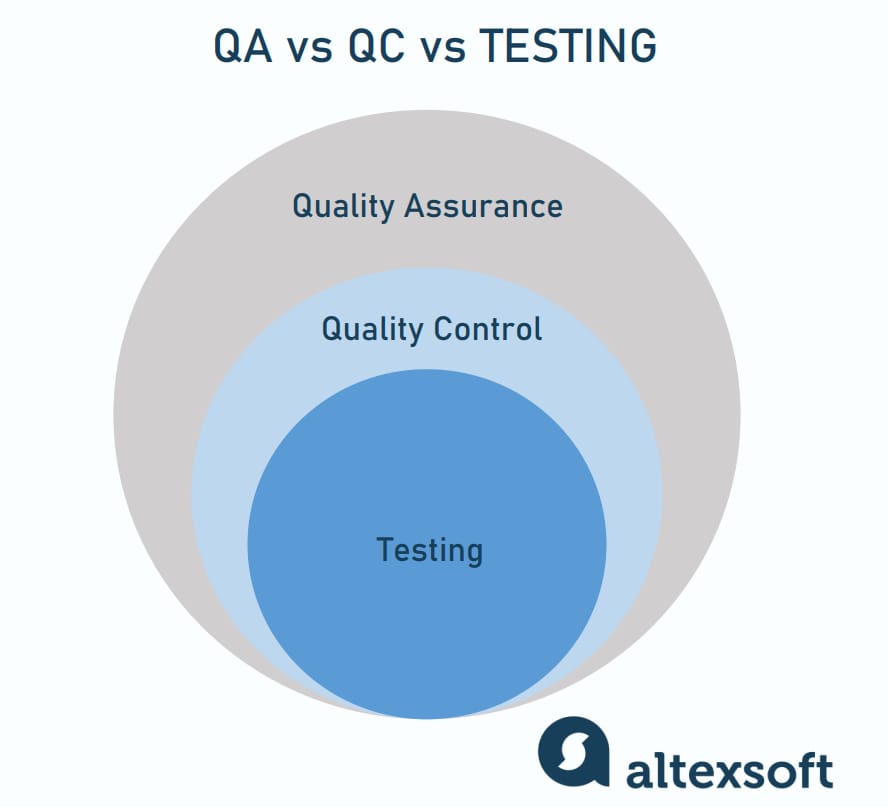

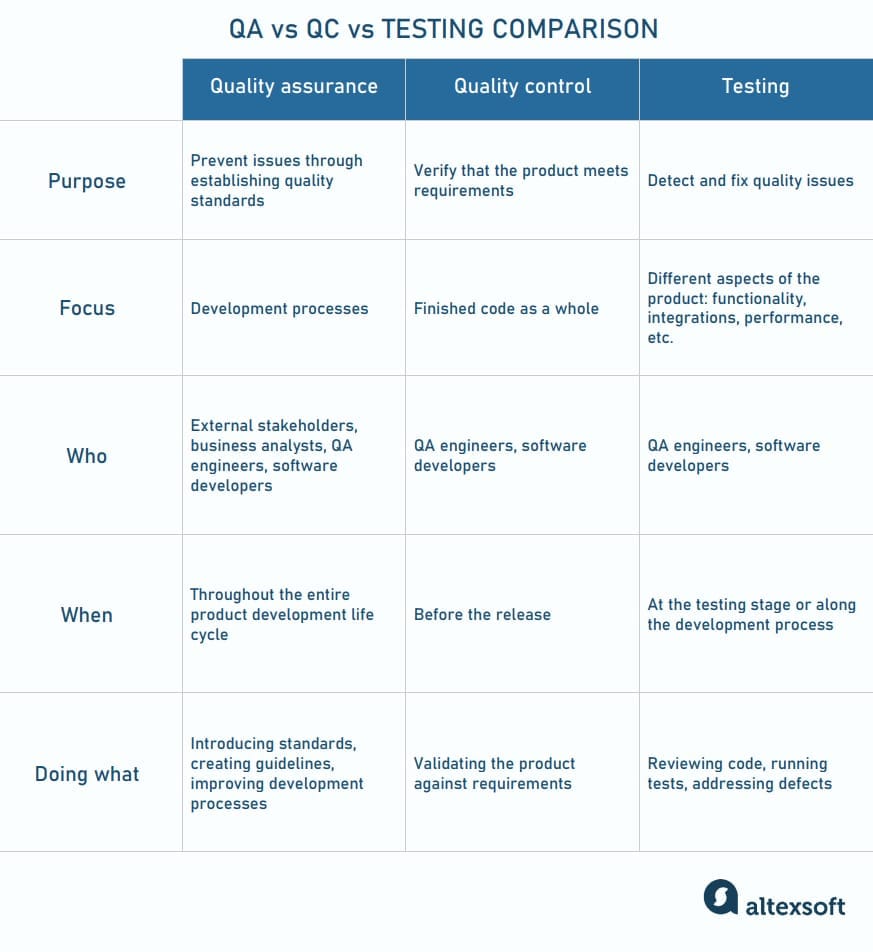

Quality assurance (QA), quality control (QC), and QA software testing are three aspects of quality management that ensure a program works as intended. Often used interchangeably, the three terms cover different processes and vary in their scope, though they share the same goal: to deliver the best possible digital product or service.

Quality assurance vs quality control vs testing

What is quality assurance?

Quality assurance is a broad term, explained on the Google Testing Blog as “the continuous and consistent improvement and maintenance of process that… gives us confidence the product will meet the need of customers.” QA focuses on organizational aspects of quality management, aiming to improve the end-to-end product development life cycle, from requirements analysis to launch and maintenance.

QA plays a crucial role in the early identification and prevention of product defects. Among its key activities are

- setting quality standards and procedures,

- creating guidelines to follow across the development process,

- conducting measurements,

- reviewing and changing workflows to enhance them.

QA engages external stakeholders and a broad range of internal specialists, including business analysts (BAs), QA engineers, and software developers. Its ultimate goal is to establish an environment ensuring the production of high-quality items and, thus, to build trust with clients.

What is quality control?

Quality control is a part of quality management that verifies the product’s compliance with standards set by QA. Investopedia defines it as a “process through which a business seeks to ensure that product quality is maintained or improved and manufacturing errors are reduced or eliminated.”

While QA activities aim to prevent issues across the entire development process, QC is about detecting bugs in the ready-to-use software and checking its correspondence to the requirements before the product launch. It encompasses code reviews and testing activities conducted by the engineering team.

What is software testing?

Testing is the primary activity of detecting and solving technical issues in the software source code and assessing the overall product usability, performance, security, and compatibility. It has a narrow focus and is performed by test engineers in parallel with a development process or at the dedicated testing stage (depending on the methodological approach to the software development cycle).

The concepts of quality assurance, quality control, and testing compared

If applied to car manufacturing, a proper quality assurance process means that every team member understands the requirements and performs their work according to the commonly accepted guidelines. Namely, it ensures the performance of actions in the correct order, proper details implementation, and consistency of the overall flow so that nothing can harm the end product.

Quality control is like a senior manager walking into a production department and picking a random car for an examination and test drive. In this case, testing activities refer to checking every joint and mechanism separately and conducting crash tests, performance tests, and actual or simulated test drives.

Due to its hands-on approach, software testing activities remain a subject of heated discussion. That is why we will focus primarily on this aspect of software quality management. But before we get into the details, let’s define the major software testing principles.

2. Software testing principles

Formulated over the past 40 years, the seven principles of software testing represent the ground rules for the process.

Testing shows the presence of mistakes. Testing aims to detect the defects within a piece of software. But no matter how hard you try, you’ll never be 100 percent sure there are no defects. Testing can only reduce the number of unfound issues.

Exhaustive testing is impossible. There is no way to test all combinations of data inputs, scenarios, and preconditions within an application. For example, if a single app screen contains ten input fields with three possible value options each, test engineers need to create 59,049 (3^10) scenarios to cover all possible combinations. And what if the app contains 50+ such screens? Focus on the more common scenarios to avoid spending weeks creating millions of less likely ones.
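The arithmetic behind that figure is easy to verify. The sketch below assumes a hypothetical screen with ten fields and three illustrative value classes per field; the labels `empty`, `valid`, and `invalid` are placeholders, not taken from any real application.

```python
from itertools import product

# Hypothetical screen: 10 input fields, each accepting one of 3 value classes.
FIELD_COUNT = 10
VALUE_OPTIONS = ("empty", "valid", "invalid")

# Closed-form count: options raised to the number of fields.
total_combinations = len(VALUE_OPTIONS) ** FIELD_COUNT

# Enumerating the combinations lazily confirms the figure without storing them.
enumerated = sum(1 for _ in product(VALUE_OPTIONS, repeat=FIELD_COUNT))

print(total_combinations)                # 59049
print(enumerated == total_combinations)  # True
```

Adding an eleventh field would triple the count again, which is why prioritizing likely scenarios matters more than chasing full coverage.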

Early testing. The cost of an error grows exponentially throughout the stages of the software development life cycle (SDLC). Therefore, it is important to start testing the code as soon as possible and resolve issues before they snowball.

Defect clustering. Often called an application of the Pareto principle to software testing, defect clustering implies that approximately 80 percent of all errors are usually found in only 20 percent of the system modules. If you find a bug in a particular component, there might be others. So, it makes sense to test this area of the product thoroughly.
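One way to spot such clusters is to rank modules by the number of defects logged against them. The following is a minimal sketch: the function name `defect_hotspots`, the module names, and the defect counts are all made up for illustration.

```python
def defect_hotspots(defects_by_module: dict[str, int], share: float = 0.8) -> list[str]:
    """Return the modules, largest defect count first, that together hold `share` of all defects."""
    total = sum(defects_by_module.values())
    hotspots: list[str] = []
    covered = 0
    ranked = sorted(defects_by_module.items(), key=lambda item: item[1], reverse=True)
    for module, count in ranked:
        # Stop once the selected modules already account for the requested share.
        if total == 0 or covered / total >= share:
            break
        hotspots.append(module)
        covered += count
    return hotspots


# Invented defect counts for ten hypothetical modules (100 defects in total).
sample = {
    "checkout": 45, "search": 35, "profile": 5, "cms": 4, "auth": 3,
    "email": 3, "reports": 2, "help": 1, "settings": 1, "footer": 1,
}

print(defect_hotspots(sample))  # ['checkout', 'search']
```

In this invented data set, two of ten modules hold 80 percent of the defects, so they would be the first candidates for deeper testing.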

Pesticide paradox. Running the same set of tests repeatedly won’t help you find more issues. As soon as you fix the detected errors, the previous scenarios become useless. Review and update them regularly to spot hidden mistakes.

Testing is context-dependent. Test applications differently depending on the industry or goals. While safety could be of primary importance for a fintech product, it is less significant for a corporate website, which emphasizes usability and speed.

Absence-of-errors fallacy. The absence of errors does not necessarily lead to a product’s success. No matter how much time you have spent polishing the code, the software will fail if it does not meet user expectations.

Some sources note other principles besides the basic ones:

- Testing must be an independent process handled by unbiased professionals.

- Test for invalid and unexpected input values as well as valid and expected ones.

- Conduct tests only on a static piece of software (you should make no changes in the process of testing).

- Use exhaustive and comprehensive documentation to define the expected test results.

Yet the above-listed seven points remain undisputed guidelines for every software testing professional.

3. When testing happens in the software development life cycle

As mentioned above, testing happens at the dedicated stage of the software development life cycle or in parallel with the engineering process — it depends on the project management methodology your team sticks to.

3.1. Waterfall model

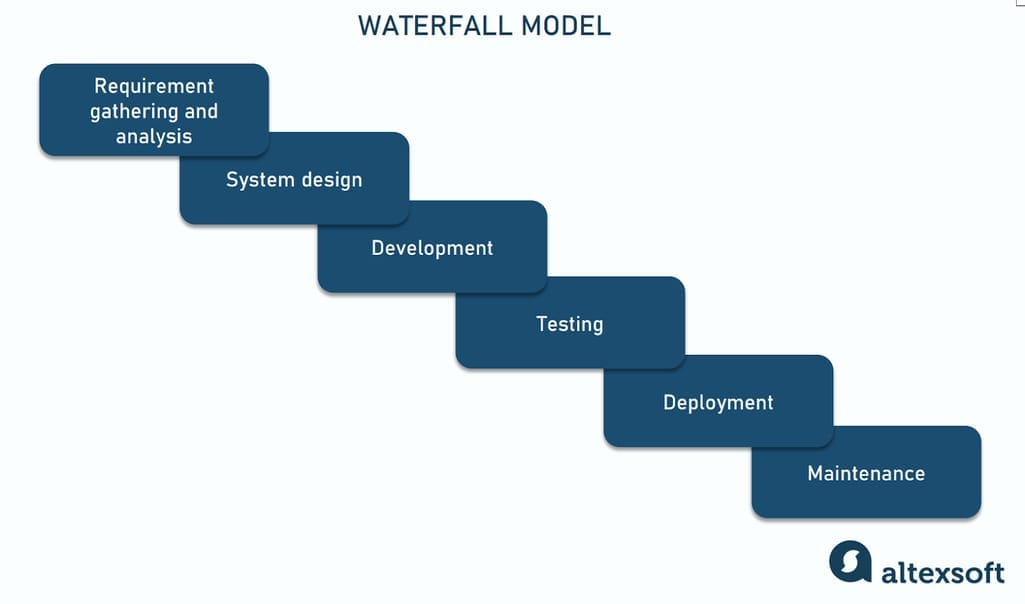

Representing a traditional software development life cycle, the Waterfall model includes six consecutive phases: requirements gathering and analysis, system design, development, testing, deployment, and maintenance.

SDLC waterfall model

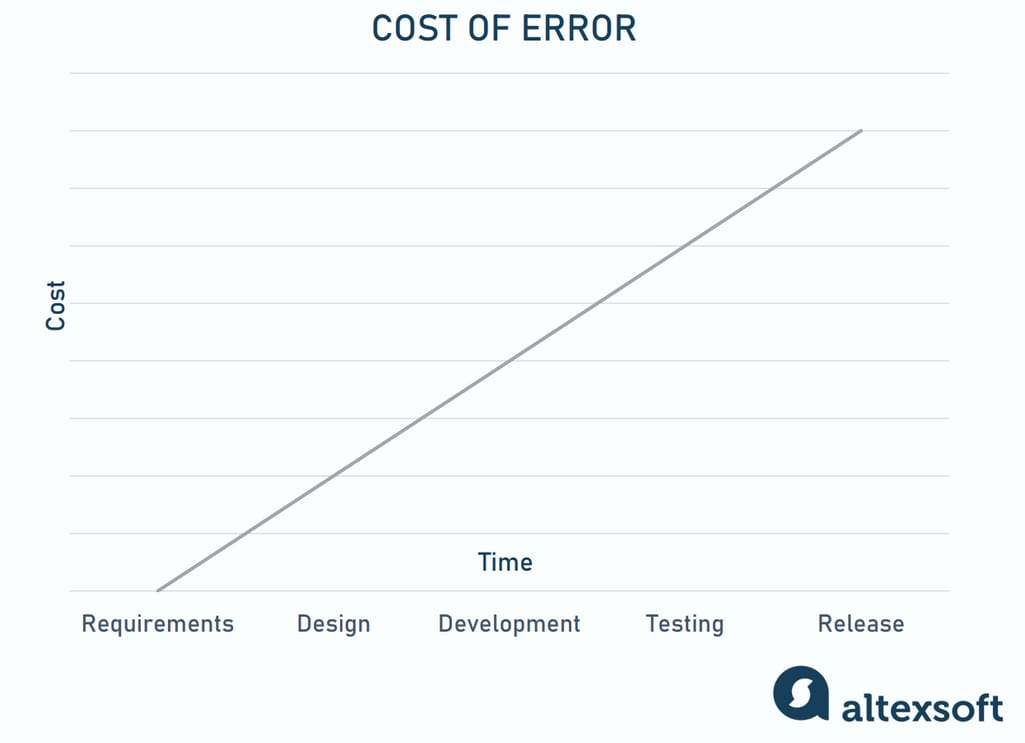

In the testing phase, a product that is already designed and coded is thoroughly validated before the release. However, practice shows that software errors and defects detected at this stage might be too expensive to fix, as the cost of an error tends to increase throughout the software development process.

How the cost of error rises throughout the SDLC

It’s much cheaper to address errors at earlier stages. If not detected, say, during design, the damage grows exponentially throughout the further phases. Entering the development stage, unsolved errors become embedded in the application core and can disrupt the work of the product. Crucial changes to the program structure after testing or especially after release will require significant effort and investment, not to mention the reputation losses a malfunctioning app may cause.

Therefore, it is better to test every piece of software while the product is still being built. This is where iterative Agile methods prove beneficial.

3.2. Agile testing

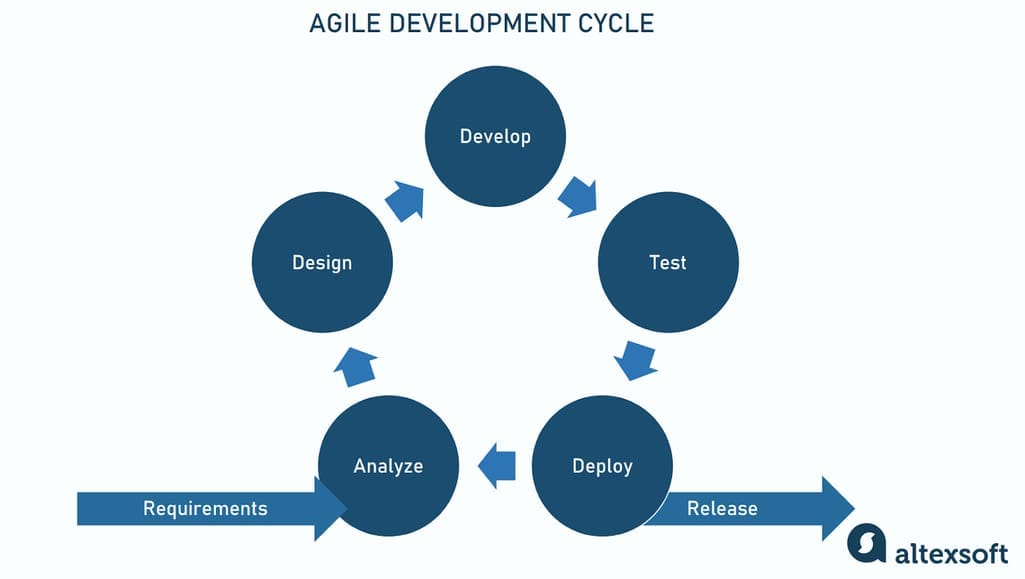

Agile breaks the development process into smaller parts called iterations or sprints. This allows testers to work in parallel with the rest of the team and fix the flaws immediately after they occur.

Software development life cycle under Agile

This approach is less cost-intensive: Addressing the errors early in the development process, before more problems snowball, is significantly cheaper and requires less effort. Moreover, efficient communication within the team and active involvement of the stakeholders speed up the process and allow for better-informed decisions.

You can find out more about roles and responsibilities in a testing team in our dedicated article.

The Agile testing approach is about building up a QA practice as opposed to having a QA team. Amir Ghahrai, a Senior Test Consultant at Amido, comments on this matter: “By constructing a QA team, we fall in the danger of separating the testers from vital conversations with the product owners, developers, etc. In Agile projects, QA should be embedded in the scrum teams because testing and quality are not an afterthought. Quality should be baked in right from the start.”

3.3. DevOps testing

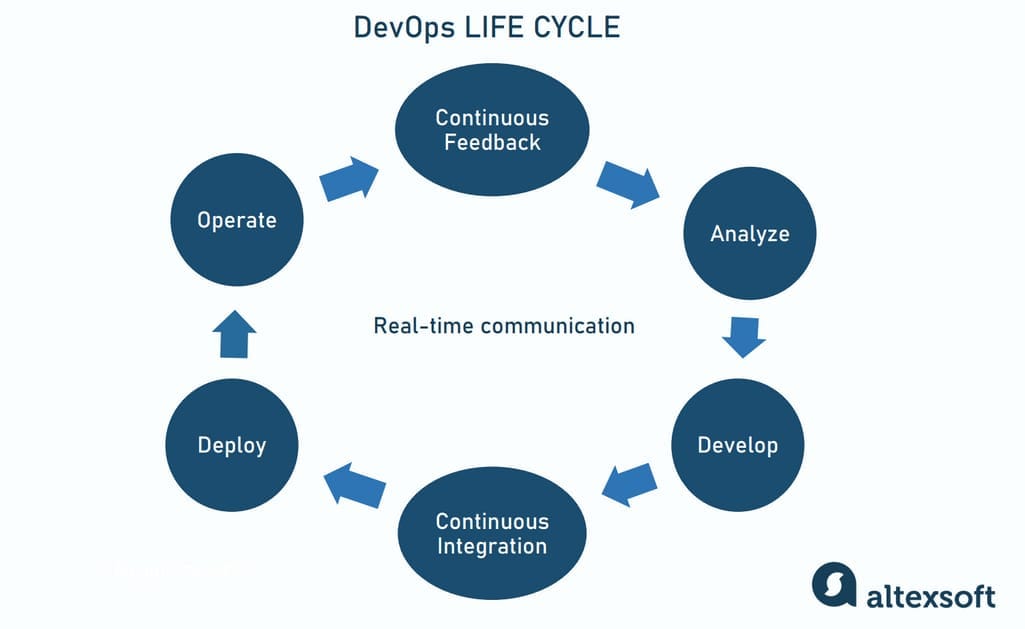

For those who have Agile experience, DevOps gradually becomes a common practice. This software development methodology requires a high level of coordination between various functions of the deliverable chain, namely development, QA, and operations.

Testing takes place at each stage of the DevOps model

DevOps is an evolution of Agile that bridges the gap between development (together with QA) and operations. It embraces the concept of continuous development, which in turn includes continuous integration and delivery (CI/CD), continuous testing, and continuous deployment. DevOps places a great emphasis on automation and CI/CD tools that allow for the high-velocity shipment of applications and services.

The fact that testing takes place at each stage in the DevOps model changes the role of testers and the overall idea of testing. Therefore, to be able to carry out their activities effectively, testers are now expected to be code-savvy.

According to the 2023 State of Testing survey, of all approaches, Agile is an undisputed leader, with almost 91 percent of respondents working at least in some Agile projects within their organizations. DevOps takes second place, accepted in 50 percent of organizations. Meanwhile, 23 percent of companies are still practicing Waterfall.

4. Software testing life cycle

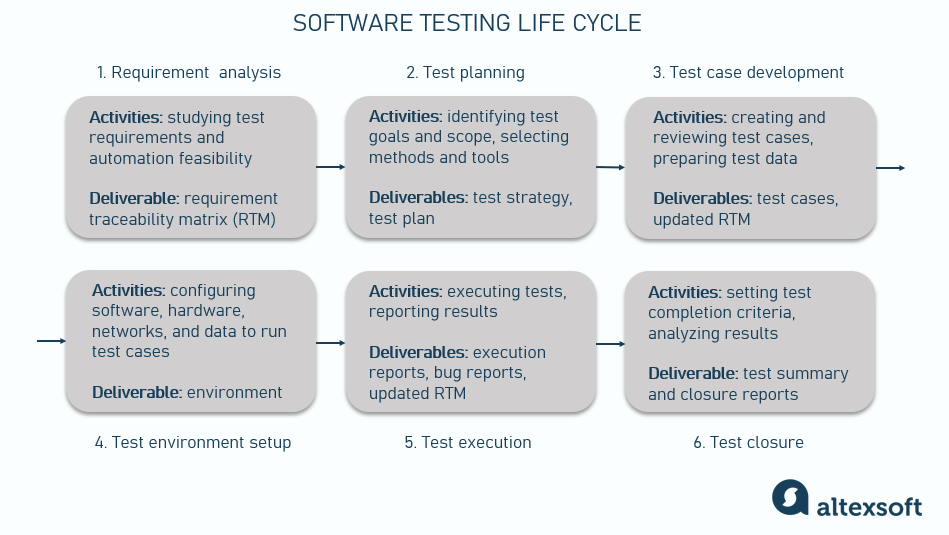

Software testing life cycle (STLC) is a series of activities conducted within the software development life cycle or alongside the SDLC stages. It typically consists of six distinct phases: requirement analysis, test planning, test case development, environment setup, test execution, and test closure. Each phase is associated with specific activities and deliverables.

Stages of software testing

4.1. Software testing requirement analysis

At this phase, a QA team reviews software requirements specifications from a testing perspective and communicates with different stakeholders to gather additional details and identify test priorities. Based on the acquired information, experts decide on testing methods, techniques, and types and conduct an automation feasibility study to understand which processes can be automated and with which tools.

An important document generated by the initial stage is a requirement traceability matrix (RTM). It’s basically a checklist that captures testing activities and connects requirements to related test cases.
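In its simplest form, an RTM can be represented as a mapping from requirements to the test cases that verify them. The sketch below uses invented requirement and test case IDs, and the helper `build_rtm` is a hypothetical name.

```python
# Hypothetical requirements and test cases, for illustration only.
requirements = {
    "REQ-1": "User can log in with email and password",
    "REQ-2": "User can reset a forgotten password",
    "REQ-3": "Account locks after five failed login attempts",
}

# Each test case lists the requirements it verifies.
test_cases = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
}


def build_rtm(requirements: dict[str, str], test_cases: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each requirement ID to the test cases that verify it."""
    rtm: dict[str, list[str]] = {req_id: [] for req_id in requirements}
    for case_id, covered_requirements in test_cases.items():
        for req_id in covered_requirements:
            if req_id not in rtm:
                raise KeyError(f"{case_id} references unknown requirement {req_id}")
            rtm[req_id].append(case_id)
    return rtm


rtm = build_rtm(requirements, test_cases)
# Requirements with no linked test case are coverage gaps.
uncovered = [req_id for req_id, cases in rtm.items() if not cases]

print(rtm)        # {'REQ-1': ['TC-101', 'TC-102'], 'REQ-2': ['TC-102'], 'REQ-3': []}
print(uncovered)  # ['REQ-3']
```

Real RTMs usually live in a spreadsheet or a test management tool, but the same gap check applies there.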

4.2. Test planning

As with any other formal process, testing activities are typically preceded by thorough preparations and planning. The main goal is to ensure the team understands customer objectives, the product’s main purpose, the possible risks to address, and the expected outcomes. One of the documents created at this stage, the testing mission or assignment, helps align testing activities with the overall purpose of the product and coordinates the testing effort with the rest of the team’s work.

- Test strategy

Also referred to as test approach or architecture, test strategy is another deliverable of the planning stage. James Bach, a testing guru who created the Rapid Software Testing course, identifies the purpose of a test strategy as “to clarify the major tasks and challenges of the test project.” A good test strategy, in his opinion, is product-specific, practical, and justified.

Roger S. Pressman, a professional software engineer, famous author, and consultant, states: “Strategy for software testing provides a roadmap that describes the steps to be conducted as part of testing, when these steps are planned and then undertaken, and how much effort, time, and resources will be required.”

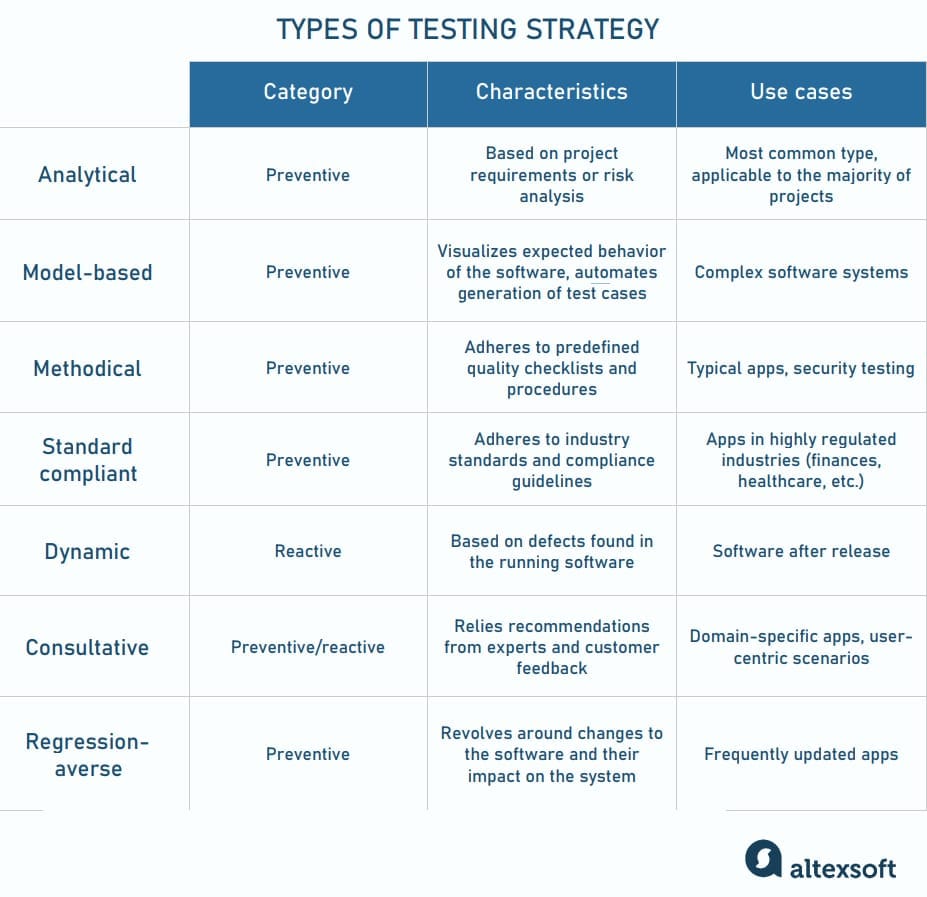

Depending on when exactly in the process they are used, the strategies can be classified as

- preventive when tests are designed early in the SDLC; or

- reactive when test details are designed on the fly in response to user feedback or problems that occur.

In addition to that, there are several types of strategies that can be used separately or in conjunction.

Seven types of test strategy

Analytical strategy is the most common type based on the requirements or additional risk analysis. In the latter case, a testing team collaborates with stakeholders to prioritize areas that are most critical for end users. While it’s impossible to reach zero risk, QA experts can at least outline key vulnerabilities and minimize quality threats to an acceptable level.

Model-based strategy follows a prebuilt model of how a program must work. This model typically comes as a diagram visualizing various aspects of expected software behavior — a customer journey through a website, data flows, interactions between components, etc. It helps better understand system functionality, improves communication with stakeholders, and reduces the time and effort required for test automation.

Bringing efficiency to large, complex projects, the model-based strategy, at the same time, can be too expensive and resource-consuming for simple, straightforward applications.

Methodical strategy employs predefined quality checklists and procedures created in-house or adopted across the industry. It’s often used for standard apps or particular types of checks, for example, security testing.

Standard compliant strategy adheres to specific regulations, guidelines, and industry standards. It prevents your app from breaking laws and this way, protects you from heavy penalties. For example, if you run a travel platform that accepts, processes, or stores credit card payments, you must check it against the Payment Card Industry Data Security Standards (PCI DSS).

Dynamic strategy is when you apply informal techniques that don’t require pre-planning (such as ad hoc and exploratory testing). It belongs to the reactive category and comes into play when you bump into defects in the running software.

Consultative (directed) strategy relies on the expertise and recommendations of stakeholders or end-user feedback when deciding on testing scope, methods, etc. It’s relevant to domain-specific apps where additional guidance is needed. It also suits scenarios when you want to enhance the existing app to better meet customer needs.

Regression-averse strategy revolves around reducing the risk of regressions, or situations when an app stops working correctly after updates. Typically, it’s a highly automated approach. A QA team can run reusable tests on both typical and exceptional scenarios before release or whenever there are any changes to the software.

Read more about test strategy and how to write a test strategy document (with real-life examples) in our article.

- Test plan

While a test strategy is a high-level document, a test plan has a more hands-on approach, describing in detail what to test, how to test, when to test, and who will do the test. Unlike the static strategy document, which refers to a project as a whole, the test plan covers every phase separately and is frequently updated by the project manager throughout the process.

According to the IEEE standard for software test documentation, a test plan document should contain the following information:

- Test plan identifier

- Introduction

- References (list of related documents)

- Test items (the product and its versions)

- Features to be tested

- Features not to be tested

- Item pass or fail criteria

- Test approach (testing levels, types, techniques)

- Suspension criteria

- Deliverables (Test Plan or this document itself, Test Cases, Test Scripts, Defect/Enhancement Logs, Test Reports)

- Test environment (hardware, software, tools)

- Estimates

- Schedule

- Staffing and training needs

- Responsibilities

- Risks

- Assumptions and Dependencies

- Approvals

Writing a plan that includes all of the listed information is a time-consuming task. In Agile methodologies, which focus on the product instead of documents, such a waste of resources seems unreasonable.

To solve this problem, James Whittaker, a Technical Evangelist at Microsoft and former Engineering Director at Google, introduced The 10 Minute Test Plan approach. The main idea behind the concept is to focus on the essentials first, cutting all the fluff by using simple lists and tables instead of large paragraphs of detailed descriptions. No team participating in the original experiment finished their work within ten minutes. However, it took them only 30 minutes to complete 80 percent of the work — while James expected they would need an hour.

4.3. Test case development

Next, QA or software engineers develop test cases: detailed descriptions of how to assess a particular feature. Each one includes test preconditions, data inputs, necessary actions, and expected outcomes. Upon creation, a QA team reviews test cases and updates the RTM document linking them to requirements. The stage should produce a set of easy-to-understand and concise instructions that ensure a thorough testing process.
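The elements of a test case listed above can be captured in a small record type. This is only a sketch of one possible structure; the class name `ManualTestCase`, its fields, and the sample login case are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ManualTestCase:
    case_id: str
    title: str
    preconditions: list[str]
    inputs: dict[str, str]
    steps: list[str]
    expected_outcome: str
    requirement_ids: list[str] = field(default_factory=list)  # links back to the RTM

    def as_instructions(self) -> str:
        """Render the case as short, readable instructions for a tester."""
        lines = [f"{self.case_id}: {self.title}", "Preconditions:"]
        lines += [f"  - {item}" for item in self.preconditions]
        lines.append("Inputs:")
        lines += [f"  - {name} = {value}" for name, value in self.inputs.items()]
        lines.append("Steps:")
        lines += [f"  {number}. {step}" for number, step in enumerate(self.steps, start=1)]
        lines.append(f"Expected: {self.expected_outcome}")
        return "\n".join(lines)


# Invented example case.
login_case = ManualTestCase(
    case_id="TC-101",
    title="Login with valid credentials",
    preconditions=["A registered user account exists"],
    inputs={"email": "user@example.com", "password": "correct-password"},
    steps=["Open the login page", "Enter the email and password", "Click the Log in button"],
    expected_outcome="The user lands on the dashboard",
    requirement_ids=["REQ-1"],
)

print(login_case.as_instructions())
```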

4.4. Test environment setup

Once test cases are ready, they need a secured and isolated virtual space for execution — a testing environment. It combines hardware, software, data, and networks configured for the requirements of the program being checked. The test environment must emulate production scenarios as closely as possible.

Usually, companies set up several environments to conduct different tests — unit, system, security, and so on. The end-to-end testing is typically performed in a staging environment which exactly replicates live conditions and employs real data but is not accessible for end users.

4.5. Test execution

A testing team starts to run test cases in the prepared environment. Then, QA engineers analyze the results and share outcomes with developers and stakeholders. If there are any bugs or defects, test execution repeats after developers have addressed the problems. The main deliverables of this stage are different test reports — bug reports, test execution reports, test coverage reports, etc. The RTM also gets updated with test results.

4.6. Test closure

There is no perfect software, so the testing is never 100 percent complete. It is an ongoing process. However, we have the so-called “exit criteria,” which define whether there was “enough testing” based on the risk assessment of the project.

There are common points present in the exit criteria:

- Test case execution is 100 percent complete.

- A system has no high-priority defects.

- The performance of the system is stable regardless of the introduction of new features.

- The software supports all necessary platforms and/or browsers.

- User acceptance testing is completed.

As soon as all of these requirements (or any custom ones you have set in your project) are met, the testing is closed. The test summary and closure reports are prepared and provided to the stakeholders. The team holds a retrospective meeting to define and document the issues that occurred during the development and improve the process.
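The exit criteria listed above lend themselves to a simple automated check. The sketch below is one hypothetical way to encode them; the `TestRunSummary` fields and the sample numbers are invented, and a real project would feed them from its test management and defect tracking tools.

```python
from dataclasses import dataclass


@dataclass
class TestRunSummary:
    planned_cases: int
    executed_cases: int
    open_high_priority_defects: int
    performance_stable: bool
    platforms_verified: bool
    uat_completed: bool


def unmet_exit_criteria(summary: TestRunSummary) -> list[str]:
    """Return the exit criteria that are not yet satisfied; an empty list means testing can close."""
    checks = {
        "test case execution is 100 percent complete": summary.executed_cases >= summary.planned_cases,
        "no high-priority defects remain": summary.open_high_priority_defects == 0,
        "system performance is stable": summary.performance_stable,
        "all necessary platforms and browsers are supported": summary.platforms_verified,
        "user acceptance testing is completed": summary.uat_completed,
    }
    return [criterion for criterion, passed in checks.items() if not passed]


# Invented numbers: everything is done except one open high-priority defect.
summary = TestRunSummary(
    planned_cases=120,
    executed_cases=120,
    open_high_priority_defects=1,
    performance_stable=True,
    platforms_verified=True,
    uat_completed=True,
)

print(unmet_exit_criteria(summary))  # ['no high-priority defects remain']
```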

5. Testing concepts and categories

Software testing comes in a variety of forms, happens at different stages of the development process, checks various parts of the software, focuses on a number of characteristics — and, as a result, divides into many categories. Below, we’ll look into key concepts of software testing and how they interconnect with each other.

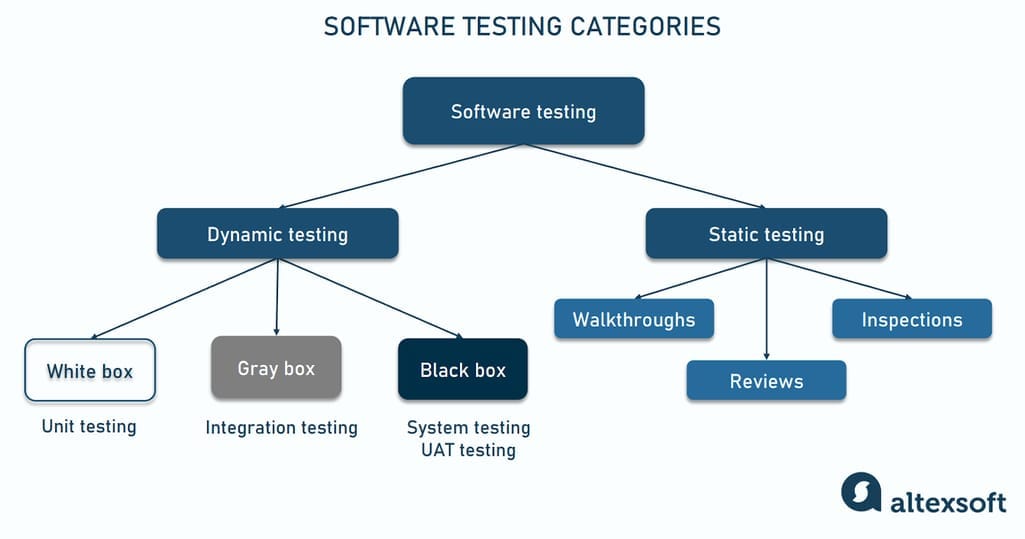

Software testing basic categories and concepts

5.1. Static testing vs dynamic testing

The software testing process identifies two broad categories — static testing and dynamic testing.

Static testing initially examines the source code and software project documents to catch and prevent defects early in the software testing life cycle. Also called a non-execution technique or verification testing, it encompasses

- code reviews — systematic peer inspections of the source code;

- code walkthroughs — informal meetings when a developer explains a program to peers, receives comments and makes modifications to the code; and

- code inspections — formal procedures carried out by experts from several departments to validate product compliance with requirements and standards.

As soon as the primary preparations are finished, the team proceeds with dynamic testing conducted during execution. This whitepaper focuses on dynamic testing as the most common way to validate code behavior. It varies by design techniques, levels, and types. You can do use case testing (a type) during the system or acceptance testing (a level) using black box testing (a design technique). Let’s examine each group separately.

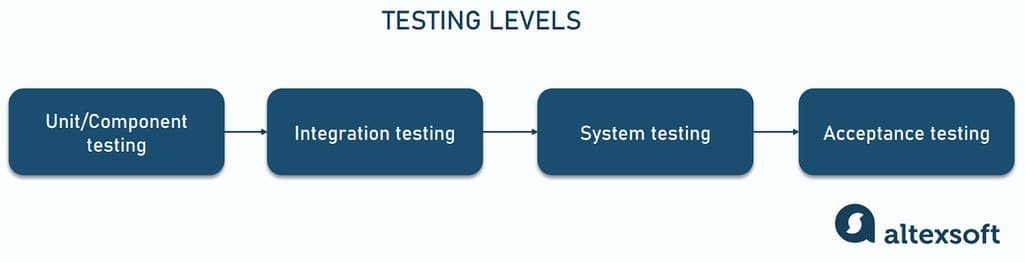

5.2. Levels of software testing

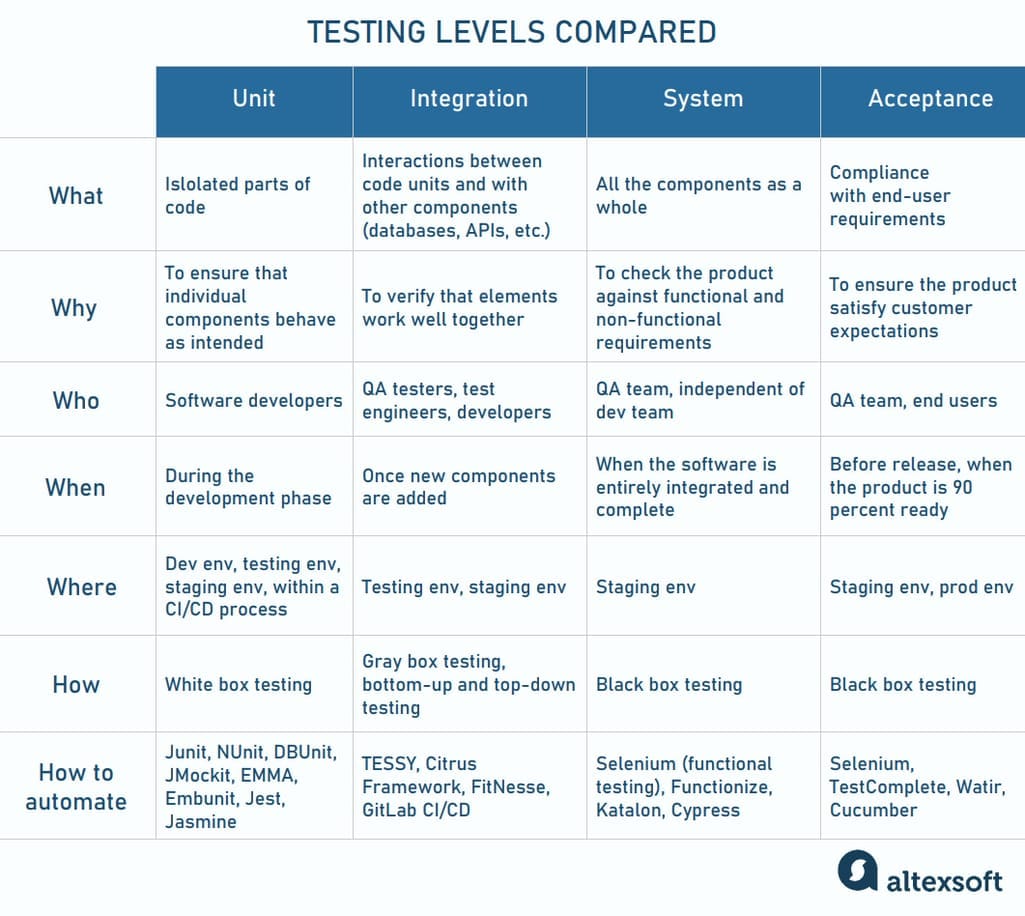

Software testing levels vary in the scope of what is checked, ranging from a single component to an entire program as a whole. Commonly, before release, the code goes through four test layers: unit testing, integration testing, system testing, and acceptance testing.

Four levels of software testing

- Unit Testing

A unit or component is the smallest testable part of the software system. Therefore, this testing level examines separate parts (functions, procedures, methods, modules) of an application to make sure they conform with program specifications and work as expected.

Unit tests are performed early in the development process by software engineers, not the testing team. They are short in lines of code, quick, and typically automated: every popular programming language has a framework to run unit testing.
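As an illustration, here is a minimal unit test written with Python's built-in `unittest` framework. The function under test, `apply_discount`, is a hypothetical example and not part of any real product.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


# Run the suite explicitly so the script keeps control of the result.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())  # True
```

Each test checks one behavior in isolation and runs in milliseconds, which is what makes unit tests cheap to execute on every code change.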

- Integration Testing

The objective of this level is to verify that units work well together as a group and also smoothly interact with other system elements — databases, external APIs, etc. It’s a time-consuming and resource-intensive process handled by test engineers, QA testers, or software developers.
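The sketch below shows what an integration test might look like when two units and a database are checked together. It uses an in-memory SQLite database from Python's standard library; the `UserRepository` and `RegistrationService` classes are invented for the example.

```python
import sqlite3


class UserRepository:
    """Data-access unit: talks to the database."""

    def __init__(self, connection: sqlite3.Connection):
        self.connection = connection
        self.connection.execute(
            "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)"
        )

    def add(self, email: str) -> int:
        cursor = self.connection.execute("INSERT INTO users (email) VALUES (?)", (email,))
        self.connection.commit()
        return cursor.lastrowid

    def find_by_email(self, email: str):
        query = "SELECT id, email FROM users WHERE email = ?"
        return self.connection.execute(query, (email,)).fetchone()


class RegistrationService:
    """Business-logic unit: depends on the repository."""

    def __init__(self, repository: UserRepository):
        self.repository = repository

    def register(self, email: str) -> int:
        normalized = email.strip().lower()
        if self.repository.find_by_email(normalized) is not None:
            raise ValueError("email already registered")
        return self.repository.add(normalized)


def test_registration_integrates_with_database():
    connection = sqlite3.connect(":memory:")  # isolated, throwaway database
    try:
        repository = UserRepository(connection)
        service = RegistrationService(repository)
        user_id = service.register("  Alice@Example.com ")
        # The service and repository together must store the normalized email.
        assert repository.find_by_email("alice@example.com") == (user_id, "alice@example.com")
        # A second registration of the same address must be rejected.
        try:
            service.register("alice@example.com")
        except ValueError:
            pass
        else:
            raise AssertionError("duplicate registration was accepted")
    finally:
        connection.close()


test_registration_integrates_with_database()
```

Unlike a unit test, this check would fail if the service and the repository disagreed on how emails are normalized or stored, even if each class passed its own unit tests.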

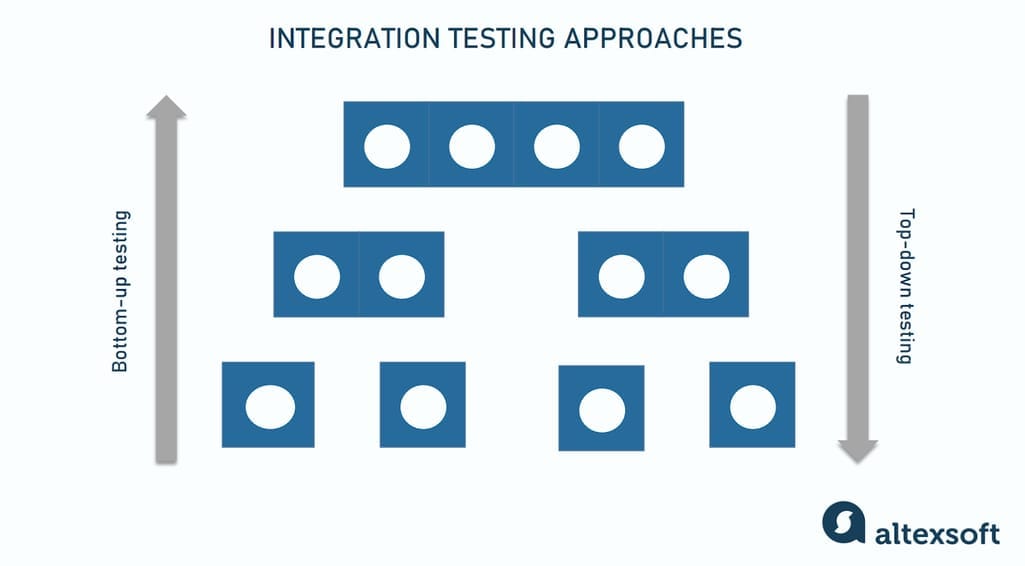

There are two main approaches to this testing: bottom-up and top-down. The former starts with unit tests, successively increasing the complexity of the software parts evaluated. The top-down method takes the opposite approach, focusing on high-level combinations first and examining the simple ones later.

Bottom-up and top-down testing at the integration level

- System Testing

At this level, a complete software system is tested as a whole. The stage verifies the product’s compliance with the functional and non-functional requirements. A highly professional testing team should perform system testing in a staging environment that is as close to the real business use scenario as possible.

- Acceptance Testing

This is where the product is validated against the end-user requirements and for accuracy. Also known as user acceptance testing (UAT), it is a high-level, end-to-end process that helps decide if the product meets acceptance criteria and is ready to be shipped.

Acceptance criteria explained

While small issues should be detected and resolved earlier in the process, this testing level cares about overall system quality, from content and UI to performance issues. The three common stages of UAT are

- alpha testing, performed by internal testers in a staging environment;

- beta testing, handled by a group of real customers in the production environment to verify that the app or website satisfies their expectations; and

- gamma testing, when a limited number of customers check particular specifications (primarily related to security and usability). At this stage, the product is 99 percent ready for release, so developers won’t make any critical changes. Feedback is to be considered in upcoming versions.

That said, many companies skip gamma testing because of tight deadlines, limited resources, and short development cycles.

Four levels of software testing compared

The four levels of testing come one after another, from checking the simpler parts to more complex groups, which reduces the risk of bugs slipping into the release. In Agile development, this sequence of testing represents an iterative process, applying to every added feature. In this case, every small unit of the new functionality is being verified. Then, the engineers check the interconnections between units, the way the feature integrates with the rest of the system, and whether the new update meets end user needs.

5.3. Quality testing methods and techniques

Software testing methods are the ways tests at different levels are conducted. They include black box testing, white box testing, gray box testing, ad hoc testing, and exploratory testing.

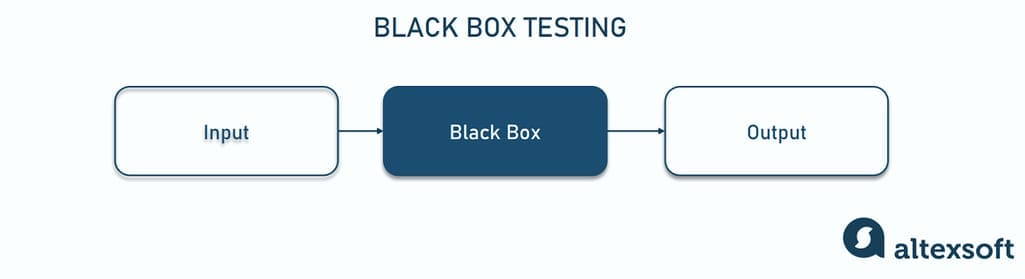

- Black box testing

This method gets its name because a QA engineer focuses on the inputs and the expected outputs without knowing how the application works internally and how these inputs are processed. The main purpose is to check the functionality of the software, making sure that it works correctly and meets user demands. Black box testing applies to any level but is mostly used for system and user acceptance testing.

Black box testing process

One of the popular techniques inside the black box category is use case testing. A use case simulates a real-life sequence of a user’s interactions with the software. QA experts and consumers can check the product against various scenarios to identify frustrating defects and weak points.
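A use case test can be sketched as a scripted sequence of user actions checked only through observable results. In the example below, `ShoppingCart` is a tiny stand-in for the system under test and every name and price is invented; a real test would drive the application's UI or public API instead.

```python
class ShoppingCart:
    """Stand-in for the system under test; prices are kept in cents."""

    def __init__(self):
        self._items: dict[str, tuple[int, int]] = {}  # name -> (unit price, quantity)
        self.checked_out = False

    def add(self, name: str, unit_price_cents: int, quantity: int = 1) -> None:
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        _, current_quantity = self._items.get(name, (unit_price_cents, 0))
        self._items[name] = (unit_price_cents, current_quantity + quantity)

    def remove(self, name: str) -> None:
        self._items.pop(name, None)

    def total_cents(self) -> int:
        return sum(price * quantity for price, quantity in self._items.values())

    def checkout(self) -> int:
        if not self._items:
            raise ValueError("cart is empty")
        self.checked_out = True
        return self.total_cents()


def use_case_customer_changes_mind_before_paying() -> int:
    """Scenario: add two products, remove one, then pay for what is left."""
    cart = ShoppingCart()
    cart.add("notebook", 450, quantity=2)
    cart.add("pen", 120)
    cart.remove("pen")
    paid = cart.checkout()
    # Only outputs are checked; the test never inspects the cart's internals.
    assert paid == 900
    assert cart.checked_out
    return paid


print(use_case_customer_changes_mind_before_paying())  # 900
```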

- White box testing

Unlike black box testing, this method requires a profound knowledge of the code as it evaluates structural parts of the application. Therefore, developers directly involved in writing the program are generally responsible for conducting such checks. White box testing aims to enhance security, reveal hidden defects, and address them. This method is used at the unit and integration levels.
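To illustrate the difference, the sketch below designs tests from the structure of the code rather than from its specification: each test targets one branch of a hypothetical `shipping_fee` function, whose rules and amounts are made up.

```python
def shipping_fee(order_total: float, is_member: bool) -> float:
    """Hypothetical function with four distinct paths through the code."""
    if order_total <= 0:
        raise ValueError("order total must be positive")  # branch 1
    if is_member:
        return 0.0                                         # branch 2
    if order_total >= 50:
        return 0.0                                         # branch 3
    return 4.99                                            # branch 4


def test_every_branch() -> int:
    """Exercise each branch once; returns the number of branches covered."""
    covered = 0

    try:
        shipping_fee(0, is_member=False)
    except ValueError:
        covered += 1  # branch 1: invalid total

    assert shipping_fee(10.0, is_member=True) == 0.0
    covered += 1      # branch 2: members ship free

    assert shipping_fee(50.0, is_member=False) == 0.0
    covered += 1      # branch 3: boundary of the free-shipping threshold

    assert shipping_fee(49.99, is_member=False) == 4.99
    covered += 1      # branch 4: standard fee

    return covered


print(test_every_branch())  # 4
```

The tester picked the inputs 50.0 and 49.99 because the code contains the comparison `>= 50`, which is knowledge a black box tester would not have.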

- Gray box testing

The method combines the two previous ones, testing both functional and structural parts of the application. Here, an experienced engineer is at least partially aware of the source code and designs test cases based on the knowledge about data structures, algorithms used, etc. At the same time, the tester applies straightforward black-box techniques to evaluate the software’s presentation layer from a user’s perspective. Gray box testing is mainly applicable to the integration level.

- Smoke testing

Smoke testing is a popular white box technique to check whether a build added to the software is bug-free. It contains a short series of test runs, evaluating a new feature against critical functionality. The goal is to confirm that the component is ready for further, more time-consuming and expensive testing. Any flaws signal the need to return the piece of code to developers.
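A smoke suite can be sketched as a short, ordered list of quick checks that stops at the first failure. The runner `run_smoke_suite`, the fake `build` dictionary, and the check names below are all hypothetical; in practice, the checks would call the deployed build.

```python
from typing import Callable


def run_smoke_suite(checks: dict[str, Callable[[], bool]]) -> tuple[bool, list[str]]:
    """Run quick checks in order and stop at the first failure."""
    log: list[str] = []
    for name, check in checks.items():
        try:
            passed = bool(check())
        except Exception as error:  # a crashing check counts as a failure
            passed = False
            log.append(f"FAIL {name}: {error}")
        else:
            log.append(f"{'PASS' if passed else 'FAIL'} {name}")
        if not passed:
            return False, log
    return True, log


# Fake build state standing in for a deployed application.
build = {"started": True, "routes": {"/": 200, "/login": 200, "/checkout": 500}}

checks = {
    "application starts": lambda: build["started"],
    "home page responds": lambda: build["routes"]["/"] == 200,
    "checkout page responds": lambda: build["routes"]["/checkout"] == 200,
    "login page responds": lambda: build["routes"]["/login"] == 200,
}

ready, log = run_smoke_suite(checks)
print(ready)  # False
print(log)    # ['PASS application starts', 'PASS home page responds', 'FAIL checkout page responds']
```

Because the checkout check fails, the build goes back to developers and the remaining check is never run.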

- Ad hoc testing

This informal testing technique is performed without documentation, predefined design, and test cases. A QA expert improvises steps and randomly executes them to spot defects missed by structured testing activities. Ad hoc testing can happen early in the development cycle before a test plan is created.

Flexible and adaptive to changes, ad hoc testing is faster and more cost-effective than formal methods. At the same time, you need to complement it with more structured QA activities.

- Exploratory testing

Exploratory testing was first described by Cem Kaner, a software engineering professor and consumer advocate, as “a style of software testing that emphasizes the personal freedom and responsibility of the individual tester to continually optimize the value of their work by treating test-related learning, test design, test execution, and test result interpretation as mutually supportive activities that run in parallel throughout the project.”

Similar to the ad hoc method, exploratory testing does not rely on predefined and documented test cases. Instead, it is a creative and freestyle process of learning the system while interacting with it. The approach enables testers and non-tech stakeholders to quickly validate the quality of the product from a user’s point of view and provide rapid feedback.

Both ad hoc and exploratory techniques belong to black box testing since they are functionality-centric, focus on validating user experience, not code, and don’t require knowledge of internal program structure.

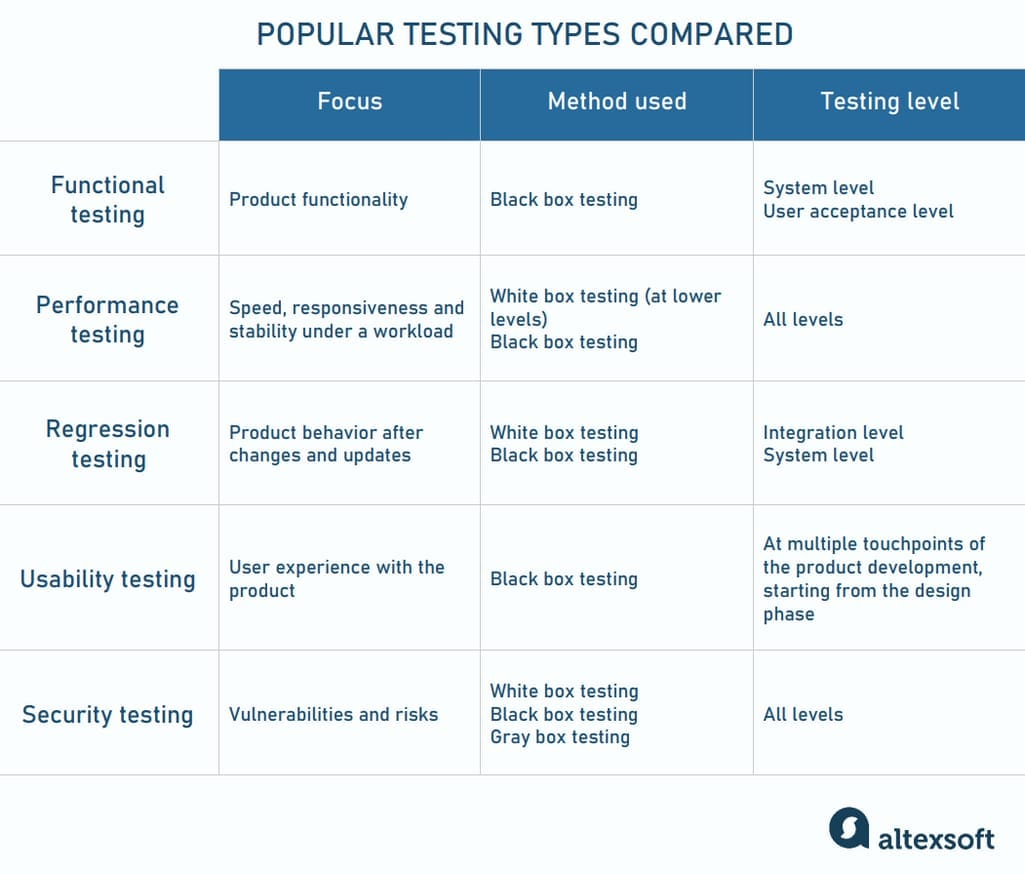

5.4. Types of software testing

Based on the main objective, testing can be of different types. Here are the most popular testing types according to the State of Developer Ecosystem Survey 2023 by JetBrains, a company specializing in tools for software engineers.

Widely-adopted software testing types

- Functional Testing

This type assesses the system against the functional requirements by feeding it input and examining the output. Typically, the process comprises the following set of actions:

1. Outline the functions for the software to perform

2. Compose the input data depending on function specifications

3. Determine the output depending on function specifications

4. Execute the test case

5. Juxtapose the received and expected outputs

Functional testing employs the black box method, where results, not the code itself, are of primary significance. It’s usually performed at the system and user acceptance levels.
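The five steps above map naturally onto a table-driven test. The sketch below assumes an invented requirement (loyalty tiers based on points); the function `loyalty_tier` and its thresholds are hypothetical.

```python
def loyalty_tier(points: int) -> str:
    """Step 1: the function the software must perform (assumed spec: 500+ silver, 1000+ gold)."""
    if points < 0:
        raise ValueError("points cannot be negative")
    if points >= 1000:
        return "gold"
    if points >= 500:
        return "silver"
    return "basic"


# Steps 2 and 3: input data and expected outputs taken from the assumed specification.
cases = [
    (0, "basic"),
    (499, "basic"),
    (500, "silver"),
    (999, "silver"),
    (1000, "gold"),
]


def run_functional_cases(function, cases):
    """Steps 4 and 5: execute each case and compare actual with expected output."""
    failures = []
    for given, expected in cases:
        actual = function(given)
        if actual != expected:
            failures.append((given, expected, actual))
    return failures


print(run_functional_cases(loyalty_tier, cases))  # []
```

An empty list means every received output matched the expected one; any mismatch would be reported with its input so it can go into a bug report.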

- Performance Testing

Performance testing investigates the speed, responsiveness and stability of the system under a certain load. Depending on the workload, a system’s behavior is evaluated by different kinds of performance testing:

- load testing — at continuously increasing workload

- stress testing — at or beyond the limits of the anticipated workload

- endurance testing — at continuous and significant workload

- spike testing — at suddenly and substantially increased workload

The types of performance testing also differ in duration. Load and stress testing can last from 5 to 60 minutes. Endurance testing, also called soak testing, requires hours, while spike testing takes just a few minutes.
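One way to picture the difference between these kinds of performance testing is to look at the workload each one applies over time. The sketch below models the four workloads as simple functions that return a number of virtual users for a given minute. All names and numbers (baseline, peak, step sizes) are illustrative assumptions, not recommendations, and a real test would feed such a profile into a load-generation tool.

```python
def load_profile(minute: int, start: int = 10, step: int = 10) -> int:
    """Load testing: the number of virtual users grows steadily."""
    return start + step * minute


def stress_profile(minute: int, expected_peak: int = 100, step: int = 25) -> int:
    """Stress testing: start at the anticipated limit and push beyond it."""
    return expected_peak + step * minute


def endurance_profile(minute: int, sustained: int = 80) -> int:
    """Endurance (soak) testing: a significant load held constant for a long time."""
    return sustained


def spike_profile(minute: int, baseline: int = 20, peak: int = 200,
                  spike_at: int = 2, spike_len: int = 1) -> int:
    """Spike testing: a sudden, short burst on top of a low baseline."""
    in_spike = spike_at <= minute < spike_at + spike_len
    return peak if in_spike else baseline


profiles = [("load", load_profile), ("stress", stress_profile),
            ("endurance", endurance_profile), ("spike", spike_profile)]
for name, profile in profiles:
    # Print the target number of virtual users for the first five minutes.
    print(f"{name:>9}: {[profile(minute) for minute in range(5)]}")
```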

Performance testing should start early in the development life cycle and be repeated at its later stages. As with other issues, the cost of addressing performance errors grows as the project progresses.

- Regression Testing

Regression testing verifies software behavior after updates to ensure that changes haven’t harmed the updated element or other product components and interactions between them.

This type allows QA experts to check functional and non-functional aspects, employing both white box and black box methods. You can reuse previous scripts and scenarios to validate how a feature or whole product works after alterations. While relevant to any level, regression testing is most important for integration and system quality assurance.
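Reusing previous scenarios can be as simple as replaying recorded inputs against the updated code and comparing the results with outputs accepted in an earlier release. The sketch below assumes a hypothetical text-formatting feature: `title_v1` stands for the previous release, `title_v2` for a refactored version, and `BASELINE` for the stored expectations.

```python
# Expected outputs recorded from a previous, accepted release (hypothetical data).
BASELINE = {
    "hello world": "Hello World",
    "qa and testing": "Qa And Testing",
    "it's fine": "It's Fine",
    "": "",
}


def title_v1(text: str) -> str:
    """Previous release: capitalize each space-separated word."""
    return " ".join(word.capitalize() for word in text.split(" "))


def title_v2(text: str) -> str:
    """Updated release: a refactoring that was meant to behave identically."""
    return text.title()


def find_regressions(function, baseline):
    """Replay every recorded input and collect the cases whose output changed."""
    regressions = {}
    for given, expected in baseline.items():
        actual = function(given)
        if actual != expected:
            regressions[given] = (expected, actual)
    return regressions


print("v1:", find_regressions(title_v1, BASELINE))
print("v2:", find_regressions(title_v2, BASELINE))
```

The refactored version passes three of the four recorded cases but changes the result for the input with an apostrophe, because Python’s `str.title()` treats the apostrophe as a word boundary. This is exactly the kind of side effect a regression suite is meant to catch.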

- Usability Testing

Usability testing evaluates a user experience when interacting with a website or application. It shouldn’t be confused with user acceptance testing. While both engage end customers, they focus on different shortcomings and happen at different stages of the development cycle.

UAT occurs shortly before release and checks if the product allows customers to achieve their goals. Usability testing considers customer sentiments and assesses how easy-to-use and intuitive the product is. It can be conducted as early as the design phase, making a targeted audience evaluate a product’s prototype before a certain logic or feature solidifies in code. It’s also important to carry out usability tests throughout the entire project, with different people involved.

- Security Testing

This testing type reveals vulnerabilities and threats that may lead to data leaks, malicious attacks, system crashes, and other problems. Common threat checks include but are not limited to:

- penetration testing or ethical hacking, which simulates cyber attacks against the software under safe conditions;

- application security testing (AST);

- API security testing;

- configuration scanning to check the system against the list of security best practices; and

- security audits to uncover security gaps and evaluate compliance with regulations and security requirements.

Security activities begin at the requirements analysis stage of SDLC, spanning later phases and all testing levels.
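Of the checks listed above, configuration scanning is the easiest to illustrate in code. The sketch below compares a settings dictionary against a small checklist. The setting names, thresholds, and rules are hypothetical examples rather than an official baseline; real scanners work from much longer, maintained rule sets.

```python
# Hypothetical checklist: setting name -> (check function, human-readable rule).
CHECKLIST = {
    "debug": (lambda value: value is False,
              "debug mode must be disabled"),
    "tls_enabled": (lambda value: value is True,
                    "TLS must be enabled"),
    "min_password_length": (lambda value: isinstance(value, int) and value >= 12,
                            "passwords need at least 12 characters"),
    "session_timeout_minutes": (lambda value: isinstance(value, int) and 0 < value <= 30,
                                "sessions must expire within 30 minutes"),
}


def scan_config(config, checklist=CHECKLIST):
    """Return a list of findings; an empty list means every check passed."""
    findings = []
    for key, (is_ok, rule) in checklist.items():
        if key not in config:
            findings.append(f"{key}: setting is missing ({rule})")
        elif not is_ok(config[key]):
            findings.append(f"{key}: {rule}")
    return findings


# A sample configuration with two violations and one missing setting.
sample_config = {"debug": True, "tls_enabled": True, "min_password_length": 8}
for finding in scan_config(sample_config):
    print(finding)
```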

6. Test automation

Test automation is critical to continuous testing, as it eases the burden of managing testing needs and saves engineers time and effort that they can spend on creating better test cases. The ever-growing adoption of agile methodologies promotes both test automation and continuous integration practices as the cornerstone of effective software development.

The process of test automation typically contains several consecutive steps:

- preliminary project analysis

- framework engineering

- test case development

- test case implementation

- iterative framework support

Benefits of test automation. Automation can be applied to almost every testing type at every level. It minimizes the human effort required to run tests and reduces time-to-market and the cost of errors because the tests can run up to 10 times faster than in the manual testing process. Moreover, automated tests can cover over 90 percent of the code, unveil issues that might not be visible in manual testing, and scale as the product grows.

Test automation in numbers. According to the 2023 Software Testing and Quality Report by the test management platform TestRail, a manual approach still prevails, with 40 percent of tests being automated on average. Yet, automation shows a steady increase: in 2020, its coverage was only 35 percent.

Open-source frameworks lead the pack of most popular tools, even among large enterprises. Selenium, Cypress, JUnit, TestNG, Appium, Cucumber, and Pytest proved to be the most popular instruments across a range of testing types — from regression to API testing.

We invite you to check out our article that compares the most popular automated testing tools, including Selenium, Katalon Studio, TestComplete, and Ranorex.

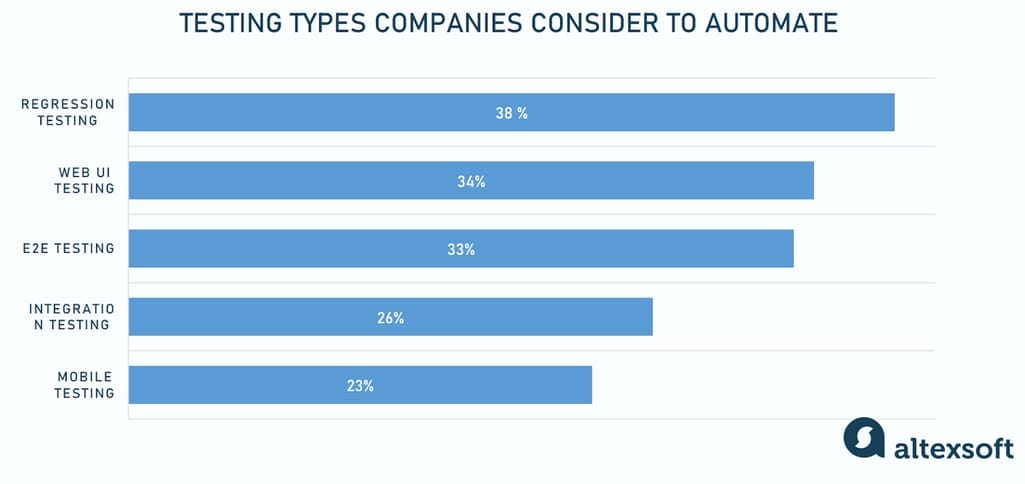

Developing automated testing is indicated as the biggest challenge by 39 percent of organizations. At the same time, participants of TestRail’s survey mentioned automating more tests as their top objective, followed by reducing bugs in production. Most often, companies consider adopting automation for regression testing (38 percent), Web UI testing (34 percent), end-to-end (E2E) testing (33 percent), integration testing (26 percent), and mobile testing (23 percent).

Automated testing activities organizations consider adopting. Source: 2023 Software Testing and Quality Report

It’s worth noting that the most effective testing practices combine manual and automated testing activities to achieve the best results.

7. Quality assurance specialist

A quality assurance specialist is a broad term encompassing any expert involved in QA activities. In small organizations, this position can mean being a master of all trades, responsible for the end-to-end QA process, from quality standards development to test execution. Yet, with business growth, the duties are spread across different roles. Below is a list of key positions to form a full-fledged QA team.

Software test engineer or tester is a QA specialist who mainly relies on manual methods. These experts develop and execute test cases, document bugs and defects, and report test results. The role is mainly concerned with software conformity to functional requirements. Testers don’t necessarily need to know programming languages. They must rather be advanced users of various testing tools.

Test analyst is an entry-level position with a focus on business problems, not technical aspects of testing. Analysts interact with stakeholders and BAs to clarify and prioritize test requirements. Another area of responsibility is designing and updating procedures and documents, such as test plans, coverage reports, and summaries.

QA automation engineer must have programming skills to write testing scripts. People in this position also set up automation environments and prepare data for repeated testing. Other tasks include developing an automation framework and integrating tests into a CI/CD pipeline.

QA engineer still does manual testing but has a wider range of responsibilities than a software test engineer (STE). For example, QA engineers analyze existing processes and suggest improvements to the development cycle so that errors can be prevented rather than detected at later stages.

Software development engineer in test (SDET) combines testing, development, and DevOps skills to create and implement automated testing processes. In many companies, the SDET title is used interchangeably with QA automation engineer. Yet, unlike the latter, an SDET is capable of performing source code reviews and evaluating code testability to refine the code quality. This specialist must have a solid software engineering background, master several programming languages, and understand the ins and outs of the system being developed.

Test architect is a senior specialist who designs complex test infrastructures, identifies the tech stack for QA processes, and works out high-level test strategies. This position is typically present in large enterprises.

For more information, read our articles on the SDET role and responsibilities and other QA engineering roles.

8. Software testing trends

As a part of technological progress, testing is continually evolving to meet ever-changing business needs as it adopts new tools that allow the tester to push the boundaries of quality assurance.

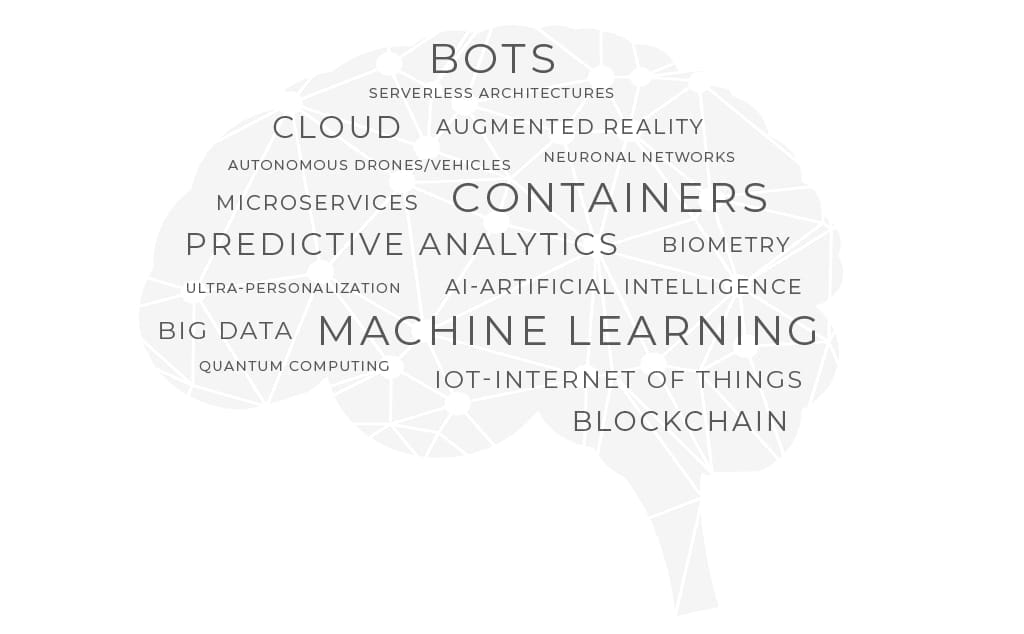

“Hot topics” in software testing in the coming years according to the PractiTest survey

New subjects expected to affect software testing in the near future are security, artificial intelligence, and big data.

- Security

The World Quality Report survey shows that security is one of the most important elements of an IT strategy. Input from security is vital to protecting the business. Security vulnerabilities can seriously tarnish brand reputation. For those reasons, test environments and test data are considered the main challenges in QA testing today.

Data protection and privacy laws also raise concerns about the security of test environments. If an environment contains personal test data and suffers a security breach, businesses must notify the authorities immediately. That is why it is important for test environments to be able to detect data breaches.

Most popular in cloud environments, security testing intends to uncover system vulnerabilities and determine how well the system can protect itself from unauthorized access, hacking, code damage, and similar threats. When it deals with the application’s code, security testing relies on the white box testing method.

The four main focus areas in security testing:

- Network security

- System software security

- Client-side application security

- Server-side application security

It is highly recommended to include security testing as part of the standard software development process.

- Artificial Intelligence and the rise of generative AI

Although AI test automation solutions are not well-established yet, the shift towards more intelligence in testing is inevitable. Cognitive automation, machine learning, self-remediation, and predictive analysis are promising techniques for the future of test automation.

That said, a Boston-based startup Mabl already simplifies functional testing by combining it with machine learning. “As we met with hundreds of software teams, we latched on to this idea that developing... is very fast now, but there’s a bottleneck in QA,” says Izzy Azeri, a co-founder of Mabl. “Every time you make a change to your product, you have to test this change or build test automation.”

With Mabl, there is no need to write extensive tests by hand. Instead, you show the application the workflow you want to test, and the service performs those tests. Mabl can even automatically adapt to small user interface changes and alert developers to any visual changes, JavaScript errors, broken links, and increased load times.

One of the most promising technologies in terms of testing is generative AI. It can be employed to improve different aspects of the STLC, including early defect detection, producing synthetic data for tests, automating test case development, and more. Experiments revealed that generative-AI-fueled tools help refactor test cases 30-40 percent faster and decrease bugs by 40 percent compared with traditional instruments.

- Big Data

Managing huge volumes of data that are constantly uploaded on various platforms demands a unique approach to testing, as traditional techniques can no longer cope with existing challenges.

Big data testing aims to assess the quality of data against various characteristics like conformity, accuracy, duplication, consistency, validity, and completeness. Other types of QA activities within the Big Data domain are data ingestion testing, database testing, and data processing verification, which employs tools like Hadoop, Hive, Pig, and Oozie to check whether business logic is correct.
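To make a few of these characteristics concrete, the sketch below checks a handful of records for completeness, validity, and duplication. It is a plain-Python illustration on in-memory data. The record layout, the field names, and the deliberately naive email rule are assumptions for the example, and at real volumes the same checks would run inside distributed tools like the ones mentioned above.

```python
# Hypothetical sample of ingested records.
records = [
    {"id": 1, "email": "ann@example.com", "age": 34},
    {"id": 2, "email": "bob@example.com", "age": None},  # incomplete: age is missing
    {"id": 3, "email": "not-an-email", "age": 27},       # invalid: malformed email
    {"id": 1, "email": "ann@example.com", "age": 34},    # duplicate of the first record
]


def check_quality(rows, required=("id", "email", "age")):
    """Return the positions of rows that fail each data quality check."""
    seen_ids = set()
    report = {"incomplete": [], "invalid": [], "duplicate": []}
    for index, row in enumerate(rows):
        # Completeness: every required field must be present and not None.
        if any(row.get(field) is None for field in required):
            report["incomplete"].append(index)
        # Validity: a deliberately simple rule, an email must contain "@".
        email = row.get("email")
        if email is not None and "@" not in email:
            report["invalid"].append(index)
        # Duplication: the same id must not appear twice.
        if row.get("id") in seen_ids:
            report["duplicate"].append(index)
        seen_ids.add(row.get("id"))
    return report


print(check_quality(records))
```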

Conclusion

In 2012, Knight Capital Americas, a global financial firm, experienced an error in its automated routing system for equity orders: the team deployed untested software to a production environment. As a result, the company lost over $460 million in just 45 minutes, which pushed it to the brink of bankruptcy.

History knows many more examples of software incidents which caused similar damage. Yet, testing remains one of the most disputed topics in software development. Many product owners doubt its value as a separate process, putting their businesses and products at stake while trying to save an extra penny.

Despite a widespread misbelief that a tester’s only task is to find bugs, testing and QA have a greater impact on the final product's success. Having a deep understanding of the client’s business and the product itself, QA engineers add value to the software and ensure its excellent quality. Moreover, by applying their extensive knowledge of the product, testers can bring value to the customer through additional services, like tips, guidelines, and product use manuals. This results in reduced cost of ownership and improved business efficiency.

References

- The Three Aspects of Software Quality – http://www.davidchappell.com/writing/white_papers/The_Three_Aspects_of_Software_Quality_v1.0-Chappell.pdf

- Foundations of Software Testing: ISTQB Certification – https://www.amazon.com/Foundations-Software-Testing-ISTQB-Certification/dp/1844809897

- Software Engineering: A Practitioner’s Approach – https://www.amazon.com/Software-Engineering-Practitioners-Roger-Pressman/dp/0073375977/

- Test Strategy – http://www.satisfice.com/presentations/strategy.pdf

- Test Strategy and Test Plan – http://www.testingexcellence.com/test-strategy-and-test-plan/

- IEEE Standard for Software and System Test Documentation 2008 – https://standards.ieee.org/findstds/standard/829-2008.html

- ISTQB Worldwide Software Testing Practices REPORT 2017-18 – https://www.turkishtestingboard.org/en/istqb-worldwide-software-testing-practices-report/

- Defining Exploratory Testing – http://kaner.com/?p=46

- Evaluating Exit Criteria and Reporting – http://www.softwaretestingmentor.com/evaluating-exit-criteria-and-reporting-in-testing-process/

- Securities Exchange Act of 1934 – https://www.sec.gov/litigation/admin/2013/34-70694.pdf

- World Quality Report 2017-18 | Ninth Edition – https://www.sogeti.com/globalassets/global/downloads/testing/wqr-2017-2018/wqr_2017_v9_secure.pdf

- 2023 State of Testing report – https://www.practitest.com/state-of-testing/?utm_medium=button&utm_source=qablog

- DevOps Testing Tutorial: How DevOps will Impact QA Testing? – https://www.softwaretestinghelp.com/devops-and-software-testing/

- Benefits of Generative AI in Ensuring Software Quality – https://katalon.com/resources-center/blog/benefits-generative-ai-software-testing

- The 2023 Software Testing and Quality Report – https://www.testrail.com/resource/the-2023-software-testing-quality-report/