The lines between art and technology are blurring. Machine learning models are rapidly advancing new tools for text, music, and image creation.

And it’s not happening in isolation – exciting outcomes are emerging when these areas begin to connect. From text-to-speech narrations to visual art that changes based on sound and music, AI is creating more dynamic and engaging experiences.

In this article, we are exploring how AI models generate a variety of content forms – specifically sound and voice. We’ll explain how different generative models are learning to speak, sing, and create tunes that are not only unique but also quite realistic.

It's no longer just sci-fi. The future of sound and voice generation is already here, and there is one question we have yet to answer — are we ready for the challenges that come with sound transformation?

Let’s find out!


What is sound and voice generation?

Sound and voice generative models use machine learning techniques and a vast amount of training audio data to create new audio content.

You can create custom sound effects, music, and even realistic human speech.

Background and ambient noises. Generative models can be great for creating background noises for games, videos, and other production scenarios. The main training data they use are nature soundscapes, traffic noise, crowds, machinery, and other ambient environments.

Music generation. If you’re a musician who’s stuck on a melody and needs a little push, you can use AI to either create new pieces or finish existing ones based on your preferences. For this task, models are trained on large datasets of existing music grouped by genre, including instrumental tracks, vocals, and musical notes.

Text-to-speech. Text-to-speech is another great example of what can be done with AI sound and voice generation models. It allows you to create different voices. As you can imagine, the training data for this particular format is mainly recordings of human voices speaking in different languages, accents, and emotional tones.

How machines “see” sounds: waveforms and spectrograms

To create new sounds or voices, generative models analyze large datasets of sounds represented by either waveforms or spectrograms and find patterns in them during the training process. Once the model is trained, it can generate new sounds.

Essentially, there are two main ways to represent sounds digitally: waveforms and spectrograms.

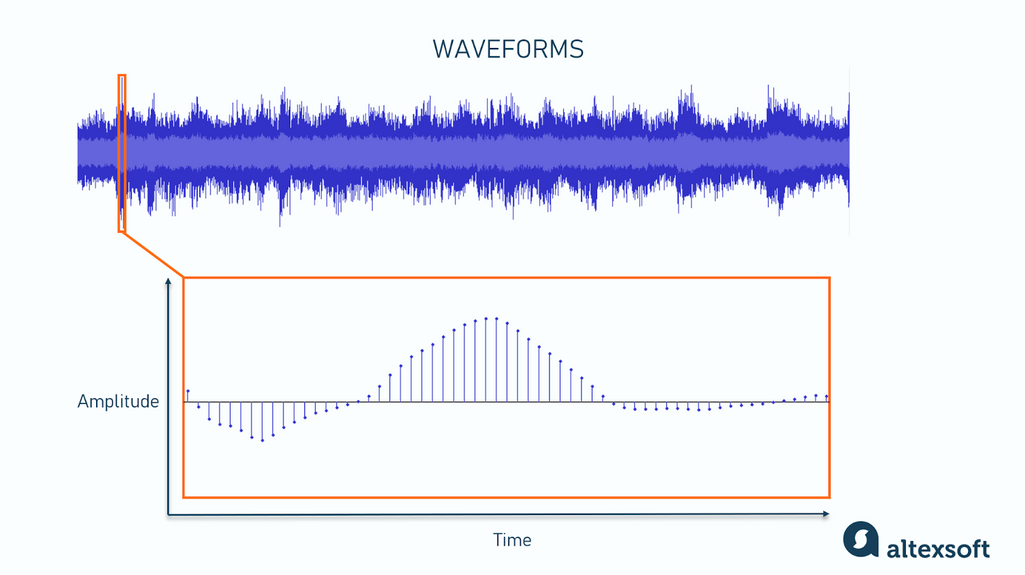

Waveforms, or sound waves, are raw representations of sound with two parameters: time and amplitude. Amplitude is the height of the wave, and it defines loudness. If you zoom in on an image of a waveform, you’ll see points (samples) on the plot, each describing the amplitude at a given moment in time. Together, these points define the waveform.

Each dot in a waveform represents a sound sample. For example, CD audio has 44,100 samples in one second (44.1 kHz sample rate).

Waveforms are heavy in terms of the data that needs to be processed, since each second may require from 44,100 to 96,000 samples, depending on the sound quality you’re looking for. Still, generative models such as WaveNet by Google DeepMind and Jukebox by OpenAI were built to work with waveforms.
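To make the idea of samples concrete, here is a minimal sketch that builds one second of a pure tone as a list of amplitude values. The function name `sine_waveform`, the 440 Hz pitch, and the 0.5 amplitude are illustrative assumptions, and the example uses only the Python standard library.

```python
import math

SAMPLE_RATE = 44_100  # CD-quality sample rate in Hz


def sine_waveform(frequency_hz, duration_s, sample_rate=SAMPLE_RATE, amplitude=0.5):
    """Return a list of amplitude samples for a pure tone."""
    n_samples = round(duration_s * sample_rate)
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * n / sample_rate)
        for n in range(n_samples)
    ]


samples = sine_waveform(frequency_hz=440.0, duration_s=1.0)
print(len(samples))  # how many samples one second of audio needs
print(samples[:3])   # the first few amplitude values
```

Raising `sample_rate` to 96,000 produces more than twice as many values for the same second of sound, which is why raw waveforms are expensive to model.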

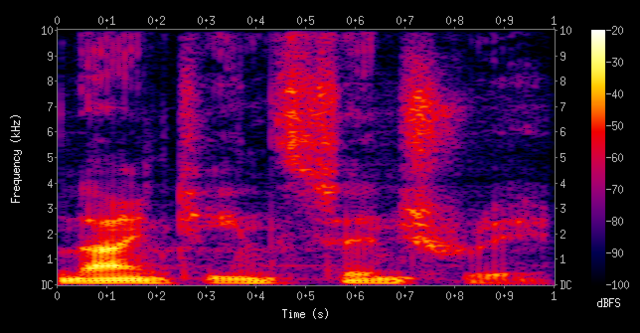

Spectrograms are another way to represent sounds visually and digitally. Unlike waveforms, spectrograms have three parameters of sound: time, frequency, and amplitude. They show how loud the sound is at each frequency at each moment in time. This representation requires less data and computation resources for model training.

A spectrogram of a person talking. Source: Wikimedia

So, spectrograms are also a common way to train both sound generation and sound recognition models.
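As a rough illustration of how a waveform becomes a spectrogram, the sketch below slices a signal into overlapping frames and measures how strong each frequency is in every frame. The frame size, hop length, and 8 kHz sample rate are assumptions chosen to keep the example fast, and the naive Fourier transform stands in for the optimized FFT routines that real audio pipelines use.

```python
import cmath
import math


def spectrogram(samples, frame_size=256, hop=128):
    """Return a list of frames; each frame holds one magnitude per frequency bin."""
    # A Hann window softens frame edges to reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size) for n in range(frame_size)]
    n_bins = frame_size // 2 + 1  # keep non-negative frequencies only
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        for k in range(n_bins):
            # Naive discrete Fourier transform for bin k (slow but explicit).
            total = sum(
                frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n in range(frame_size)
            )
            magnitudes.append(abs(total))
        frames.append(magnitudes)
    return frames


sample_rate = 8_000  # assumed low rate to keep the example fast
tone = [math.sin(2 * math.pi * 1_000 * n / sample_rate) for n in range(2_048)]
spec = spectrogram(tone)
loudest_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(spec), len(spec[0]))          # time frames, frequency bins
print(loudest_bin * sample_rate / 256)  # frequency of the loudest bin in Hz
```

Each row of `spec` is one moment in time, each column is one frequency, and the stored value is the loudness: the three parameters described above.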

Once the model has learned relationships and patterns of sounds by looking at such features as frequency, time, and amplitude, it can use this knowledge to create entirely new waveforms and spectrograms.

What are the key technologies behind sound generation?

There are several main types of generative machine learning models used for sound generation, including autoregressive models, variational autoencoders (VAEs), generative adversarial networks (GANs), and transformers.

Autoregressive models

An autoregressive model predicts the next part in a sequence by analyzing and taking into account the previous elements.

Autoregressive models are normally used to create statistical predictions on time series. They forecast natural phenomena, economic processes, and other events that change over time.

In the case of sound generation, an autoregressive model uses its knowledge of sound patterns and the previous elements (like the starting melody or spoken words) to predict what sound should come next.

This prediction process continues one step at a time. The model takes its previous prediction, combines it with the initial input, and predicts the sound that follows. With each prediction, the model builds a longer and longer sequence of sounds.
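The step-by-step loop described above can be sketched with a toy predictor. This is only an illustration under stated assumptions: the two hand-picked coefficients stand in for what a trained network such as WaveNet would learn, and the helper names `predict_next` and `generate` are hypothetical.

```python
import math


def predict_next(history, coefficients):
    """Predict the next sample as a weighted sum of the most recent samples."""
    recent = history[-len(coefficients):]
    # coefficients[0] weights the newest sample, coefficients[1] the one before it.
    return sum(c * x for c, x in zip(coefficients, reversed(recent)))


def generate(seed, coefficients, n_steps):
    """Extend the seed one sample at a time, feeding each prediction back in."""
    sequence = list(seed)
    for _ in range(n_steps):
        sequence.append(predict_next(sequence, coefficients))
    return sequence


# Assumed coefficients for illustration: for a pure tone with angular step w,
# x[t] = 2*cos(w)*x[t-1] - x[t-2] holds exactly, so the loop continues the tone.
w = 2 * math.pi * 440 / 44_100
coefficients = [2 * math.cos(w), -1.0]
seed = [math.sin(w * 0), math.sin(w * 1)]
audio = generate(seed, coefficients, n_steps=200)
print(len(audio))
```

A real model replaces the fixed weighted sum with a deep network and looks at far more than two previous samples, but the generation loop has the same shape.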

These AI models can generate very realistic and detailed audio, sometimes indistinguishable from real sounds.

One of the great examples of autoregressive models is WaveNet created by DeepMind. It’s been trained to analyze raw audio waveforms and predict the next elements based on the previous ones — generating highly accurate and natural sounds.

Variational autoencoders (VAEs)

First introduced in 2013 by Diederik P. Kingma and Max Welling, variational autoencoders have since become a popular type of generative model.

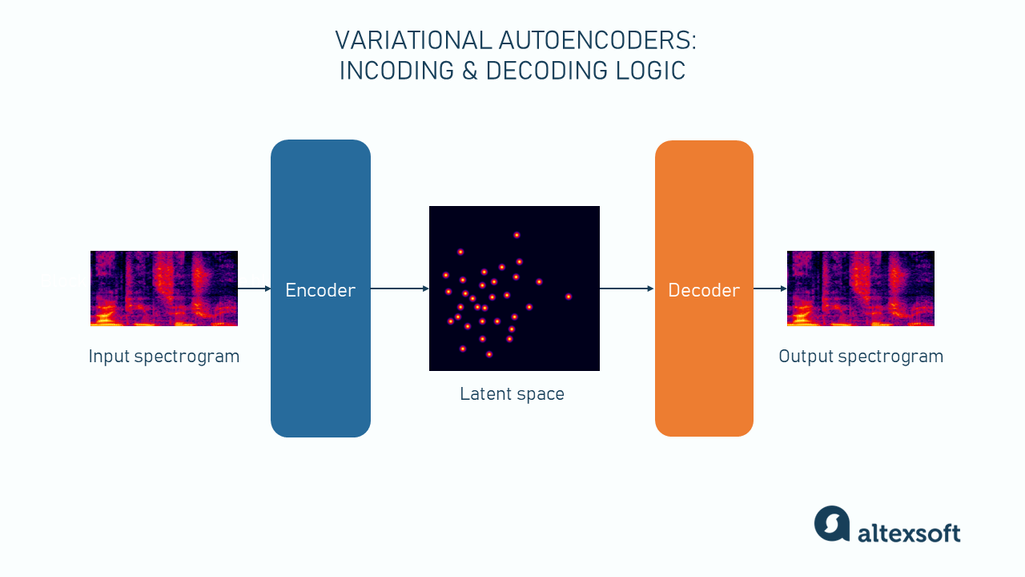

They are unsupervised neural networks that analyze and retrieve essential information from the input data. VAE architecture consists of two major parts – the encoder and the decoder networks.

During the training, the encoder learns to compress complex data, such as a spectrogram or waveform, into a smaller set of data points — a latent space representation.

In simple words, the latent space is a compressed, simplified, and organized version of the original data. It’s sort of an outline of the original data that can be used to recreate the initial input. In the case of VAEs, data in a latent space is stored not as fixed points but rather as a probability distribution over points. This means that this “outline,” while capturing the characteristics of the data, still allows for some, well… variation if we were to interpret what this outline means.

The decoder, on the other hand, learns to do exactly that. It tries to reconstruct the original input from the latent space.

How VAEs work

To generate new music or sound, you must sample some data from the latent space and let the decoder recreate a new spectrogram or waveform from it. Obviously, to receive predictable results and sample the correct data to generate music from, say, a text description, you must have some other mechanism of natural language processing (NLP) in place to sample correct points in the latent space.

For example, Google’s MuLan employed for MusicLM -- which we’ll describe in a bit -- does exactly that. It links text descriptions of music to the music itself.
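The sampling step described above (drawing a point from the latent distribution and letting the decoder turn it into output) can be sketched as follows. Everything numeric here is a made-up assumption: the mean and log-variance stand in for a trained encoder’s output, and the small linear `decode` function stands in for a trained decoder network.

```python
import math
import random


def sample_latent(mean, log_var, rng):
    """Draw one latent vector z = mean + std * noise (the reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mean, log_var)]


def decode(z, weights, bias):
    """Toy linear decoder: maps a latent vector to a short output frame."""
    return [sum(w * zi for w, zi in zip(row, z)) + b for row, b in zip(weights, bias)]


rng = random.Random(0)
# Hypothetical encoder output for one input: a distribution, not a fixed point.
mean, log_var = [0.5, -1.0], [-2.0, -2.0]
# Hypothetical decoder parameters; a trained VAE would learn these.
weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, -1.0]]
bias = [0.0, 0.0, 0.1, 0.0]

variation_a = decode(sample_latent(mean, log_var, rng), weights, bias)
variation_b = decode(sample_latent(mean, log_var, rng), weights, bias)
print(variation_a)
print(variation_b)  # close to variation_a, but not identical
```

Because each call draws a slightly different point around the same mean, the two outputs are related but not the same, which is the source of the variation in a VAE.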

Generative Adversarial Networks (GANs)

First introduced in 2014, GANs, or generative adversarial networks, are also models based on deep learning.

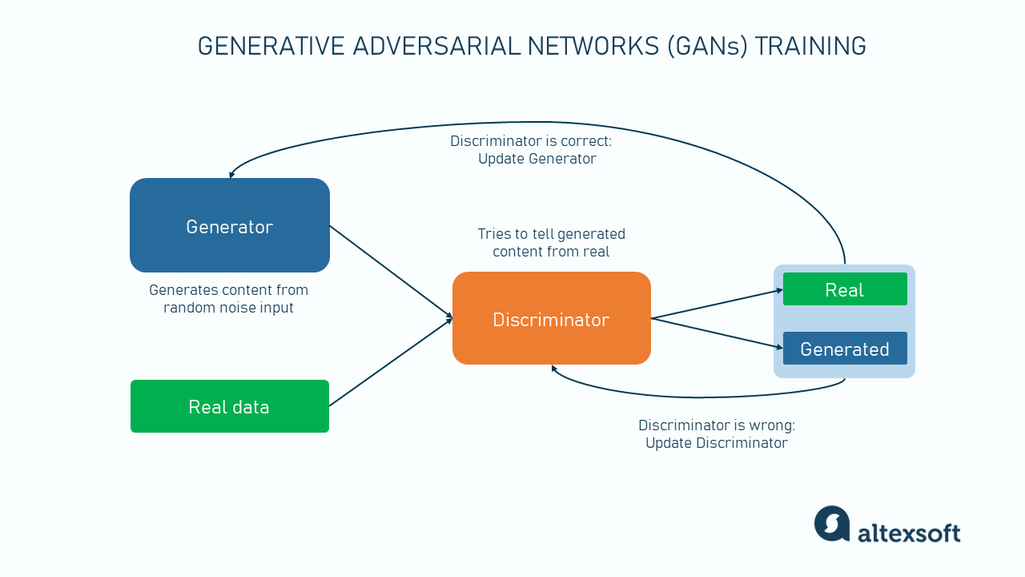

A GAN consists of two networks: the generator and the discriminator.

During model training, the generator starts with random noise and tries to create some content, i.e., images, music, or sound in our case. Its main goal is to fool the discriminator into thinking that it’s real data.

During training, the discriminator receives both real data (e.g., real sounds) and the data produced by the generator. It must recognize which sounds or noises are real and which are not. So it has only two possible outputs: fake or real.

The idea is that the generator and the discriminator don’t work in isolation. If the generator manages to fool the discriminator, the discriminator model gets updated. If the discriminator correctly flags generated content, then the generator gets updated.

How GANs are trained

As the two models get better at their work, you can eventually train a strong generator.
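The back-and-forth between the two networks can be sketched with a deliberately tiny example. It is not how production audio GANs are built: the “generator” is a single linear function, the “discriminator” is a single logistic unit, the “real data” is an assumed toy distribution of numbers around 4, and the gradients are written out by hand. In common practice both networks are updated in alternation on every step, each following the gradient of its own loss, and the sketch follows that convention.

```python
import math
import random


def sigmoid(s):
    s = max(-60.0, min(60.0, s))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-s))


def train_gan(steps=500, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.1, 0.0  # discriminator: p(real) = sigmoid(w * x + c)
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # assumed toy "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: push p(real) toward 1 on real data and toward 0 on fakes.
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_w = (sum((p - 1) * x for p, x in zip(d_real, real))
                  + sum(p * x for p, x in zip(d_fake, fake))) / batch
        grad_c = (sum(p - 1 for p in d_real) + sum(d_fake)) / batch
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: adjust a and b so the updated discriminator rates fakes as real.
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_a = sum((p - 1) * w * z for p, z in zip(d_fake, noise)) / batch
        grad_b = sum((p - 1) * w for p in d_fake) / batch
        a -= lr * grad_a
        b -= lr * grad_b
    return (a, b), (w, c)


generator, discriminator = train_gan()
print(generator)      # learned generator parameters (a, b)
print(discriminator)  # learned discriminator parameters (w, c)
```

GAN training is known to be unstable, so the exact numbers depend on the learning rate, batch size, and random seed. The point of the sketch is the alternating update pattern, not the final values.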

Transformers

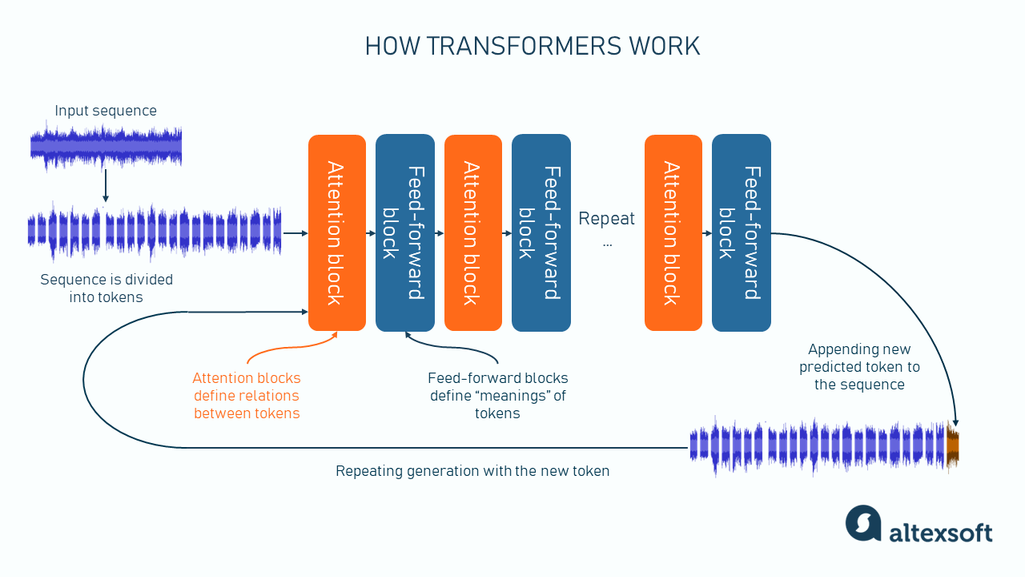

Transformer architecture has taken the world of deep learning by storm as it proved to be revolutionary in text generation with GPT models. Besides writing texts, it can generate images and sounds. In fact, most state-of-the-art sound and music generators now employ transformers alongside other architectures.

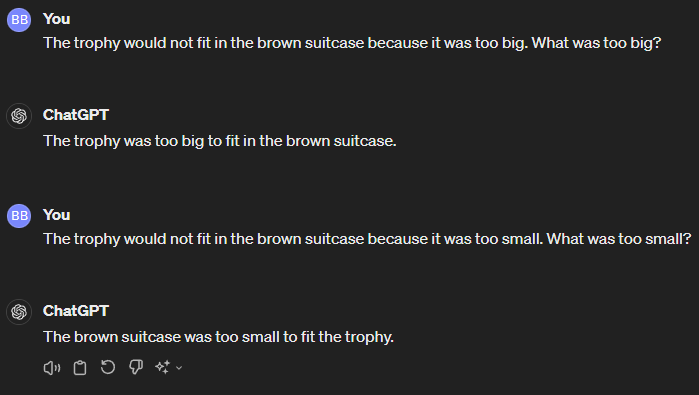

The design part that makes transformers so special is the attention mechanism, the capability to see how different parts of text, sound, or image relate to each other. The somewhat famous Winograd Schema Challenge aimed at testing a machine’s reasoning capability and previously considered unsolvable for computers is now completely manageable for such transformers as GPT because of the attention mechanism.

GPT 4 easily solves the challenge.

This ability to see the existing context, map relations between elements in it, and predict what word must go next applies to sounds and music as well.

How does it work? In very simple terms, a transformer breaks a sentence, waveform, or image into a sequence of tokens (the elements of this sequence) and tries to figure out how these elements relate to each other and what they mean in order to predict the next element of the sequence. Once this element is predicted, it’s added to the sequence, and the process repeats itself.

A very simplified version of what happens inside a transformer during generation

So, the core of the transformer is made up of attention blocks, which define relations between tokens, and feed-forward networks, which define the eventual “meaning” behind tokens.
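To show what “defining relations between tokens” looks like numerically, here is a minimal sketch of scaled dot-product attention, the calculation at the heart of an attention block. The three token vectors are assumed toy values. The sketch also leaves out the learned query, key, and value projections, the multiple attention heads, and the masking that real transformers use.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)  # how much this token attends to each token
        mixed = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors (assumed values); the raw vectors are reused as
# queries, keys, and values to keep the example short.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence. The first token gives more weight to the second, which resembles it, than to the third, which does not.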

Keep in mind that modern and advanced sound and music generator systems may employ and combine several of those architectures or their principles to arrive at their results. For example, Jukebox by OpenAI uses both variational autoencoders to compress input sounds into latent space and a transformer for the generation of new audio from that latent space.

Popular music and voice generators

Now that we know how these sophisticated algorithms work, let's look at some of the recent models and tools you can use to generate sound.

Most popular software for music and voice generation

Google MusicLM: AI Test Kitchen experiment

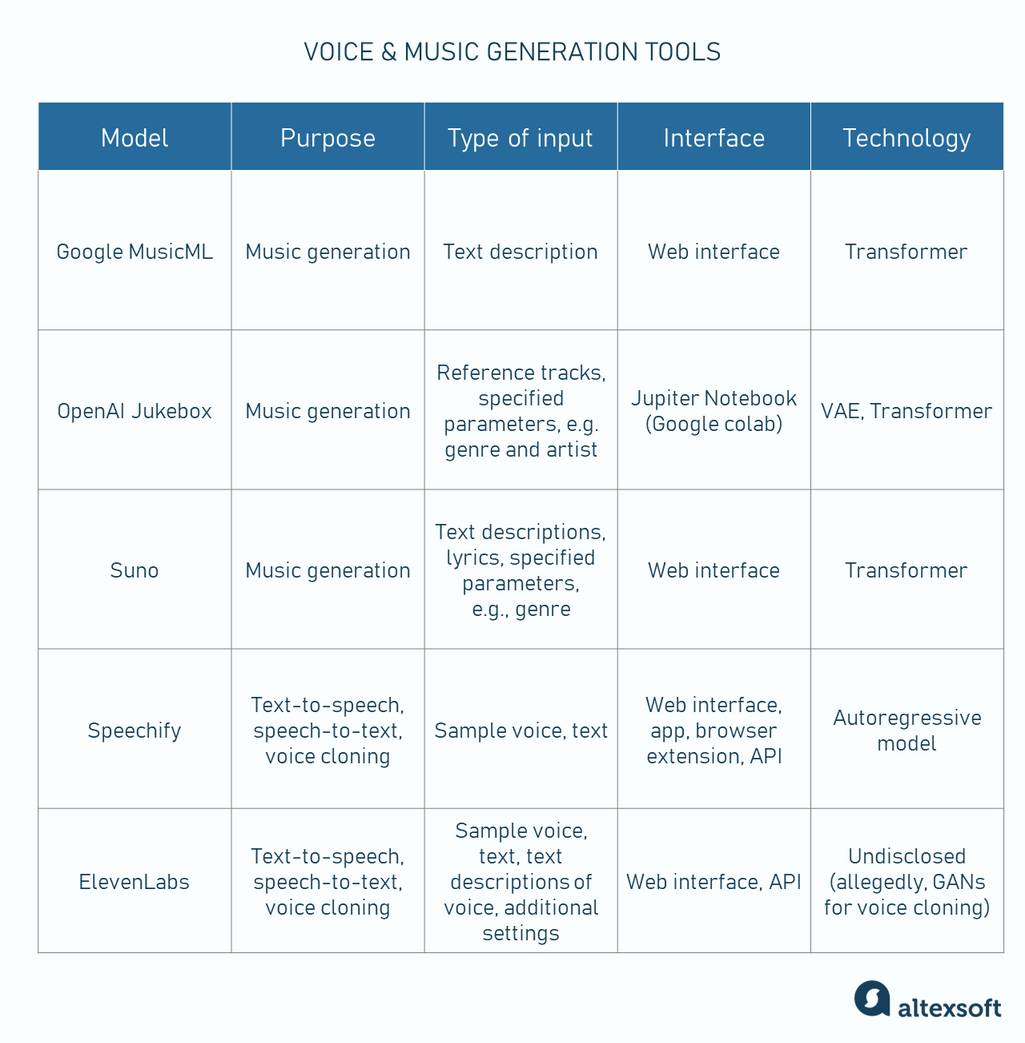

MusicLM by Google AI generates high-fidelity music based on your text descriptions. Simply describe the desired sound ("soothing violin melody with a driving drumbeat"), and MusicLM creates a song.

It is trained on a massive dataset of music and descriptions and even surpasses the prior model AudioLM, generating extended pieces (up to 5 minutes) with impressive audio quality.

It is a versatile tool for musicians because it generates music in a variety of styles, from classical to electronic. Currently, the tool is available as a part of the AI Test Kitchen, Google’s platform for AI experiments.

Jukebox: OpenAI’s music generator

Jukebox by OpenAI uses an autoencoder model to compress raw audio and transformers to generate and upsample music in that compressed space.

OpenAI describes the training data as follows: “To train this model, we crawled the web to curate a new dataset of 1.2 million songs (600,000 of which are in English), paired with the corresponding lyrics and metadata from LyricWiki.”

Imagine thousands of different kinds of artists, albums, and lyrics with different moods being given to autoencoder and transformer models.

It’s a great tool for generating novel musical ideas to produce within a vast array of genres whether it’s classical or hip-hop. The quality is also impressive, making outputs sound very detailed and realistic.

The tool hasn’t made it to the ChatGPT interface just yet (while DALL-E has), so you have to run it using Google Colab, which is Google’s Jupyter Notebook environment in the cloud.

Suno: user-friendly music generation

Unlike Jukebox and MusicLM, Suno is a user-friendly platform for generating music that doesn’t require complex preparations to start making some AI-generated music.

Besides making music, Suno also generates vocals and even writes lyrics if you ask it to. So, you can just give it a simple prompt and it will start from there, or you can provide your own lyrics and define style.

Similar to other sound generators, Suno employs transformer architecture to convert text descriptions into music.

Speechify: voice cloning, text-to-speech generation, and transcription

Speechify is a popular text-to-speech generator tool initially designed for people with dyslexia to hear texts rather than to read them. Currently, the platform has a variety of services.

For instance, you can choose among 100+ voices, including celebrities, to narrate your texts in different languages or upload your own voice and let the model try to replicate it while generating the voiceover. Besides, it can transcribe speech (speech-to-text), generate digital avatars, and automatically translate videos.

The vision behind Speechify voice generation is to help video and audio producers cut the time and cost of making last-minute changes to scripts and voiceovers. You can access the service via a web interface, an app, browser extensions, and a soon-to-be-released API.

The generator tool uses an autoregressive model.

ElevenLabs: voice cloning and synthesis, generation

ElevenLabs is pretty similar to Speechify and provides relatively the same set of services, from voice cloning and text-to-speech generation to audio dubbing and speech-to-text.

Unlike Speechify, it also offers an interface to create a fully synthetic voice rather than cloning an existing one. Generally, ElevenLabs provides more granular control over generation, allowing you, for instance, to choose between faster or more quality-oriented models. While Speechify has yet to release its API, ElevenLabs already has one.

Ultimately, the choice between ElevenLabs, Speechify, and another popular player, Murf AI, boils down to subscription specifics, interface, and your subjective preferences for voice quality.

Allegedly, ElevenLabs is using GANs for their voice cloning technology, but they don’t directly disclose the underlying architectures.

Most of the popular sound generation platforms today focus on either music or voice generation, with very few covering general sound generation. This may change once video generation platforms such as OpenAI’s Sora become ubiquitous, as the new videos will reasonably require sound arrangement as well.

How sound and music generation is used

The power of sound and voice generation is no longer just science fiction. From composing music to creating realistic speech, these technologies are rapidly transforming various industries.

So you might be wondering how different markets are using AI sound generation for their own benefit.

Advertising and marketing

Sound and voice generation are slowly transforming the advertising sector.

Businesses are now using AI to create custom audio elements for their ads, and it’s not just catchy tunes but also voiceovers that are tailored to a specific target audience.

Take Agoda, for example. They’ve recently launched a campaign using sound generation technology to transform one single video into 250 unique ones.

Agoda invited Bollywood star Ayushmann Khurrana for their commercial and then created 250 different videos, adapting the footage and language based on viewer location.

Each video shows a different travel destination while the AI is changing the voiceover, lip-syncing the actor’s dialogue, and even adding relevant background imagery.

This is only the beginning for the marketing industry, and voice generation is completely transforming how businesses create personalized and engaging ads.

Video production

Another industry that’s charging ahead with sound and voice generation is the creative field of video production.

We are not just talking about small indie movies or commercials. Lucasfilm has also collaborated with Respeecher, a Ukrainian startup that uses archival recordings and AI to generate actors’ voices for movies and video games.

They have de-aged James Earl Jones’ voice for Darth Vader in the Disney+ series “Obi-Wan Kenobi.” They have also worked with Lucasfilm to bring back Luke Skywalker’s voice for “The Book of Boba Fett.”

Customer service

The customer service field is making full use of sound and voice generation models. Companies are not only replacing chat functions with AI; you can now book appointments with an AI assistant over a phone call as well.

AI can offer personalized support, handle multiple different languages, and provide 24/7 assistance for customers when humans are not available.

Sensory Fitness gym is a great example of how businesses can leverage AI to maximize their revenue and minimize losses.

During their expansion, they decided to tackle one of their biggest customer service issues, so they implemented FrontdeskAI's AI assistant, Sasha.

Sasha is a conversational assistant who answers calls with a natural human voice and handles appointments, reschedules, and inquiries while remembering customer details.

The results have been quite impressive as Sasha has already helped to generate an additional $1,500 in monthly revenue from new members who wouldn't have joined otherwise and $30,000 in annual cost savings.

Read our dedicated article about LLM API integration to learn how text-to-speech, speech-to-text, speech-to-speech, and other LLM modalities are integrated into business apps. We also recommend reading our piece on AI agents to learn how they can take customer support AI to the next level.

Education

Text-to-speech conversion with natural-sounding voices has become a great asset for visually impaired students and makes learning more inclusive for other specific learning challenges, such as dyslexia.

For example, the Learning Ally Audiobook Solution offers numerous downloadable books that are narrated by AI and remove reading barriers for kids from kindergarten through high school. In fact, it’s been reported that 90 percent of the students became independent readers after using Ally Audiobooks.

And yet, there are several concerns we should address.

What are potential ethical concerns surrounding sound generation?

Let’s talk about the misuse of technology and what can be done about that.

Deepfakes and the misuse of voice cloning

What would you do if you received a sudden call from a family member asking for your credit card number? Or if your child called and said they’d been kidnapped and you must send money immediately? Sounds terrifying, right? Unfortunately, it’s been a reality for many families. Deepfakes are making phone scams more sophisticated and believable.

Voice cloning has also been misused to destroy people’s reputations and undermine pre-election political processes.

Looking at the plight of one of the top candidates in Slovakia’s election as an example, we’ll see how easy it is to sway people’s opinions.

His voice was first used to create a recording of him saying how he’d already rigged the election. Even worse, there was another recording of him talking about raising beer prices.

It may be unclear whether this particular event was the main reason why he lost the election, but it has certainly shown us that we are not ready for the challenges that deepfakes and voice cloning pose.

The existing tools are finding some solutions, though. For instance, ElevenLabs provides a tool to check a recording and define whether it was generated with the help of ElevenLabs’ models. On top of that, if you’re employing their most advanced voice cloning option, you’ll have to go through an additional verification step.

Ownership and copyright issues of AI-generated sounds

Who owns the copyright of music or sounds created by AI models? Is it the developer, the user who prompts the generation, or the AI itself?

Now you can find thousands of tracks on YouTube with famous people collaborating together or Eminem rapping about cats. But who owns the rights to these videos?

Drake AI - ‘Heart on my sleeve’ (Ft. The Weeknd AI)

Some entities, such as the US Copyright Office, have already taken steps to avoid registering AI work. The EU, however, is taking a different approach, saying they will register AI work only if it is original enough. But what are the criteria of originality? This opens up another can of worms.

But one thing is clear: copyright laws need to be revised to include the new form of music creation.

That is why authorities are converging on one general proposal: to create a whole new category of intellectual property specifically for AI-generated music. However, this approach only protects the creator of the AI system, not prompt writers.

Potential for bias in models trained on imbalanced datasets

We already know that sound generation models rely on the training data to learn and produce more realistic sounds.

But what happens when the data is not diverse? Unfortunately, the models also inherit the same biases.

A model trained on a dataset with limited voice demographics might generate voices with unintended biases in gender, age, or ethnicity.

This has been happening with speech recognition systems that can struggle to understand different accents, leading to misunderstandings or problems in following instructions accurately.


According to a 2020 study published in PNAS (Proceedings of the National Academy of Sciences), there is a significant racial bias in speech recognition models. The systems tested made roughly twice as many errors when transcribing audio from Black speakers as from white speakers.

The future of sound and voice generation

Will sound generation take over the music industry?

Should we be wary of what we say to our friends and family over the phone?

Even simple questions such as these sound scary because answers are still unknown.

But one thing is for sure — voice generative models are only getting better, promising even more realistic audio synthesis. The main focus will remain on achieving more natural and expressive generated audio as emotional speech synthesis is gaining more and more popularity.

Imagine musicians using AI to experiment with new ideas or filmmakers getting incredible sound effects.

Plus, AI-powered tools have the potential to become more accessible and cost-effective. This could help independent creators, artists, and small businesses do more with sound, creating a richer and more diverse creative landscape.

Companies like Cyanite.ai are introducing prompt-based music searches. So imagine describing the type of music you want to listen to and getting personalized playlists.

However, as AI sound and voice models advance, ethical concerns will become even more alarming. Without clear rules and regulations in place, people’s individual voice data can be easily misused.

Authorities are already creating laws to clarify ownership and usage rights for AI-generated audio content. Measures might target the misuse of AI-generated audio, particularly in preventing deception or misinformation.