Selenium has long been the backbone of web test automation. But as applications evolve faster and UIs grow more dynamic, QA teams face familiar pain points: brittle locators, endless maintenance, and flaky tests that erode trust in results.

AI can change that. By pairing Selenium with machine learning (ML) and large language models (LLMs), QA automation engineers can address its core limitations and build more robust testing pipelines.

This article explores how AI transforms Selenium testing, from auto-generating test scripts and self-healing locators to visual UI validation, flaky test triage, and continuous integration and delivery (CI/CD). We'll also review the best-known Selenium-compatible AI tools and share best practices.

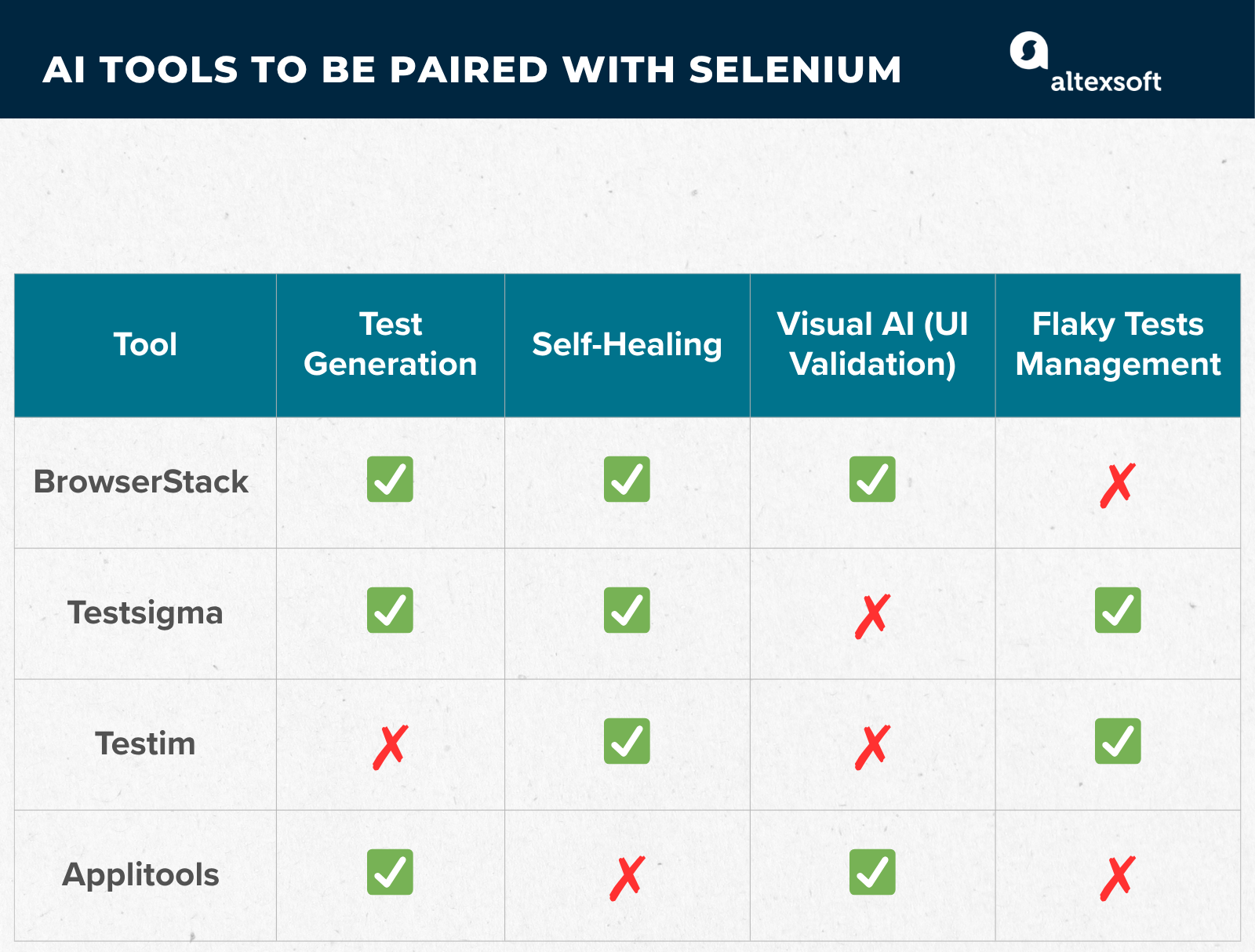

AI tools to be paired with Selenium for various tasks

AI-assisted test generation

Selenium's core feature is its ability to automate user actions in web browsers: clicking elements, filling in forms, navigating pages, and so on. This lets engineers create test scripts that mimic user interactions across browsers and devices. However, writing and maintaining these tests is time-consuming.

Generative AI can help. It interprets application behavior along with requirements and user stories, and then automatically produces test cases that cover key workflows.

By analyzing historical data (such as previous test runs, defect trends, or analytics on real user behavior), AI-driven tools create optimized test sets that focus on the most critical paths through an application. These tools also synthesize test data, increasing coverage and reducing the need to create inputs manually.

Tools to be paired with Selenium for test generation: BrowserStack Low Code Automation, Testsigma, Applitools Autonomous.

Smart, self-healing locators

One of the most significant pain points in Selenium automation is brittle locators (XPath expressions, CSS selectors). Locators tell your test where to find a button, form field, or menu item on a web page. They are tied to specific attributes of a web element (like id, class, or name) or to its exact position in the Document Object Model (DOM), the structural blueprint of a webpage.

Locators are static by default and hard-coded into the test script. Even a small front-end change—a renamed class, an extra HTML layer wrapping an element, or a restyled button—can cause dozens of tests to fail.

An AI-driven, self-healing mechanism, on the other hand, can adapt to UI changes, allowing the test to continue running.

How does self-healing work?

Multi-attribute analysis. Rather than relying on a single attribute, such as ID or class, AI considers a comprehensive set of clues—including text, visual position, neighboring elements, and HTML hierarchy.
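To make multi-attribute analysis concrete, here is a minimal Python sketch. It assumes the page has already been captured as simple element snapshots. The `Element` class is hypothetical, not a Selenium type, and the weights and the 0.6 threshold are illustrative assumptions. Commercial tools learn or tune such values rather than hard-coding them.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Element:
    """Hypothetical snapshot of a DOM element (not a Selenium class)."""
    tag: str
    text: str = ""
    attrs: Dict[str, str] = field(default_factory=dict)
    parent_tag: str = ""
    position: Tuple[int, int] = (0, 0)  # (x, y) in pixels


# Illustrative weights: visible text counts most, tag and parent least.
WEIGHTS = {"tag": 1.0, "text": 3.0, "attrs": 2.0, "parent_tag": 1.0, "position": 1.5}


def similarity(recorded: Element, candidate: Element) -> float:
    """Weighted share (0..1) of clues the candidate shares with the recorded element."""
    score = WEIGHTS["tag"] * (recorded.tag == candidate.tag)
    score += WEIGHTS["text"] * (recorded.text.strip().lower() == candidate.text.strip().lower())
    if recorded.attrs:
        shared = sum(1 for k, v in recorded.attrs.items() if candidate.attrs.get(k) == v)
        score += WEIGHTS["attrs"] * shared / len(recorded.attrs)
    score += WEIGHTS["parent_tag"] * (recorded.parent_tag == candidate.parent_tag)
    dx = recorded.position[0] - candidate.position[0]
    dy = recorded.position[1] - candidate.position[1]
    # Full credit in the same spot, fading linearly to zero at 200 px away.
    score += WEIGHTS["position"] * max(0.0, 1 - (dx * dx + dy * dy) ** 0.5 / 200)
    return score / sum(WEIGHTS.values())


def best_match(recorded: Element, candidates: List[Element], threshold: float = 0.6) -> Optional[Element]:
    """Return the most similar candidate, or None if nothing clears the threshold."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: similarity(recorded, c))
    return best if similarity(recorded, best) >= threshold else None
```

Consider a developer who renames `id="submit-btn"` to `id="submit"` while the text, class, parent, and approximate position stay the same. The renamed button still scores far above a neighboring "Cancel" button, so no single changed attribute breaks the match.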

Backup strategies. If the main locator fails, the system automatically switches to alternatives. It can compare visual patterns, analyze text content, or examine the overall DOM structure to find the most likely match.
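A basic version of backup strategies is an ordered chain of locators. The Python sketch below tries each one in turn and reports which locator worked. To keep it runnable without a browser, it uses a fake page and a stand-in exception. The locator values and the `find_with_fallbacks` helper are hypothetical, while the strategy strings match Selenium's `By` constants.

```python
from typing import Callable, List, Sequence, Tuple

# (strategy, value) pairs; the strings match Selenium's By constants,
# e.g. By.ID == "id", By.CSS_SELECTOR == "css selector", By.XPATH == "xpath".
Locator = Tuple[str, str]


class ElementNotFound(Exception):
    """Stand-in for selenium.common.exceptions.NoSuchElementException."""


def find_with_fallbacks(find: Callable[[Locator], object], locators: Sequence[Locator]) -> Tuple[object, Locator]:
    """Try locators in priority order and return (element, locator_that_worked)."""
    errors: List[str] = []
    for locator in locators:
        try:
            return find(locator), locator
        except ElementNotFound as exc:
            errors.append(f"{locator[0]}={locator[1]!r}: {exc}")
    raise ElementNotFound("all locators failed; " + "; ".join(errors))


# Demo with a fake page instead of a browser: the primary ID no longer exists.
FAKE_PAGE = {
    ("css selector", "form button.primary"): "<button>Submit</button>",
    ("xpath", "//button[normalize-space()='Submit']"): "<button>Submit</button>",
}


def fake_find(locator: Locator) -> object:
    if locator in FAKE_PAGE:
        return FAKE_PAGE[locator]
    raise ElementNotFound("no such element")


SUBMIT_LOCATORS = [
    ("id", "submit-btn"),                               # primary, most specific
    ("css selector", "form button.primary"),            # structural backup
    ("xpath", "//button[normalize-space()='Submit']"),  # text-based backup
]
```

Here `find_with_fallbacks(fake_find, SUBMIT_LOCATORS)` returns the button together with the CSS locator that found it. In a real suite, `find` could wrap `driver.find_element(by, value)`, and Selenium's own `NoSuchElementException` would replace the stand-in exception.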

Pattern recognition. Using probabilistic and contextual analysis, self-healing algorithms detect patterns in how elements evolve—for example, a “Submit” button that becomes “Confirm” but stays in the same functional place on the page.

Learning and persistence. Once the system successfully finds the element through an alternate route, it remembers this fix. Over time, the AI can suggest updating your test permanently—a process sometimes called historical learning. As a result, tests become less fragile with every run.
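The learning-and-persistence step can be sketched as a small Python class. It records which backup locator healed each logical element, tries that locator first next time, and proposes a permanent update only after the same fix has worked several times in a row. The `HealingMemory` class, the JSON file format, and the default of three consecutive heals are all hypothetical choices for illustration.

```python
import json
from pathlib import Path
from typing import Dict, List, Optional, Tuple

Locator = Tuple[str, str]


class HealingMemory:
    """Remembers which fallback locator healed each logical element (hypothetical design)."""

    def __init__(self, suggest_after: int = 3) -> None:
        self.suggest_after = suggest_after
        self.history: Dict[str, List[Locator]] = {}

    def record_heal(self, name: str, locator: Locator) -> None:
        self.history.setdefault(name, []).append(tuple(locator))

    def preferred(self, name: str) -> Optional[Locator]:
        """The most recent locator that healed this element, tried first on the next run."""
        heals = self.history.get(name)
        return heals[-1] if heals else None

    def suggestions(self) -> Dict[str, Locator]:
        """Elements whose last `suggest_after` heals all used the same locator."""
        out = {}
        for name, heals in self.history.items():
            recent = heals[-self.suggest_after:]
            if len(recent) == self.suggest_after and len(set(recent)) == 1:
                out[name] = recent[0]
        return out

    def save(self, path: str) -> None:
        Path(path).write_text(json.dumps(self.history))

    @classmethod
    def load(cls, path: str, suggest_after: int = 3) -> "HealingMemory":
        memory = cls(suggest_after)
        raw = json.loads(Path(path).read_text())
        memory.history = {name: [tuple(loc) for loc in heals] for name, heals in raw.items()}
        return memory
```

Keeping suggestions separate from automatic changes means a person still decides whether to promote a healed locator into the test code.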

Tools to be paired with Selenium for self-healing: Testim, Testsigma, BrowserStack Automate.

Visual AI: detecting UI changes

Even when all functional tests pass, there can be a blind spot. As mentioned, Selenium relies on the DOM, and if the structure and attributes look correct, the test assumes the page functions properly.

However, many UI regressions are visual rather than structural: layout shifts, missing icons, misaligned buttons, or color mismatches after a CSS update. A button might be hidden behind another element or text might overflow, and a DOM-based test would never notice.

Visual AI bridges this gap by comparing what’s rendered on screen, not just the HTML behind it.

Instead of doing a strict pixel-by-pixel comparison, which often flags harmless visual variations as bugs, AI-powered systems analyze semantic differences. They understand that minor shifts in spacing or color gradients are normal, but misplaced text or cropped images are not.

Visual AI also recognizes content that naturally changes—like personalized dashboards, rotating ads, or timestamps—and intelligently ignores it during comparison. This helps eliminate noise and focuses only on meaningful visual regressions.
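Visual AI relies on trained models that can't be reproduced in a few lines. The Python sketch below is a deliberately simple, non-AI approximation of two ideas from this section. First, it tolerates small rendering differences instead of demanding exact pixel equality. Second, it masks regions known to change, such as a timestamp. Images are assumed to be equal-size grayscale grids, and the tolerance values are arbitrary.

```python
from typing import List, Sequence, Tuple

Image = List[List[int]]             # grayscale pixel values 0..255, row by row
Region = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def _masked(row: int, col: int, regions: Sequence[Region]) -> bool:
    return any(top <= row < bottom and left <= col < right for top, left, bottom, right in regions)


def visual_diff(baseline: Image, current: Image, ignore: Sequence[Region] = (),
                pixel_tolerance: int = 16, max_changed_ratio: float = 0.01) -> Tuple[bool, float]:
    """Return (is_regression, changed_ratio), skipping masked regions and small intensity shifts."""
    if len(baseline) != len(current) or any(len(a) != len(b) for a, b in zip(baseline, current)):
        return True, 1.0  # a size change always deserves a human look
    compared = changed = 0
    for r, (row_a, row_b) in enumerate(zip(baseline, current)):
        for c, (pa, pb) in enumerate(zip(row_a, row_b)):
            if _masked(r, c, ignore):
                continue
            compared += 1
            if abs(pa - pb) > pixel_tolerance:
                changed += 1
    ratio = changed / compared if compared else 0.0
    return ratio > max_changed_ratio, ratio
```

In this sketch, a slight brightness shift across the whole page passes, and a rewritten timestamp inside an ignored region is skipped. A blacked-out block elsewhere on the page is still flagged.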

Visual AI tools to be paired with Selenium: Applitools Eyes and BrowserStack Percy.

Flaky test management

Flaky tests—those that fail intermittently—erode developer confidence, because the team can’t tell if a failure means a real bug or just bad timing. They also slow down CI/CD pipelines, as each failure triggers reruns and manual triage.

What can AI do to address this issue?

Automated Defect Triaging (ADT). When a test fails, AI analyzes the failure pattern and categorizes the failure based on the nature of the error, the affected components, and historical patterns. This helps teams quickly distinguish true defects from environmental noise.
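Real triage models are trained on large labeled histories. As a minimal illustration of categorizing by historical patterns, the Python sketch below labels a new failure with the category of its most similar past failure. It is a 1-nearest-neighbor classifier over word sets, using Jaccard similarity. The category names, sample history, and 0.3 cut-off are hypothetical, and anything too dissimilar goes to a human.

```python
import re
from typing import List, Set, Tuple


def tokens(message: str) -> Set[str]:
    """Lowercased word tokens; digits are dropped so IDs and timings don't dominate."""
    return set(re.findall(r"[a-z_]+", message.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def triage(message: str, history: List[Tuple[str, str]], min_similarity: float = 0.3) -> str:
    """Label a failure with the category of the most similar past failure (1-nearest neighbor)."""
    new = tokens(message)
    best_label, best_score = "needs_human_review", 0.0
    for past_message, label in history:
        score = jaccard(new, tokens(past_message))
        if score > best_score:
            best_label, best_score = label, score
    return best_label if best_score >= min_similarity else "needs_human_review"


HISTORY = [  # hypothetical, previously labeled failures
    ("TimeoutException: waiting for element #checkout to be clickable after 10s", "timing"),
    ("SessionNotCreatedException: could not start a new session, grid node unreachable", "environment"),
    ("AssertionError: expected cart total 59.90 but was 0.00", "product_defect"),
]
```

A timeout on a different button is labeled `timing`, and a wrong order total is labeled `product_defect`. An unfamiliar error is sent to a person rather than guessed.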

In particular, AI-powered tools can detect unstable conditions (for example, a page that hasn't fully loaded or a delayed AJAX call, that is, an asynchronous request that updates part of the page without a full reload) and automatically apply adaptive waits or smart retries. This reduces false failures caused by timing or synchronization issues, which are among the most common sources of flakiness in Selenium tests.
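Selenium itself ships explicit waits (`WebDriverWait` with `expected_conditions`). The adaptive logic AI tools add on top is proprietary. The framework-agnostic Python sketch below shows the two underlying mechanics. First, it polls a readiness check with a growing interval instead of a fixed sleep. Second, it retries only errors already classified as transient. The helper names and all parameter values are illustrative assumptions.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, interval: float = 0.1,
               backoff: float = 1.5, max_interval: float = 2.0) -> T:
    """Poll `condition` until it returns a truthy value, growing the poll interval each time."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(min(interval, remaining))
        interval = min(interval * backoff, max_interval)


def retry(action: Callable[[], T], attempts: int = 3,
          retry_on: Tuple[Type[BaseException], ...] = (TimeoutError,), delay: float = 0.5) -> T:
    """Re-run a step a bounded number of times, but only for errors known to be transient."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise
            time.sleep(delay * attempt)  # linear backoff between attempts
    raise AssertionError("unreachable")
```

Restricting `retry_on` matters. Retrying an `AssertionError` would hide real defects, which is exactly the distinction triage is meant to preserve.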

Failure grouping. In large test suites, a single root cause can produce dozens of failures. AI tackles this with clustering algorithms that group similar failures by code coverage area, stack trace, or execution time.
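Clustering in commercial tools typically uses learned similarity across many signals. A simpler, common starting point is to build a signature from the exception line and the top stack frame, mask volatile details (numbers, hex addresses, quoted values), and group failures that share the signature. The Python sketch below does this. The Java-style trace format and the `top_lines=2` default are assumptions.

```python
import re
from collections import defaultdict
from typing import Dict, List


def normalize(line: str) -> str:
    """Mask details that vary between occurrences of the same failure."""
    line = re.sub(r"0x[0-9a-fA-F]+", "<hex>", line)
    line = re.sub(r"'[^']*'|\"[^\"]*\"", "<str>", line)
    line = re.sub(r"\d+", "<n>", line)
    return line.strip()


def group_failures(failures: Dict[str, str], top_lines: int = 2) -> Dict[str, List[str]]:
    """Map each failure signature to the names of the tests that produced it."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for test_name, trace in failures.items():
        lines = [normalize(line) for line in trace.splitlines() if line.strip()]
        groups["\n".join(lines[:top_lines])].append(test_name)
    return dict(groups)
```

In this sketch, two checkout tests that time out on the same page-object method after different durations share a signature and collapse into one group. A missing coupon field forms its own group.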

Deduplication. AI compares failure messages and bug descriptions to detect duplicate defects automatically, leading to fewer redundant tickets.
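Production tools usually compare failures semantically, often with text embeddings. A lightweight approximation from Python's standard library is `difflib.SequenceMatcher`, whose `ratio()` scores string similarity from 0 to 1. The sketch below links a new failure summary to the most similar open ticket when the score clears a threshold. The 0.8 value and the ticket IDs are hypothetical.

```python
from difflib import SequenceMatcher
from typing import List, Optional, Tuple


def find_duplicate(new_summary: str, open_tickets: List[Tuple[str, str]],
                   threshold: float = 0.8) -> Optional[str]:
    """Return the ID of the most similar open ticket if it is similar enough, else None."""
    best_id, best_ratio = None, 0.0
    for ticket_id, summary in open_tickets:
        ratio = SequenceMatcher(None, new_summary.lower(), summary.lower()).ratio()
        if ratio > best_ratio:
            best_id, best_ratio = ticket_id, ratio
    return best_id if best_ratio >= threshold else None
```

For example, "Checkout: Pay button not clickable on Firefox" would be attached to an existing Chrome ticket with nearly the same wording instead of opening a new one. Character-level similarity misses paraphrases, which is one reason real tools use semantic models.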

Over time, AI models also learn which tests or environments are more prone to random failures, adjusting execution strategies and prioritization accordingly.
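One simple, widely used flakiness signal is how often a test's outcome flips between consecutive runs. A test that fails every time is probably catching a real bug, while one that alternates is probably flaky. The Python sketch below computes that flip rate from pass/fail history and applies a hypothetical scheduling policy. The 0.3 threshold, the lane names, and the ordering rule are assumptions, not any tool's actual behavior.

```python
from typing import Dict, List


def flip_rate(results: List[bool]) -> float:
    """Share of consecutive run pairs whose outcome changed (pass->fail or fail->pass).

    A test that always fails scores 0.0 (likely a real bug); one that alternates scores high.
    """
    if len(results) < 2:
        return 0.0
    flips = sum(1 for prev, cur in zip(results, results[1:]) if prev != cur)
    return flips / (len(results) - 1)


def plan_execution(history: Dict[str, List[bool]], flaky_threshold: float = 0.3) -> Dict[str, List[str]]:
    """Hypothetical policy: flaky tests go to a non-blocking lane; stable tests stay blocking,
    with the ones whose last run failed scheduled first for faster feedback."""
    blocking: List[str] = []
    quarantined: List[str] = []
    for name, results in history.items():
        lane = quarantined if flip_rate(results) >= flaky_threshold else blocking
        lane.append(name)
    # False (last run failed) sorts before True; the sort is stable for ties.
    blocking.sort(key=lambda name: history[name][-1] if history[name] else True)
    return {"blocking": blocking, "quarantined": quarantined}
```

A test with history pass, fail, pass, pass, fail, pass has a flip rate of 0.8 and is quarantined. A test that started failing three runs ago and kept failing stays in the blocking lane and runs first.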

Tools to be paired with Selenium for flake management: Testim and Testsigma.

AI-powered test automation tools to use with Selenium

When choosing an AI tool to pair with Selenium, consider three key criteria:

- integration ease (Can your existing Selenium scripts or grid run side by side with the tool?);

- targeted pain points (Does it fix locator brittleness, visual drift, flaky test triage, or test-suite bloat?); and

- governance/observability (Can you trace AI actions, tune its behavior, and control drift?).

The four tools discussed here hit that compatibility sweet spot with Selenium while specializing in different automation gaps.

BrowserStack: testing infrastructure with AI features

BrowserStack is best known for its cloud-based testing infrastructure, providing access to over 3,000 real browsers and devices for running Selenium, Appium, or Playwright tests at scale. In recent years, it has evolved into an AI-augmented testing platform that goes beyond simple execution.

AI-generated test cases. The BrowserStack Low Code Automation product can transform plain-language instructions into automated test scripts, helping non-technical users create tests faster without writing code.

Self-healing locators & maintenance. When Selenium tests run on BrowserStack Automate, the company's cloud product for executing Selenium suites, AI agents can detect broken locators and automatically propose or apply fixes, reducing manual maintenance.

Visual testing. Percy by BrowserStack uses AI-driven visual comparison to detect UI regressions. It distinguishes between real layout or design changes and insignificant visual noise, preventing false positives in visual testing.

Observability & smart execution. Built-in analytics identify flaky tests, analyze failure patterns, and optimize parallel execution across browsers and devices—providing faster feedback and more reliable results.

Testsigma: agentic jack of all trades

Testsigma is an agentic platform that handles test generation, execution, healing, analysis, and optimization. It automates end-to-end testing for web, mobile, APIs, and enterprise applications such as SAP and Salesforce.

You can write tests in plain English or in code; Testsigma automatically translates them into Selenium commands. It's essentially a no-code wrapper for Selenium (and Appium), but it also offers a code mode for developers.

Its AI agents are specialized and named after their roles (Generator, Runner, Analyzer, Healer, Optimizer), and each automates a particular phase of testing. For example, the Generator agent can create new tests based on requirements, the Healer fixes failing tests automatically, and the Analyzer identifies steps with high failure rates. Because the platform is built for cross-browser and cross-device coverage, it helps teams catch flaky behavior unique to each environment.

Testsigma’s Atto engine powers large-scale parallel execution, making it well-suited for continuous testing at enterprise scale.

Testim: ideal for managing locators

Testim is an AI-augmented test automation tool for custom web, mobile, and Salesforce applications. It can be layered on top of Selenium (and other frameworks).

Testim can generate test flows based on plain-English prompts. It also enhances tests by detecting repeated sequences of steps and suggesting modular components to reuse.

Its main strength, however, is locator management through Smart Locators, an ML-powered feature that captures 100+ attributes per element and dynamically adjusts selectors when the UI changes. For Salesforce applications, whose UIs are highly dynamic, it also offers AI-optimized locators and metadata-based strategies.

Applitools: visual AI for UI testing

Applitools is a visual testing platform whose core product, Eyes, uses AI to compare UI baselines and detect meaningful visual or functional differences.

Applitools integrates with Selenium through a lightweight SDK. A single command within a Selenium test captures the page during execution, and Applitools' Ultrafast Grid engine then renders and compares it across browsers and devices in parallel.

The tool links any detected visual difference to the corresponding DOM or CSS elements, helping testers locate the root cause. It also maintains and updates baselines automatically, minimizing manual review and ongoing maintenance.

Applitools also offers AI-driven test creation (including for UI and functional flows) and LLM-generated test steps in its dedicated Autonomous product.

Best practices for integrating AI with Selenium

AI can make test automation faster, smarter, and more resilient—but only if it’s integrated thoughtfully. These best practices help teams apply AI safely and effectively to Selenium testing.

Start small and measure impact

Begin by applying AI to one specific problem—like healing broken locators or detecting UI changes. Measure tangible outcomes such as reduced maintenance time, higher test pass rates, or fewer false positives. A gradual rollout provides you with data to justify broader adoption.

Integrate gradually into CI/CD

When adding AI-powered tests to a CI/CD pipeline, start in parallel mode—run them alongside traditional tests without blocking releases. Once they prove reliable, integrate them into the main pipeline. Monitor metrics like healing success rate, defect detection speed, and maintenance time saved to assess ROI.
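The parallel-mode gate and the metrics mentioned here can be expressed in a few lines. In this Python sketch, `build_passes` treats the AI suite as advisory until `ai_blocking` is switched on, and `healing_metrics` summarizes heal events. The `HealEvent` fields, especially the reviewer-confirmed flag and the minutes-saved estimate, are hypothetical record-keeping choices, not a standard format.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class HealEvent:
    test: str
    healed: bool          # did the tool find a replacement locator?
    confirmed: bool       # did a reviewer accept the replacement as correct?
    minutes_saved: float  # reviewer's estimate versus fixing the locator by hand


def build_passes(traditional_passed: bool, ai_passed: bool, ai_blocking: bool) -> bool:
    """In parallel (shadow) mode, AI results are reported but cannot fail the build."""
    return traditional_passed and (ai_passed or not ai_blocking)


def healing_metrics(events: List[HealEvent]) -> Dict[str, float]:
    healed = [e for e in events if e.healed]
    confirmed = [e for e in healed if e.confirmed]
    return {
        # Share of broken-locator incidents the tool fixed correctly.
        "healing_success_rate": len(confirmed) / len(events) if events else 0.0,
        # Share of heals a reviewer rejected: a wrong heal can mask a real bug.
        "false_heal_rate": (len(healed) - len(confirmed)) / len(healed) if healed else 0.0,
        "minutes_saved": sum(e.minutes_saved for e in confirmed),
    }
```

Tracking the false-heal rate next to the success rate helps catch a tool that "fixes" tests by latching onto the wrong element.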

Maintain observability and guardrails

AI-driven tools should never operate as black boxes. Every automated action—like updating a locator or reclassifying a test failure—should be logged and traceable.

Set up identity and access management (IAM) so that each AI agent has its own credentials and limited permissions, like a human user with a defined role. This prevents the AI from accidentally changing production systems or accessing sensitive data.

Apply AI guardrails that define what each agent may do. For example, an agent might read from test environments but never write to live ones. These restrictions keep automation safe, compliant, and predictable.
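A minimal, deny-by-default version of these controls might look like the Python sketch below. Each agent has its own policy listing the (action, environment) pairs it may perform, and every decision, allowed or not, is appended to an audit log. The agent name, actions, and in-memory log are hypothetical. A real setup would rely on your platform's IAM and a tamper-resistant log store.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class AgentPolicy:
    agent_id: str
    allowed: FrozenSet[Tuple[str, str]]  # (action, environment) pairs this agent may perform


@dataclass
class Guardrail:
    policies: Dict[str, AgentPolicy]
    audit_log: List[str] = field(default_factory=list)

    def authorize(self, agent_id: str, action: str, environment: str) -> bool:
        """Deny by default and log every decision so AI actions stay traceable."""
        policy = self.policies.get(agent_id)
        allowed = policy is not None and (action, environment) in policy.allowed
        self.audit_log.append(json.dumps({
            "ts": time.time(), "agent": agent_id, "action": action,
            "environment": environment, "allowed": allowed,
        }))
        return allowed


# Hypothetical healer agent: may read and update locators in staging only.
healer = AgentPolicy("healer", frozenset({("read", "staging"), ("update_locator", "staging")}))
guard = Guardrail({healer.agent_id: healer})
```

With this policy, `guard.authorize("healer", "update_locator", "production")` returns `False`, and the refusal itself is recorded for later review.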

Keep humans in the loop

Even as AI automates repetitive work, human oversight remains essential. QA leads should review AI-generated fixes, confirm test updates, and validate defect classifications before they're merged. This hybrid model (AI for speed, humans for judgment) ensures accuracy, compliance, and reliability.

Olga is a tech journalist at AltexSoft, specializing in travel technologies. With over 25 years of experience in journalism, she began her career writing travel articles for glossy magazines before advancing to editor-in-chief of a specialized media outlet focused on science and travel. Her diverse background also includes roles as a QA specialist and tech writer.
