People often overestimate what AI can actually do.

This article explains the concept of human in the loop (HITL), how it works in the context of AI systems, and its multi-industry use cases. To deepen the perspective, we spoke with Sergii Shelpuk, an AI technology consultant, and Ismail Aslan, a machine learning engineer at AltexSoft, who both shared insights from their experience in the field.

What is human in the loop (HITL), and why do we need it?

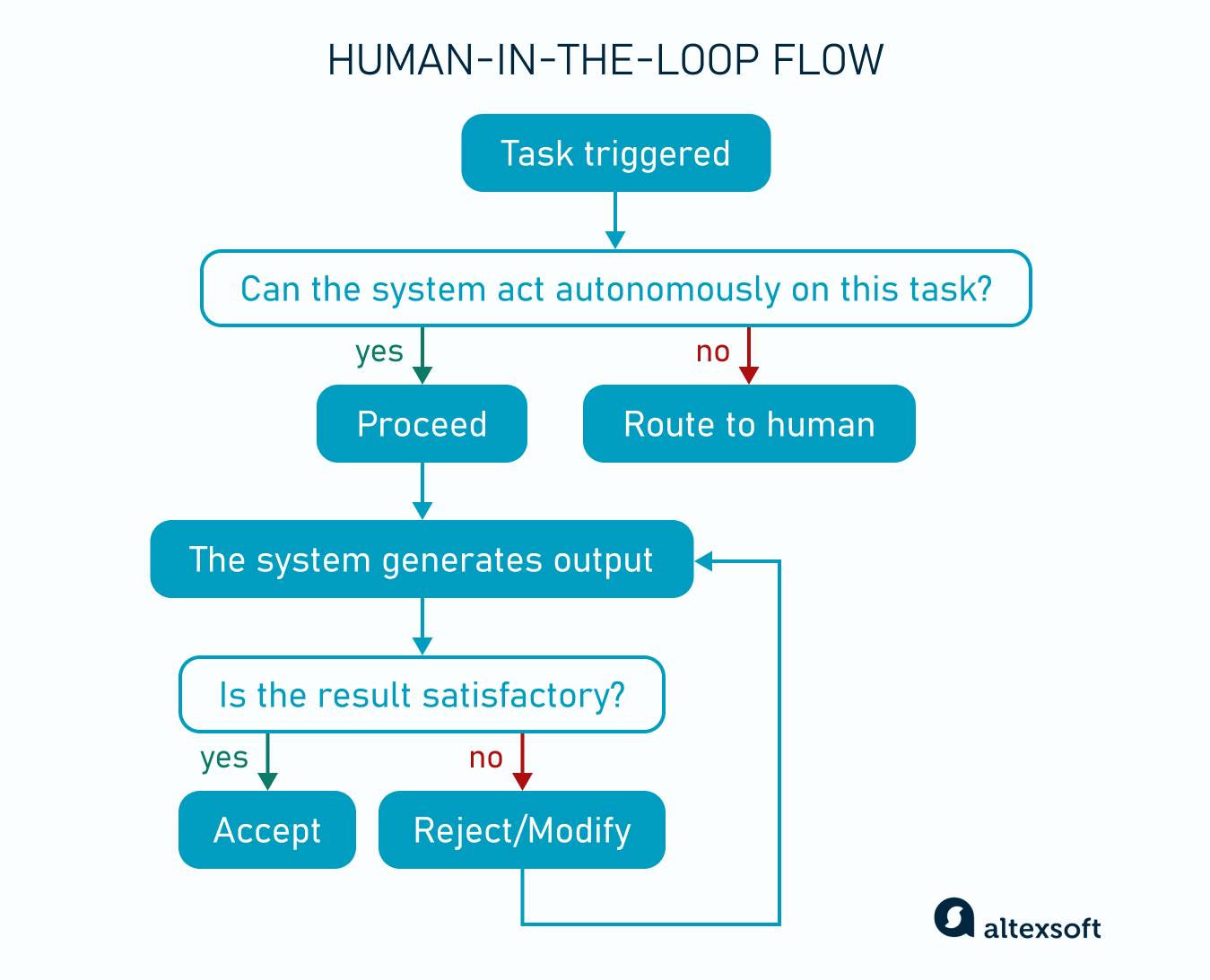

A human-in-the-loop (HITL) approach integrates human judgment, oversight, and decision-making within an otherwise automated sequence.

Human-in-the-loop flow

Not all systems rely on HITL involvement, especially those designed for low-risk, repetitive tasks. For example, spam filters that sort emails do not need user approval.

HITL is more relevant in high-stakes applications or agentic systems, where the AI must make decisions that involve nuance, the use of external tools, or sensitive outcomes. HITL also helps refine results, correct misunderstandings, and steer the conversation with LLMs.

In these cases, AI acts in copilot mode—it proposes actions that humans approve, modify, or reject. For example, when interacting with ChatGPT, a user might rephrase a question, provide feedback, or guide the model toward a more accurate or relevant answer.
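The approve, modify, or reject pattern described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the names `Decision`, `Review`, and `apply_review` are hypothetical and were chosen only for this example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"
    MODIFY = "modify"
    REJECT = "reject"


@dataclass
class Review:
    """A human reviewer's verdict on an AI proposal (hypothetical structure)."""
    decision: Decision
    edited_text: Optional[str] = None  # only meaningful when decision is MODIFY


def apply_review(proposal: str, review: Review) -> Optional[str]:
    """Return the text to act on, or None if the human rejected the proposal."""
    if review.decision is Decision.APPROVE:
        return proposal
    if review.decision is Decision.MODIFY:
        if review.edited_text is None:
            raise ValueError("A MODIFY review must include edited_text")
        return review.edited_text
    return None  # REJECT: nothing is executed
```

The key design point is that the AI's proposal never becomes an action on its own: only the value returned after the human review is passed downstream.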

Though mostly associated with AI, HITL applies to any automated system where human oversight is required in the decision-making process. Sergii Shelpuk shares his vision: “HITL is a concept grounded in the reality that machines aren’t perfect. For me, it comes down to two core principles: ensuring the quality of a system’s output and taking accountability, whether it's about managing a budget or making decisions that affect human lives.

“This can be seen across many domains: from business systems, where certain financial approvals must be made by a person, to military operations, where drones identify targets autonomously, but a human must authorize the strike.”

Ismail Aslan offered additional perspective on the importance of HITL: “What comes to mind first about HITL is content safety. AI can produce harmful material, and it's our responsibility to oversee and filter that.”

What counts as HITL? Training vs. operational oversight

There are different opinions on the role of HITL in the context of AI systems.

A popular view is that HITL spans the entire development lifecycle, from data labeling and annotation to model training, fine-tuning, and testing. By this reading, any point at which a human contributes judgment or oversight is a valid instance of HITL.

However, not everyone agrees. Sergii Shelpuk, for example, offers a different take: “Tasks like data labeling and model training, while requiring human input, are simply part of the engineering process. In these stages, humans are not collaborating with an intelligent system; they are building it, which shouldn’t be classified as HITL.

“Actual HITL involvement only begins after the system is released and starts interacting with end users. Calling earlier engineering tasks ‘HITL’ dilutes the term's meaning and overlooks its intended purpose: enabling real-time human oversight and collaboration with deployed systems to correct mistakes, validate results, and steer the AI’s behavior in the right direction.”

Models can also keep learning after deployment if continuous training was planned for during development. Once such a model is released, its interactions with users become training data. ChatGPT is an example: by default, content from conversations may be used to train future models. Users can opt out by selecting “do not train on my content” in the privacy portal.

ChatGPT also allows users to rate responses with a thumbs-up or thumbs-down. These ratings signal which responses are helpful and accurate and which are not.
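To make the idea of rating-based feedback concrete, here is a small sketch of how thumbs-up and thumbs-down votes could be collected and summarized. It does not describe how ChatGPT processes ratings internally; the `FeedbackLog` class and its methods are hypothetical and serve only as an illustration.

```python
from collections import Counter
from typing import Optional


class FeedbackLog:
    """Collects thumbs-up / thumbs-down votes per response (hypothetical helper)."""

    def __init__(self) -> None:
        self._up: Counter = Counter()
        self._down: Counter = Counter()

    def rate(self, response_id: str, thumbs_up: bool) -> None:
        """Record a single user rating for a response."""
        if thumbs_up:
            self._up[response_id] += 1
        else:
            self._down[response_id] += 1

    def approval_rate(self, response_id: str) -> Optional[float]:
        """Share of positive votes, or None if the response has no ratings yet."""
        total = self._up[response_id] + self._down[response_id]
        if total == 0:
            return None
        return self._up[response_id] / total
```

Aggregated signals like this could later be used to pick examples for evaluation or further training, which is a separate step not shown here.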

HITL challenges and how to address them

The way HITL works might seem very straightforward: A human either accepts the output of an AI model, modifies it, or rejects it entirely. However, sometimes it is not as simple as it sounds.

To illustrate the possible challenges, we’ll examine a real case of a human-in-the-loop interaction with an LLM. This case highlights that different models and domains require different approaches, and not every method will yield success.

Sergii Shelpuk recalled a past project where he worked on an LLM designed to create business proposals. From the very beginning, his team knew that HITL would be essential to the process. What they didn’t anticipate was how much human involvement would be required and how many iterations it would take to reach the final result.

The original plan with limited HITL. The initial concept was simple and efficient. The system would

- generate a first draft based on a Request for Proposal (RFP),

- let a human edit that draft, and

- use the edits to generate a final version of the proposal.

In theory, this allowed the AI to take the heavy lifting off the human while still relying on human judgment to guide the final result.

Reality check: inconsistent and unreliable output. But the system didn’t perform as expected. Even though they had trained a custom model on over a thousand real proposals, the output varied wildly—sometimes solid, sometimes completely off-base.

Why? The issue came down to the training data. These proposals were collected over many years, during which

- proposal standards evolved,

- industry guidelines shifted,

- client expectations changed, and

- teams gained new areas of expertise.

In short, the AI was attempting to condense a decade or more of inconsistent practices into a single response. For example, risk management sections became standard only in recent years. The model didn’t consistently include them, not because it failed technically but because it was mimicking the inconsistencies in its training data.

Adding deeper HITL involvement. They tried a new approach: instead of generating the entire proposal in one go, the system would

- generate a high-level structure with section headers,

- then generate content for each section.

While this gave them more control, the results were still lacking. The structure itself needed more expert input.

Including HITL at the start. To address this, they decided that a human would review and edit both the structure and the content. This approach was more successful, but still not foolproof. Important sections were still sometimes left out, simply because the model didn't understand the full context of the RFP or organizational expectations.

So they added an additional layer of human input: in addition to the RFP input, a human now specifies what sections must be included in the proposal. This helps the model stay on track and ensures critical content is never missed.
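The final safeguard, where a human lists the sections a proposal must contain, can be sketched as a simple check on the generated outline. This is an illustrative assumption about how such a check might look, not the project's actual code; `missing_sections` and `finalize_outline` are hypothetical names.

```python
from typing import List


def missing_sections(outline: List[str], required: List[str]) -> List[str]:
    """Return required sections that the generated outline does not contain.

    Titles are compared case-insensitively and with surrounding spaces ignored.
    """
    present = {title.strip().lower() for title in outline}
    return [section for section in required if section.strip().lower() not in present]


def finalize_outline(outline: List[str], required: List[str]) -> List[str]:
    """Append any required sections the model left out, keeping the original order."""
    return list(outline) + missing_sections(outline, required)
```

In practice, the missing sections could also be sent back to the model as an explicit instruction or shown to the human editor before any content is generated.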

Lessons learned. This project underscores the fact that most AI models are only as good as the framework and guidance they’re given. It’s not enough to feed a system large amounts of data and expect consistent, high-quality results, especially when that data spans years of shifting standards and practices. Moreover, these limitations may require significantly more HITL input than expected.

So HITL isn’t just a safety net; it ensures that critical thinking, domain knowledge, and up-to-date best practices are reflected in the output.

Adding the HITL functionality in agentic AI systems

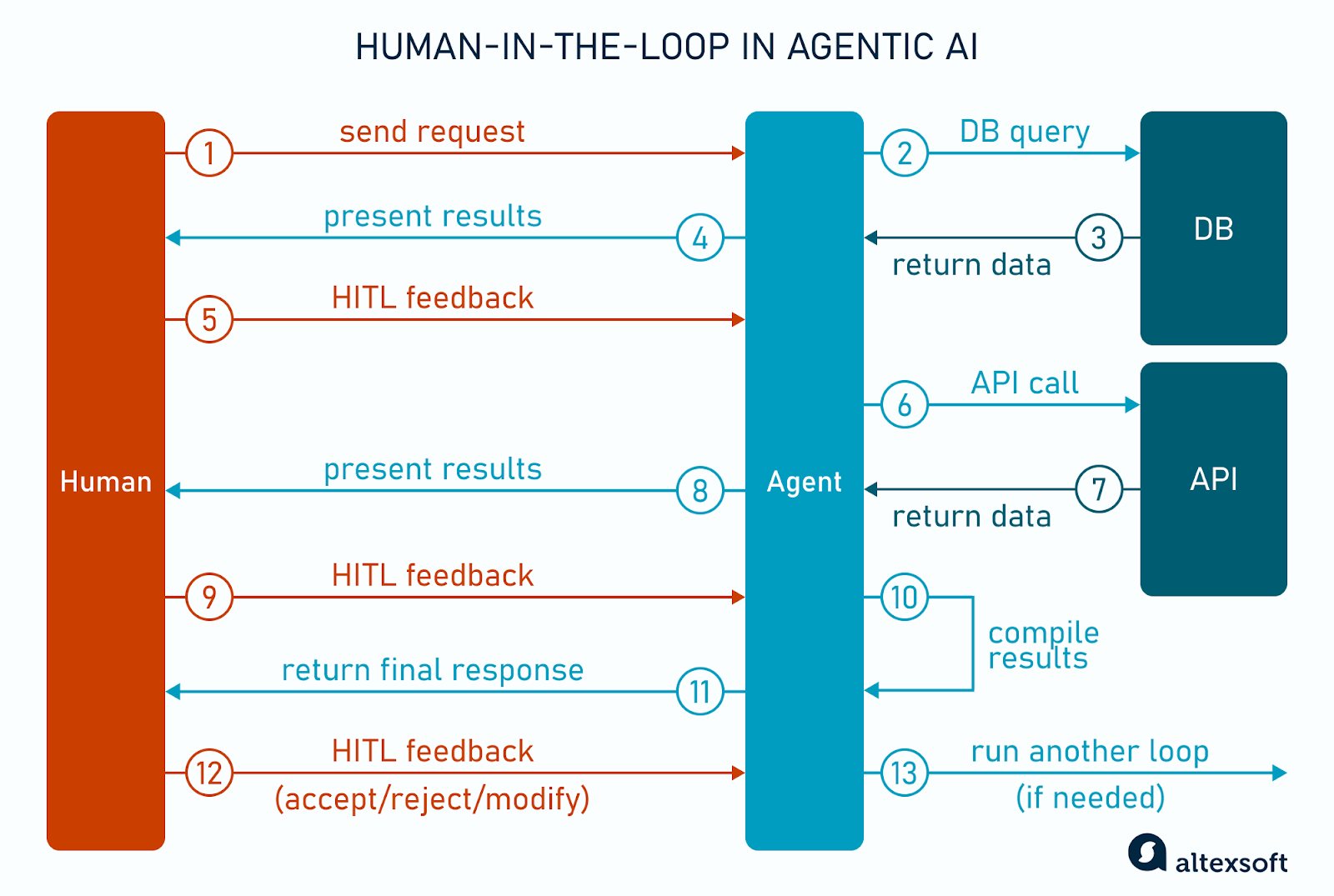

The defining capability that distinguishes AI agents from regular AI tools is function calling: the use of external tools such as APIs, databases, search engines, and calculators. When designing agentic systems, it's crucial to build workflows that halt execution at key points, allowing humans to assess the proposed actions.

For example, when a user asks a question like “What’s the weather in Prague?” the system checks whether it has a tool available to answer the request. In this case, it has a weather tool that needs a city name as input. This tool can run immediately and return the weather result to the user.
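The weather example can be pictured as a lookup in a tool registry. The sketch below is framework-agnostic and makes several assumptions: the `get_weather` stub, the `TOOLS` dictionary, and `run_tool_call` are hypothetical, and a real agent would receive the tool name and arguments from the LLM rather than from hand-written code.

```python
from typing import Callable, Dict


def get_weather(city: str) -> str:
    """Placeholder tool: a real implementation would call a weather API here."""
    return f"Weather lookup for {city} (stub result)"


# Registry of tools the agent is allowed to call (assumed structure).
TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}


def run_tool_call(name: str, arguments: dict) -> str:
    """Run the requested tool if it exists; otherwise report that it is unavailable."""
    tool = TOOLS.get(name)
    if tool is None:
        return f"No tool named {name!r} is available"
    return tool(**arguments)
```

For the Prague question, the model would be expected to produce something equivalent to `run_tool_call("get_weather", {"city": "Prague"})`.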

However, it’s important to include the HITL step for more critical tasks. This means that instead of letting the agent automatically run the tool, the system pauses and asks the user to confirm whether they want the tool to be used.

“For trivial queries, you may let the AI run freely. But for sensitive operations like deleting files from the database, you should mark them as high‑risk and insert a mandatory human confirmation step,” Ismail Aslan explains. “However, even noncritical API calls can be gated if costly. You can program the AI to check ‘Is this tool usage expensive enough to require approval?’ and, if so, make it request human approval before proceeding.”

Ultimately, the system must evaluate each tool call’s impact. If an action affects data integrity, incurs high cost, or carries safety implications, you should flag an HITL checkpoint.
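One way to express this gating logic is to attach risk and cost metadata to each tool and check it before execution. The sketch below is an assumption-laden illustration: `ToolSpec`, `needs_approval`, `execute_with_gate`, and the one-dollar threshold are all hypothetical, and the `ask_human` callback stands in for whatever confirmation UI a real system would use.

```python
from dataclasses import dataclass
from typing import Callable

# Assumed threshold: calls estimated at or above this cost need approval.
COST_APPROVAL_THRESHOLD_USD = 1.00


@dataclass
class ToolSpec:
    name: str
    high_risk: bool = False           # e.g. deletes data or has safety implications
    estimated_cost_usd: float = 0.0   # rough cost of a single call


def needs_approval(spec: ToolSpec) -> bool:
    """A tool call is gated if it is marked high-risk or is expensive."""
    return spec.high_risk or spec.estimated_cost_usd >= COST_APPROVAL_THRESHOLD_USD


def execute_with_gate(
    spec: ToolSpec,
    action: Callable[[], str],
    ask_human: Callable[[str], bool],
) -> str:
    """Run the action, pausing for human confirmation when the tool is gated."""
    if needs_approval(spec) and not ask_human(f"Allow '{spec.name}' to run?"):
        return "cancelled by human"
    return action()
```

Passing `ask_human` as a parameter keeps the gate independent of the interface, so the same check can sit behind a chat prompt, a web form, or an approval queue.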

“You can also define case‑specific policies. For example, a social media bot can auto-post sports updates, but political topics or brand-sensitive content require human review,” Ismail Aslan adds. “Frameworks like AutoGen, CrewAI, or LangGraph let you define checkpoints in multistep flows where the AI must request additional user input before proceeding.”
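A case-specific policy like the social media example can be as simple as a topic allowlist. The sketch below is plain Python and deliberately does not use the API of AutoGen, CrewAI, or LangGraph; `AUTO_POST_TOPICS` and `route_post` are hypothetical names, and we assume the topic has already been classified upstream.

```python
# Topics the bot may publish without review (assumed policy).
AUTO_POST_TOPICS = {"sports"}


def route_post(topic: str) -> str:
    """Return 'auto_post' for allowlisted topics and 'human_review' for everything else."""
    normalized = topic.strip().lower()
    return "auto_post" if normalized in AUTO_POST_TOPICS else "human_review"
```

The policy is written as an allowlist on purpose: any topic that was not explicitly cleared, including ones nobody anticipated, falls back to human review.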

Below, we’ll break down the workflow of an AI travel agent to demonstrate at which points HITL involvement is possible.

HITL in AI agent

User request. Say, the user requests: “Suggest the best ski resort in Switzerland in the last week of February and find the nearest luxury hotel there.”

Interpretation. The AI agent begins by interpreting the request and breaking it into manageable subtasks. It doesn’t complete these tasks all at once. Instead, it executes them in a loop. In this case, the request includes two key objectives: finding the best ski destination for a specific time window and locating a luxury hotel nearby with available rooms.

Retrieving ski conditions. First, the agent tackles the destination. It knows that determining the best ski resort for late February will require information on resort size, lift infrastructure, cost, and terrain quality. However, the LLM alone may not have current or domain-specific knowledge, so the agent needs to access an external vector database to retrieve relevant data.

The database returns recent snowfall, weather, and terrain data, and the agent presents the top five skiing destinations—St. Moritz, Zermatt, Verbier, Davos, and Andermatt.

HITL feedback. The user reviews the options and selects Zermatt.

Finding a luxury hotel. The agent moves on to the second step: finding a luxury hotel nearby. To do this, it has to call a hotel API. The agent retrieves a list of luxury hotels in Zermatt, ranks them based on review scores and proximity to the ski resorts, checks availability, and highlights five top-ranking hotels.

HITL feedback. The user then chooses their preferred hotel from the presented list.

Agent response. With both parts of the task completed, the agent compiles a summary response. It confirms the chosen ski resort and the selected luxury hotel.

HITL feedback. After reviewing the results, the user replies: “I want a place with fewer crowds.”

Updated agent response. Based on the new request, the agent reruns the loop, now prioritizing less crowded ski areas. It revisits the database, presents new options, and the user selects a quieter destination, such as Andermatt. Then the agent calls the hotel API again to create a new list of luxury hotels for the user to choose from.

Making the reservation. With all travel details confirmed, the agent prepares to take the final step: booking the hotel. However, due to safety constraints, the agent cannot take this action without human permission. It informs the user of the hotel’s availability and asks whether it should proceed with the booking for the last week of February.

The user approves, and the agent completes the booking.
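The walkthrough above can be condensed into a loop with three kinds of human checkpoints: choosing among options, giving free-form feedback, and approving the booking. The sketch below is a simplified assumption of such a flow, not the code of any real travel agent. All callbacks (`search_resorts`, `search_hotels`, `choose`, `get_feedback`, `approve_booking`, `book`) are hypothetical and would be backed by an LLM, a database, a hotel API, and a user interface in a real system.

```python
from typing import Callable, List, Optional


def plan_trip(
    search_resorts: Callable[[Optional[str]], List[str]],
    search_hotels: Callable[[str], List[str]],
    choose: Callable[[List[str]], str],
    get_feedback: Callable[[str, str], Optional[str]],
    approve_booking: Callable[[str], bool],
    book: Callable[[str], str],
) -> Optional[str]:
    """Run the search loop until the user is satisfied, then book only with approval."""
    preference: Optional[str] = None
    while True:
        resort = choose(search_resorts(preference))   # HITL: user picks a resort
        hotel = choose(search_hotels(resort))         # HITL: user picks a hotel
        preference = get_feedback(resort, hotel)      # HITL: None means "looks good"
        if preference is None:
            break
    if not approve_booking(hotel):                    # HITL: mandatory confirmation
        return None
    return book(hotel)
```

Note that the booking call sits outside the loop and behind its own approval check, so no amount of iteration on preferences can trigger a reservation by itself.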

Human-in-the-loop examples and use cases

Let’s discuss how various industries engage humans in AI workflows.

HITL in travel: AI agents generating itineraries

With OpenAI’s Operator and Perplexity now assisting with travel bookings, agentic AI is transforming trip planning. These platforms can build personalized itineraries in response to user requests. Still, HITL remains essential due to the nuanced nature of travel: personal preferences that AI cannot catch, cultural considerations, and complex edge cases like visa requirements all depend on human oversight.

AltexSoft has hands-on experience with such systems. Our engineers developed an AI travel agent as a single-page app that allows users to describe their trip preferences. The agent interprets the core intent of the prompt and uses function calling to trigger the appropriate external tools. For example, if the user asks for the top ten resorts in Italy, the agent identifies the need to access travel APIs to gather that information.

Here, the main role of HITL is to iteratively refine the prompts based on the agent's responses in order to receive better, more relevant results. This means adjusting criteria, clarifying vague inputs, or correcting misunderstandings. It can also include approving tool calls, as described in the section above.

For more details, check out our article describing the technologies that make up our AI travel agent.

HITL in healthcare: AI for triage, diagnoses, billing, and more

Medical AI relies heavily on having clinicians in the loop. Doctors are applying AI across a range of tasks, including automating billing code entries, drafting medical records and visit summaries, creating care plans and discharge instructions, providing live translation, assisting with diagnoses, and more.

“For example, an AI triage system, like KATE by Mednition, helps sort patient requests. It can prescreen and prioritize patients, flagging more critical cases for urgent attention. Here, AI performs the initial sorting, but a nurse or doctor verifies or overrides decisions,” Sergii Shelpuk shares.
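The "AI sorts, clinician verifies or overrides" pattern can be illustrated with a generic sketch. It is not based on how KATE or any other real triage product works: the `TriageCase` structure, the priority scale where 1 is most urgent, and the `triage_queue` function are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TriageCase:
    patient_id: str
    ai_priority: int                          # 1 = most urgent (assumed scale)
    clinician_priority: Optional[int] = None  # set when a nurse or doctor overrides

    @property
    def final_priority(self) -> int:
        """The clinician's priority wins whenever one has been entered."""
        if self.clinician_priority is not None:
            return self.clinician_priority
        return self.ai_priority


def triage_queue(cases: List[TriageCase]) -> List[str]:
    """Order patient IDs by final priority, most urgent first."""
    ordered = sorted(cases, key=lambda case: case.final_priority)
    return [case.patient_id for case in ordered]
```

Keeping the AI suggestion and the clinician's decision in separate fields also leaves a record of where the two disagreed, which can be useful for later review.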

A more advanced example is a study that proposes an HITL approach for chest X-ray diagnosis, where an intelligent assistant incorporates eye fixation data. It captures where and for how long a radiologist focuses on specific regions of a chest X-ray. The system then offers possible diagnoses based on both the X-ray image and the radiologist’s eye focus patterns.

In 2024, 66 percent of US physicians reported using AI in their clinical workflows, marking a notable increase from 38 percent in 2023. Still, doctors remain cautious, with nearly half of those surveyed emphasizing the need for stronger oversight and regulation of AI.

“I think healthcare is the last domain where people will fully trust machines to make independent decisions, if ever. The consequences of medical errors are severe—they involve high financial stakes at least and impact lives at most,” Sergii Shelpuk explains.

HITL in finance: From AI-powered risk scores to claim checks

The Bank of England and the FCA’s 2024 survey revealed that 75 percent of financial services firms already used AI in their workflows, and a further 10 percent planned to adopt it within the next three years. Fraud detection and cybersecurity are among the most popular use cases for AI in the industry, according to the survey.

Machine learning has become a valuable tool in fraud detection because it can analyze large datasets to uncover patterns and irregularities.

For example, PayPal’s Fraud Protection Advanced (FPA) is designed to help risk management teams identify and mitigate fraud. It analyzes each transaction, generates a risk score, and offers recommendations on whether to approve, block, or review the payment. Users can then inspect all transaction details in the dashboard and make final decisions.
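A score-then-review flow of this kind can be sketched generically. The example below does not reflect PayPal's actual scoring or thresholds: the 0 to 1 score range, the cutoff values, and the functions `recommend` and `final_decision` are hypothetical assumptions for illustration.

```python
from typing import Optional


def recommend(risk_score: float, review_at: float = 0.5, block_at: float = 0.9) -> str:
    """Map a risk score in [0, 1] to a recommendation (thresholds are assumed)."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= block_at:
        return "block"
    if risk_score >= review_at:
        return "review"
    return "approve"


def final_decision(recommendation: str, analyst_decision: Optional[str] = None) -> str:
    """The analyst's decision, when present, always overrides the recommendation."""
    return analyst_decision if analyst_decision is not None else recommendation
```

The model only recommends; the value that reaches the payment system comes from `final_decision`, which gives the human analyst the last word.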

HITL is also crucial for handling edge cases and approvals in industries that are heavily regulated or risk-sensitive. Insurance companies may use AI tools to scan claims, validate them against policy terms, or sort them by category (health, car, or property), with human specialists still making all critical decisions.

Read our article about AI agents and selling insurance online to learn more about the role of AI in the insurance industry.

Will humans always be in the loop?

Many wonder whether technology will ever advance to the point where humans are no longer needed in the loop. While the idea of fully autonomous systems may be appealing to some, it is very far from today’s reality.

Here’s what Ismail Aslan has to say about the topic: “When AI systems become more sophisticated and personalized, we might no longer feel the need to question their suggestions as often. Nowadays, when a travel AI agent generates an itinerary, we double-check its choices. In the future, we may get more confident and simply accept the very first itinerary the AI recommends.

“However, the level of trust we place in AI depends heavily on the stakes involved. For example, even if an AI system demonstrates diagnostic accuracy equal to that of a human doctor, I am still unwilling to give full control to the machine when it comes to my health.”

At the same time, Sergii Shelpuk doubts the very idea of AI advancing any time soon, underscoring the inevitable role of a human in the loop: “The perceived rapid progress of AI is largely driven by hype, not fundamental technological breakthroughs. While it may seem like we’re advancing quickly with each year, many popular architectures have been around for a while. For example, generative pretrained transformers (GPTs) were introduced back in 2018.

“Instead, what we’re seeing is progress in how existing technologies are applied across industries, not the progress of technology itself.”

In sum, HITL will remain essential because, regardless of advancements, there will always be nuance, ambiguous inputs, or high-stakes decisions where human intervention is critical.

Linda is a tech journalist at AltexSoft, specializing in travel technologies. She analyzes and reports on the latest technologies that shape the world of travel. Beyond the professional domain, Linda's passion for writing extends to novels, screenplays, and even poetry.
