It's common practice for companies and their marketing teams to try to predict how certain groups of customers are likely to act under certain circumstances. For this purpose, they create propensity models. When these predictive tools are built in a traditional statistical fashion, their outcomes aren't always accurate. To help companies unlock the full potential of personalized marketing, propensity models should harness the power of machine learning. Alphonso, the US-based TV data company, proves this point. With AI by its side, the company managed to optimize its advertising spend by predicting the conversion of new users and targeting those more likely to subscribe to premium. The result was impressive: prediction accuracy increased from 8 to 80 percent.

This post sheds light on propensity modeling and the role machine learning plays in making it an efficient predictive tool. You will also learn how propensity models are built and where best to start.

What is a propensity model?

Propensity modeling is a set of approaches to building predictive models that forecast the behavior of a target audience by analyzing its past behavior. In other words, propensity models help identify the likelihood of someone performing a certain action. Such actions range from accepting a personalized offer or making a purchase to signing up for a newsletter or churning.

A probabilistic estimate of whether a customer will perform a given action is called a propensity score. Equipped with these scores, you can also make an educated guess about the value each customer brings in real time.
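In standard notation, the definition can be written as shown below. The symbols are our own shorthand, not the article's. Take a customer i with features x_i and a binary outcome Y_i, where Y_i is 1 if the customer takes the action and 0 otherwise. The propensity score is the estimated probability that Y_i equals 1. Now assume the customer brings a known value v_i if they act and nothing if they don't. Multiplying the two then gives one simple estimate of that customer's expected value:

```latex
\begin{aligned}
s(x_i) &= \Pr(Y_i = 1 \mid X_i = x_i), \qquad 0 \le s(x_i) \le 1,\\
\mathbb{E}[V_i] &= s(x_i)\, v_i .
\end{aligned}
```

For example, a score of 0.8 for a customer whose conversion would be worth 50 gives an expected value of 40.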

While the origins of propensity modeling can be traced back to 1983, it's only recently that machine learning has made it possible to unlock the technique's full potential. With the right tools and experienced data science teams, companies can now build advanced propensity models and make accurate predictions.

For companies to make good predictions, propensity models have to be dynamic, scalable, and adaptive. Let's go through these characteristics in more detail.

- Dynamic means models should be built to keep up with trends, adapt, and learn from new data as it becomes available.

- Scalable means they shouldn't be built for a single-use scenario but designed to produce a wide array of predictions.

- Adaptive means models should have a proper data pipeline for regular data ingestion, validation, and deployment, so they can adjust to changes in a timely manner.

When implemented in this way, propensity modeling will help you avoid revenue losses and build more targeted and safer marketing campaigns.

Types of propensity models

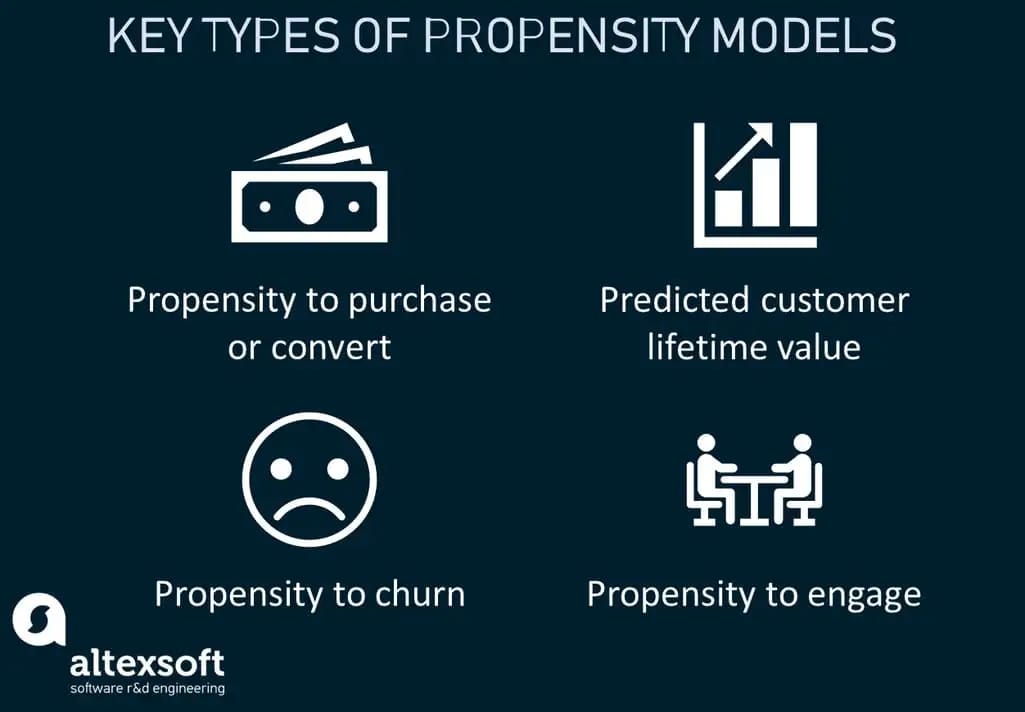

Experts distinguish several types of propensity models based on the customers they target and the problem to be solved. Here, we cover the four key ones.

Key types of propensity models

The propensity to purchase/convert model shows you which customers are more or less likely to buy your products or services, or to perform some other target action, e.g., subscribe to a newsletter. Once you know which customers are more willing to make a purchase, you can decide on customized offers. Read our article on predictive lead scoring to learn more about its application in lead conversion.

The customer lifetime value (CLV) model allows you to estimate the monetary value of the purchases/transactions each customer makes during their lifetime with your business. Such models can also show which acquisition campaigns produce customers with the highest CLV, so you know which ones deserve investment.

The propensity to churn model helps you identify which leads and customers are at risk, meaning they aren't happy with your products or services and are prone to leave your company. With this propensity score, you can run a re-engagement campaign to convince customers to stay or win them back.

The propensity to engage model allows you to evaluate how likely your leads and customers are to show proactive behavior. For example, it can produce a propensity score showing which website visitors are expected to click on an ad or which citizens are likely to vote for a given party in an election.

In some cases, you can combine different models to make more effective campaign decisions. Say, you can offer a higher discount to customers who are prone to churn yet have a higher lifetime value.
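Here is a minimal sketch of that combination. It assumes you already have a churn score and a CLV estimate per customer. The cutoffs (0.6 churn, 1,000 CLV) and the discount levels are hypothetical business choices, not model outputs. The models only supply the two numbers, and the rule on top of them is where the strategy lives.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    customer_id: str
    churn_score: float  # output of a propensity-to-churn model, in [0, 1]
    clv: float          # output of a CLV model, in currency units


def pick_discount(c: Customer, churn_cutoff: float = 0.6, clv_cutoff: float = 1000.0) -> float:
    """Choose a discount rate by combining two model outputs.

    The cutoffs and discount levels are hypothetical business choices;
    neither model produces them.
    """
    at_risk = c.churn_score >= churn_cutoff
    valuable = c.clv >= clv_cutoff
    if at_risk and valuable:
        return 0.20  # likely to leave and worth keeping: biggest incentive
    if at_risk:
        return 0.05  # likely to leave but lower value: small incentive
    return 0.0       # not at risk: no discount


customers = [
    Customer("a", churn_score=0.82, clv=2500.0),
    Customer("b", churn_score=0.75, clv=300.0),
    Customer("c", churn_score=0.10, clv=4000.0),
]
for c in customers:
    print(c.customer_id, pick_discount(c))
```

Customer "a" gets the largest discount, "b" a small one, and "c" none, even though "c" has the highest CLV.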

Propensity modeling use cases

Personalization goes a long way when it comes to customer engagement, company growth, and brand loyalty. Different businesses, especially those using the subscription model, try to tailor the right services and/or products to the right people to gain the most value. Although the path of personalization is often rocky, propensity modeling is one of the ways to make it smoother. Here are a few real-life examples of how propensity modeling is used.

Barack Obama reelection campaign: Voter segmentation

During Barack Obama's 2012 reelection campaign, a team of data scientists was hired to build propensity-to-convert models. The task was to predict which undecided voters could be persuaded to vote for the Democrats and which type of campaign contact (a door knock, call, flyer, etc.) would work best for each voter. The use of Big Data predictive analytics contributed to Obama's reelection win.

US flower delivery company: Enabling faster and greater ROI

As a company operating in the floral industry, The Bouqs Co. needs to master demand forecasting to perform well despite seasonality. The company invested in developing event propensity models based on data about customers who use its subscription and special-event scheduling services. As a result, it managed to pinpoint its most valuable audiences and provide them with personalized offers to drive greater ROI.

Scandinavian Airlines: Personalized communication with customers

Scandinavian Airlines (SAS) uses machine learning and predictive analytics to calculate each customer's propensity to book a flight. Armed with this data, the airline can make timely offers to customers who are more willing to buy a ticket and reduce the number of empty seats.

Vodafone: Identifying customer churn risks

Vodafone is the second-largest mobile operator in Ukraine, serving more than 23 million users. The company was looking for a way to reduce its customer churn rate and improve targeting, with the ultimate goal of outpacing its top competitor. It turned to SAS Customer Intelligence, an AI-powered marketing analytics suite from the software company SAS (not to be confused with the airline above), to build more accurate propensity models and make better decisions. Using the outputs of ML-powered propensity modeling, marketers at Vodafone Ukraine managed to form accurate customer segments and determine which products to recommend as next-best offers. The strategy resulted in a 30 percent reduction in customer churn and a 2 percent increase in incremental revenue.

So, how do you get started with propensity modeling? And what steps should you take to implement such models with machine learning? Let's see.

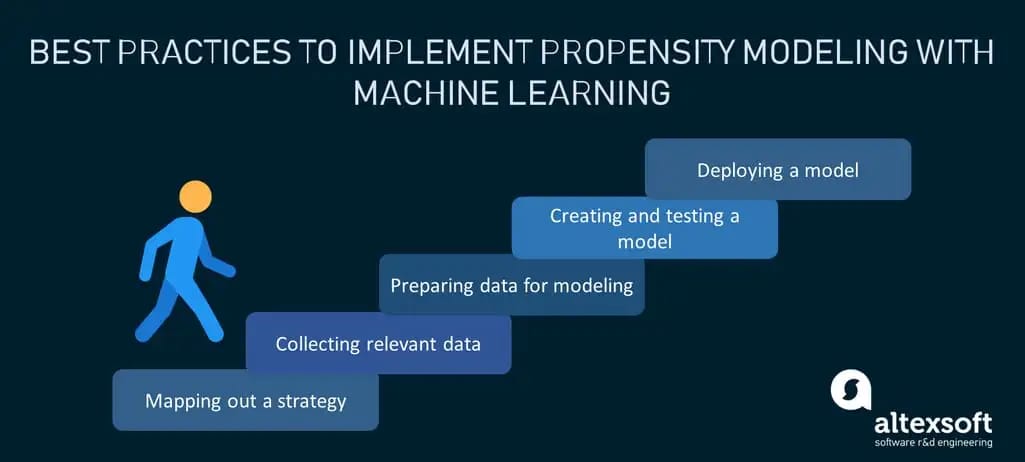

Best practices to implement propensity modeling with machine learning

Here, propensity models rely on machine learning algorithms. They are trained to anticipate what actions customers are likely to take next by finding patterns in past customer behavior data and applying those patterns to new data inputs. From a machine learning perspective, a propensity model can be considered a binary classifier, meaning it predicts whether a certain event, action, or behavior will take place or not.

Steps to implement a propensity model with machine learning

The typical machine learning scenario data scientists leverage to bring propensity modeling to life involves the following steps:

- Mapping out a strategy

- Collecting relevant data

- Preparing data for modeling

- Creating and testing a model

- Deploying a model

You can learn more about these steps in our article on machine learning project structure. For now, let's look at what it takes to develop a propensity model.

Mapping out a strategy

The starting point on your way to building efficient propensity models is defining your business objectives and deciding which insights you want to draw eventually.

Say you want to calculate propensity-to-buy scores for your customer base for the upcoming year. What exactly should the marketing team do with the resulting probabilities? The model itself won't tell you which customer group, those with higher or those with lower purchase propensity scores, you should target with aggressive offers. It may make no sense to spend resources on customers with low probabilities, and it may be better to focus on reinforcing those with high scores. Or maybe vice versa. That's why you need to map out the strategy before any model implementation begins.
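The sketch below separates the model's output from the strategy. Scores are bucketed into tiers, and a separate mapping decides what each tier receives. The tier cutoffs (0.7 and 0.3) and the actions in `STRATEGY` are hypothetical placeholders for whatever the marketing team agrees on. Swapping the "aggressive offer" to another tier changes the campaign without touching the model.

```python
def assign_tier(score: float) -> str:
    """Bucket a propensity-to-buy score into a tier (cutoffs are hypothetical)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("propensity scores must lie in [0, 1]")
    if score >= 0.7:
        return "high"
    if score >= 0.3:
        return "medium"
    return "low"


# The strategy, not the model, decides what each tier receives.
# These actions are illustrative placeholders.
STRATEGY = {
    "high": "loyalty perks, no discount",
    "medium": "aggressive offer",
    "low": "no spend",
}

scores = {"cust_1": 0.91, "cust_2": 0.45, "cust_3": 0.12}
plan = {cid: STRATEGY[assign_tier(s)] for cid, s in scores.items()}
print(plan)
```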

Collecting relevant data

During this stage, you must collect relevant data about active and potential customers. The choice of a data source depends on the propensity you want to predict.

Data can come from first-party and third-party sources and can be combined for more accurate predictions.

- First-party data is the information about customers that resides in a company's website, CRM, mobile applications, contact centers, and so on, provided consumers have given their consent. Such data usually includes customers' personal, demographic, and behavioral information.

- Third-party data is the kind of information a company can purchase from third-party vendors that don’t have any direct relationships with the company's customers. Generated and collected by outside sources, such data can provide richer insight into audiences for more targeted marketing campaigns.
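Combining the two sources usually means joining records on a shared customer key. The sketch below assumes both sources are already keyed by the same identifier (hypothetically a hashed email). It does a left join from first-party onto third-party data, letting first-party values win on conflicts, since they come from your own systems.

```python
def merge_sources(first_party: dict, third_party: dict) -> dict:
    """Left-join third-party attributes onto first-party customer records.

    Both inputs map a shared customer key (hypothetical here, e.g., a hashed
    email) to a dict of attributes. First-party values win on conflicts, and
    customers missing from the third-party data keep only their own fields.
    """
    merged = {}
    for key, own_fields in first_party.items():
        extra = third_party.get(key, {})
        merged[key] = {**extra, **own_fields}  # right-hand dict overrides on key clashes
    return merged


first_party = {
    "h1": {"orders": 5, "emails_opened": 12},
    "h2": {"orders": 0, "emails_opened": 1},
}
third_party = {
    "h1": {"household_income_band": "B", "orders": 99},  # conflicting value is ignored
}
print(merge_sources(first_party, third_party))
# {'h1': {'household_income_band': 'B', 'orders': 5, 'emails_opened': 12}, 'h2': {'orders': 0, 'emails_opened': 1}}
```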

It's not only the type of data that matters but also its amount and quality. Keep in mind that ML propensity models are only as good as the data they are trained on.

Preparing data for modeling

The next step is to make sure the data selected for propensity modeling is consistent, accurate, and complete. There is an array of data preparation steps you can take to make that happen. In one of our articles, we cover the main techniques for preparing datasets for machine learning, so you can check it out for a better understanding.

Here, we'll focus on feature selection.

What does that mean?

You need to select the right mix of features to serve as input for your propensity model. Features are the variables that may influence customer behavior. They can be raw attributes taken directly from your records (e.g., product attributes) or derived metrics computed from them (e.g., average order value per customer).

To estimate propensity scores effectively and draw a full picture of who your customers are, you may consider the following types of data:

- demographic information (age, gender, religion, education, etc.);

- engagement information (webpage dwell time, the number of emails opened/clicked, web app or mobile app searches, etc.);

- transaction and user behavior information (the number of purchases made, the type of products/services purchased, or the time between an offer and conversion, etc.); and

- psychographic information (personality characteristics, opinions, likes, and dislikes).
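Transaction and behavior features are usually derived by aggregating raw records per customer. The sketch below assumes a hypothetical transaction schema of `(customer_id, order_date, amount)` tuples. From it, the code computes three of the metrics mentioned above: the number of purchases, the average order value, and the days since the last purchase.

```python
from collections import defaultdict
from datetime import date


def build_features(transactions, as_of):
    """Aggregate raw transactions into per-customer features.

    transactions: iterable of (customer_id, order_date, amount) tuples
    (a hypothetical schema). Returns {customer_id: feature dict}.
    """
    per_customer = defaultdict(list)
    for customer_id, order_date, amount in transactions:
        per_customer[customer_id].append((order_date, amount))

    features = {}
    for customer_id, orders in per_customer.items():
        amounts = [amount for _, amount in orders]
        last_order = max(order_date for order_date, _ in orders)
        features[customer_id] = {
            "num_purchases": len(orders),
            "avg_order_value": sum(amounts) / len(amounts),
            "days_since_last_purchase": (as_of - last_order).days,
        }
    return features


transactions = [
    ("c1", date(2024, 1, 5), 40.0),
    ("c1", date(2024, 2, 20), 60.0),
    ("c2", date(2023, 11, 30), 15.0),
]
feats = build_features(transactions, as_of=date(2024, 3, 1))
print(feats)
```

The `as_of` date should be the moment the prediction is made. Using a later date would leak information from the future into training.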

When choosing customer features, include only the most important and relevant ones, and avoid duplicates such as salary and income.
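One simple heuristic for spotting duplicates like salary and income is to check pairwise correlation and drop one feature from any highly correlated pair. The sketch below uses the Pearson coefficient with a hypothetical 0.95 threshold. In practice you might also weigh which feature of the pair is cheaper or more reliable to collect.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no correlation signal
    return cov / (sx * sy)


def drop_redundant(features: dict, threshold: float = 0.95) -> list:
    """Return feature names to keep, dropping the later one of any highly correlated pair."""
    dropped = set()
    for a, b in combinations(features, 2):
        if a in dropped or b in dropped:
            continue
        if abs(pearson(features[a], features[b])) >= threshold:
            dropped.add(b)
    return [name for name in features if name not in dropped]


features = {
    "salary": [30, 45, 60, 80, 120],
    "income": [32, 46, 63, 81, 125],  # nearly a copy of salary
    "orders": [3, 1, 4, 1, 5],
}
print(drop_redundant(features))  # ['salary', 'orders']
```

On Python 3.10+, `statistics.correlation` computes the same coefficient.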

Once the set of features is defined, you can put the data into a suitable format (e.g., JSON or CSV) and move on to modeling. Before that, it is important to split the data into separate sets for model training, validation, and testing.
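A minimal random split might look like the code below. The 70/15/15 proportions and the fixed seed are illustrative assumptions. When the data has a strong time component, a chronological split (train on older records, test on newer ones) may reflect real use better than shuffling.

```python
import random


def split_dataset(rows, train=0.7, val=0.15, seed=42):
    """Shuffle rows and split them into train/validation/test lists.

    The proportions and seed are illustrative defaults; whatever is left
    after the training and validation sets becomes the test set.
    """
    if not 0 < train + val < 1:
        raise ValueError("train + val must leave room for a test set")
    shuffled = list(rows)                  # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


rows = [{"customer_id": i, "converted": i % 3 == 0} for i in range(100)]
train_rows, val_rows, test_rows = split_dataset(rows)
print(len(train_rows), len(val_rows), len(test_rows))  # 70 15 15
```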

Creating and testing a model

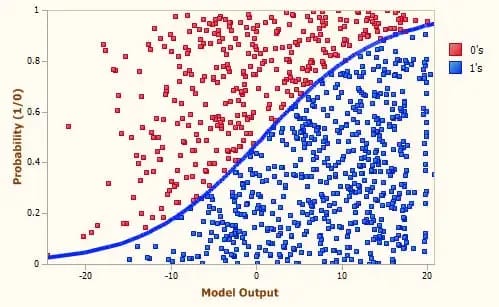

When data is ready, it’s time to build and train propensity models using different algorithmic approaches. ML specialists can choose from an array of machine learning model types including logistic regression, decision trees, random forests, neural networks, and others.

Logistic regression is an algorithm for classifying binary outcomes (1 or 0), e.g., will buy/won't buy. It is used in the vast majority of cases to estimate propensity scores.

Logistic regression with probability ranging from 1 to 0. Source: Data Science foundation

Logistic regression estimates the probability of an event occurring based on the relationship between the independent (input) variables and the dependent (outcome) variable. With it, you can predict the likelihood of a data point belonging to one of the two categories.
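To make this concrete, here is a from-scratch sketch. The model computes p = σ(w · x + b), where σ(z) = 1 / (1 + e^(−z)) squashes any number into the 0 to 1 range. The weights w and bias b are fitted by batch gradient descent on the log-loss. The toy features, learning rate, and epoch count are made-up assumptions for illustration. In practice you would more likely use a library implementation such as scikit-learn's `LogisticRegression`, whose `predict_proba` method returns the same kind of score.

```python
from math import exp


def sigmoid(z: float) -> float:
    """Numerically stable logistic function, mapping any real z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + exp(-z))
    ez = exp(z)
    return ez / (1.0 + ez)


def predict_proba(w, b, x):
    """Propensity score: estimated probability that the outcome is 1."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Fit weights w and bias b by batch gradient descent on the log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # gradient of log-loss w.r.t. the logit
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Toy data (made up): [emails_opened, site_visits] -> bought (1) or not (0)
X = [[0, 1], [1, 0], [1, 2], [2, 1], [5, 6], [6, 5], [7, 8], [8, 7]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = fit_logistic(X, y)
for x in ([1, 1], [7, 7]):
    print(x, round(predict_proba(w, b, x), 3))
```

The low-activity customer gets a score well below 0.5, and the high-activity one gets a score well above it.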

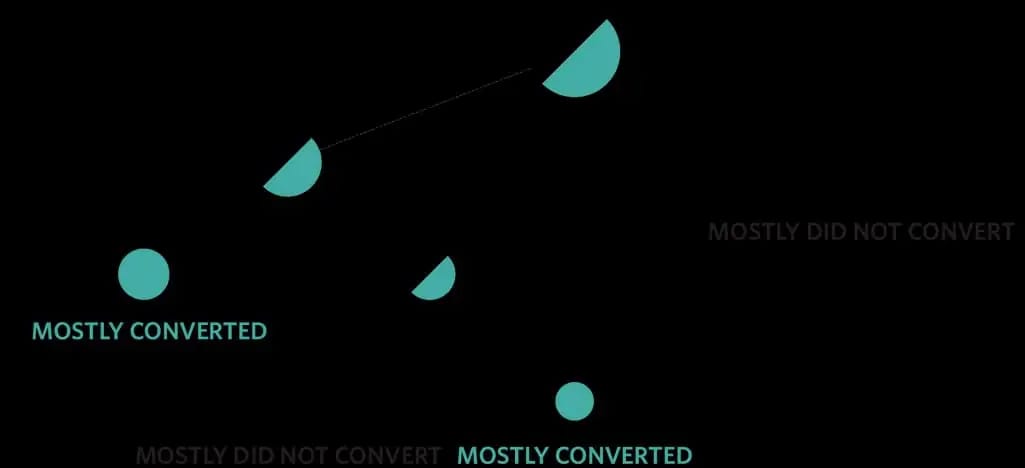

A decision tree is a supervised machine learning algorithm used for classification and regression. There is always a predefined target variable. In this approach, the data is split at each node by a yes/no question, and the questions become more specific the further down the branches you go.

A decision tree showing what kind of customers are more likely to convert. Source: Faraday

The final output represents binary classes, such as whether a customer will renew their subscription (yes/no), so propensity categories are easy to create, interpret, and visualize.
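The sketch below shows how a single yes/no question is chosen. It learns a one-level tree, often called a decision stump, by trying every "is feature j ≤ t?" split and keeping the one with the lowest weighted Gini impurity. Gini is one common splitting criterion, but the article doesn't name one, so treat that choice as an assumption. A full tree would repeat this search recursively on each branch. The toy renewal data is made up.

```python
def gini(labels):
    """Gini impurity of a non-empty list of 0/1 labels."""
    p = sum(labels) / len(labels)
    return 1.0 - p * p - (1.0 - p) * (1.0 - p)


def majority(labels):
    """Most common 0/1 label (ties go to 1)."""
    return 1 if sum(labels) * 2 >= len(labels) else 0


def fit_stump(X, y):
    """Find the yes/no question 'row[j] <= t?' with the lowest weighted Gini impurity."""
    best = None
    n = len(X)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, j, t, majority(left), majority(right))
    if best is None:
        raise ValueError("no split separates the rows")
    return best[1:]  # (feature index, threshold, label if yes, label if no)


def predict_stump(stump, row):
    j, t, left_label, right_label = stump
    return left_label if row[j] <= t else right_label


# Toy data (made up): [months_subscribed, support_tickets] -> renewed (1) or not (0)
X = [[1, 4], [2, 3], [3, 5], [10, 1], [12, 0], [15, 2]]
y = [0, 0, 0, 1, 1, 1]
stump = fit_stump(X, y)
print(stump)  # (0, 3, 0, 1): "months_subscribed <= 3?" yes -> 0, no -> 1
```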

Random forest is another machine learning algorithm used for regression and classification tasks. It combines multiple decision trees into a forest, hence the name. Adding more trees generally makes predictions more stable and accurate, although the gains level off after a certain point. The working principle is simple: each tree classifies a data point based on its attributes and votes for a class, and the forest then picks the class that received the most votes.
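Here is a deliberately simplified sketch of the idea. Each "tree" is a one-level stump trained on a bootstrap sample of the rows (drawn with replacement) and a random subset of the features, and prediction is a majority vote. Real random forests grow much deeper trees and usually resample features at every split. The tree count, seed, and toy data are illustrative assumptions.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a non-empty list of class labels."""
    counts = Counter(labels)
    return 1.0 - sum((c / len(labels)) ** 2 for c in counts.values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X, y, features):
    """Best single split 'row[j] <= t' over the given feature indices."""
    best = None
    for j in features:
        for t in sorted({row[j] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            if not left or not right:
                continue
            score = len(left) * gini(left) + len(right) * gini(right)
            if best is None or score < best[0]:
                best = (score, j, t, majority(left), majority(right))
    if best is None:  # no usable split in this sample: always predict its majority
        label = majority(y)
        return (features[0], float("inf"), label, label)
    return best[1:]


def fit_forest(X, y, n_trees=25, seed=0):
    """Train n_trees stumps, each on a bootstrap sample and a random feature subset."""
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]       # sample rows with replacement
        features = rng.sample(range(d), max(1, d // 2))  # random subset of features
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], features))
    return forest


def predict_forest(forest, row):
    """Each tree votes; the class with the most votes wins."""
    votes = Counter(left if row[j] <= t else right for j, t, left, right in forest)
    return votes.most_common(1)[0][0]


# Toy data (made up): [months_subscribed, support_tickets] -> renewed (1) or not (0)
X = [[1, 4], [2, 3], [3, 5], [10, 1], [12, 0], [15, 2]]
y = [0, 0, 0, 1, 1, 1]
forest = fit_forest(X, y)
print(predict_forest(forest, [20, 0]), predict_forest(forest, [1, 5]))
```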

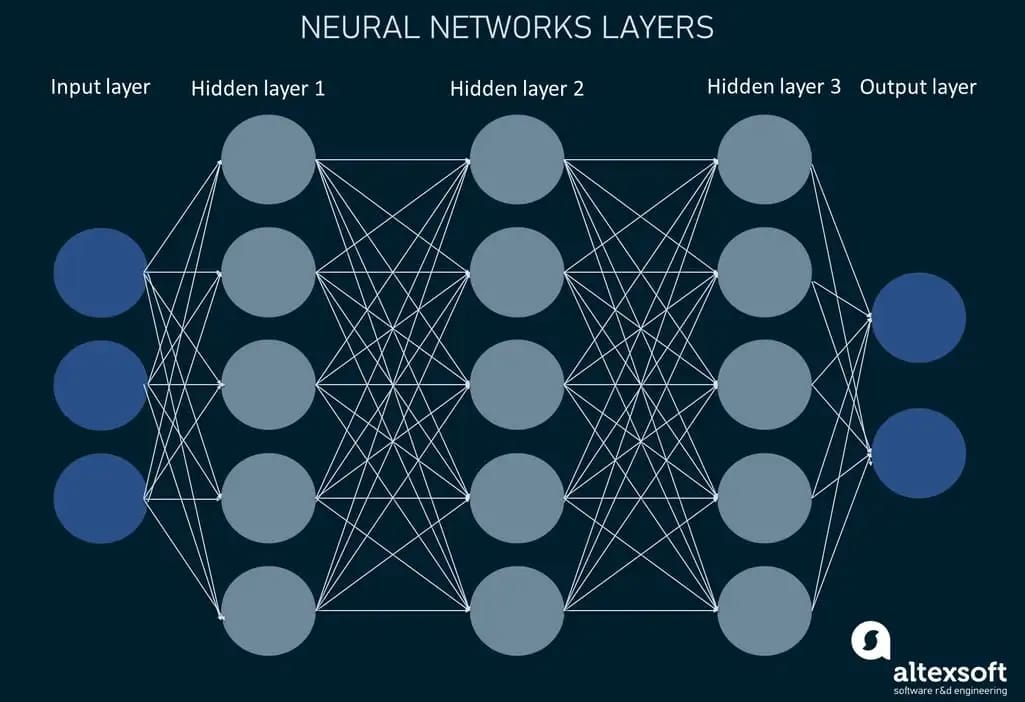

Neural networks, inspired by the design of biological neural systems, are models made up of neurons (nodes) arranged in interconnected layers: an input layer, one or more hidden layers, and an output layer.

Neural networks structure

In a fully connected network, each node in one layer is connected to every node in the next layer. Nodes process and pass signals (inputs) along directed, weighted edges until they reach the final output layer.
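The flow of signals through such a network can be shown with a forward pass through a tiny fully connected network. It has three inputs, two hidden nodes with ReLU activation, and one sigmoid output node that yields a propensity score. The weights are hand-picked hypothetical values. A real network learns them during training, typically with backpropagation.

```python
from math import exp


def sigmoid(z):
    return 1.0 / (1.0 + exp(-z))


def relu(z):
    return max(0.0, z)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: every input feeds every node via a weighted edge."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def forward(x):
    # Hypothetical, hand-picked weights; a trained network would learn these.
    hidden = dense(x, weights=[[0.8, -0.5, 0.3], [-0.2, 0.9, 0.4]],
                   biases=[0.1, -0.3], activation=relu)
    (score,) = dense(hidden, weights=[[1.2, -0.7]], biases=[-0.2], activation=sigmoid)
    return score


# Three standardized input features for one customer (made-up values)
print(round(forward([1.0, 0.5, 2.0]), 4))
```

Here the hidden layer outputs 1.25 and 0.75, and the output node turns them into a score of roughly 0.68.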

You can build multiple models or combine them to achieve more accurate results. For instance, the outputs of one model can be used as inputs for another.
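The "outputs of one model as inputs for another" pattern can be sketched as function composition. The base models below are hypothetical stand-ins that each return a score between 0 and 1. For brevity, the combining meta-model is a plain average. In stacking proper, the meta-model would itself be trained (e.g., a logistic regression) on base-model outputs from held-out data.

```python
from math import exp
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def stack(base_models: Sequence[Model], meta_model: Model) -> Model:
    """Build a combined model: base-model scores become the meta-model's inputs."""
    def combined(x: Sequence[float]) -> float:
        base_scores = [m(x) for m in base_models]
        return meta_model(base_scores)
    return combined


def average(scores: Sequence[float]) -> float:
    """Simplest possible combiner: the mean of the base scores."""
    return sum(scores) / len(scores)


# Hypothetical base models, each returning a score in [0, 1]
def engagement_model(x):
    return min(1.0, x[0] / 10)  # x[0]: e.g., sessions last month


def recency_model(x):
    return 1.0 / (1.0 + exp(x[1] - 5))  # x[1]: weeks since last purchase; recent = higher


combined = stack([engagement_model, recency_model], average)
print(round(combined([8, 2]), 3))
```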

Once the models are trained, evaluate their performance on validation and test data. This helps ensure that they work well when exposed to real-world data from other customers.

Deploying a model

The final stage of propensity modeling involves deploying the best-performing model or models. However, data scientists should keep maintaining deployed models to ensure their results stay accurate over time and retrain them when necessary.
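One common maintenance pattern is "champion/challenger." A retrained model (the challenger) replaces the deployed one (the champion) only if it scores better on the same recent holdout data. The sketch below assumes models are plain functions returning a score. The `min_gain` tolerance and the two rule-based models are hypothetical.

```python
def accuracy(model, holdout):
    """Share of holdout rows where the thresholded score matches the label."""
    hits = sum((model(x) >= 0.5) == bool(y) for x, y in holdout)
    return hits / len(holdout)


def promote_if_better(champion, challenger, metric, holdout, min_gain=0.01):
    """Return whichever model should serve predictions.

    The challenger replaces the champion only if it beats it on the same
    recent holdout data by at least min_gain (a hypothetical tolerance).
    """
    champ_score = metric(champion, holdout)
    chall_score = metric(challenger, holdout)
    return challenger if chall_score >= champ_score + min_gain else champion


# Hypothetical models: each maps a feature list to a propensity score
def old_model(x):
    return 0.9 if x[0] > 5 else 0.1  # rule fitted on last year's data


def new_model(x):
    return 0.9 if x[0] > 3 else 0.1  # retrained after customer behavior shifted


recent = [([4], 1), ([6], 1), ([2], 0), ([1], 0), ([5], 1)]
serving = promote_if_better(old_model, new_model, accuracy, recent)
print(serving.__name__)  # new_model
```

Requiring a minimum gain avoids swapping models on noise.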

Having a propensity model created and trained on your historical customer data is only half the battle. A model cannot replace human critical thinking or answer questions like, "Should I offer discounts only to customers with higher probabilities of churning, or to those with lower probabilities too?" It is up to your team to decide what to do with the models' outputs and what business strategy to build. So, it is a good idea to combine propensity modeling with human expertise to gain richer insights and conduct more targeted and effective personalization experiments.

Get predictions with higher accuracy

Propensity modeling is a powerful tool in the hands of companies that want to make an educated guess about their customers' next moves. But the reality is that predictions are only as accurate as the models behind them.

While there are quite a few pre-trained machine learning solutions for calculating various propensity scores, they are often limited in functionality and have their drawbacks. Their predictions may not be as accurate as anticipated, largely because most off-the-shelf solutions rely on a small number of basic features and therefore account for only limited data. That said, for small businesses that don't have the resources for a data science team, such platforms can serve well.

However, if you are an enterprise-scale company that needs robust, scalable propensity models tailored to your specific business needs, an experienced data science team will work best. This way, you can be sure the model will be maintained and adapted to even slight changes in the data, providing up-to-date predictions with greater accuracy.