For a long time, development and operations were isolated modules. Developers wrote code; the system administrators were responsible for its deployment and integration. As there was limited communication between these two silos, specialists worked mostly separately within a project.

That was fine when Waterfall development dominated. But since Agile and continuous workflow have taken over the world of software development, this model is out of the game. Short sprints and frequent releases occurring every two weeks or even every day require a new approach and new team roles.

Today, DevOps is one of the most discussed software development approaches. It is applied at Facebook, Netflix, Amazon, Etsy, and many other industry-leading companies. So, if you are considering embracing DevOps for the sake of better performance, business success, and competitiveness, you can take the first step by hiring a DevOps engineer. But first, let’s look at what DevOps is all about and how it helps improve product delivery.

DevOps in a nutshell

What is DevOps?

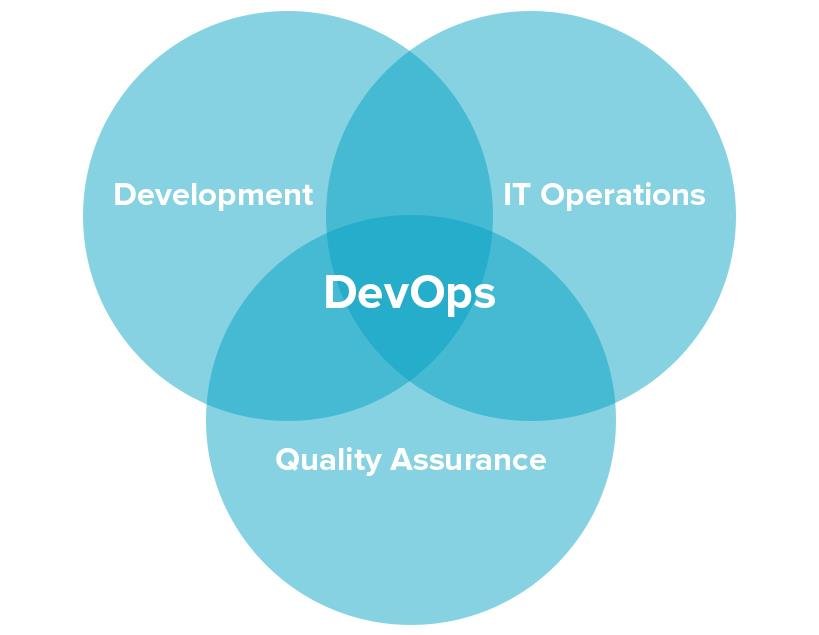

DevOps stands for development and operations. It’s a practice that aims at merging development, quality assurance, and operations (deployment and integration) into a single, continuous set of processes. This methodology is a natural extension of Agile and continuous delivery approaches.

What DevOps looks like

By adopting DevOps, companies gain three core advantages that cover technical, business, and cultural aspects of development.

Higher speed and quality of product releases. DevOps speeds up product release by introducing continuous delivery, encouraging faster feedback, and allowing developers to fix bugs in the system in the early stages. Practicing DevOps, the team can focus on the quality of the product and automate a number of processes.

Faster responsiveness to customer needs. With DevOps, a team can react to change requests from customers faster, adding new features and updating existing ones. As a result, time-to-market shortens and the value-delivery rate increases.

Better working environment. DevOps principles and practices lead to better communication between team members, and increased productivity and agility. Teams that practice DevOps are considered to be more productive and cross-skilled. Members of a DevOps team, both those who develop and those who operate, act in concert.

These benefits come only with the understanding that DevOps isn’t merely a set of actions, but rather a philosophy that fosters cross-functional team communication. More importantly, it doesn’t require substantial technical changes as the main focus is put on altering the way people work. The whole success depends on adhering to DevOps principles.

DevOps principles

In 2010, Damon Edwards and John Willis came up with the CAMS model to showcase the key values of DevOps. CAMS is an acronym that stands for Culture, Automation, Measurement, and Sharing. As these are the main principles of DevOps, we’ll examine them in more detail.

Culture

DevOps is first and foremost a culture and mindset that forges strong collaborative bonds between software development and infrastructure operations teams. This culture is built upon the following pillars.

Constant collaboration and communication. These have been the building blocks of DevOps since its dawn. Your team should work cohesively with the understanding of the needs and expectations of all members.

Gradual changes. The implementation of gradual rollouts allows delivery teams to release a product to users while having an opportunity to make updates and roll back if something goes wrong.

Shared end-to-end responsibility. When every member of a team moves towards one goal and is equally responsible for a project from beginning to end, they work cohesively and look for ways of facilitating other members' tasks.

Early problem-solving. DevOps requires that tasks be performed as early in the project lifecycle as possible. So, in case of any issues, they will be addressed more quickly.
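The gradual-changes pillar above can be illustrated with a minimal sketch of a percentage-based rollout. The function names and the "new"/"stable" labels are hypothetical, and real delivery platforms usually offer their own mechanisms for this; the point is only that each user is consistently routed to one version and that rolling back amounts to lowering the percentage.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Return True if this user falls inside the first `percent` of 100 buckets."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Hash the user ID so the same user always lands in the same bucket.
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


def choose_version(user_id: str, percent: int) -> str:
    # Hypothetical version labels; setting percent to 0 acts as a rollback.
    return "new" if in_rollout(user_id, percent) else "stable"
```

Raising `percent` step by step only ever adds users to the new version, because a user's bucket never changes.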

Automation of processes

Automating as many development, testing, configuration, and deployment procedures as possible is the golden rule of DevOps. It allows specialists to get rid of time-consuming repetitive work and focus on other important activities that can't be automated by their nature.

Measurement of KPIs (Key Performance Indicators)

Decision-making should be powered by factual information in the first place. To get optimal performance, it is necessary to keep track of the progress of activities composing the DevOps flow. Measuring various metrics of a system allows for understanding what works well and what can be improved.

Please visit our separate posts about top 10 DevOps metrics and the four DORA metrics for a deeper dive.
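As a rough illustration of measurement, the sketch below computes two commonly tracked delivery metrics, deployment frequency and change failure rate, from a list of deployment records. The `Deployment` record and its fields are assumptions made for the example, not a standard schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Deployment:
    day: date
    failed: bool  # True if the release caused an incident or a rollback


def deployment_frequency(deployments: List[Deployment], period_days: int) -> float:
    """Average number of deployments per day over the given period."""
    return len(deployments) / period_days


def change_failure_rate(deployments: List[Deployment]) -> float:
    """Share of deployments that failed; 0.0 when there is no data."""
    if not deployments:
        return 0.0
    return sum(d.failed for d in deployments) / len(deployments)


# Hypothetical sample data covering an eight-day period.
history = [
    Deployment(date(2024, 1, 2), failed=False),
    Deployment(date(2024, 1, 3), failed=True),
    Deployment(date(2024, 1, 5), failed=False),
    Deployment(date(2024, 1, 9), failed=False),
]
```

In practice, these records would come from the deployment tooling rather than being typed in by hand.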

Sharing

Sharing is caring. This phrase explains the DevOps philosophy better than anything else as it highlights the importance of collaboration. It is crucial to share feedback, best practices, and knowledge among teams since this promotes transparency, creates collective intelligence, and eliminates constraints. You don't want to put the whole development process on pause just because the only person who knows how to handle certain tasks went on vacation or quit.

DevOps model and practices

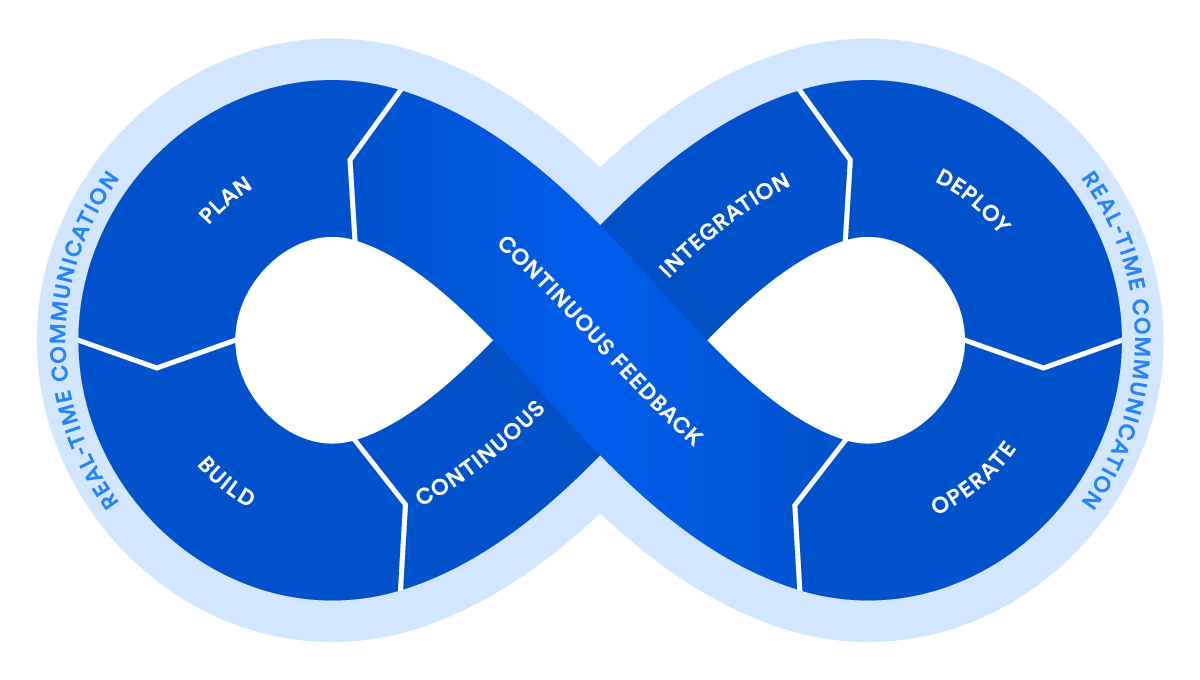

DevOps requires a delivery cycle that comprises planning, development, testing, deployment, release, and monitoring with active cooperation between different members of a team.

A DevOps lifecycle. Source: Atlassian

To break down the process even more, let’s have a look at the core practices that constitute DevOps.

Agile planning

In contrast to traditional approaches to project management, Agile planning organizes work in short iterations (e.g. sprints) to increase the number of releases. This means that the team outlines only high-level objectives for the project as a whole, while planning in detail no more than two iterations ahead. This allows for flexibility and pivots once the ideas are tested on an early product increment. Check our Agile infographics to learn more about different methods applied.

Continuous development

The concept of continuous "everything" embraces continuous or iterative software development, meaning that all the development work is divided into small portions for better and faster production. Engineers commit code in small chunks multiple times a day for it to be easily tested. Code builds and unit tests are automated as well.

Continuous automated testing

A quality assurance team sets up testing of committed code using automation tools like Selenium, Ranorex, UFT, etc. If bugs and vulnerabilities are revealed, they are sent back to the engineering team. This stage also entails version control to detect integration problems in advance. A Version Control System (VCS) allows developers to record changes in the files and share them with other members of the team, regardless of their location.

Continuous integration and continuous delivery (CI/CD)

The code that passes automated tests is integrated into a single, shared repository on a server. Frequent code submissions prevent a so-called “integration hell” when the differences between individual code branches and the mainline code become so drastic over time that integration takes more time than actual coding.

Continuous delivery, detailed in our dedicated article, is an approach that merges development, testing, and deployment operations into a streamlined process as it heavily relies on automation. This stage enables the automatic delivery of code updates into a production environment.
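To make the idea of a streamlined, automated process more concrete, here is a toy pipeline runner. It is only a sketch: the stage names are hypothetical, each stage is modeled as a function returning `True` on success, and real CI/CD servers define pipelines in their own configuration formats.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure, returning a log."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            # Later stages (e.g. deployment) never run on broken code.
            break
    return log
```

The key property is that a failing build or test stage stops the flow, so only code that passed every earlier check reaches the deployment stage.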

Continuous deployment

At this stage, the code is deployed to run in production on a public server. Code must be deployed in a way that doesn’t affect already functioning features and can be available for a large number of users. Frequent deployment allows for a “fail fast” approach, meaning that the new features are tested and verified early. There are various automated tools that help engineers deploy a product increment. The most popular are Chef, Puppet, Azure Resource Manager, and Google Cloud Deployment Manager.
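One way to deploy without affecting already functioning features is to switch traffic to the new version, verify it, and switch back if the check fails. The sketch below assumes a toy `Router` class standing in for a load balancer and a caller-supplied `health_check` function; both are hypothetical and not the API of any tool named above.

```python
from typing import Callable


class Router:
    """Toy stand-in for a load balancer that points at one live version."""

    def __init__(self, live: str) -> None:
        self.live = live


def deploy(router: Router, candidate: str, health_check: Callable[[str], bool]) -> bool:
    """Switch traffic to `candidate`; roll back if the health check fails."""
    previous = router.live
    router.live = candidate
    if not health_check(candidate):
        # Restore the last known good version.
        router.live = previous
        return False
    return True
```

Because the previous version stays available until the check passes, a bad release is undone quickly, which is what makes the “fail fast” approach affordable.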

Continuous monitoring

The final stage of the DevOps lifecycle is oriented to the assessment of the whole cycle. The goal of monitoring is detecting the problematic areas of a process and analyzing the feedback from the team and users to report existing inaccuracies and improve the product’s functioning.
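A simple form of monitoring is tracking the error rate over the most recent requests and raising an alert when it crosses a threshold. The class below is a minimal sketch under that assumption; the class name, window size, and threshold are illustrative choices, and dedicated monitoring tools offer far richer alerting rules.

```python
from collections import deque


class ErrorRateMonitor:
    """Track the outcomes of the last `window` requests."""

    def __init__(self, window: int, threshold: float) -> None:
        # deque with maxlen silently drops the oldest result when full.
        self.results = deque(maxlen=window)
        self.threshold = threshold

    def record(self, success: bool) -> None:
        self.results.append(success)

    def error_rate(self) -> float:
        if not self.results:
            return 0.0
        failures = sum(1 for ok in self.results if not ok)
        return failures / len(self.results)

    def should_alert(self) -> bool:
        # Alert only when the rate is strictly above the threshold.
        return self.error_rate() > self.threshold
```

A sliding window keeps the alert focused on current behavior, so an old burst of errors stops counting once enough newer requests have been recorded.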

Infrastructure as code

Infrastructure as code (IaC) is an infrastructure management approach that makes continuous delivery and DevOps possible. It entails using scripts to automatically set the deployment environment (networks, virtual machines, etc.) to the needed configuration regardless of its initial state.

Without IaC, engineers would have to treat each target environment individually, which becomes a tedious task as you may have many different environments for development, testing, and production use.

With the environment configured as code, you can

- test it the way you test the source code itself and

- use a virtual machine that behaves like a production environment to test early.

Once the need to scale arises, the script can automatically set the needed number of environments to be consistent with each other.
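The phrase “regardless of its initial state” is the core of the approach: the script describes the desired environment, and the tooling works out what has to change. The sketch below models this with plain dictionaries. The resource names and the `plan`/`apply` functions are assumptions made for illustration and do not reproduce the interface of any particular IaC tool.

```python
from typing import Dict, List, Tuple

State = Dict[str, dict]   # resource name -> its settings
Action = Tuple[str, str]  # (operation, resource name)


def plan(current: State, desired: State) -> List[Action]:
    """List the changes needed to turn `current` into `desired`."""
    actions = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply(current: State, desired: State) -> State:
    """Return a new state after carrying out the plan."""
    new_state = dict(current)
    for action, name in plan(current, desired):
        if action == "delete":
            del new_state[name]
        else:
            new_state[name] = dict(desired[name])
    return new_state
```

Applying the same description twice produces no further changes, which is why many environments built from one script stay consistent with each other.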

Containerization

Virtual machines emulate hardware behavior to share computing resources of a physical machine, which enables running multiple application environments or operating systems (Linux and Windows Server) on a single physical server or distributing an application across multiple physical machines.

Containers, on the other hand, are more lightweight and packaged with all runtime components (files, libraries, etc.) but they don’t include whole operating systems, only the minimum required resources. Containers are used within DevOps to instantly deploy applications across various environments and are well combined with the IaC approach described above. A container can be tested as a unit before deployment. Currently, Docker provides the most popular container toolset.

Microservices

The microservice architectural approach entails building one application as a set of independent services that communicate with each other, but are configured individually. Building an application this way, you can isolate any arising problems ensuring that a failure in one service doesn’t break the rest of the application functions. With the high rate of deployment, microservices allow for keeping the whole system stable, while fixing the problems in isolation. Learn more about microservices and modernizing legacy monolithic architectures in our article.
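The failure isolation described above can be sketched in a few lines. Here each service is modeled as a plain function, and the `build_page` helper and service names are hypothetical; in a real system the calls would go over the network, typically with timeouts and retries.

```python
from typing import Callable, Dict


def build_page(
    services: Dict[str, Callable[[], str]],
    fallback: str = "unavailable",
) -> Dict[str, str]:
    """Call every service independently and collect the responses."""
    page = {}
    for name, call in services.items():
        try:
            page[name] = call()
        except Exception:
            # Contain the failure: one broken service yields a fallback
            # value instead of breaking the whole response.
            page[name] = fallback
    return page
```

With this structure, an outage in one service degrades a single part of the result while the other services keep answering normally.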

Cloud infrastructure

Today most organizations use hybrid clouds, a combination of public and private ones. But the shift towards fully public clouds (i.e. managed by an external provider such as AWS or Microsoft Azure) continues. While cloud infrastructure isn’t a must for DevOps adoption, it provides flexibility, toolsets, and scalability to applications. With the recent introduction of serverless architectures on clouds, DevOps-driven teams can dramatically reduce their effort by basically eliminating server-management operations.

Automation tools that facilitate the workflow are an important part of these processes. Below we explain why and how they are used.

DevOps tools

The main reason to implement DevOps is to improve the delivery pipeline and integration process by automating these activities. As a result, the product gets a shorter time-to-market. To achieve this automated release pipeline, the team must acquire specific tools instead of building them from scratch.

Currently, existing DevOps tools cover almost all stages of continuous delivery, starting from continuous integration environments and ending with containerization and deployment. While some of the processes are still automated with custom scripts today, DevOps engineers mostly use ready-made products. Let’s have a look at the most popular ones.

Server configuration tools are used to manage and configure servers in DevOps. Puppet is one of the most widely used systems in this category. Chef is a tool for infrastructure as code management that runs both on cloud and hardware servers. One more popular solution is Ansible, which automates configuration management, cloud provisioning, and application deployment.

CI/CD stages also require task-specific tools for automation, such as Jenkins, which comes with lots of additional plugins to tweak the continuous delivery workflow, or GitLab CI, a free and open-source CI/CD instrument presented by GitLab.

For more solutions, check our corresponding article where we compare the major CI tools on today’s market.

Containerization and orchestration stages rely on a bunch of dedicated tools to build, configure, and manage containers that allow software products to function across various environments. Docker is the most popular instrument for building self-contained units and packaging code into them. The widely-used container orchestration platforms are commercial OpenShift and open-source Kubernetes.

Monitoring and alerting in DevOps are typically facilitated by Nagios, a powerful tool that presents analytics in visual reports, or by the open-source Prometheus.

While a DevOps engineer – we’ll discuss this role in more detail below – must operate these tools, the rest of the team also uses them under a DevOps engineer’s facilitation.

A DevOps Engineer: role and responsibilities

In the book Effective DevOps by Ryn Daniels and Jennifer Davis, the existence of a specific DevOps person is questioned: “It doesn’t usually make much sense to have a director of DevOps or some other position that puts one person in charge of DevOps. DevOps is at its core a cultural movement, and its ideas and principles need to be used throughout entire organizations in order to be effective.”

Some other DevOps experts partly disagree with this statement. They also believe that a team is the key to effectiveness. But in this interpretation, a team – including developers, a quality assurance leader, a code release manager, and an automation architect – works under the supervision of a DevOps engineer.

So, the title of a DevOps Engineer is an arguable one. Nonetheless, DevOps engineers are still in demand on the IT labor market. Some consider this person to be either a system administrator who knows how to code or a developer with a system administrator’s skills.

Learn about the role of a DevOps engineer, the tools they use, and how they collaborate with other members of the DevOps team in our dedicated article.

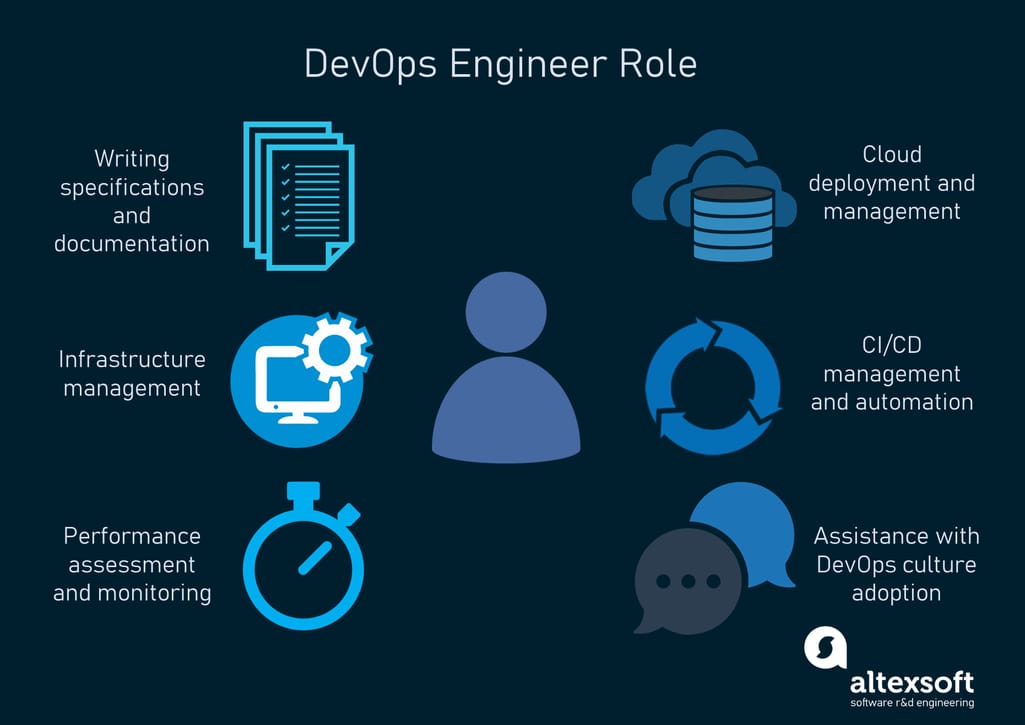

DevOps engineer responsibilities

In a way, both definitions are fair. The main function of a DevOps engineer is to introduce the continuous delivery and continuous integration workflow, which requires the understanding of the mentioned tools and the knowledge of several programming languages.

Depending on the organization, job descriptions differ. Smaller businesses look for engineers with broader skillsets and responsibilities. For example, the job description may require product building along with the developers. Larger companies may look for an engineer who will work with a certain automation tool at a specific stage of the DevOps lifecycle.

DevOps Engineer Role and Requirements

The basic and widely-accepted responsibilities of a DevOps engineer are:

- Writing specifications and documentation for the server-side features

- Continuous deployment and continuous integration (CI/CD) management

- Performance assessment and monitoring

- Infrastructure management

- Cloud deployment and management

- Assistance with DevOps culture adoption

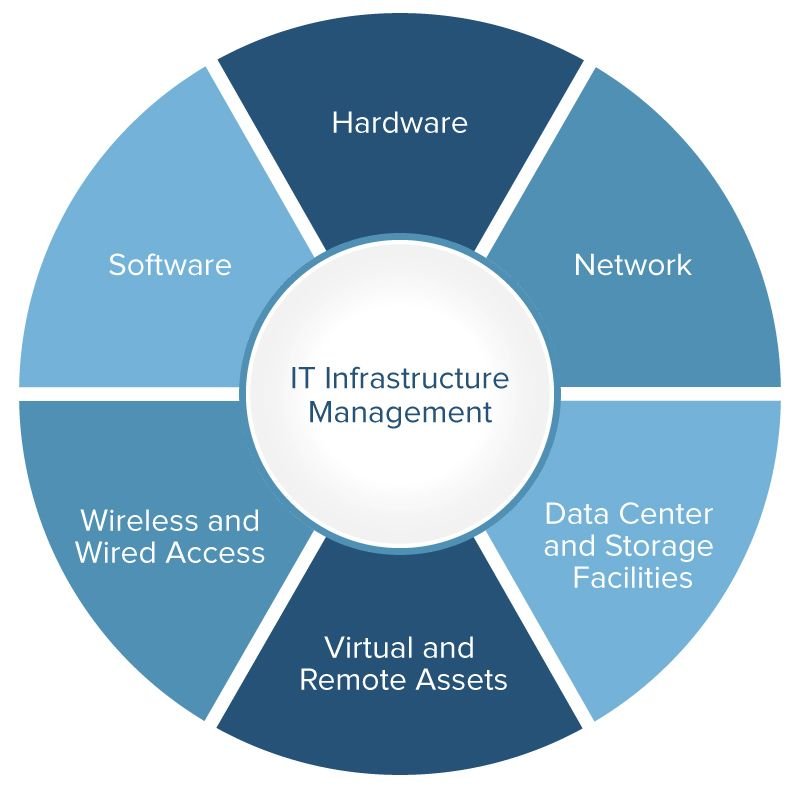

Additionally, a DevOps engineer can be responsible for IT infrastructure maintenance and management, which comprises hardware, software, network, storage, virtual and remote assets, and control over cloud data storage.

Scheme of IT infrastructure management. Source: Smartsheet

This expert participates in IT infrastructure building, works with automation platforms, and collaborates with the developers, operation managers, and system administrators, facilitating processes they are responsible for.

DevOps engineer skillset

While this title doesn’t require a candidate to be a system administrator or a developer, this person must have experience in both fields. When hiring a DevOps engineer, pay attention to the following characteristics:

Tech background. A DevOps engineer must hold a degree in computer science, engineering, or other related fields. Work experience must be greater than 2 years. This includes work as a developer, system administrator, or one of the members of a DevOps-driven team. This is an important requirement along with an understanding of all IT operations.

Automation tool experience. The knowledge of open source solutions for testing and deployment is a must for a DevOps engineer. If you use a cloud server, make sure that your candidate has experience with such tools as GitHub, Chef, Puppet, Jenkins, Ansible, Nagios, and Docker. A candidate for this job also must have experience with public clouds such as Amazon AWS, Microsoft Azure, and Google Cloud.

Programming skills. An engineer not only has to know off-the-shelf tools, but also must have programming experience to cover scripting and coding. Scripting skills usually entail the knowledge of Bash or PowerShell scripts, while coding skills may include Java, C#, C++, Python, PHP, Ruby, etc., or at least some of these languages.

Knowledge of database systems. At the deployment stage, an engineer works with data processing, which requires experience with both SQL and NoSQL database models.

Communication and interpersonal skills. Although a good candidate must be well-versed in tech aspects, a DevOps expert must also have strong communication skills. He/she must ensure that a team functions effectively, receiving and sharing feedback to support continuous delivery. The outcome – a product – depends on his/her ability to effectively communicate with all team members.

Tips on hiring a DevOps engineer ‒ the magic unicorn in the software development world

When you hire a DevOps specialist, you need to define the main requirements for this person and the responsibilities he/she will take on in your team. Here are several components for a complete job posting:

- Base the requirements for a candidate on automation tools and programming languages you already use in development.

- Define the technical knowledge and professional experience he/she must have to cover the requirements for this job.

- Understand whether you need a DevOps specialist to work on a particular stage of a cycle, or if he/she should be involved in every stage of a process, product development included.

- And remember that the DevOps culture is about communication and collaboration, so find a candidate who can be a team player and team leader at the same time.

Just having a person with a DevOps engineer title doesn’t mean that you’ll be immediately steeped in the practice, but this hire can become the crucial first step towards it. A DevOps engineer is largely considered to be a leadership position. This person may help you build a cross-functional team that works in compliance with DevOps principles.

The future of DevOps

Since DevOps entered the game, it has proved to be effective in lots of ways, from speeding up development processes to bringing more value along with high-quality products.

DevOps isn't going anywhere, but it doesn’t stand still either. Here are three DevOps trends for the near future.

As more organizations migrate to the cloud, DevOps will be tightly connected with cloud-native security, bringing changes in the way software is built, deployed, and operated. With SecDevOps, companies will be able to integrate security right into the development and deployment workflows.

Some experts predict the wider adoption of BizDevOps, a new approach to software development that eliminates the boundaries between developers, operations teams, and business staff so companies can build user-oriented products more quickly.

Last but not least, development teams will be more involved in the decision-making aspects to lead companies in the right direction of digital transformation.