Most of the data around us—like text, images, audio, and transaction logs—exists in its raw form. It’s often messy and incomplete, which makes it difficult to extract useful information from. Turning it into a format that machine learning models can learn from usually takes a lot of time and effort.

Self-supervised learning offers an alternative. In this article, we’ll explore how it works, when it’s most effective for data science teams, and where it fits in modern ML workflows.

What is self-supervised learning (SSL)?

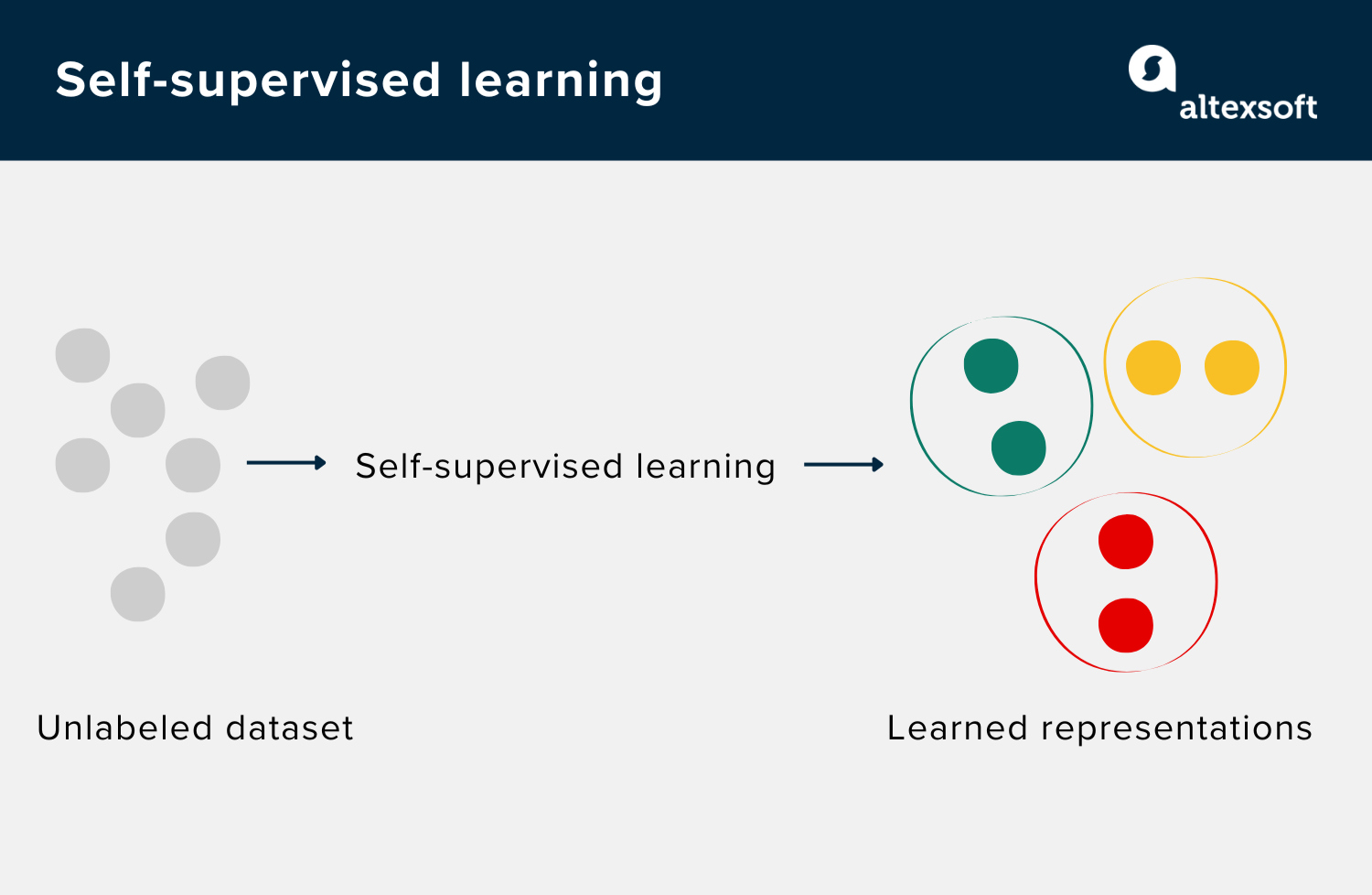

Self-supervised learning (SSL) is an ML approach in which a model generates its own training signals from patterns already present in the data, rather than relying on manually labeled datasets that define the correct output. By identifying structure, relationships, or regularities in the data itself, the model learns representations that can later be reused across a wide range of downstream tasks.

At its core, SSL turns unlabeled data into a prediction problem: the model is trained to predict one part of an input from another part of the same input, such as a hidden word from the words around it.

In the context of generative AI, SSL plays a central role in pretraining. Large language models (LLMs) such as GPT and LLaMA are trained on vast amounts of text without explicit labels. Instead, they learn by predicting the next token in a sequence, while other language models, such as BERT, learn by reconstructing masked tokens. Through this process, the model internalizes the structure, patterns, and semantics of language, which form the foundation for downstream tasks such as text generation, summarization, and question answering.

Self-supervised vs supervised vs semi-supervised vs unsupervised vs reinforcement learning

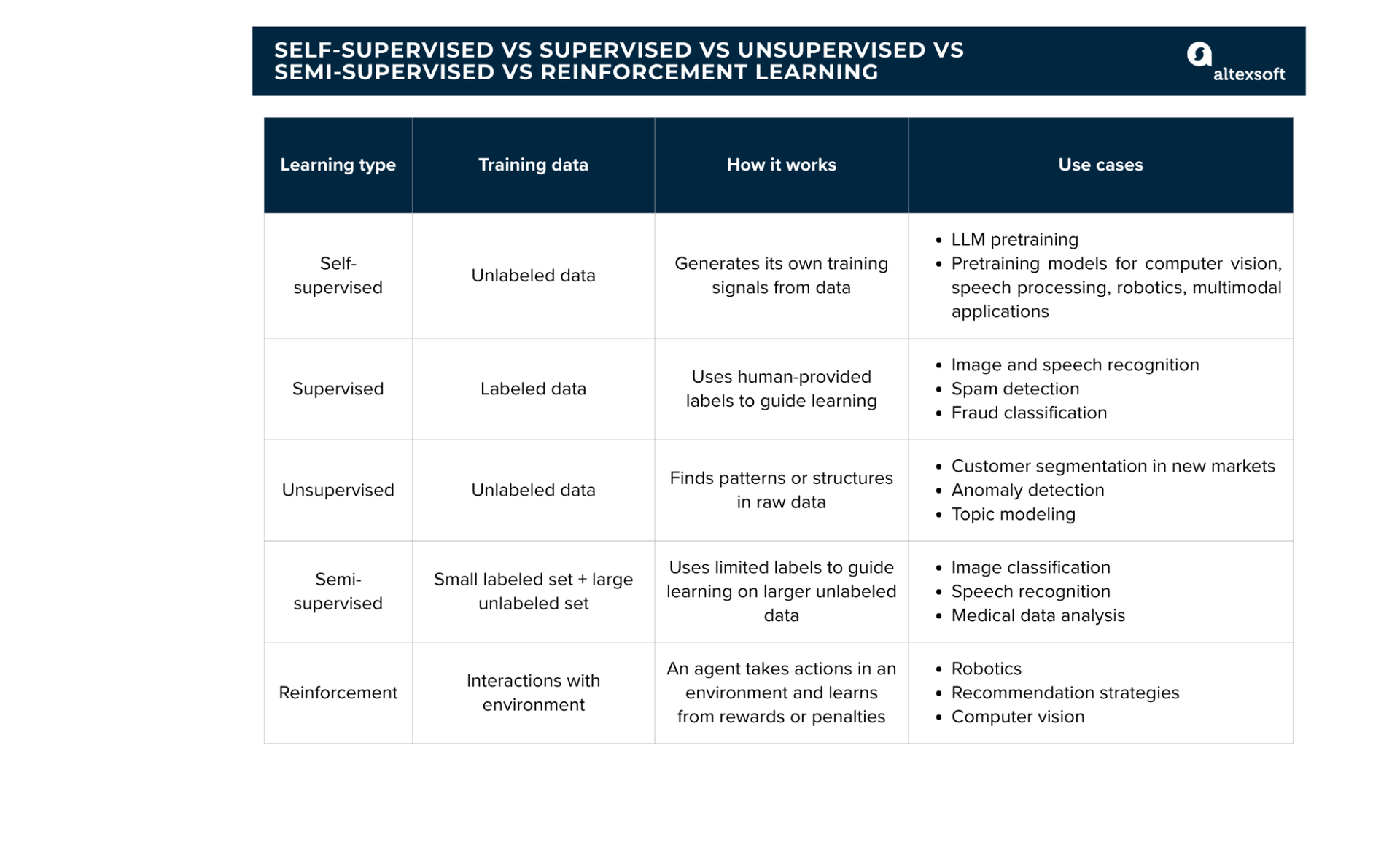

To understand where self-supervised learning fits, it helps to compare it directly with each major learning approach.

Self-supervised learning vs other major machine learning approaches

Supervised learning trains models using labeled datasets, where each input comes with a known correct output. The model compares its predictions with these labels and adjusts its parameters to reduce the error. The main limitation of this approach is the cost and time required to label data, especially at scale.

Unsupervised learning works with unlabeled data and focuses on uncovering structure rather than predicting explicit targets. Instead of being guided toward a specific answer, the model looks for patterns and relationships in the data. It is great for exploratory tasks like identifying customer segments in new markets or detecting unusual patterns in financial transactions or network activity.

Semi-supervised learning sits between supervised and unsupervised approaches. It uses a small amount of labeled data together with a much larger pool of unlabeled data. The labeled examples provide direction, while the unlabeled data helps the model generalize better. Because only a fraction of the data needs labels, this method requires far less annotation effort than fully supervised learning.

Reinforcement learning is focused on decision-making over time. An agent (the algorithm) interacts with an environment, takes actions, and receives feedback in the form of rewards or penalties. Instead of relying on fixed datasets and labels, reinforcement learning happens through trial and error.

Self-supervised learning can also be viewed as a bridge between supervised and unsupervised learning. Like unsupervised learning, it does not require labeled examples. Like supervised learning, however, it trains models to predict explicit targets, such as a missing word in a sentence or the next frame in a video. The difference is that these targets are generated from the data itself, which makes SSL especially useful when human-labeled data is scarce or unavailable.

Self-supervised learning methods

There are different self-supervised learning methods you can use to extract meaningful patterns from unlabeled data.

Autoencoding

Autoencoding is a technique where a neural network learns to compress input data into a compact representation (a code in what is called the latent space) and then reconstruct the original input from that code.

In traditional autoencoders, this compression results in a fixed vector that captures the essential patterns in the data while reducing redundancy. However, this basic form can be adapted in various ways to improve flexibility and make the learned representations more practical for specific tasks.
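To make the compress-then-reconstruct idea concrete, here is a minimal sketch in plain Python, assuming no ML framework. It trains a linear autoencoder with a one-dimensional latent code on synthetic 2-D points. The data, learning rate, initial weights, and function names are illustrative choices, not a standard API. Note that the training target is the input itself, so no labels are involved.

```python
import random

def make_data(n=50, seed=0):
    """Synthetic unlabeled 2-D points scattered around the line y = 2x."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        points.append((x, 2.0 * x + rng.gauss(0.0, 0.05)))
    return points

def reconstruct(params, point):
    """Encode a 2-D point into a 1-D latent code, then decode it back to 2-D."""
    w1, w2, v1, v2 = params
    z = w1 * point[0] + w2 * point[1]   # encoder (compression)
    return z, (v1 * z, v2 * z)          # decoder (reconstruction)

def reconstruction_error(params, points):
    """Mean squared error between each input and its reconstruction."""
    total = 0.0
    for x, y in points:
        _, (rx, ry) = reconstruct(params, (x, y))
        total += (rx - x) ** 2 + (ry - y) ** 2
    return total / len(points)

def train(points, init=(0.3, 0.3, 0.3, 0.3), lr=0.05, epochs=300):
    """Full-batch gradient descent on the reconstruction error.
    The input doubles as the target, so no labels are needed."""
    params = list(init)
    for _ in range(epochs):
        w1, w2, v1, v2 = params
        grads = [0.0, 0.0, 0.0, 0.0]
        for x, y in points:
            z = w1 * x + w2 * y
            ex, ey = v1 * z - x, v2 * z - y      # reconstruction errors
            dz = 2.0 * (ex * v1 + ey * v2)       # d(loss)/dz
            grads[0] += dz * x
            grads[1] += dz * y
            grads[2] += 2.0 * ex * z
            grads[3] += 2.0 * ey * z
        params = [p - lr * g / len(points) for p, g in zip(params, grads)]
    return params

points = make_data()
trained = train(points)
print(reconstruction_error([0.3, 0.3, 0.3, 0.3], points), reconstruction_error(trained, points))
```

Because the points lie close to a line, a single latent number per point is enough to reconstruct them almost exactly. A linear autoencoder like this one ends up learning the same subspace as principal component analysis; practical autoencoders add nonlinear layers to capture more complex structure.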

Denoising Autoencoders (DAEs): These are trained on partially corrupted data, where a portion of the input is randomly masked or distorted. The model learns to restore the original data, which helps it generalize better and works well for tasks like fixing noisy images or audio.

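Variational Autoencoders (VAEs): Unlike traditional autoencoders, which map each input to a single fixed vector, VAEs encode each input as a probability distribution over the latent space (typically a Gaussian with a learned mean and variance). Because training also pushes these distributions toward a simple prior (usually a standard normal), the model can create new, realistic data by sampling from the learned space. VAEs have been widely used for image generation, such as synthesizing new face images.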

Sequence-to-Sequence (Seq2Seq) Autoencoders: These use an encoder-decoder architecture to compress a sequence, such as a time series, speech, or text, into a latent representation and then reconstruct it. The same encoder-decoder design is widely used in applications like speech synthesis, machine translation, text summarization, and time-series forecasting.
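The two ingredients that set a VAE apart from a plain autoencoder can be sketched in a few lines, assuming the encoder outputs a mean and a log-variance for each latent dimension. The encoder and decoder networks themselves are omitted, and the input values are hypothetical. `sample_latent` uses the reparameterization trick, and `kl_to_standard_normal` is the closed-form penalty that keeps each encoded distribution close to a standard normal prior.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1).
    The randomness lives in eps, so gradients can flow through mu and log_var."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL divergence between N(mu, diag(exp(log_var))) and N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

def vae_loss(x, x_reconstructed, mu, log_var):
    """Squared reconstruction error plus the KL regularizer (one common formulation)."""
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_reconstructed))
    return reconstruction + kl_to_standard_normal(mu, log_var)

# Hypothetical encoder outputs for a single input with a 2-D latent space.
rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]
z = sample_latent(mu, log_var, rng)
penalty = kl_to_standard_normal(mu, log_var)
print(z, penalty)
```

To generate new data after training, the encoder is skipped entirely: sample `z` from a standard normal distribution and pass it through the decoder.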

Predictive learning (autoregression)

Autoregressive models use past data points to predict future values. They’re designed for any type of sequential data—like text, audio, or video—where the order of the information matters. In these models, the variable being predicted is typically the same as the input variable, which is why it’s called “autoregression.” Essentially, the model learns patterns and dependencies in the sequence, allowing it to forecast the next step based on prior observations.
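A classic, non-neural way to see autoregression is a first-order autoregressive model, AR(1), which predicts each value as a fixed multiple of the previous one. The sketch below is a minimal illustration on synthetic data, not how LLMs are built: it assumes a zero-mean series, fits that multiple by least squares, and forecasts by feeding each prediction back in as the next input.

```python
import random

def fit_ar1(series):
    """Least-squares estimate of phi in x[t] = phi * x[t-1] (zero-mean series, no intercept).
    Each value's training target is simply the next value in the same series."""
    previous, following = series[:-1], series[1:]
    return sum(a * b for a, b in zip(previous, following)) / sum(a * a for a in previous)

def forecast(series, phi, steps):
    """Roll the model forward: each prediction becomes the input for the next one."""
    predictions, last = [], series[-1]
    for _ in range(steps):
        last = phi * last
        predictions.append(last)
    return predictions

# Synthetic data generated with phi = 0.8 plus Gaussian noise.
rng = random.Random(42)
series = [0.0]
for _ in range(500):
    series.append(0.8 * series[-1] + rng.gauss(0.0, 1.0))

phi = fit_ar1(series)
print(round(phi, 3), forecast(series, phi, 3))
```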

A common example is the pretraining of LLMs such as GPT, LLaMA, or Claude. During training, the model sees the beginning of a text and predicts the next token (a word or part of a word), and the actual next token in the sequence serves as the target.
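The same idea can be sketched at toy scale. The example below assumes whitespace tokenization and uses bigram counts as a stand-in for a neural network: it turns raw sentences into (context, next token) pairs and then predicts the most frequent continuation. Real LLMs use subword tokenizers and condition on thousands of preceding tokens, not just the last one.

```python
from collections import Counter, defaultdict

def next_token_pairs(tokens):
    """Turn raw text into (context, target) pairs: every prefix predicts the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def train_bigram(corpus):
    """Count how often each token follows each other token (a one-token context window)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        for context, target in next_token_pairs(sentence.split()):
            counts[context[-1]][target] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequent continuation seen in training, or None for unseen tokens."""
    if token not in counts:
        return None
    return counts[token].most_common(1)[0][0]

corpus = ["the cat sat on the mat", "the cat ran", "a dog sat on the rug"]
model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat
```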

Contrastive learning

Contrastive learning trains models to distinguish between similar and dissimilar inputs. Each input, known as an anchor, is paired with a positive sample (similar) and negative samples (dissimilar). The model learns to bring the anchor closer to the positive samples in the representation space while pushing the negative samples farther away.
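One widely used way to turn this idea into a loss is the InfoNCE objective, which CPC and SimCLR use in variations. The minimal sketch below, in plain Python, scores an anchor against its positive and its negatives with cosine similarity and treats the positive as the correct answer in a softmax. The hand-written vectors stand in for encoder outputs, and the temperature of 0.1 is an illustrative value.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def info_nce(anchor, positive, negatives, temperature=0.1):
    """Negative log-probability that the anchor 'picks' its positive among all candidates."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    top = max(logits)  # subtract the max before exponentiating, for numerical stability
    log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum_exp - logits[0]

anchor = [1.0, 0.0]
aligned = info_nce(anchor, positive=[0.9, 0.1], negatives=[[0.0, 1.0], [-1.0, 0.0]])
misaligned = info_nce(anchor, positive=[0.0, 1.0], negatives=[[0.9, 0.1], [-1.0, 0.0]])
print(aligned, misaligned)
```

The loss is small when the anchor is closer to its positive than to every negative and large otherwise, so minimizing it pulls positives together and pushes negatives apart.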

There are several ways contrastive learning is applied in practice.

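SimCLR and MoCo create positive pairs by applying random augmentations to the same image (e.g., two different crops or color-shifted views) and treat views of other images as negative samples. The model learns to place augmented views of the same image close together while pushing views of different images apart. SimCLR draws its negatives from the other images in the same training batch, while MoCo keeps a queue of encoded samples from previous batches.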

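Contrastive predictive coding (CPC) predicts the representations of future parts of a sequence (e.g., upcoming audio segments or video frames) by learning to distinguish the true future from negative samples. Because the prediction happens in a compact representation space rather than on raw inputs, the model can focus on high-level structure and discard low-level noise.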

Instance discrimination treats every instance (e.g., each image in a dataset) as its own class. This helps the model recognize the core content of an instance, such as an object or scene, while remaining unaffected by simple transformations like rotation, cropping, or color changes.
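For the SimCLR-style recipe, positive pairs come from augmentation. The sketch below assumes a toy image represented as a 2-D list of pixel values and produces two views of the same image with a random crop and a random horizontal flip. Real pipelines typically add color distortion and blur, and the function names here are hypothetical.

```python
import random

def random_crop(image, size, rng):
    """Cut a random size x size window out of a 2-D grid of pixel values."""
    top = rng.randrange(len(image) - size + 1)
    left = rng.randrange(len(image[0]) - size + 1)
    return [row[left:left + size] for row in image[top:top + size]]

def random_flip(image, rng):
    """Mirror the image horizontally with probability 0.5."""
    return [row[::-1] for row in image] if rng.random() < 0.5 else image

def two_views(image, size, rng):
    """Two independently augmented views of one image form a positive pair."""
    return (random_flip(random_crop(image, size, rng), rng),
            random_flip(random_crop(image, size, rng), rng))

# A 4x4 toy "image" plus a second, different image. In SimCLR, the views of the
# other images in the same batch act as negatives for each positive pair.
image = [[4 * r + c for c in range(4)] for r in range(4)]
other_image = [[16 + v for v in row] for row in image]
rng = random.Random(0)
view_a, view_b = two_views(image, 3, rng)
batch = [two_views(img, 3, rng) for img in (image, other_image)]
```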

Noncontrastive learning

Noncontrastive learning trains the model using only pairs of similar inputs, called positive pairs. Instead of comparing data points to both similar and dissimilar examples (as in contrastive learning), the model minimizes the distance between the representations of each pair. At the same time, it must keep the representations of different inputs varied enough to avoid a trivial solution, known as collapse, in which every input is mapped to the same value.
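Different noncontrastive methods prevent collapse in different ways. The minimal sketch below is loosely modeled on two of the terms in VICReg: an invariance term that pulls each positive pair together, and a variance term that penalizes any embedding dimension whose spread across the batch falls below a target. VICReg's third (covariance) term and its loss weighting are omitted, and the toy embeddings are hypothetical encoder outputs.

```python
import math

def invariance_loss(z_a, z_b):
    """Mean squared distance between the embeddings of each positive pair."""
    n, d = len(z_a), len(z_a[0])
    return sum((a - b) ** 2 for ua, ub in zip(z_a, z_b) for a, b in zip(ua, ub)) / (n * d)

def variance_loss(z, target_std=1.0, eps=1e-4):
    """Hinge penalty when a dimension's standard deviation across the batch
    (batch size >= 2) drops below target_std. Collapsed outputs are penalized most."""
    n, d = len(z), len(z[0])
    penalty = 0.0
    for j in range(d):
        column = [row[j] for row in z]
        mean = sum(column) / n
        var = sum((x - mean) ** 2 for x in column) / (n - 1)
        penalty += max(0.0, target_std - math.sqrt(var + eps))
    return penalty / d

def noncontrastive_loss(z_a, z_b, var_weight=1.0):
    """Pull positive pairs together while keeping each branch's embeddings spread out."""
    return invariance_loss(z_a, z_b) + var_weight * (variance_loss(z_a) + variance_loss(z_b))

collapsed = [[0.5, 0.5] for _ in range(4)]   # every input mapped to the same point
spread = [[-1.5, 1.5], [-0.5, 0.5], [0.5, -0.5], [1.5, -1.5]]
print(noncontrastive_loss(collapsed, collapsed), noncontrastive_loss(spread, spread))
```

Both batches have zero invariance loss because each pair matches perfectly, but only the variance term exposes the collapsed solution. Methods such as BYOL and SimSiam avoid collapse differently, by adding a predictor network and not back-propagating through one of the two branches.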

These methods are often simpler and more memory-efficient than contrastive techniques, as they do not require maintaining a large set of negative samples. Despite their simplicity, noncontrastive approaches can still produce robust and useful features for a wide range of tasks, including image classification, object detection, and semantic segmentation.

How self-supervised learning works

The exact steps in self-supervised learning can differ depending on the data type, model architecture, and task at hand. Having said that, here’s an overview of what the typical process looks like.

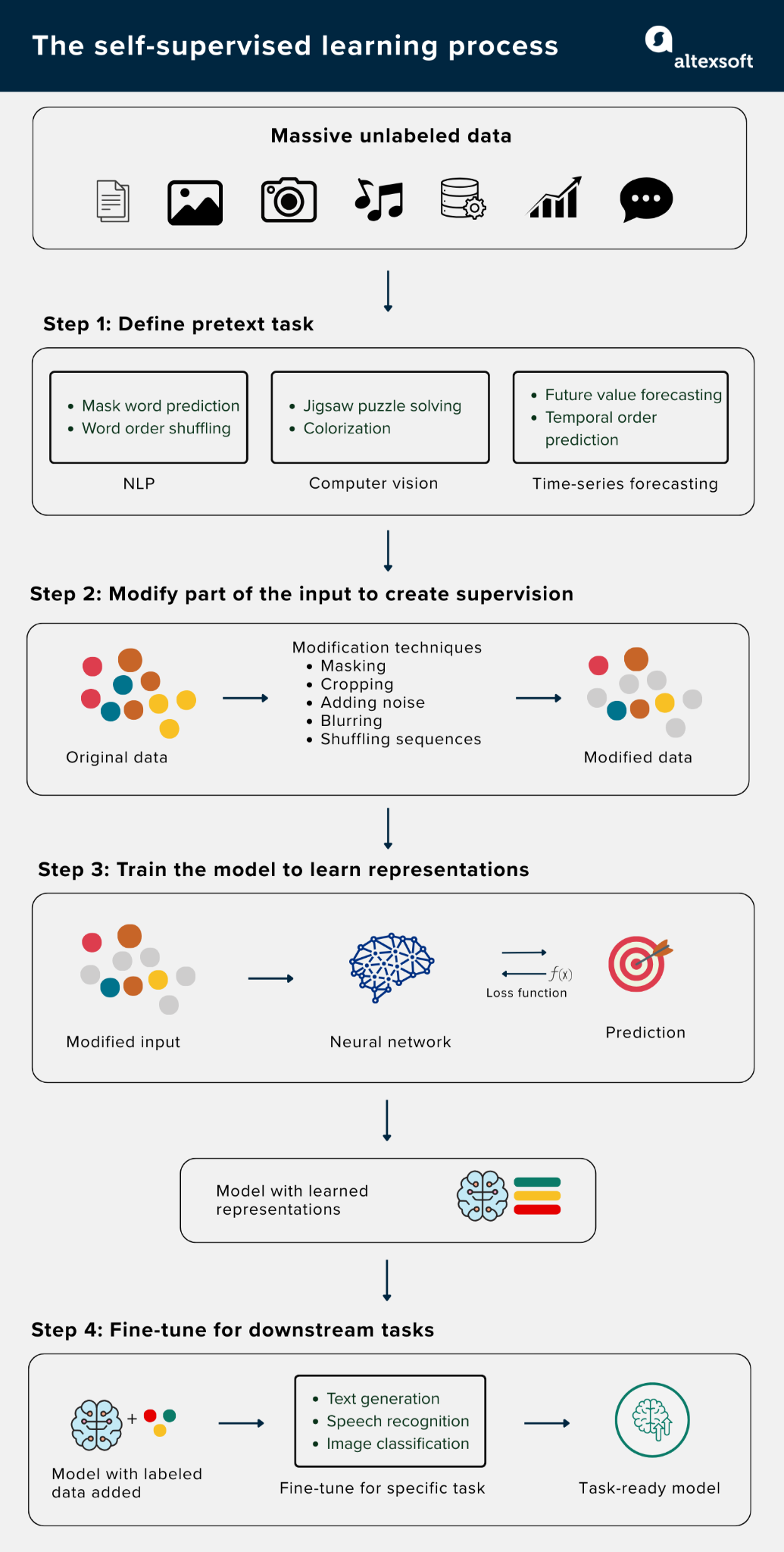

Step 1: Define a learning task from the data

The first step in self-supervised learning is to define a pretext task: an auxiliary task whose purpose is to make the model learn useful representations. Humans choose the type of task (e.g., completing a sequence), but the targets come from the data's inherent structure rather than from predefined labels.

The pretext task will vary depending on the approach (autoregression, autoencoding, etc.) and the domain you’re dealing with.

In natural language processing (NLP), the pretext task may involve predicting masked words (autoencoding) or the next word (autoregression) in a sequence. In computer vision, the task can be reconstructing hidden parts of an image (autoencoding) or determining whether two augmented pictures come from the same source (contrastive learning).

Step 2: Modify inputs to create supervision

The next step is to modify the input data so that a prediction is possible. This modification could involve hiding, removing, shuffling, or transforming parts of the data. The model is then trained on this modified version of the data and tasked with recovering or relating it to the original data.

In text, this often means masking or replacing words. In images, common transformations include cropping, blurring, color shifts, or masking large patches. For audio or time series data, segments might be skipped, shifted in time, or otherwise modified.
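For text, a common recipe is the one introduced with BERT: pick about 15% of token positions as prediction targets, replace 80% of those with a special [MASK] token, 10% with a random token, and leave 10% unchanged. The sketch below follows that recipe loosely (it samples each position independently and uses whitespace tokens); the vocabulary, the higher masking rate in the usage line, and the function name are illustrative.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Return (corrupted tokens, {position: original token}) for a masked-prediction task."""
    corrupted, targets = list(tokens), {}
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                          # position not selected: no target here
        targets[i] = token                    # the original token becomes the label
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: hide the token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: swap in a random token
        # remaining 10%: keep the token, but the model must still predict it
    return corrupted, targets

tokens = "the quick brown fox jumps over the lazy dog".split()
vocab = sorted(set(tokens))
corrupted, targets = mask_tokens(tokens, vocab, random.Random(0), mask_prob=0.3)
print(corrupted, targets)
```

The model only sees `corrupted` and is scored on how well it recovers the tokens in `targets`, so the labels come straight from the original text.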

Step 3: Train the model to learn representations

Once the pretext task and data modifications are set up, the model is trained much like in supervised learning: it makes predictions, a loss function measures the error (the difference between its output and the target derived from the data), and the model updates its parameters to reduce that error.
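As a minimal sketch of that loop, assume a toy pretext task in plain Python: each value of an unlabeled sine wave is hidden, a two-parameter linear model predicts it from its two neighbors, and gradient descent reduces the squared error. The task, model, and hyperparameters are illustrative.

```python
import math

def make_windows(series):
    """Pretext data: the middle value of each 3-value window is hidden and becomes the target."""
    return [((series[i - 1], series[i + 1]), series[i]) for i in range(1, len(series) - 1)]

def train_fill_in(windows, lr=0.1, epochs=200):
    """Full-batch gradient descent on the mean squared error of a linear fill-in model."""
    a, b = 0.0, 0.0                              # model parameters
    history = []
    for _ in range(epochs):
        grad_a = grad_b = loss = 0.0
        for (left, right), target in windows:
            pred = a * left + b * right          # 1. make a prediction
            err = pred - target
            loss += err * err                    # 2. the loss measures the error
            grad_a += 2 * err * left
            grad_b += 2 * err * right
        n = len(windows)
        a -= lr * grad_a / n                     # 3. update parameters to reduce the loss
        b -= lr * grad_b / n
        history.append(loss / n)
    return (a, b), history

series = [math.sin(0.3 * t) for t in range(100)]
(a, b), history = train_fill_in(make_windows(series))
print(round(a, 3), round(b, 3), history[0], history[-1])
```

For a pure sine wave, the best weights are exactly 1 / (2 * cos(0.3)), about 0.52 each, because sin(x - h) + sin(x + h) = 2 * cos(h) * sin(x). The loop should land close to that value without ever seeing a human-provided label.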

Step 4: Apply the model to downstream tasks (transfer learning)

Once training is complete, the model can be used as a feature extractor or a pretrained backbone. The pretext task is no longer needed, but the useful representations the model has learned can be applied to classification, detection, retrieval, regression, or segmentation.

To apply the model to these downstream tasks, it is typically fine-tuned on a small labeled dataset. Since the model has already learned useful patterns, fine-tuning is faster and requires less labeled data compared to training from scratch.
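A common lightweight variant of this step is a linear probe: the pretrained backbone is frozen and only a small classifier on top is trained with the labeled data (full fine-tuning would also update the backbone). The sketch below assumes a hypothetical `frozen_encoder` standing in for an SSL-pretrained model and trains a logistic-regression classifier on four labeled examples; all data and names are illustrative.

```python
import math

def frozen_encoder(x):
    """Hypothetical stand-in for a pretrained SSL backbone: raw input -> feature vector.
    Its weights are never updated below; only the classifier on top is trained."""
    return [x[0] + x[1], x[0] - x[1]]

def train_linear_probe(examples, lr=0.5, epochs=500):
    """Logistic regression on frozen features, trained with per-example gradient steps."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in examples:
            f = frozen_encoder(x)
            p = 1.0 / (1.0 + math.exp(-(w[0] * f[0] + w[1] * f[1] + b)))
            err = p - label                  # gradient of cross-entropy w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(w, b, x):
    f = frozen_encoder(x)
    return 1 if w[0] * f[0] + w[1] * f[1] + b > 0 else 0

# A tiny labeled dataset: the downstream task only needs a handful of examples.
labeled = [((1.0, 0.8), 1), ((0.9, 1.2), 1), ((-1.0, -0.7), 0), ((-0.8, -1.1), 0)]
w, b = train_linear_probe(labeled)
print([predict(w, b, x) for x, _ in labeled])
```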

Use cases of self-supervised learning

Self-supervised learning’s ability to learn strong representations from raw data makes it a good fit for many real-world ML systems, especially at scale.

Meta: Expanding hate speech detection in different languages

Meta has developed and used self‑supervised, cross‑lingual language models to improve multilingual language understanding and harmful content detection. One early example is XLM, a cross‑lingual SSL model that helped extend hate speech detection across languages. Meta later introduced XLM‑R (XLM‑RoBERTa), an improved model that provides stronger performance on multilingual classification and moderation tasks across platforms like Facebook and Instagram.

These models are trained on large amounts of unlabeled text and can transfer knowledge across languages, enabling better accuracy in less widely spoken languages compared to traditional supervised approaches.

Google: Fine-tuning AI for diverse medical imaging tasks

Google’s REMEDIS (Robust and Efficient MEDical Imaging with Self‑supervision) is a unified representation learning framework designed to improve robustness and data efficiency in medical imaging AI. Instead of training separate models for each task or hospital setting, REMEDIS integrates large‑scale supervised pretraining on natural (nonmedical) images with SSL on unlabeled medical images to produce versatile feature representations that can be fine‑tuned for diverse clinical tasks.

In evaluations across multiple imaging domains—including chest radiography, dermatology, mammography, pathology, and more—REMEDIS demonstrated a 3-100 times reduction in the amount of annotated training data required, compared to traditional supervised methods.

Amazon: Deriving insights from satellite images

Amazon has shown how DINO, a self-supervised computer vision method originally developed by Meta AI (then Facebook AI Research), can be trained on Amazon SageMaker using large sets of satellite images from around the world. These images cover different types of land, such as forests and wetlands, which are used to evaluate the model's accuracy.

Satellite and aerial images provide insight into a wide range of problems, including precision agriculture, insurance risk assessment, urban development, and disaster response. However, training machine learning models to interpret this data is often bottlenecked by the costly, time-consuming process of human annotation.

The DINO system learns patterns from these satellite images without needing labeled data to start with. After pretraining, it can be used for tasks like identifying land types (e.g., arable land, forests, wetlands) by adding a simple classifier.

Challenges to implementing self-supervised learning

While self-supervised learning solves the label scarcity problem, it introduces a different set of challenges that you should be aware of. Here are some of them.

Difficulties in selecting the right pretext task. The model’s learning depends on the task it’s given. If it’s too easy, the model takes shortcuts that don’t help downstream tasks. If it’s too hard or misaligned, training can fail. Finding the right setup usually takes several iterations and domain knowledge.

High computational cost. Training often requires massive datasets and substantial compute. Some methods need large batches or memory banks, while others need long training runs to converge. This makes experimentation costly.

Hard evaluation and debugging. Without labels, it’s tricky to know if a model is actually learning useful features. Performance usually has to be checked by fine-tuning on downstream tasks, which slows feedback and debugging.

Sensitivity to data quality. Models can absorb noise or bias from messy or skewed raw data, which can lead them to learn patterns that aren’t actually meaningful. These problems might only become obvious once the model is applied to downstream tasks.

When to use and not use self-supervised learning

Self-supervised learning is not a default choice for every machine learning problem. It works best under specific conditions and can be a poor fit in others. Understanding these boundaries is important before committing time and resources to it.

When self-supervised learning makes sense

Self-supervised learning is a strong option when you have large volumes of raw, unlabeled data but labeling it is expensive. This is common in domains where data collection is largely automated (such as sensor networks, online platforms, satellite systems, or autonomous machines) but annotations require input from domain experts, which makes large-scale labeling slow and costly.

It also makes sense when the goal is to build reusable representations rather than solve a single, narrow task, since SSL models are often trained once and reused in multiple downstream problems. It's particularly useful for teams working on evolving products or research pipelines where the task definitions change over time.

Self-supervised learning is also a good fit when generalization matters more than short-term accuracy. Models trained this way tend to learn broader patterns instead of overfitting to a specific labeled dataset. As a result, they are often more robust when the data changes or when they are deployed in new environments.

When self-supervised learning may not be the right choice

Self-supervised learning is unnecessary when large volumes of high-quality labeled data are already available. In such cases, supervised learning is usually simpler, faster to train, easier to evaluate, and more predictable in performance.

It is also a poor fit when the dataset is small. Self-supervised learning relies on large amounts of data to be effective. With limited data, the model may not learn useful representations, and the extra training stage may provide little benefit over simply training the model for the specific task.

Self-supervised learning can also be the wrong choice when your computing and experimentation budgets are tight. Training these models often requires long runs, large batches, or repeated trials to get the setup right. Simpler methods may be more practical if you’re under strict time or resource constraints.

With a software engineering background, Nefe demystifies technology-specific topics—such as web development, cloud computing, and data science—for readers of all levels.
