Imagine sitting in a foreign café, hungry, checking out the menu for a tasty bite to eat. Unfortunately, but not surprisingly, the menu’s, say, in Portuguese and you only speak English. The good news is the menu has a photo of each dish. So, you look at the pictures, recognize the dishes offered, and place your order.

While learning by example comes naturally to us humans, it's not that easy for machines and software applications. To recognize even a single object, they must learn its distinguishing features from a large number of images taken from various angles – a complex process that takes a lot of time and effort.

Enabling computers to understand the content of digital images is the goal of computer vision (CV). Machine learning specialist Jason Brownlee points out that computer vision typically involves developing methods that attempt to reproduce the capability of human vision.

Let's get back to our food ordering situation. If a computer had to solve this problem, it could use its image recognition capability.

What is image recognition and computer vision?

Image recognition (or image classification) is the task of categorizing images and objects and placing them into one of several predefined distinct classes. Solutions with image recognition capability can answer the question “What does the image depict?” For example, they can distinguish between handwritten digits, a person and a telephone pole, a landscape and a portrait, or a cat and a dog (a frequent example).

Image recognition is one of the problems being solved within the computer vision field.

Computer vision is a broader set of practices that solve such issues as:

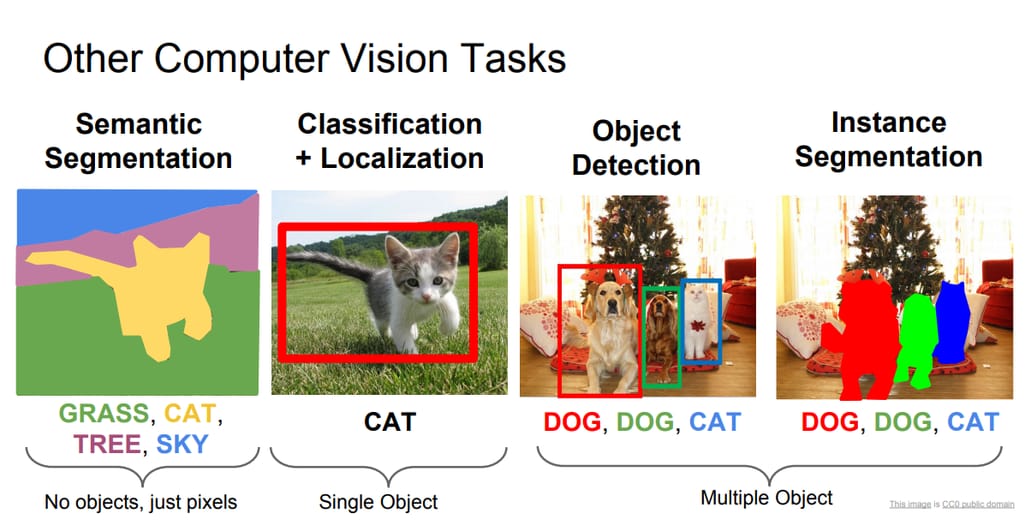

- Image classification with localization – identifying an object in the image and showing where it’s located by drawing a bounding box around it.

- Object detection – assigning label classes to multiple objects in the image and showing the location of each of them with bounding boxes, a variation of image classification with localization tasks for numerous objects.

- Object (semantic) segmentation – identifying specific pixels that belong to each object in an image.

- Instance segmentation – harder than semantic segmentation because it also differentiates multiple objects (instances) belonging to the same class (for example, each individual dog in a photo of several dogs).
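
To make the difference between these tasks more concrete, here is a small Python sketch of what each one might return for a tiny image showing two dogs side by side. The `Box` class, the label strings, and the `dog#1`/`dog#2` instance IDs are all hypothetical illustrations and don't mirror the response format of any particular API.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical bounding box in pixel coordinates."""
    x: int
    y: int
    width: int
    height: int


# Image classification: one label for the whole image.
classification = "dog"

# Classification with localization: one label plus one bounding box.
localization = ("dog", Box(x=0, y=0, width=2, height=2))

# Object detection: a label and a box for every object found.
detection = [
    ("dog", Box(x=0, y=0, width=2, height=2)),
    ("dog", Box(x=2, y=0, width=2, height=2)),
]

# Semantic segmentation: a class for every pixel (here a 2x4 grid).
semantic_mask = [
    ["dog", "dog", "dog", "dog"],
    ["dog", "dog", "dog", "dog"],
]

# Instance segmentation: every pixel also says which dog it belongs to.
instance_mask = [
    ["dog#1", "dog#1", "dog#2", "dog#2"],
    ["dog#1", "dog#1", "dog#2", "dog#2"],
]


def distinct_labels(mask):
    """Return the set of different labels that appear in a pixel mask."""
    return {pixel for row in mask for pixel in row}


print(len(distinct_labels(semantic_mask)))  # both dogs share one class label
print(len(distinct_labels(instance_mask)))  # each dog keeps its own label
```

The two masks cover exactly the same pixels; only the instance mask keeps the two dogs apart.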


This slide from the lecture on detection and segmentation helps us understand the difference between computer vision tasks:

Different computer vision problems. Source: Stanford Lecture Slides. Lecture 11: Detection and Segmentation

With the basics in mind, let’s explore off-the-shelf APIs and solutions you can use to integrate visual data analysis into your new or existing product.

Image recognition APIs: features and pricing

Computer vision products are usually one of the features customers can access through MLaaS platforms. MLaaS stands for machine learning as a service – cloud-based platforms providing tools for data preprocessing, model training, and evaluation, as well as analysis of visual, textual, audio, video data, or speech. MLaaS platforms are developed for both seasoned data scientists and those with minimal expertise. The platforms can be integrated with cloud storage solutions.

Providers offer various features for visual data processing, which address use cases typical for given industries. Image classification, object detection, visual product search, processing of documents with printed or handwritten text, medical image analysis – these and other tasks are available on a pay-as-you-go basis in most cases.

Let’s review some of them, focusing on two main aspects:

1) types of entities that these systems can recognize

2) pricing.

Google: Cloud Vision and AutoML APIs for solving various computer vision tasks

Google provides two computer vision products through Google Cloud via REST and RPC APIs: Vision API and AutoML Vision.

Cloud Vision API enables developers to integrate such CV features as object detection, explicit content detection, optical character recognition (OCR), and image labeling (annotation).

You can detect:

Faces and facial landmarks. You can identify face landmarks (e.g., eyes, nose, mouth) and get confidence ratings for face and image properties (e.g., joy, surprise, sorrow, anger). Individual face recognition isn’t supported.

Entities (labels). With the Vision API, you can detect and extract information about entities in an image, across a broad group of categories. Labels can represent general objects, products, locations, animal species, activities, etc. The API supports English labels, but you can use Cloud Translation API to translate them into other languages.

Logos. Identify the features of popular product logos.

Optical character recognition (OCR). Detect printed and handwritten text in images and PDF or TIFF files.

Popular landmarks. The landmark detection feature allows for detecting natural and man-made structures within an image.

Explicit content. The API evaluates content against five categories – adult, spoof, violence, medical, and racy. It also returns the likelihood of each being present in an image.

Web references. The API returns web references to an image, such as a description, entity ID, fully matching images, pages with matching images, visually similar images, and best guess labels.

Image properties. This identifies characteristics like a dominant color.
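
As a rough illustration of how several of these features can be requested at once, the sketch below builds the JSON body for the Vision API's `images:annotate` REST method. The helper name `build_annotate_request` and the sample bytes are our own placeholders. The request is only constructed here, not sent, because a real call also needs an API key or OAuth credentials.

```python
import base64
import json

# REST endpoint of the Vision API's annotate method.
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def build_annotate_request(image_bytes, feature_types, max_results=10):
    """Build the JSON body asking for several detection features on one image."""
    return {
        "requests": [
            {
                # Image content is sent as a base64-encoded string.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [
                    {"type": feature, "maxResults": max_results}
                    for feature in feature_types
                ],
            }
        ]
    }


# Placeholder bytes stand in for a real image file read in binary mode.
body = build_annotate_request(
    b"placeholder image bytes",
    ["LABEL_DETECTION", "SAFE_SEARCH_DETECTION", "IMAGE_PROPERTIES"],
)
print(json.dumps(body, indent=2))
# To run the analysis, POST this JSON to VISION_ENDPOINT with your credentials.
```

Each entry in `features` counts as a separate billable unit, so it pays to request only the features you need.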

AutoML Vision is another Google product for computer vision that allows for training ML models to classify images according to custom labels. You can upload labeled images directly from the computer. If images aren’t annotated but located in folders for each label, the tool will assign those labels automatically. Users can get their dataset annotated by human operators. The product is currently in beta.

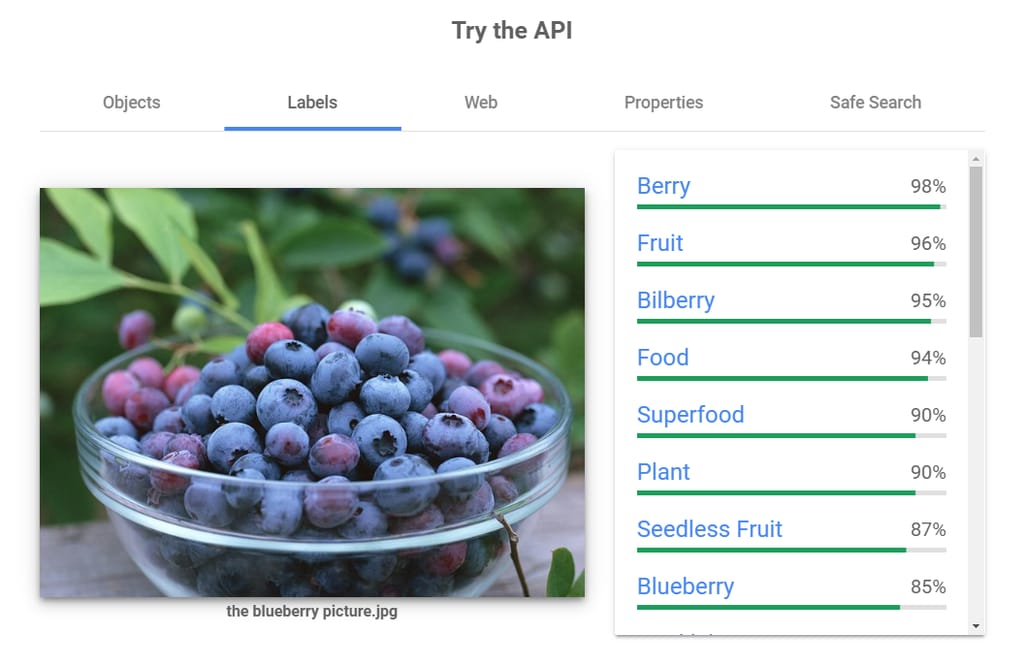

Google allows users to see how the API analyzes an image of their choice:

The API analyzes an image against five categories. Picture source: Wallcoo.net

Pricing. Vision API users are charged per billable unit, that is, per feature applied to an image. The first 1,000 units per month are free. Units 1,001–5,000,000 per month cost from $1.50 to $3.50 per 1,000 units, depending on the feature. Units 5,000,001–20,000,000 per month cost $1.00 per 1,000 units for label detection and $0.60 per 1,000 units for the rest of the features. You can check their price calculator.

AutoML Vision pricing depends on the feature used. For example, prices for image classification depend on the amount of training required ($20 per hour), the amount of human labeling requested, the number of images, and the prediction type (online or batch). Online prediction is billable after the first 1,000 images. Analyzing 1,001–5,000,000 images costs $3 per 1,000 images. If you choose batch prediction, the first node hour is free (one time per account), and each subsequent node hour costs $2.02.
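
To see how billable units add up for the Vision API, consider this back-of-the-envelope sketch. It rests on several assumptions of ours: prices are quoted per 1,000 units, the free 1,000 units apply to each feature separately, and the per-feature rates passed in are illustrative values within the $1.50–$3.50 range rather than an official price list. It only covers workloads of up to 5,000,000 units per feature.

```python
FREE_UNITS_PER_MONTH = 1_000      # free allowance mentioned above
FIRST_BAND_LIMIT = 5_000_000      # upper bound of the first paid band


def vision_monthly_cost(num_images, rate_per_1000_by_feature):
    """Estimate the monthly bill when every listed feature runs on every image."""
    total = 0.0
    for feature, rate_per_1000 in rate_per_1000_by_feature.items():
        units = num_images  # one billable unit per feature per image
        if units > FIRST_BAND_LIMIT:
            raise ValueError(f"{feature}: this sketch covers the first paid band only")
        # Assumption: the free allowance is applied per feature.
        paid_units = max(0, units - FREE_UNITS_PER_MONTH)
        total += paid_units / 1000 * rate_per_1000
    return round(total, 2)


# Hypothetical rates (USD per 1,000 units) chosen from the quoted range.
rates = {"LABEL_DETECTION": 1.50, "FACE_DETECTION": 1.50}
print(vision_monthly_cost(10_000, rates))
```

With two features applied to 10,000 images, 9,000 units per feature are billable, which is why the total grows with the number of features and not only with the number of images.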

Amazon Rekognition: integrating image and video analysis without ML expertise

Amazon Rekognition allows for embedding image and video analysis into applications. The service is based on the same technology used to analyze image and video data for the Amazon Photos service. Users aren’t required to have machine learning expertise.

The Rekognition API lets you do the following:

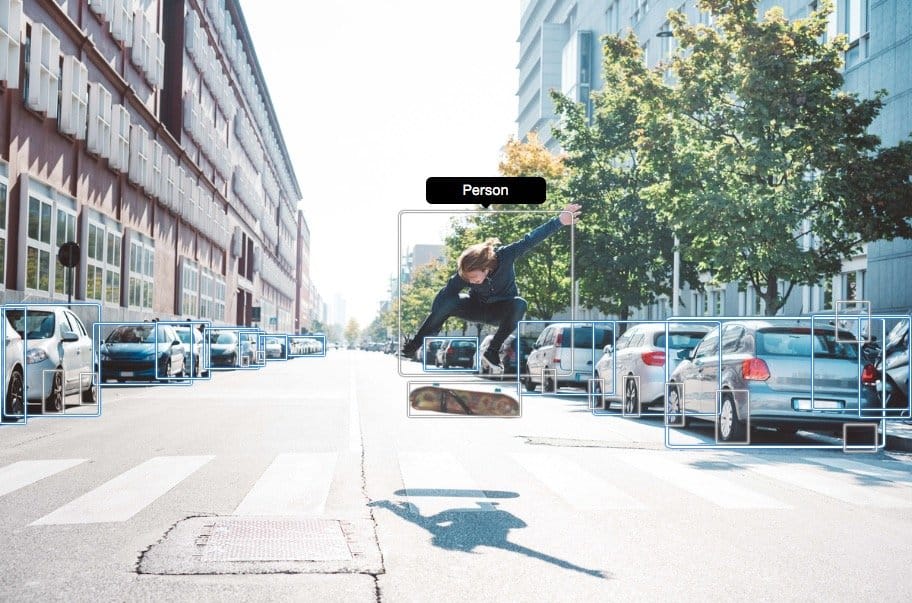

Recognize entities, objects, activities. Detect labels – objects (e.g., people, cars, furniture, clothes, pets), scenes (e.g., woods, a beach, a city street), concepts (e.g., outdoors), and activities (e.g., playing soccer, skating).

Recognize and analyze faces. You can detect a person in a photo or video, detect facial landmarks, expressed emotion, get a percent confidence score for the detected face and its attributes, and save facial metadata. You can also compare a face in an image with faces detected in another image.

Recognize celebrities. You can identify famous people in video and images.

Capture movements. The service allows you to track the path people take in a video, their location, and detect their facial landmarks.

Detect unsafe content. Amazon Rekognition identifies explicit nudity, suggestive content (underwear or swimwear), violence (e.g., weapons), and disturbing scenes (e.g., corpses, hanging).

Detect text in images. Detect and recognize text, such as captions, street names, product names, and license plates.

Detection of multiple objects in an image. Source: Amazon Rekognition documentation
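
The label detection feature described above could be called from Python roughly as follows. This is a minimal sketch: `top_labels` is our own helper name, and the client is passed in as a parameter so the function can be tried without AWS credentials. In a real application, the client would typically be created with `boto3.client("rekognition")`.

```python
def top_labels(client, image_bytes, max_labels=10, min_confidence=80.0):
    """Return (label name, confidence) pairs for the objects found in an image."""
    response = client.detect_labels(
        Image={"Bytes": image_bytes},   # raw bytes of a JPEG or PNG file
        MaxLabels=max_labels,           # cap on the number of labels returned
        MinConfidence=min_confidence,   # drop labels below this confidence (percent)
    )
    return [(label["Name"], label["Confidence"]) for label in response["Labels"]]


# Example usage (assumes boto3 is installed and AWS credentials are configured):
#
#   import boto3
#   client = boto3.client("rekognition", region_name="us-east-1")
#   with open("street.jpg", "rb") as image_file:   # hypothetical file name
#       print(top_labels(client, image_file.read()))
```

Raising `min_confidence` trims the list to labels the service is more certain about, at the cost of missing less obvious objects.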

Pricing. Amazon has a free tier for its recognition services. Users pay for the number of media files they analyze. Pricing also depends on the region, so customers from Ireland and Northern Virginia, for instance, would pay slightly different sums. You can use the pricing page to get a quote.

Users can analyze 1,000 minutes of video and 5,000 images, and store metadata for up to 1,000 faces, for free per month during the first year.

Below we provide costs for Northern Virginia (US East) customers as an example.

Beyond the free tier, analyzing archived video costs $0.10 per minute (billed per second); live stream video analysis is $0.12 per minute. Storage of face metadata is $0.01 per month per 1,000 records.

Image analysis pricing decreases as the number of images grows. The first 1 million images processed per month cost $1.00 per 1,000 images, the next 9 million cost $0.80, and the next 90 million cost $0.60. If your workload is over 100 million images per month, you’d pay $0.40 per 1,000 images beyond that point.
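
The volume discount above works like graduated tiers: each price applies only to the images that fall inside its band. Here is a small sketch of that arithmetic, assuming the quoted prices are per 1,000 images, using the Northern Virginia rates listed above, and ignoring the free tier. The function name is our own.

```python
# (band size in images, USD per 1,000 images); None marks the open-ended last band.
IMAGE_TIERS = [
    (1_000_000, 1.00),
    (9_000_000, 0.80),
    (90_000_000, 0.60),
    (None, 0.40),
]


def rekognition_image_cost(num_images):
    """Estimate the monthly image analysis cost using graduated tiers."""
    remaining = num_images
    cost = 0.0
    for band_size, rate_per_1000 in IMAGE_TIERS:
        # Only the images that fit into this band are charged at its rate.
        in_band = remaining if band_size is None else min(remaining, band_size)
        cost += in_band / 1000 * rate_per_1000
        remaining -= in_band
        if remaining == 0:
            break
    return round(cost, 2)


for workload in (500_000, 3_000_000, 120_000_000):
    print(workload, rekognition_image_cost(workload))
```

For 3 million images, the first million is charged at $1.00 per 1,000 and the remaining two million at $0.80 per 1,000, so the lower rate never applies retroactively to the whole workload.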

IBM Watson Visual Recognition: using off-the-shelf models for multiple use cases or developing custom ones

IBM provides the Watson Visual Recognition service on the IBM Cloud, which relies on deep learning algorithms to analyze images for scenes, objects, and other content.

Users can build, train, and test custom models within or outside of the Watson Studio.

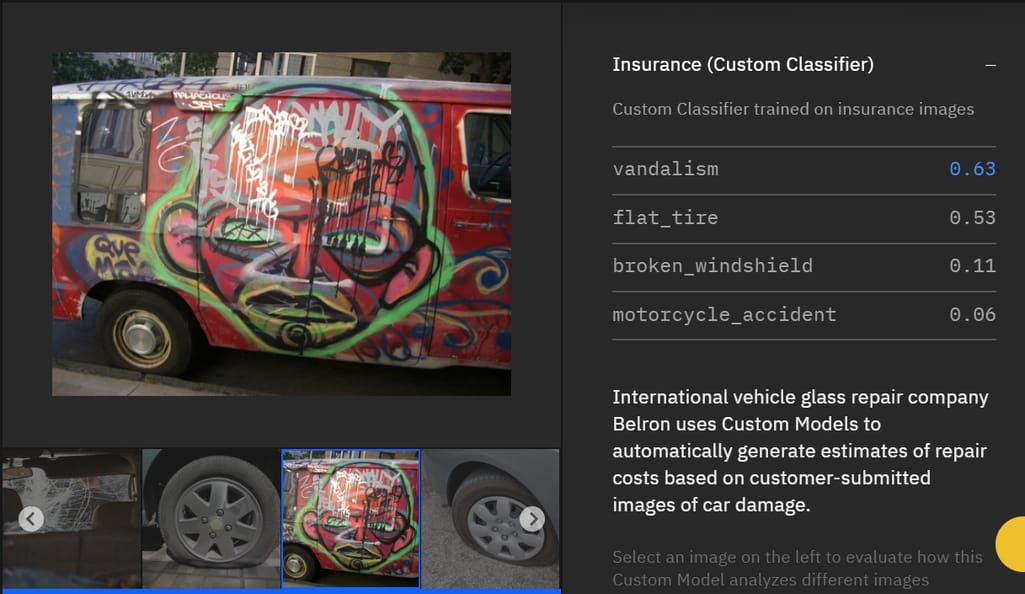

The demo of a custom model by vehicle glass repair company Belron. Source: IBM

Another feature available in beta enables users to train object detection models.

Pre-trained models include:

General model – provides default classification from thousands of classes

Explicit model – defines whether an image is inappropriate for general use

Food model – identifies food items in images

Text model – extracts text from natural scene images.

Also, developers can include custom models in iOS apps with Core ML APIs and work in a cloud collaborative environment with Notebooks in Watson Studio.

Pricing. IBM offers two pricing plans – Lite and Standard.

Lite: Users can analyze 1,000 images per month with custom and pre-trained models for free and create and retrain two free custom models. The provider also offers Core ML exports as a special promotional offer.

Standard: Image classification and custom image classification cost $0.002 per image, and training a custom model costs $0.10 per image. Free Core ML exports are also included in the plan.

Microsoft: processing of images, videos, and digital documents

Microsoft Azure Cloud users have a variety of features to choose from among Microsoft’s Cognitive Services. Vision services are classified into six groups that cover image and video analysis, face detection, written and printed text recognition and extraction. The APIs are RESTful.

Here is a short list of Microsoft Cognitive Services features:

Face detection. Detect up to 100 people in one image with their location, identifying attributes like age, gender, emotion, head pose, smile, makeup, or facial hair. Detect 27 landmarks for each face (Face API).

Adult content detection. With Computer Vision API, detect whether an image is pornographic or suggestive.

Brand recognition. Detect brands within an image, including their approximate location (Computer Vision API). The feature is only available in English.

Landmark detection. Identify landmarks if they are detected in the image (Computer Vision API).

Celebrity recognition. Recognize celebrities if they are present in an image (Computer Vision API).

Image properties definition. Define the image’s accent color, dominant color, and whether it’s black and white (Computer Vision API).

Image content description and categorization. Describe the image content with a complete sentence and categorize content (Computer Vision API).

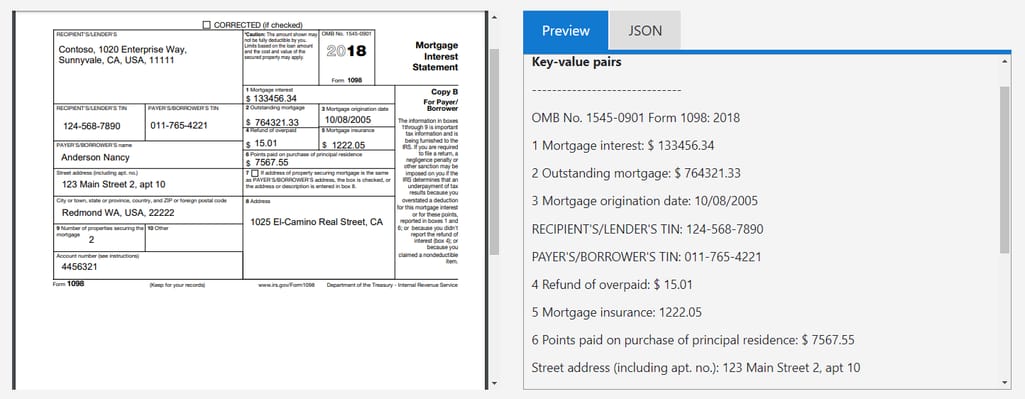

Information extraction from documents. Extract text, key/value pairs, and tables from documents, receipts, and forms (the Form Recognizer service).

Text recognition. Recognize digital handwriting, common polygon shapes and layout coordinates of inked documents (the Ink Recognizer service).

The demo with a digital document processed with Form Recognizer. Source: Microsoft Azure

Pricing. The cost of services depends on the API used, the region, and the number of transactions (not API calls). For example, if you make up to 1 million transactions with the Face API, it will cost you $1 per 1,000 transactions. Making over 100 million transactions would cost $0.40 per 1,000 transactions. Detecting adult content with the Computer Vision API costs $1.50 per 1,000 transactions for up to 1 million transactions and $0.65 per 1,000 transactions for 100 million or more.

Clarifai: custom-built and pre-built models tailored for different business needs

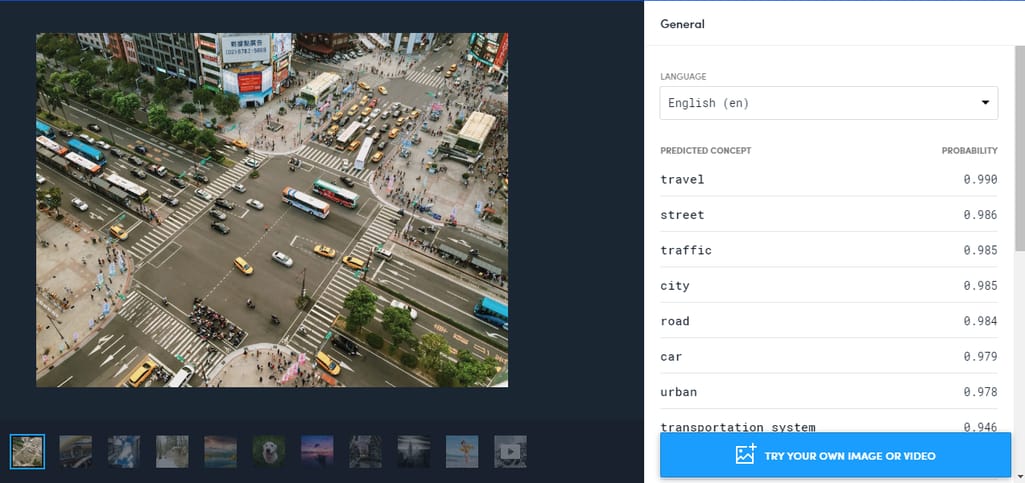

Clarifai has developed 14 pre-built computer vision models for recognizing visual data. The service is accessible through the Clarifai API. The provider emphasizes the simplicity of using its computer vision service: You send inputs (an image or video) to the service and it returns predictions. The type of predictions depends on the model you run.

Each of the pre-built models identifies given image properties and contained concepts. With off-the-shelf models, you can, for instance:

- identify clothing, accessories, and other items typical for the fashion industry

- detect dominant colors present in the image

- recognize celebrities

- recognize more than 1000 food items down to the ingredient level

- detect NSFW (Not Safe For Work) content and unwanted content (NSFW and Moderation models)

- detect faces and their location, as well as predict their attributes like gender, age, descent.

The demo of the General model. Source: Clarifai

The company also considers peculiarities of such businesses as travel and hospitality and wedding planning by providing models that “see” related concepts. Training models based on specific images and concepts is also available.

Pricing. Clarifai’s pricing is also usage-based. Customers have three pricing plans to choose from – Community, Essential (pay as you go and monthly invoice), and Enterprise & Public Sector (pricing is available on demand). Plan services include machine learning operations, hosting, consultation, mobile SDKs, infrastructure, and more.

Community: includes 5,000 free operations, 10 free custom concepts, 10,000 free input images, among other features.

Essential: Users can train custom models for $1.20 per 1,000 model versions. Predictions with pre-built models cost $1.20 per 1,000 operations. Predictions with custom models are $3.20 per 1,000 operations. Search for images costs $1.20 per 1,000 operations; adding or editing input images costs $1.20 per 1,000 operations.

Zebra Medical Vision: medical image analysis tools for radiologists

Specialists from the healthcare sector aren’t left without image recognition tools either. Zebra Medical Vision provides solutions for analyzing medical images – computerized tomography and X-ray scans – in real time. The company notes it uses a proprietary database of millions of imaging scans, along with machine and deep learning tools, to develop the software for managing radiologists’ workflows. There are three solutions focused on identifying specific conditions and one for flagging and prioritizing cases. One can detect brain, cardiovascular, lung, liver, and bone disease in CT scans, 40 different conditions in X-ray scans, and breast cancer in 2D mammograms. Zebra Medical Vision is HIPAA and GDPR compliant.

Pricing. Zebra’s AI1 all-in-one solution comes with a fee of up to $1 per scan.

You can review tools by other vendors, such as DeepAI, Hive, Nanonets, or Imagga. Image and video moderation API by Sightengine, xModerator's image moderation service, or APIs and SDKs for facial recognition and body recognition solutions by Face++ AI Open Platform could also be a good fit for you.

How to choose an image recognition API?

Plenty of commercial APIs for image recognition and other computer vision tasks are available, so the mission here is to select the one that would meet your needs and requirements. You can evaluate offerings against these criteria:

Visual analysis features. Explore product pages and documentation to learn which entities the API can recognize and detect. The documentation usually contains more detailed information, so we advise giving it a read.

Type of visual data and analysis mode. Does the API or product support image analysis, video analysis, or both? Also, vendors specify what types of predictions (batch and online) they provide.

Billing. Vendors offer usage-based pricing and keep most of the pricing information open, so you can estimate how much each solution would cost you based on the projected workload.

API usage. APIs only become useful when developers know how to use them. Tutorials on how to enable APIs and make API calls, along with examples of responses, can all be found in the documentation.

Support. Technical support should be available 24/7 via multiple channels (phone, email, forum, etc.). Vendors usually offer multiple support plans for purchase.
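
For the billing criterion, a quick side-by-side estimate can help. The sketch below compares a projected monthly workload across some of the per-image rates quoted earlier in this article. It assumes all rates are per 1,000 images or operations, ignores free tiers, uses the US East rate for Amazon, and is only meant for workloads of up to 1 million images, where each vendor's first price band applies. The dictionary and function names are our own.

```python
# USD per 1,000 images, taken from the pricing notes earlier in the article.
RATES_PER_1000 = {
    "Amazon Rekognition (image analysis)": 1.00,
    "Clarifai (pre-built model predictions)": 1.20,
    "Microsoft Computer Vision (adult content detection)": 1.50,
    "IBM Watson Visual Recognition (classification)": 2.00,  # $0.002 per image
}

MAX_IMAGES = 1_000_000  # beyond this, volume discounts change the picture


def compare_monthly_cost(num_images):
    """Return (service, estimated monthly cost) pairs, cheapest first."""
    if num_images > MAX_IMAGES:
        raise ValueError("this sketch only covers the first price band")
    estimates = {
        service: round(num_images / 1000 * rate, 2)
        for service, rate in RATES_PER_1000.items()
    }
    return sorted(estimates.items(), key=lambda item: item[1])


for service, cost in compare_monthly_cost(250_000):
    print(f"{service}: ${cost:,.2f}")
```

The services listed don't perform identical tasks, so treat the ranking as a starting point for a closer look at the features and current price lists, not as a verdict.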