Future-forward companies are always looking for ways to improve their processes, boost efficiency, and stay ahead of the curve. One way to achieve this is by adopting AI across different areas of the business. But AI transformation is anything but straightforward—it’s an ongoing journey full of challenges and learning.

In this article, we take a behind-the-scenes look at AltexSoft’s continuous AI transformation process, the lessons learned along the way, and the insights that can guide organizations looking to harness AI effectively.

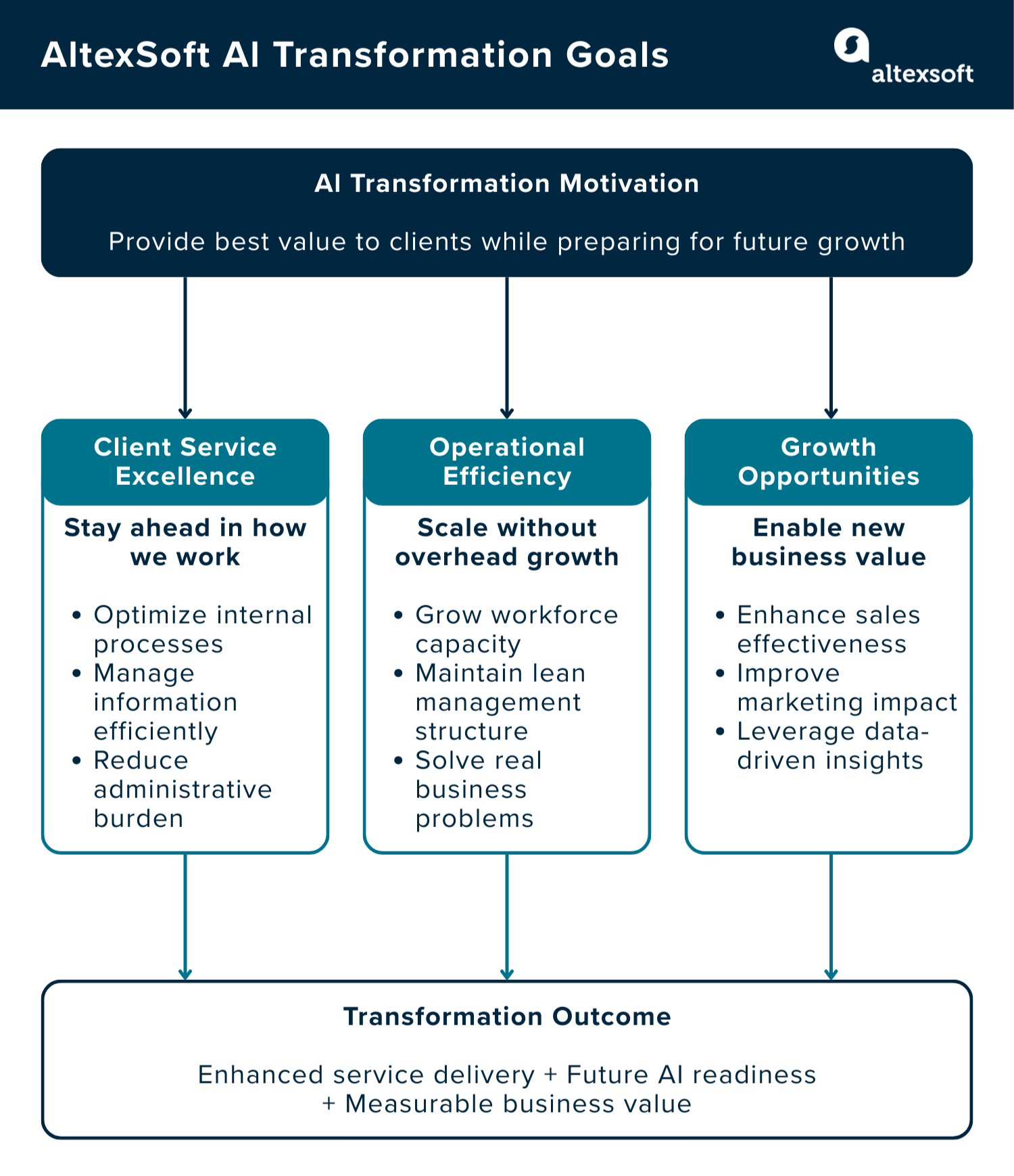

The motivation behind AltexSoft’s AI transformation

Our journey began with a clear purpose: to provide the best value to clients while preparing for future growth. As Ihor Pavlenko, Director of AI Practice at AltexSoft, puts it, “To deliver the best service to our clients, we first need to be ahead in the way we work ourselves. We have processes, information, and a lot of administrative work, and we need to optimize it smartly.” The goal isn’t to adopt technology for its own sake, but to enhance how we deliver services and to stay prepared for the changing market.

Operational efficiency is another key driver. With a team of over 400 people, the challenge is scaling without increasing administrative overhead. Ihor explains, “Our aim is to grow the workforce without adding more managers or support staff.”

The transformation also opens the door to new growth opportunities. “We want to develop tools that make our sales, marketing, and account management more effective,” Ihor said. By performing data analytics on historical client data and using insights to guide decisions, we can explore new possibilities and create measurable value. All of these efforts fall under our AI transformation.

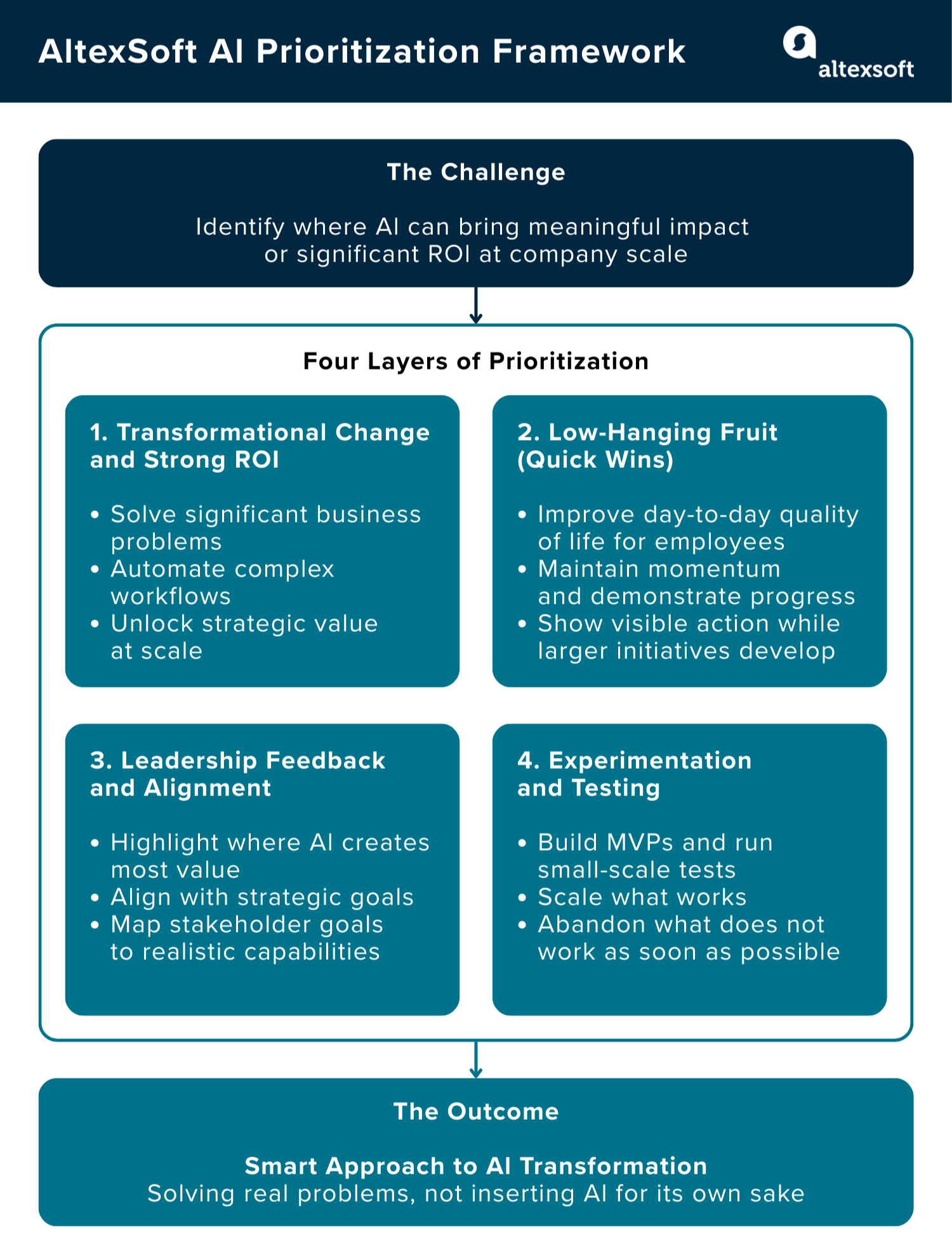

How we prioritized: our approach and playbook

As a company with hundreds of employees and complex processes across teams, deciding where to focus first was a key challenge. Ihor explains that the team doesn’t just apply AI everywhere—it starts by identifying areas where it can bring meaningful impact or a significant return on investment: “We need to solve real problems, not just to put AI and GPT everywhere. For example, we have a huge process of onboarding or pre-onboarding, and it might be interesting to use AI here, but honestly, it would save maybe several hours of HR work per month. That’s not transformative at the company scale.”

Our approach combines several layers of prioritization.

Transformational impact. We looked for initiatives that could create transformational change or a strong ROI. These are projects where AI can solve significant problems, automate complex workflows, or unlock strategic value.

Quick wins for daily efficiency. At the same time, we targeted low-hanging fruit that improved employees' day-to-day quality of life, even if it didn’t directly affect revenue or core processes. As Ihor explains, “For example, we’ve published some bots, and while they don't solve any significant business problems, they show that we are improving employees’ quality of life to some extent.” These quick wins maintain momentum and demonstrate tangible progress while larger initiatives are underway.

Leadership-driven alignment. Feedback from leadership helped us highlight where AI can create the most value and align with strategic goals. We gather insights on what stakeholders want to achieve and map them against what’s realistically possible with our current capabilities, processes, and resources.

Continuous experimentation. Experimentation was—and is—also a key part of the transformation process. MVPs and small-scale tests have helped us determine which projects should scale and which should be abandoned. As Ihor puts it, “Something works, we scale it. Something doesn’t work well, we abandon it as soon as possible.”

Ihor describes this playbook as a careful, “smart” approach that solves real problems rather than just inserting AI for the sake of it. In companies less familiar with AI, this process would also involve education and explanation to prepare teams for change. At AltexSoft, where AI adoption was already at a high level, this step was less critical.

AI initiatives across departments: challenges and lessons learned

Our AI initiatives are spread across multiple departments. Here are some example projects and things we've learned from building and executing them.

Marketing and content

One of our major goals was to empower the marketing team—via generative AI—to produce content faster without lowering the bar on quality. Speed alone wasn’t the objective. The real challenge was helping writers move faster while still preserving our brand voice, tone, and overall positioning.

Out-of-the-box AI chatbots like ChatGPT don’t solve that problem. They can generate text, but they don’t fully capture how we communicate as a company. The output often requires heavy editing to align with our standards, which defeats the purpose if the team still spends significant time rewriting and polishing.

So instead of relying on generic LLMs, we built something tailored to us.

We created a unified knowledge base that consolidates all our published articles. On top of that, we developed a helper tool that writers can use during the content creation process. This tool is grounded in our own materials, so when it generates or supports content, it reflects the structure, tone, and style that define AltexSoft.

Sales and pre-sales

Over the years, our sales and pre-sales teams have accumulated thousands of files—calls, proposals, clarifications, and technical discussions. The challenge is how to make this data, which exists in multiple formats, usable and accessible in day-to-day work.

Manually searching through that volume of material was slow and inconsistent, and finding information in time for conversations with clients was challenging.

To address this, we started building and testing a bot trained on our historical pre-sales data. The idea is simple: when a similar client question comes up, the bot can retrieve relevant context from past engagements and provide structured answers based on what we’ve already done.

The dataset is massive, and working with it manually was simply not practical. As Ihor pointed out, “It’s a huge amount of files. With the bot, we turned historical data into a scalable internal asset.”
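The retrieval idea described above can be illustrated with a minimal sketch. It is not how the internal bot is built: it swaps in plain keyword similarity (cosine over word counts) where a production system would more likely use embeddings, and the file names and texts in `PAST_ENGAGEMENTS` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical snippets standing in for historical pre-sales files.
PAST_ENGAGEMENTS = {
    "proposal_hotel_booking.txt": "Proposal for a hotel booking engine with payment gateway integration",
    "call_notes_airline.txt": "Client asked about airline reservation system migration to cloud",
    "clarification_ml.txt": "Clarification on machine learning model for price prediction",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: dict[str, str], top_k: int = 2) -> list[str]:
    """Return up to top_k document names that share vocabulary with the question."""
    query = Counter(tokenize(question))
    scored = [(cosine(query, Counter(tokenize(text))), name) for name, text in documents.items()]
    scored.sort(reverse=True)
    # Drop documents with no overlap at all instead of returning noise.
    return [name for score, name in scored[:top_k] if score > 0]


if __name__ == "__main__":
    print(retrieve("Do you have experience with hotel booking payment integration?", PAST_ENGAGEMENTS))
```

The retrieved files would then be passed to a language model as context, so the answer is grounded in past work rather than generated from scratch.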

HR and recruitment

Our focus in HR was removing friction from daily back-office work, including the talent acquisition process.

Previously, hiring managers needed to go through a manual process when opening new roles. This involved submitting a request through Jira and filling in details such as salary range, location, role description, and other specifications. The process worked, but it wasn’t efficient.

To simplify this, we built an AI agent using MS Copilot Studio and Power Automate and deployed it directly inside Microsoft Teams. Now, instead of navigating across systems, a manager can initiate the process through a chat interface. The agent asks structured questions about the role, and based on those inputs, it generates a job description aligned with our internal standards and automatically submits the request to Jira Service Desk.

The agent connects to internal data sources and relies on company-approved terminology. This way, the generated job descriptions stay consistent with our brand and match the tone used in human-written postings. Beyond this, we’ve also introduced smaller tools for structured interview feedback and handling routine HR-related requests. These don’t redefine the function, but they remove repetitive tasks from our HR specialists.

Building AI-ready infrastructure: challenges and lessons learned

When you build a house, you start with a solid base—because everything on top depends on it. AI transformation works the same way.

Assessing our existing infrastructure

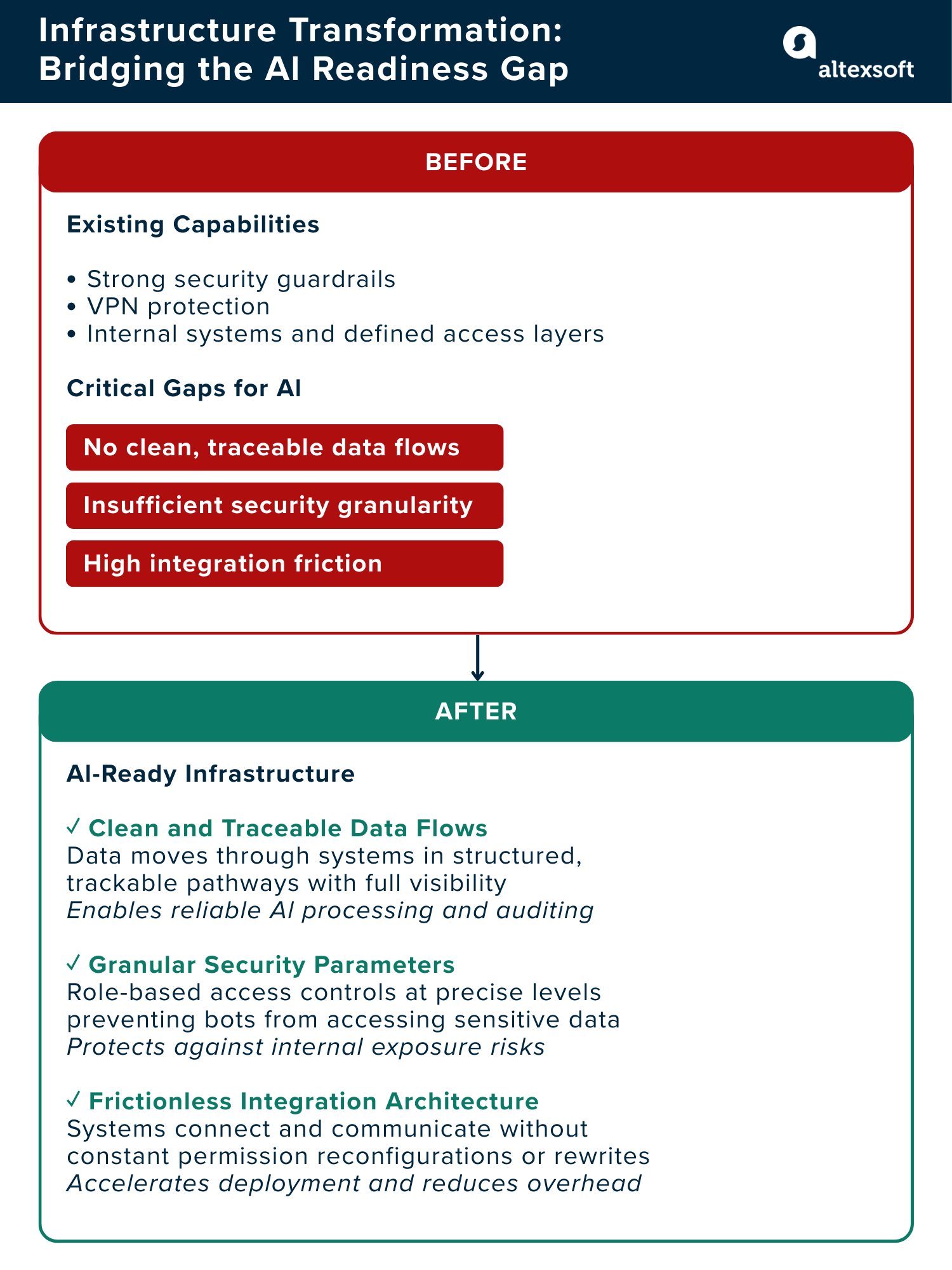

From the outside, our infrastructure looked mature. “We already had strong security guardrails, VPN protection, internal systems, and defined access layers. However, we knew we weren't AI-ready,” remarks Ihor.

AI readiness, in practical terms, came down to three things:

- clean and traceable data flows,

- well-defined security parameters, and

- architecture that allows integration without constant friction.

Ihor notes that infrastructure was the first friction point and that even launching a few internal bots required adjustments across systems—we had to reconfigure permissions, rewrite services, and deploy and support new integrations. So we took several practical steps to tackle the gaps.

Tackling security and internal access risks

External data security is a common concern for many companies. In our case, we rely on enterprise-grade APIs whose terms specify that customer data isn’t used for model training. As Ihor explains, the more critical challenge is internal access control: “If an AI bot has wide permissions and someone gains access to it, it could expose sensitive information across delivery.”

That pushed us to adopt more granular, role-based access control across systems. We also needed to organize unstructured content—emails, documents, presentations, images, and video—by applying classification and metadata tagging in centralized, governed storage. This made it possible to enforce structured access policies rather than leaving everything accessible by default. For example, an email thread might contain both financial details and customer data; developers should only see the fragments relevant to their work, and project managers shouldn’t have access to specific contract clauses. Tying access to defined roles and permissions helped enforce these boundaries.
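A minimal sketch of tag-based, role-based filtering for the email-thread example above. The role names, tag names, and the `ROLE_ALLOWED_TAGS` policy are assumptions made for illustration, not our actual configuration.

```python
from dataclasses import dataclass

# Hypothetical policy: which classification tags each role may read.
ROLE_ALLOWED_TAGS = {
    "developer": {"technical"},
    "project_manager": {"technical", "customer"},
    "finance": {"financial", "contract"},
}


@dataclass(frozen=True)
class Fragment:
    """A piece of content plus the classification tags applied to it."""
    text: str
    tags: frozenset[str]


def visible_fragments(role: str, fragments: list[Fragment]) -> list[Fragment]:
    """Return only fragments whose every tag is allowed for the role.

    Unknown roles and untagged fragments are denied by default.
    """
    allowed = ROLE_ALLOWED_TAGS.get(role, set())
    return [f for f in fragments if f.tags and f.tags.issubset(allowed)]


# One email thread split into classified fragments (hypothetical content).
THREAD = [
    Fragment("API returns 500 on checkout", frozenset({"technical"})),
    Fragment("Invoice total is overdue", frozenset({"financial"})),
    Fragment("Clause 4.2 limits liability", frozenset({"contract"})),
]

if __name__ == "__main__":
    for role in ("developer", "project_manager", "intern"):
        print(role, [f.text for f in visible_fragments(role, THREAD)])
```

Denying by default matters here: a fragment that nobody classified stays hidden until someone tags it, rather than being exposed to every bot and user.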

Managing data inconsistency

Data fragmentation and inconsistency were other major obstacles. Information was spread across multiple systems—HR platforms, shared drives, internal tools—with varying formats and departmental ownership. In several cases, we first had to identify which dataset represented the single source of truth before any automation could begin. Cleaning, reconciling, and aligning that information often required more effort than building the AI layer itself.

To support this process, we developed an internal tool—primarily using Python libraries—to parse, normalize, and process different content types, including presentations, documents, images, and email threads, and feed them into AI workflows. The goal wasn’t something exotic. It was practical: build instruments that can operate across formats without forcing teams to restructure everything manually.
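The general shape of such a tool can be sketched with a parser registry keyed by file type. This is an illustrative stand-in using only the Python standard library, not the internal tool itself: it handles plain text and email only, and the function names and record fields are assumptions. Presentations and images would need third-party libraries that are not shown here.

```python
from email import message_from_string
from pathlib import PurePath


def normalize_whitespace(text: str) -> str:
    """Collapse runs of whitespace so downstream steps see uniform text."""
    return " ".join(text.split())


def parse_text(raw: str) -> str:
    return normalize_whitespace(raw)


def parse_email(raw: str) -> str:
    """Extract the subject and plain-text body parts from a raw email."""
    msg = message_from_string(raw)
    bodies = [
        part.get_payload()
        for part in msg.walk()
        if not part.is_multipart() and part.get_content_type() == "text/plain"
    ]
    return normalize_whitespace(" ".join([msg.get("Subject", ""), *bodies]))


# Registry of parsers; supporting a new format means adding one entry.
PARSERS = {".txt": parse_text, ".eml": parse_email}


def to_record(filename: str, raw: str) -> dict:
    """Turn one file into a uniform record that an AI workflow can consume."""
    suffix = PurePath(filename).suffix.lower()
    parser = PARSERS.get(suffix)
    if parser is None:
        raise ValueError(f"unsupported content type: {suffix or filename}")
    return {"source": filename, "type": suffix.lstrip("."), "text": parser(raw)}


if __name__ == "__main__":
    print(to_record("kickoff.eml", "Subject: Kickoff\n\nHello   team,\nsee notes.\n"))
```

Every format ends up as the same record shape, which is what lets later steps operate across formats without teams restructuring their files by hand.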

This is a good point to mention another important decision point businesses face: should we build internal systems or use third-party solutions to meet our needs? For the data inconsistency issues, we went with a custom-built tool. However, that’s not always the case, since it’s always a balance between flexibility, cost, and long-term support. Ihor explains it clearly: “When you calculate margins, building solutions in-house is often more expensive than using SaaS tools. However, there are cases where we need a level of flexibility that off-the-shelf solutions simply can’t provide.”

Redesigning processes for AI integration

Process design created another turning point. There’s a temptation to “plug in” AI and expect results. But as Ihor notes, the sequence matters: optimization first, automation second, AI third. If a workflow has unnecessary approvals or unclear ownership, adding AI just speeds up confusion. In some cases, we had to redesign processes that had been built over several years, and that took time.

Much of our environment is protected by VPN. While it’s the right choice from a security standpoint, it became an integration headache. We had to rewrite some services just to allow systems to communicate with each other.

Building model-agnostic, flexible systems

Building for flexibility was essential, and that long-term thinking shaped our model strategy. Rather than tightly coupling systems to a single provider, we aim for a model-agnostic architecture wherever possible. As Ihor explains: “In most generic cases, the specific model doesn’t really matter—GPT, Gemini, and Claude will continue to evolve. We design our solutions so they aren’t dependent on any one model. If we see that Gemini performs better than GPT for a specific use case, we can switch providers without rebuilding the system.”

By abstracting model integrations behind a unified interface, we retain the ability to change providers with minimal disruption instead of rewriting core workflows.
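A minimal sketch of what a unified interface can look like. The `TextModel` protocol, the stub providers, and the registry are hypothetical; real adapters would wrap each vendor's SDK behind the same `complete` method.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only contract workflows depend on (a hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


class StubProviderA:
    """Stand-in for an adapter around one vendor's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"


class StubProviderB:
    """Stand-in for an adapter around a different vendor's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[provider-b] {prompt}"


# Switching providers for a use case is a one-line configuration change.
REGISTRY: dict[str, TextModel] = {
    "provider-a": StubProviderA(),
    "provider-b": StubProviderB(),
}


def get_model(name: str) -> TextModel:
    try:
        return REGISTRY[name]
    except KeyError:
        raise ValueError(f"unknown provider: {name}") from None


def summarize(text: str, model: TextModel) -> str:
    """Workflow code sees only TextModel, never a specific vendor."""
    return model.complete(f"Summarize: {text}")


if __name__ == "__main__":
    print(summarize("quarterly report", get_model("provider-a")))
    print(summarize("quarterly report", get_model("provider-b")))
```

Because `summarize` depends only on the protocol, moving a use case from one provider to another means changing the registry key, not the workflow.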

Team, roles, and culture

AI transformation also forced us to rethink roles and responsibilities. For us, titles matter less than the ability to take ownership and deliver value. AI initiatives often begin as research projects where there is no clear blueprint, and the right solution is not always obvious from the start. That means software engineers, ML specialists, data engineers, and project managers must be comfortable working with some level of ambiguity and taking responsibility for outcomes.

Building an internal startup culture

We treat AI transformation as an internal startup team that operates with its own budget and a high level of autonomy. However, “startup culture” in our case does not mean chaos. As Ihor explains, “It’s about taking ownership of experiments and providing value.”

This mindset allows us to test ideas quickly, measure results, and decide what deserves further investment.

Prioritizing responsibility over strict specialization

AI systems rarely live in a single technology stack. For example, we may have a solution written in Python that includes components in .NET and Node.js. In this kind of environment, strict role boundaries do not always work. “It doesn’t really matter if you know Node.js or you don’t know Node.js—if there is a failure in that module, you have to go and fix it,” Ihor said.

The expectation is clear: the team owns the outcome. If something breaks, the priority is solving the problem, not defining whose responsibility it is.

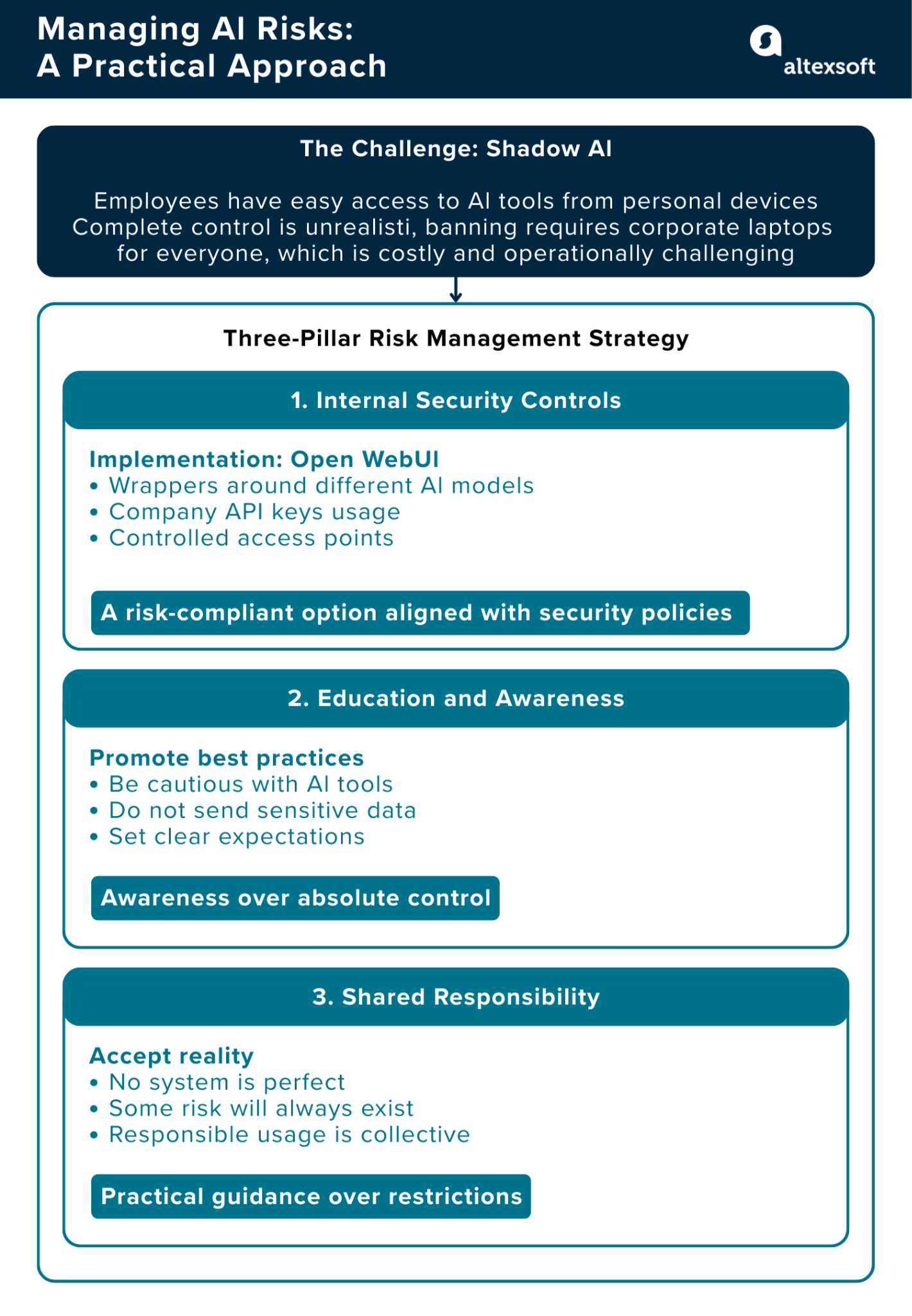

Managing AI risks across the company

Beyond infrastructure and formal integrations, AI transformation also introduced another challenge: shadow AI. Employees today have easy access to a wide range of AI tools, often from personal devices. Completely controlling that usage is not always realistic.

As Ihor explains, some large corporations choose to ban AI tools entirely and allow only approved internal solutions. That approach requires strict device control and centralized infrastructure.

Instead of pursuing full restriction, we focused on building governance mechanisms that reduce risk while keeping teams productive.

Implementing internal security solutions

We implemented an internal solution based on Open WebUI that acts as a wrapper around multiple AI models. All interactions are routed through company-managed API keys, ensuring requests pass through controlled and approved access points.

This gives employees a secure, standardized alternative while remaining aligned with our broader security policies. Under enterprise API terms, providers state that customer data isn’t used for model training. By routing usage through a monitored, centralized environment rather than allowing unmanaged external access, we reduce data leakage and governance risks.
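The routing pattern can be sketched generically as below. This is not Open WebUI's API or configuration: the `Gateway` class, the model names, and the log fields are assumptions that only illustrate the idea of a company-held key, an approved-model list, and an audit trail.

```python
from dataclasses import dataclass, field

# Hypothetical allowlist of models approved for company use.
APPROVED_MODELS = {"model-small", "model-large"}


@dataclass
class Gateway:
    """Single controlled access point; employees never see the API key."""
    api_key: str
    audit_log: list[dict] = field(default_factory=list)

    def build_request(self, user: str, model: str, prompt: str) -> dict:
        """Validate the model, record the call, and attach the company key."""
        if model not in APPROVED_MODELS:
            raise PermissionError(f"model not approved: {model}")
        # Log metadata only, so the audit trail does not copy prompt contents.
        self.audit_log.append({"user": user, "model": model, "prompt_chars": len(prompt)})
        return {
            "model": model,
            "prompt": prompt,
            "headers": {"Authorization": f"Bearer {self.api_key}"},
        }


if __name__ == "__main__":
    gateway = Gateway(api_key="company-managed-key")  # placeholder value
    request = gateway.build_request("alice", "model-small", "Draft a status update")
    print(request["model"], gateway.audit_log)
```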

Promoting responsible AI usage

At the same time, we recognize that no system is perfect. Even with approved tools in place, it is difficult to eliminate all risks.

Managing AI risk across the company, therefore, is not only a technical task. It also requires awareness, education, and clear expectations. Rather than relying solely on restrictions, we combine secure infrastructure with practical guidance, recognizing that safe usage depends on shared accountability across the organization.

Budgeting and ROI

AI transformation is anything but cheap. As Ihor puts it, “If you want transformation, you should invest in it. No money, no transformation. You can’t push AI initiatives with a budget of one piece of bread and one sausage.” When it comes to budgeting, we separate two major components: team allocation and tools and infrastructure.

Team allocation

“The most expensive part is our team,” Ihor shares. “We gave an estimate, hired a list of people, and understood more or less that with this setup, we can provide value for stakeholders within an agreed timeline.”

One of the early lessons was clear: nothing moved without a dedicated team in place. There was little progress in the first few months because the team was not fully staffed. However, once we allocated a proper budget to bring in skilled people, our speed of delivery increased. We understood that ROI wouldn’t be instant, certainly not within the first few months, but that it would come eventually now that a properly funded and structured team was in place.

Managing unpredictable tool costs

Tool budgeting introduced a different type of complexity. Some SaaS services have predictable pricing while others are usage-based and harder to control.

For example, certain AI development tools can exceed limits without clear administrative controls. “We’ve had some surprise expenses occur,” Ihor admits. In one case, a single user generated a significantly higher monthly cost, likely due to selecting more powerful models. From an administrative perspective, tracking and limiting such expenses is not always straightforward.

Some platforms are more manageable, but others don’t provide hard spending caps or proactive alerts. That means you must manually monitor expenses and limits through dashboards, which adds operational overhead.

To maintain control, we introduced a monthly budget for AI tools. However, it isn’t fixed—it varies depending on active projects and business priorities. “In one internal project, we invested heavily in a single generation,” Ihor notes. “We knew it was the only practical way to move things forward.”

In other words, ROI is assessed in context. If a generation or experiment speeds up work or delivers real value, higher short-term costs can be worthwhile. The focus should be on keeping track of spending and making informed decisions rather than letting costs spiral out of control.
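Where a platform offers no hard caps or alerts, a small script over exported usage data can do the tracking. The sketch below assumes usage arrives as `(user, cost_in_cents)` pairs; the users, amounts, and limits are hypothetical.

```python
from collections import defaultdict


def summarize_spend(
    usage: list[tuple[str, int]],
    per_user_limit_cents: int,
    monthly_budget_cents: int,
) -> dict:
    """Aggregate usage per user and flag anything above the agreed limits.

    Amounts are integer cents to avoid floating-point rounding surprises.
    """
    totals: dict[str, int] = defaultdict(int)
    for user, cents in usage:
        totals[user] += cents
    total = sum(totals.values())
    return {
        "total_cents": total,
        "over_budget": total > monthly_budget_cents,
        "users_over_limit": sorted(u for u, c in totals.items() if c > per_user_limit_cents),
    }


if __name__ == "__main__":
    # Hypothetical month: one user picked a far more expensive model.
    usage = [("alice", 1200), ("bob", 45000), ("alice", 800)]
    print(summarize_spend(usage, per_user_limit_cents=20000, monthly_budget_cents=50000))
```

A flagged user is a prompt for a conversation, not an automatic block: as noted above, a high-cost run can be the right call when it is a deliberate decision.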

Encouraging adoption and experimentation

AI adoption is never automatic. Even with strong leadership and a clear strategy, we’ve had some initial pushback from people who doubted whether making more processes AI-first could actually bring value.

To address this, we invest in adoption efforts that go beyond just providing tools. Training sessions, internal discussions, and workshops help people see how AI can improve their work rather than replace them.

Hands-on initiatives like our AI hackathons have been particularly effective. These events allow teams to experiment freely with AI instruments and tackle challenges they couldn’t address otherwise. Ihor reflected on their impact:

"Hackathons were a huge adoption boost. Teams could use any AI tools to do something they weren’t able to do before. We saw some really cool ideas, like automating travel bookings from vague customer requests, and it gave people confidence to explore AI."

Measuring progress and value at the executive level

When reporting AI transformation progress to the C-suite stakeholders, the emphasis is on measurable business impact—primarily revenue performance and cost efficiency.

As revenue expands, direct costs—mainly salaries—increase as more people are hired to support delivery. That’s expected. What matters more is how indirect expenses behave.

“If overhead doesn’t increase while revenue and headcount rise, it shows our processes are effective,” Ihor explains. “It means we can support more people and businesses without adding additional resources.”
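The check Ihor describes comes down to simple arithmetic across two reporting periods. In the sketch below, the `Period` fields, the tolerance parameter, and all figures are hypothetical and chosen only to show the comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Period:
    revenue: float    # any consistent currency unit
    headcount: int
    overhead: float   # indirect costs, same unit as revenue


def overhead_per_head(period: Period) -> float:
    """Indirect cost carried per employee in a period."""
    return period.overhead / period.headcount


def scaled_without_overhead(prev: Period, curr: Period, tolerance: float = 0.0) -> bool:
    """True if revenue and headcount grew while overhead stayed flat.

    tolerance is the allowed fractional overhead growth (0.05 means 5%).
    """
    grew = curr.revenue > prev.revenue and curr.headcount > prev.headcount
    flat = curr.overhead <= prev.overhead * (1 + tolerance)
    return grew and flat


if __name__ == "__main__":
    # Hypothetical figures, not actual company data.
    last_year = Period(revenue=100.0, headcount=400, overhead=20.0)
    this_year = Period(revenue=130.0, headcount=480, overhead=20.0)
    print(scaled_without_overhead(last_year, this_year))
    print(overhead_per_head(last_year), overhead_per_head(this_year))
```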

We also track commercial performance—including lead generation, conversion rates, and overall sales momentum. These indicators demonstrate that AI initiatives move beyond internal experimentation and drive measurable business value.

Lessons learned, challenges, and recommendations

AltexSoft’s AI transformation is still very much underway, but the journey so far has already taught us several lessons.

Start earlier and commit to a real budget

Looking back, one of Ihor’s main reflections is timing and investment. “I guess we should have started earlier and put more budget into it,” he says. “In that case, we would already have more tangible results by today.”

AI transformation takes time. Delaying the start or underfunding the initiative slows down learning, experimentation, and delivery. The earlier you commit, the sooner meaningful results can compound.

Avoid committee-driven transformation

Another lesson relates to structure. At the beginning, we experimented with a transformation committee. As Ihor recalls, “I do not believe in transformation using some committees. In the beginning, we had such a transformation committee. It’s absolutely useless because it’s about three to four managers who are trying to solve the problem.”

Transformation needs ownership and execution, not only discussion. A small group of managers without dedicated delivery capacity cannot move initiatives forward in a meaningful way.

Build a stable, dedicated team

The stability of the team members directly involved in the AI-first initiatives has also been very important. Internal AI projects often compete with client-facing work, especially in service-based organizations. There is always a temptation to reassign skilled people to revenue-generating projects. While financially logical in the short term, it slows long-term transformation.

“We could have had a more stable, dedicated team,” Ihor noted. “We had a few changes of solution architect and a few changes inside the team, and it really stopped our progress.” Continuity matters. Frequent role changes disrupt momentum and delay outcomes.

Understand that results take time, and be ready to invest and think long term

For companies starting their AI journey, expectations must be realistic. “It won’t be a quick journey. You won't be able to build really cool stuff in a couple of months. You won’t get any feasible results so fast,” Ihor said.

AI transformation is not a short-term project. It requires belief in the direction and patience during the early stages, when there are few or no visible outcomes.

“You have to believe in it. You should be ready to invest in it,” Ihor emphasized. “The result could be much more than you expected. It could be really great—but not in a month or two or three.”

If a problem is solved instantly by plugging in AI, it was likely a simple operational issue to begin with. Real transformation addresses deeper structural and process challenges.

With a software engineering background, Nefe demystifies technology-specific topics—such as web development, cloud computing, and data science—for readers of all levels.
