As AI systems take on more complex tasks, one of the biggest challenges is keeping their behavior and output consistent. Static prompts—or predefined instructions given to an AI system—only work up to a point: AI agents often lose important details during sessions, as they move from one step to the next or handle new situations.

This is where agentic context engineering (ACE) comes in. Let’s examine how ACE helps agents manage evolving information, stay aligned with their goals, and avoid common issues such as context drift and generic outputs.

What is agentic context engineering, and what problems does it solve?

Preserving context is crucial for large language models (LLMs) because the precision of their outputs depends on the input they can access. When reasoning steps, past decisions, or domain-specific knowledge are lost, the model can repeat mistakes, produce generic responses, or drift away from the original goals.

Over time, many strategies have been developed to manage context and reduce the need for LLM retraining. These include

- retrieval-augmented generation (RAG), which lets models pull relevant information from a domain-specific knowledge base—although it doesn’t naturally retain long-term reasoning or strategies;

- in-context learning (ICL), where examples are provided in the input to guide predictions;

- dynamic cheat sheets (DC), which store reusable lessons or strategies, enabling models to apply learned knowledge across sessions;

- sliding window or buffer approaches, which keep the most recent context and remove older information, helping with short-term continuity at the cost of long-term knowledge;

- summarization and compression methods, which condense older context into shorter summaries, saving space but risking loss of important details and nuance; and

- knowledge graph memory, where structured relationships and facts are stored for reasoning. This allows the model to track entities, their properties, and how they relate to each other over time, giving it access to precise, connected information instead of isolated facts. It still requires careful upkeep to make sure the stored knowledge stays accurate and relevant.

These methods are effective but not without limitations. Two common issues they face are brevity bias and context collapse.

Brevity bias occurs when methods focus on short, general instructions instead of more detailed, task-related ones. As a result, the system may miss important strategies, tips for using tools, or key insights from the field. This bias not only limits the search space but also causes repeated mistakes in future attempts, since new prompts often carry over the same issues as the original ones.

Context collapse happens when the system rewrites the entire context at each step. As the context grows, the model tends to condense it into much shorter, less informative summaries, causing a dramatic loss of detail.

Both context collapse and brevity bias can hurt performance, especially in multistep tasks or knowledge-heavy problems.

Agentic context engineering (ACE) was introduced by researchers from Stanford University, SambaNova Systems, and UC Berkeley to address the drawbacks of previous methods. By treating context as a dynamic, evolving resource, ACE keeps track of strategies, domain-specific insights, and lessons learned from prior interactions. Unlike earlier approaches, ACE continuously adapts and updates context in real time, so that it stays relevant and accurate as the conversation progresses. Below, we’ll explore how it works in more detail.

How does agentic context engineering work?

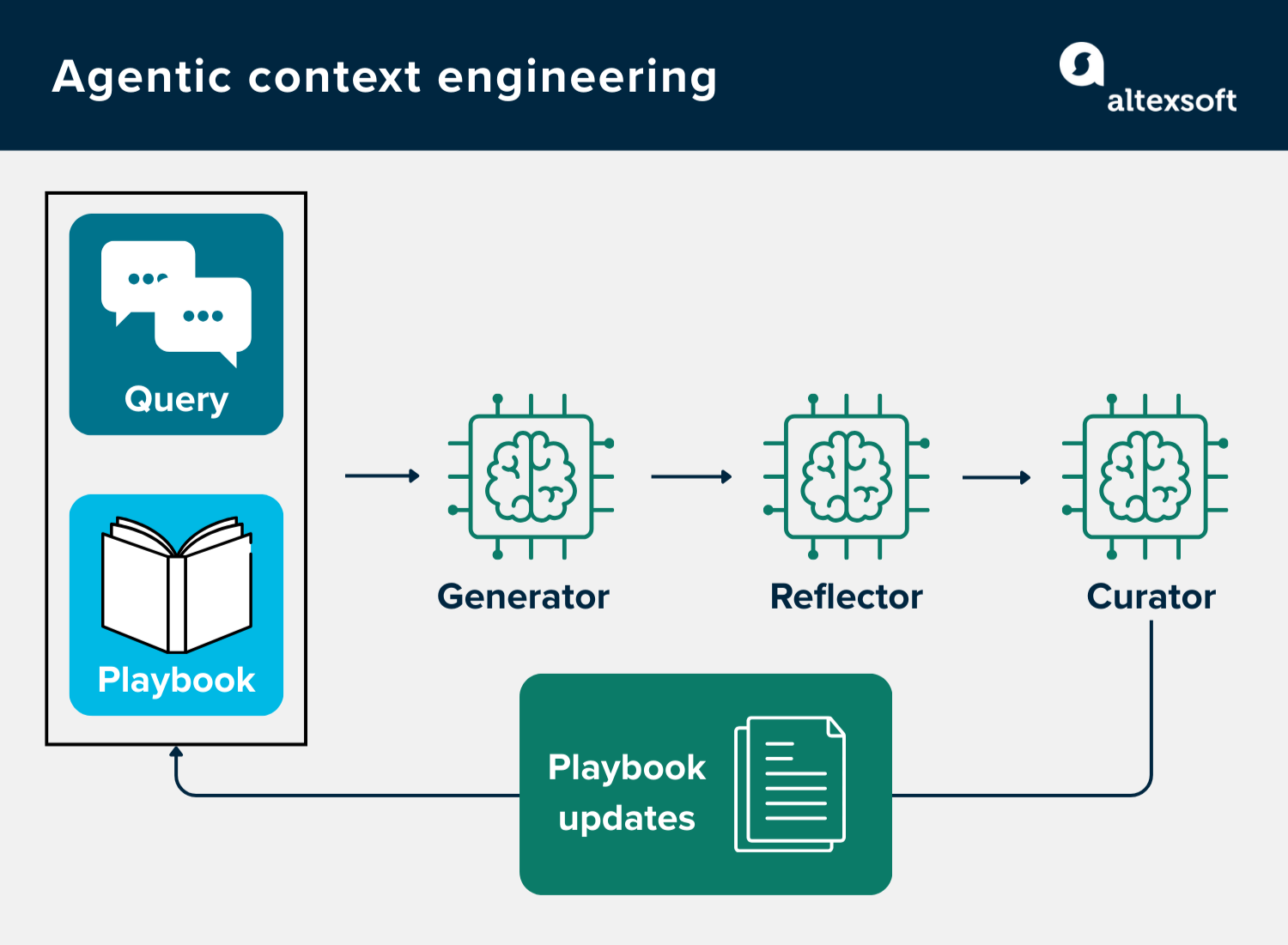

ACE manages context through a learning loop built on two things: the Playbook, a structured repository of guidelines that grows and changes over time, and three components (a Generator, a Reflector, and a Curator). Depending on your setup, these components can be implemented by a single LLM or by a multi-agent system.
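
To make the loop concrete, here is a minimal sketch that assumes each role is a plain Python callable. The names `ace_step`, `generate`, `reflect`, and `curate` are hypothetical, the Playbook is reduced to a list of strings, and the stub bodies stand in for LLM calls. It illustrates the flow of data only and is not the ACE authors' implementation.

```python
from typing import Callable

# Hypothetical simplification: a playbook is just a list of bullet strings.
Playbook = list[str]


def ace_step(
    query: str,
    playbook: Playbook,
    generate: Callable[[str, Playbook], str],
    reflect: Callable[[str, str], list[str]],
    curate: Callable[[Playbook, list[str]], Playbook],
) -> tuple[str, Playbook]:
    """Run one Generator -> Reflector -> Curator pass."""
    output = generate(query, playbook)        # plan and execute with the current playbook
    lessons = reflect(query, output)          # extract lessons from the attempt
    new_playbook = curate(playbook, lessons)  # merge lessons, keeping old bullets as they are
    return output, new_playbook


# Stub roles standing in for LLM calls (assumptions for illustration only).
def generate(query: str, playbook: Playbook) -> str:
    return f"answer to {query!r} using {len(playbook)} bullets"


def reflect(query: str, output: str) -> list[str]:
    return [f"lesson learned from: {query}"]


def curate(playbook: Playbook, lessons: list[str]) -> Playbook:
    # Append only lessons that are not already present.
    return playbook + [lesson for lesson in lessons if lesson not in playbook]


playbook: Playbook = ["Check units before computing totals"]
for q in ["sum the invoices", "sum the invoices"]:
    output, playbook = ace_step(q, playbook, generate, reflect, curate)
```

Running the same query twice adds its lesson only once, because the stub `curate` skips lessons that are already in the list.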

Playbook: The ever-updating memory

The Playbook is the backbone of ACE, acting as a repository for strategies, domain knowledge, guidelines, and standard solutions. It isn’t empty at the outset: it comes preloaded with foundational content, giving the agent an initial base to work from. As tasks are executed, the Playbook evolves and grows.

Each entry in the Playbook is a concise bullet point that focuses on a single idea. These bullets are assigned unique IDs and organized into sections, making it simple to update and refine specific parts as needed. The Playbook is typically stored in a structured format, such as a JSON file, which allows for easy management and integration with other systems.
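
As an illustration of that structure, the sketch below models a bullet as a small dataclass and round-trips a Playbook through JSON grouped by section. The field names, the ID format (`strat-0001`), and the helpers `to_json` and `from_json` are assumptions made for this example. The article does not prescribe a schema.

```python
import json
from dataclasses import dataclass


@dataclass
class Bullet:
    id: str       # unique identifier (hypothetical format, e.g. "strat-0001")
    section: str  # section the bullet belongs to
    content: str  # one concise idea


def to_json(bullets: list[Bullet]) -> str:
    """Serialize bullets as a JSON object keyed by section name."""
    sections: dict[str, list[dict]] = {}
    for b in bullets:
        sections.setdefault(b.section, []).append({"id": b.id, "content": b.content})
    return json.dumps(sections, indent=2)


def from_json(text: str) -> list[Bullet]:
    """Rebuild the bullet list from the JSON produced by to_json."""
    data = json.loads(text)
    return [
        Bullet(id=item["id"], section=section, content=item["content"])
        for section, items in data.items()
        for item in items
    ]


playbook = [
    Bullet("strat-0001", "strategies", "Break multi-part questions into sub-tasks first"),
    Bullet("tool-0001", "tool_usage", "Validate date formats before calling the reporting API"),
]
serialized = to_json(playbook)
restored = from_json(serialized)
```

Because every bullet carries its own ID, a later update can target a single entry without touching the rest of the file.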

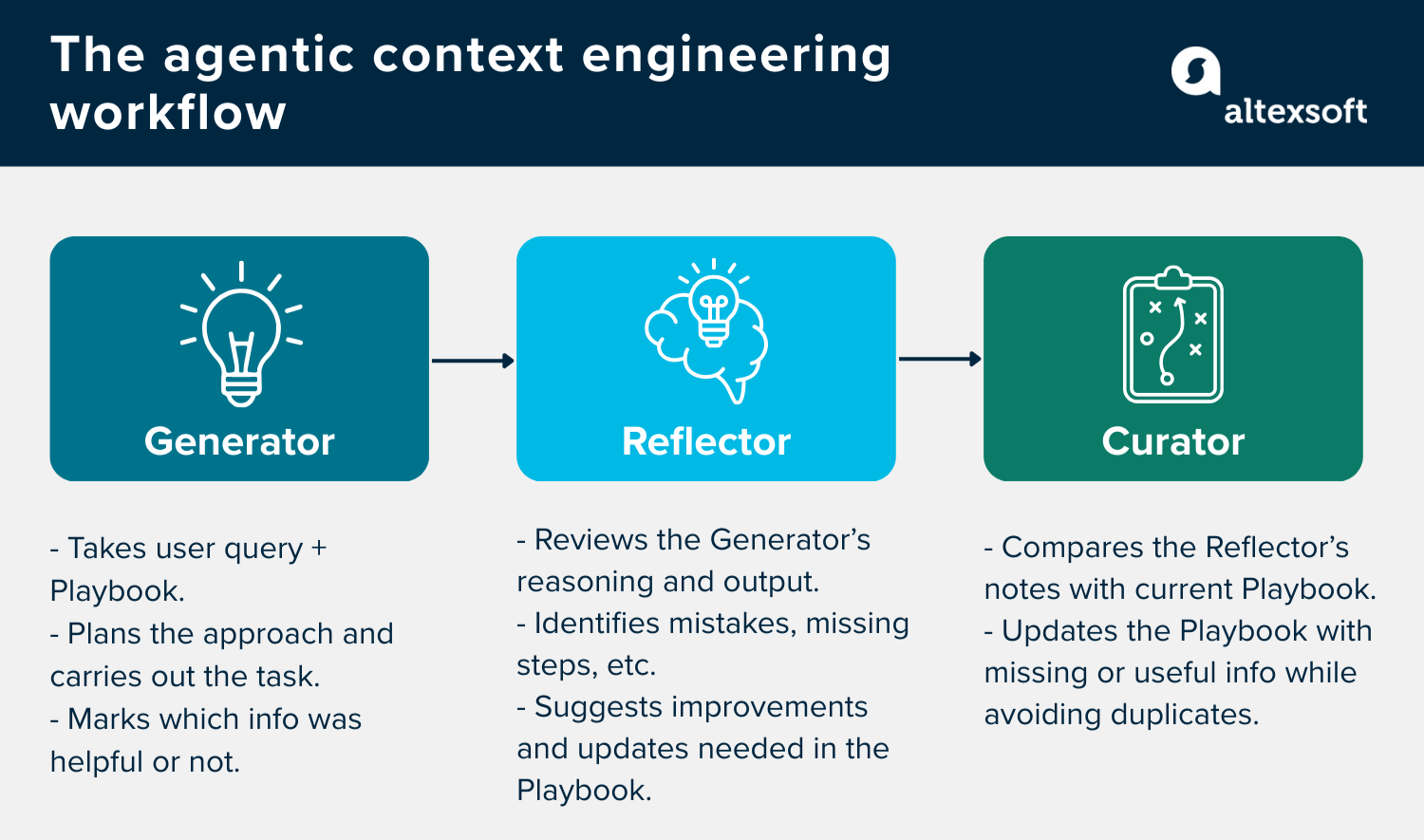

Generator: Planning and execution

Everything starts with the Generator. It takes two inputs: the user query and the Playbook at its current state. Its job is to plan how to complete the task at hand and carry it out accordingly. The Playbook guides the Generator’s reasoning and decisions, so each execution doesn’t start from scratch.

Besides creating the plan and producing the output (a calculation, a piece of code, or an answer to a question), the Generator also reports which bullet points in the Playbook were helpful, misleading, or irrelevant while it carried out the task.
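
One way to keep that feedback is a set of per-bullet counters. The sketch below is an assumption about how such bookkeeping could look: `BulletStats`, `record_feedback`, and the bullet IDs are hypothetical names, and the three tags simply mirror the categories named above.

```python
from dataclasses import dataclass

# Tags the Generator may attach to a bullet, mirroring the categories in the text.
VALID_TAGS = ("helpful", "misleading", "irrelevant")


@dataclass
class BulletStats:
    """Running counters for one Playbook bullet (hypothetical structure)."""
    helpful: int = 0
    misleading: int = 0
    irrelevant: int = 0


def record_feedback(stats: dict[str, BulletStats], feedback: dict[str, str]) -> None:
    """Update counters in place; `feedback` maps a bullet ID to one of VALID_TAGS."""
    for bullet_id, tag in feedback.items():
        if tag not in VALID_TAGS:
            raise ValueError(f"unknown tag {tag!r} for bullet {bullet_id}")
        entry = stats.setdefault(bullet_id, BulletStats())
        setattr(entry, tag, getattr(entry, tag) + 1)


stats: dict[str, BulletStats] = {}
record_feedback(stats, {"strat-0001": "helpful", "tool-0001": "misleading"})
record_feedback(stats, {"strat-0001": "helpful", "tool-0001": "irrelevant"})
```

Counters like these give the later stages a simple signal about which bullets keep paying off and which ones may need revising.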

Reflector: Reviewing the path

Once the Generator has executed its plan and produced an output, the Reflector takes over as the system’s analytical engine. It uses the Generator’s feedback and output to evaluate how well the AI system performed on its last attempt.

The Reflector identifies what worked as well as key mistakes, missing reasoning steps, misconceptions, and ineffective strategies. It then generates clear recommendations for improving the Playbook, so that the next run can produce better results.

Curator: Updating the Playbook

The Curator is what makes ACE truly self-improving. Instead of rewriting or summarizing the entire context, it adds only new information, making sure every addition is actionable and directly useful.

When the Curator receives recommendations from the Reflector, it reviews the existing Playbook alongside the new notes. Its job is to identify what the Playbook does not yet capture, such as missing strategies, warnings, or insights. It compares the Reflector’s recommendations with existing bullets using semantic embeddings to avoid duplicates, so only new bullets are added. Each update is stored as a bullet point with a unique identifier.
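
The deduplication step can be sketched with cosine similarity over embedding vectors. To stay self-contained, the example below swaps in a toy bag-of-words `embed` function where a real system would call a sentence-embedding model. The function names and the `0.8` threshold are assumptions, not values from the ACE work.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a real semantic embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def filter_new(existing: list[str], candidates: list[str], threshold: float = 0.8) -> list[str]:
    """Keep candidates that are not near-duplicates of existing or already kept bullets."""
    kept: list[str] = []
    vectors = [embed(b) for b in existing]
    for cand in candidates:
        vec = embed(cand)
        if all(cosine(vec, v) < threshold for v in vectors):
            kept.append(cand)
            vectors.append(vec)  # later candidates are also compared against this one
    return kept


existing = ["validate date formats before calling the api"]
candidates = [
    "Validate date formats before calling the API",    # same idea, different casing
    "retry failed requests with exponential backoff",  # new idea
]
new_bullets = filter_new(existing, candidates)
```

A bag-of-words vector only catches wording overlap. Paraphrases such as "check dates first" would need a real embedding model to be recognized as duplicates.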

Incremental delta updates to the Playbook are the core mechanism that makes this process efficient and reliable. Rather than regenerating the entire Playbook each time, the Curator produces compact sets of new or updated bullets and merges them into the Playbook. Bullets that are not part of the update stay exactly as they are, which prevents context collapse (the loss of detail that comes from repeatedly rewriting the whole context) and allows the Playbook to grow steadily.
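
A delta update can be pictured as a short list of operations applied to a Playbook keyed by bullet ID. The sketch below assumes a hypothetical operation format with `add` and `update` actions. The `apply_delta` function and the field names are illustrative choices, not a published ACE interface.

```python
import copy


def apply_delta(playbook: dict[str, dict], delta: list[dict]) -> dict[str, dict]:
    """Return a new playbook with the delta applied.

    Each operation looks like {"op": "add" | "update", "id": ..., "content": ...},
    with an extra "section" key for "add". Bullets not named in the delta are
    carried over unchanged.
    """
    result = copy.deepcopy(playbook)  # leave the caller's playbook untouched
    for op in delta:
        bullet_id = op["id"]
        if op["op"] == "add":
            if bullet_id in result:
                raise ValueError(f"duplicate id: {bullet_id}")
            result[bullet_id] = {"section": op["section"], "content": op["content"]}
        elif op["op"] == "update":
            if bullet_id not in result:
                raise KeyError(bullet_id)
            result[bullet_id]["content"] = op["content"]
        else:
            raise ValueError(f"unknown op: {op['op']}")
    return result


playbook = {
    "strat-0001": {"section": "strategies", "content": "Break the task into sub-tasks first"},
    "strat-0002": {"section": "strategies", "content": "Restate the goal before answering"},
}
delta = [
    {"op": "add", "id": "tool-0001", "section": "tool_usage",
     "content": "Validate date formats before API calls"},
    {"op": "update", "id": "strat-0001",
     "content": "Break the task into sub-tasks and order them by dependency"},
]
updated = apply_delta(playbook, delta)
```

Only the two bullets named in `delta` change. `strat-0002` is copied over verbatim, and no model call is needed to perform the merge.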

Challenges and considerations when implementing ACE

One key challenge with ACE is its reliance on a capable Reflector. If the Reflector fails to extract meaningful insights from the Generator’s reasoning or outcomes, the Playbook may fill up with unhelpful entries. In highly specialized areas, where even advanced models struggle to spot useful lessons, the context ACE builds can be weak or incomplete.

Another consideration is that not every task requires a detailed, evolving Playbook. For simple question-answering or problems with fixed rules, a few clear instructions may suffice. Adding too much context could even hinder performance by overcomplicating the process.

Best use cases for ACE

ACE is particularly beneficial in situations involving complex, multistep, or domain-specific tasks, where losing crucial details could lead to critical errors. It’s also a good fit for scenarios where the system must maintain evolving context throughout multiple interactions, manage detailed knowledge, and continuously self-improve without supervision. These include

- financial analysis, where the system needs to track evolving metrics, detect trends, or maintain historical context across multiple reports;

- customer relationship management (CRM), where interactions with clients and internal notes must be retained and applied to future decisions;

- enterprise-level process automation, where workflows span multiple systems and require precise coordination; and

- technical support or troubleshooting environments, where prior resolutions and system states must be remembered to avoid repeating errors.

In all of these cases, ACE offers benefits in terms of efficiency and cost. Its incremental delta updates, together with context merging and deduplication that don’t require full LLM rewrites, allow the system to expand its knowledge steadily while reducing unnecessary token usage and repeated computations. That means the system can become more accurate without driving up computation costs or slowing things down, which makes it well suited to real-world tasks where you need both precision and speed.

With a software engineering background, Nefe demystifies technology-specific topics—such as web development, cloud computing, and data science—for readers of all levels.
