Moving data from one system to another is an inevitable part of modernization, and rarely a painless one. Industry research suggests that more than 80 percent of data migration projects overrun their time or budget targets, largely because of the complexity involved.

Whether the transfer is from on-premises infrastructure to the cloud or between cloud environments, teams must untangle years of accumulated data, dependencies, and tightly coupled processes. In this article, we share our experience migrating over 5,000 databases for a hospitality BI provider and the key lessons learned.

Why do you need to move data at all?

Data migration isn’t something to be taken lightly. It’s a strategic move driven by a range of business needs, including system upgrades, performance improvements, cost efficiency, and compliance with evolving regulations.

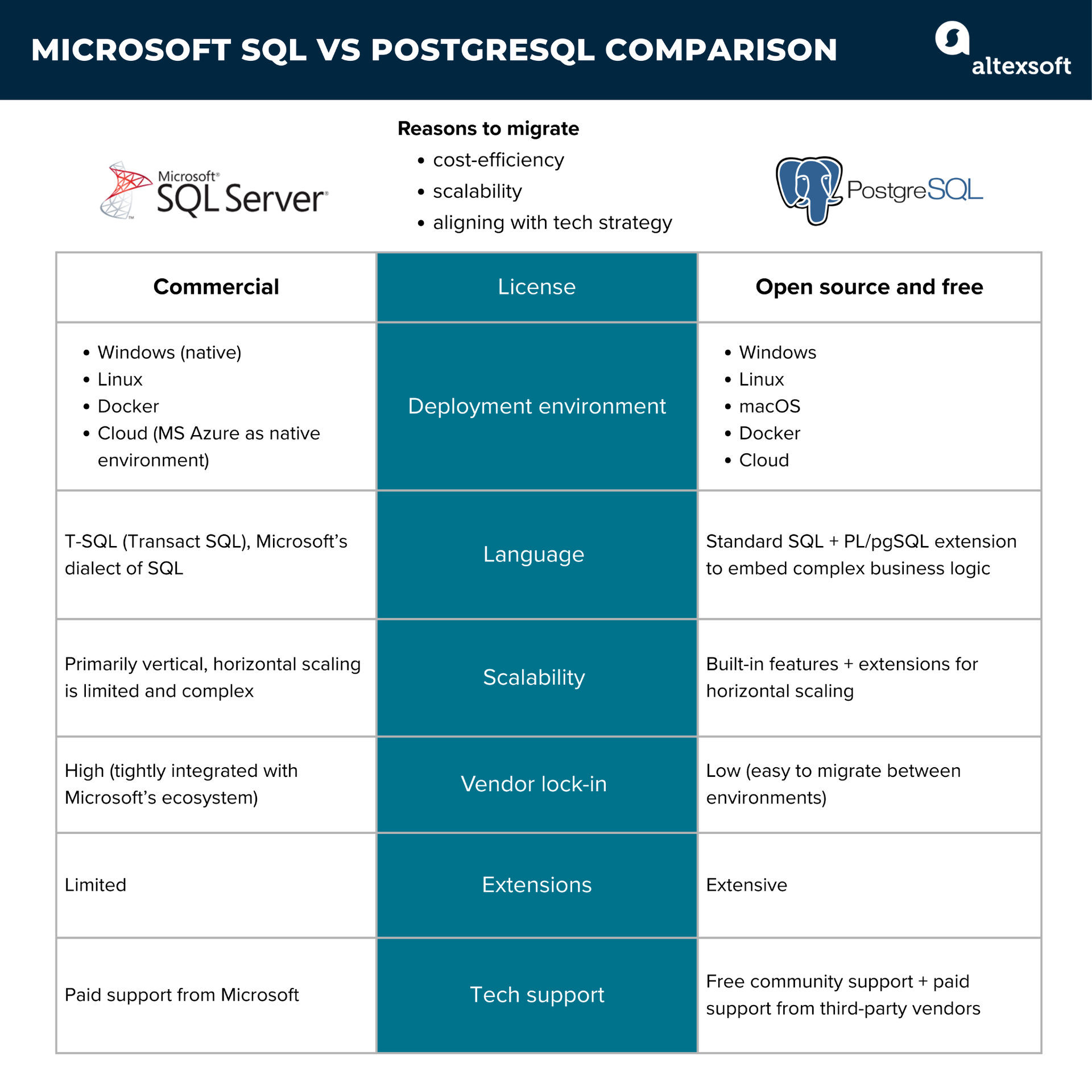

Our client, a company that provides financial software to the hospitality industry and serves hundreds of businesses with unique data infrastructures, recently took this important step. They transitioned from Microsoft SQL Server (MS SQL) to a new storage solution, PostgreSQL, to reduce costs, improve scalability, and align with their long-term technology goals.

Key differences between MS SQL and PostgreSQL databases

Here’s a closer look at the reasoning behind their choice.

Cost-effectiveness. PostgreSQL is an open-source database, which means it’s free to use, with no licensing fees. It also comes with a variety of free tools and extensions that enhance its capabilities. Microsoft SQL Server, on the other hand, requires paid licenses for its production editions, which can get pretty pricey depending on the edition and the number of cores or users being licensed.

Scalability. Microsoft SQL is primarily designed for vertical scaling (scaling up) — basically, adding more resources like CPU, RAM, and storage to a single server. While this can improve performance, it also comes with higher costs as the system grows. Eventually, you're faced with the challenge of upgrading a machine that’s reached its limit, making it a less flexible solution as your business expands.

PostgreSQL, however, has strong support for horizontal scaling (scaling out), which means distributing workloads across multiple machines. This is achieved through a combination of built-in features, such as streaming replication for read replicas and declarative table partitioning, and extensions such as Citus, which shards tables across nodes. That makes PostgreSQL particularly useful in cloud environments, where resources can be scaled on demand. For businesses that require sophisticated multidimensional analysis (like our client, whose products focus on financial forecasting, revenue management, and budgeting), this ability to scale across multiple servers is a game-changer.

While Microsoft SQL does offer some level of horizontal scaling, it's often more complex to set up and limited to higher-priced editions, increasing costs further.

Technology considerations. PostgreSQL is cloud-agnostic and is offered as a managed service on all major cloud platforms, including AWS (Amazon RDS and Aurora), Azure (Azure Database for PostgreSQL), and Google Cloud (Cloud SQL). It also integrates easily with cloud-native tools and approaches such as Docker, Kubernetes, and serverless architectures.

While MS SQL Server is supported in cloud environments, it is more tightly integrated with the Microsoft ecosystem, particularly within Azure, and often requires additional setup when used on non-Microsoft platforms. This can complicate integration with other cloud-native tools.

Additionally, PostgreSQL can manage multiple schemas within a single database, making it well suited to multi-tenant applications. This is particularly useful for cloud-based financial platforms that need to serve multiple clients while ensuring data isolation and security, without the added complexity of managing a separate database for each client.
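To make the schema-per-tenant idea concrete, here is a minimal Python sketch that generates the PostgreSQL statements for giving one client its own schema and pointing a session at it. The `tenant_` naming convention, the per-tenant role, and the helper names are hypothetical illustrations, not the client's actual setup.

```python
import re

# Hypothetical rule: tenant IDs are lowercase letters, digits, and underscores.
_VALID_TENANT_ID = re.compile(r"^[a-z0-9_]+$")


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def _schema_for(tenant_id: str) -> str:
    """Validate the tenant ID and return its quoted schema name."""
    if not _VALID_TENANT_ID.match(tenant_id):
        raise ValueError(f"unexpected tenant id: {tenant_id!r}")
    return quote_ident(f"tenant_{tenant_id}")


def tenant_schema_sql(tenant_id: str, app_role: str) -> list[str]:
    """Statements that create an isolated schema for one tenant."""
    schema = _schema_for(tenant_id)
    role = quote_ident(app_role)
    return [
        f"CREATE SCHEMA IF NOT EXISTS {schema};",
        # Defensive: make sure PUBLIC has no access, then grant only this tenant's role.
        f"REVOKE ALL ON SCHEMA {schema} FROM PUBLIC;",
        f"GRANT USAGE, CREATE ON SCHEMA {schema} TO {role};",
    ]


def session_setup_sql(tenant_id: str) -> str:
    """Make unqualified table names resolve to the tenant's schema for this session."""
    return f"SET search_path TO {_schema_for(tenant_id)};"
```

Once `search_path` is set, the application can run the same unqualified queries for every client, while the REVOKE/GRANT pair keeps other roles out of each tenant's schema.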

Given these advantages, it was clear that migrating to PostgreSQL was the logical step. But, of course, transitioning from Microsoft SQL to PostgreSQL comes with its own set of challenges.

Migration challenges

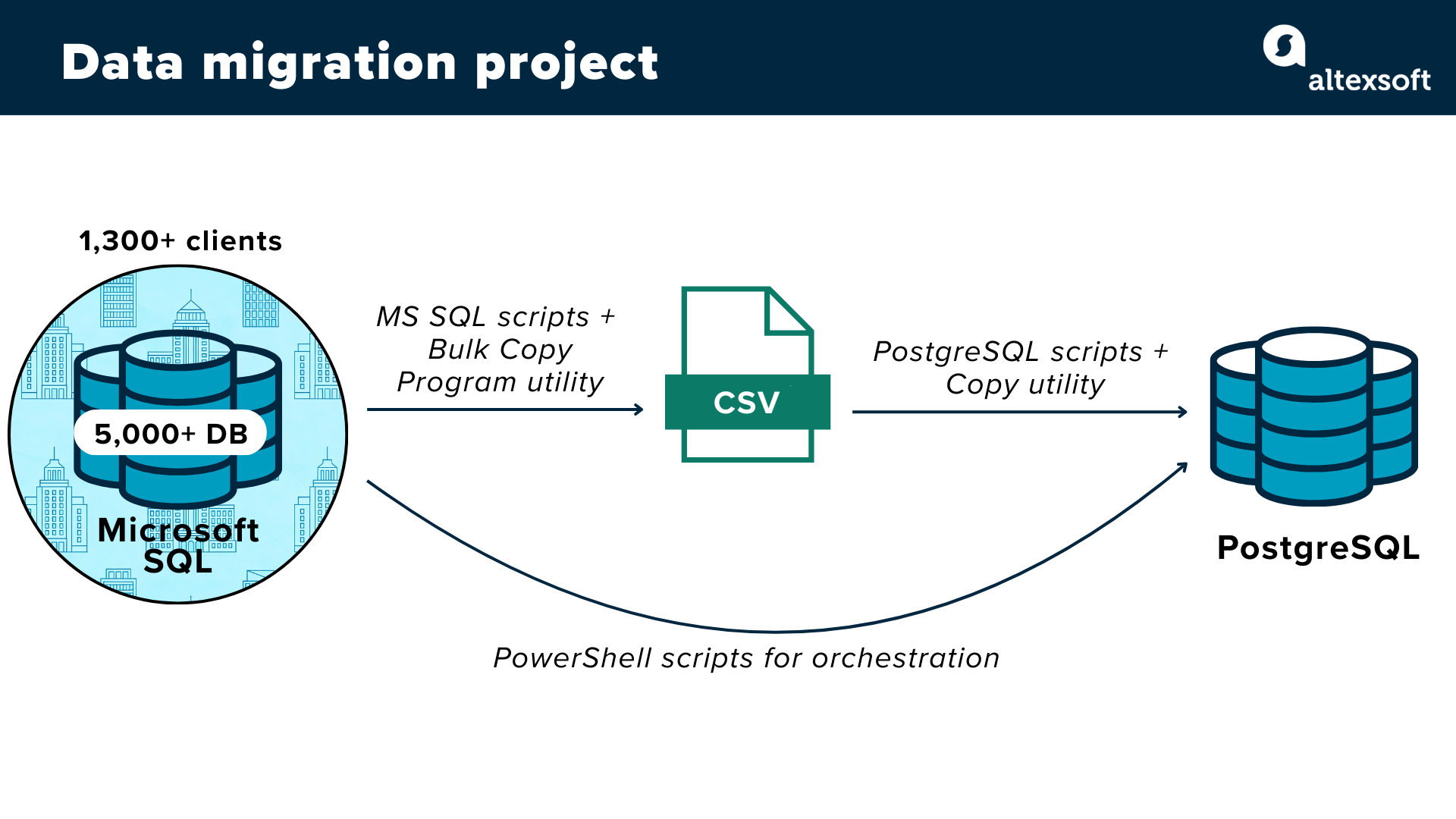

Migrating data for a client that serves over 1,300 customers and manages more than 5,000 databases is no small feat. The complexity was compounded by a series of evolving requirements.

During the migration, the company needed to continuously release new feature updates and hotfixes. This meant migration scripts had to be regularly aligned with the latest software and database versions.

Initially, the migration was conducted on a per-database basis. To streamline the process, the team then pivoted to migrating entire tenant groups (all of a customer’s databases) at once. This adjustment required expanding the migration strategy so that every database belonging to a tenant could be handled collectively.
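A minimal Python sketch of what batching by tenant might look like: databases are grouped by the customer that owns them, and each group becomes one migration unit. The `DatabaseRecord` type and the smallest-first ordering are assumptions for illustration only.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DatabaseRecord:
    tenant: str     # customer (tenant group) that owns the database
    name: str       # database name on the MS SQL server
    size_gb: float


def plan_tenant_batches(
    databases: list[DatabaseRecord],
) -> list[tuple[str, list[DatabaseRecord]]]:
    """Group databases by tenant so each customer migrates as a single unit.

    Batches are ordered by total size, smallest first (a hypothetical heuristic),
    with the tenant name as a tie-breaker so the plan is deterministic.
    """
    by_tenant: dict[str, list[DatabaseRecord]] = defaultdict(list)
    for db in databases:
        by_tenant[db.tenant].append(db)

    batches = [
        (tenant, sorted(dbs, key=lambda d: d.name))
        for tenant, dbs in by_tenant.items()
    ]
    batches.sort(key=lambda batch: (sum(d.size_gb for d in batch[1]), batch[0]))
    return batches
```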

A significant hurdle was the need to run two versions of the website in parallel: organizations that had been migrated were switched to the new PostgreSQL-based site, while those still on MS SQL continued using the old version.
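While both sites were live, each request had to reach the deployment matching its organization's migration status. A minimal Python sketch of that lookup follows; the hostnames and the in-memory set of migrated organizations are hypothetical placeholders for whatever routing and configuration the client actually used.

```python
from urllib.parse import urljoin

# Hypothetical base URLs for the two deployments running side by side.
LEGACY_SITE = "https://legacy.example.com/"
POSTGRES_SITE = "https://app.example.com/"


def site_for(org_id: str, migrated_orgs: set[str]) -> str:
    """Migrated organizations use the PostgreSQL-based site; the rest stay on the old one."""
    return POSTGRES_SITE if org_id in migrated_orgs else LEGACY_SITE


def redirect_url(org_id: str, path: str, migrated_orgs: set[str]) -> str:
    """Build the full URL for a request on whichever site serves this organization."""
    return urljoin(site_for(org_id, migrated_orgs), path.lstrip("/"))
```

Moving an organization to the new site then amounts to adding its ID to the migrated set once its data has been transferred.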

To tackle these challenges, our team took a comprehensive approach. "We developed a detailed migration plan," says Olga Ladyk, Delivery Manager involved in the project. "Our goal was to ensure a gradual transition for each organization, each with its own database and structure, so that users wouldn’t feel any disruption."

Data migration steps

The migration took several months to complete, with all customers eventually transitioning to the new system. Here’s a detailed breakdown of how the process unfolded.

Step 1: Bringing users to the same version of MS SQL

Hotels using our client’s software were running different versions of its MS SQL database. Before migration could begin, all companies had to be upgraded to the most current release. This step was crucial because, during the migration, our client was also rolling out feature updates and bug fixes to their products.

“With development ongoing, supporting multiple versions of the database in both SQL Server and PostgreSQL — and maintaining changes in both environments — would have been a nightmare,” says Roman Bohun, Software Engineer responsible for creating the migration scripts.

To streamline the process, the client prioritized hotels for migration based on their compatibility with the target schema.
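Prioritizing by schema compatibility can be sketched as a simple split: databases already at the target schema version go first, and the rest are queued for an upgrade, closest versions first. The version tuples, the `HotelDatabase` type, and the ordering rule are hypothetical; the source does not describe the client's actual versioning scheme.

```python
from dataclasses import dataclass

# Hypothetical schema version of the current release.
TARGET_SCHEMA_VERSION = (5, 2)


@dataclass(frozen=True)
class HotelDatabase:
    hotel: str
    schema_version: tuple[int, int]


def migration_queue(databases, target=TARGET_SCHEMA_VERSION):
    """Return (ready, needs_upgrade) lists.

    ready: databases already on the target schema, eligible for migration now.
    needs_upgrade: older databases, closest to the target first, on the
    assumption that they need the fewest upgrade steps.
    """
    ahead = [db.hotel for db in databases if db.schema_version > target]
    if ahead:
        raise ValueError(f"schema newer than target for: {ahead}")
    ready = [db for db in databases if db.schema_version == target]
    needs_upgrade = sorted(
        (db for db in databases if db.schema_version < target),
        key=lambda db: db.schema_version,
        reverse=True,
    )
    return ready, needs_upgrade
```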

Step 2: Testing migration approaches

Before the project kicked off, we explored a few different ways to tackle the task. At first, we thought about transferring the data directly using:

- a Linked Server, which acts like a bridge, letting SQL Server communicate with external databases as if they were part of the local system, and

- a PostgreSQL ODBC driver, which is like a universal translator that lets other systems speak to PostgreSQL.

This seemed like the simplest route, and one that many people use, but it quickly revealed its limitations for large-scale migration. With this setup, data travels row by row through several layers of abstraction (the Linked Server provider and the ODBC driver), which introduces high CPU overhead and poor throughput.

“A 1.3 GB database took over an hour to migrate, and some of their databases were 30 GB or more,” explains Roman Bohun. "So, we decided to ditch this method and switched to a faster route: bulk loading data through an intermediate CSV file.”

It was like switching from a slow, bumpy road to a smooth highway, cutting migration time for smaller databases from 30 minutes to just 5-10 seconds. So our team proceeded with this solution.
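A quick back-of-the-envelope calculation shows why the ODBC route was a dead end. It assumes decimal units (1 GB = 1,000 MB), treats "over an hour" as exactly one hour, and assumes migration time grows linearly with size, so the real figures would be at least this bad.

```latex
\begin{aligned}
\text{ODBC throughput} &\approx \frac{1300\ \text{MB}}{3600\ \text{s}} \approx 0.36\ \text{MB/s} \\
t_{30\ \text{GB}} &\approx \frac{30\ \text{GB}}{1.3\ \text{GB}} \times 1\ \text{h} \approx 23\ \text{h} \\
\text{bulk-load speedup} &\approx \frac{30 \times 60\ \text{s}}{10\ \text{s}} \text{ to } \frac{30 \times 60\ \text{s}}{5\ \text{s}} = 180\times \text{ to } 360\times
\end{aligned}
```

In other words, a single 30 GB database would have tied up the pipeline for roughly a full day, while the bulk CSV route was more than two orders of magnitude faster on the smaller databases.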

Step 3: Writing scripts for bulk migration

To automate the end-to-end migration process, our engineer developed a series of scripts that streamlined the entire workflow across both MS SQL and PostgreSQL environments.

How the bulk migration unfolds

PowerShell scripts. PowerShell is a versatile tool developed by Microsoft that combines a scripting language with an automation framework. Modern PowerShell (version 7 and later) is built on .NET and is cross-platform, running on Windows, Linux, and macOS. In our migration project, PowerShell acted as the orchestrator: it connected to both MS SQL and PostgreSQL, triggered the automation steps handled by other scripts, and coordinated the entire process.
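The team's orchestrator was written in PowerShell; the following is a rough Python analogue of that role, not the actual script. It runs named stages in order and stops at the first non-zero exit code, since later stages depend on earlier ones. The `runner` parameter is a hypothetical hook that lets the sequencing logic be exercised without a real SQL Server or PostgreSQL instance.

```python
import subprocess
from typing import Callable, Optional, Sequence

# A runner takes a command (argv list) and returns its exit code.
Runner = Callable[[Sequence[str]], int]


def run_with_subprocess(cmd: Sequence[str]) -> int:
    """Default runner: execute the command and return its exit code."""
    return subprocess.run(list(cmd), check=False).returncode


def orchestrate(
    stages: Sequence[tuple[str, Sequence[str]]],
    runner: Runner = run_with_subprocess,
) -> tuple[list[str], Optional[str]]:
    """Run (name, command) stages in order and stop at the first failure.

    Returns the names of the stages that succeeded and the name of the stage
    that failed (or None if everything succeeded).
    """
    completed: list[str] = []
    for name, cmd in stages:
        if runner(cmd) != 0:
            # Later stages depend on earlier output, so do not continue.
            return completed, name
        completed.append(name)
    return completed, None
```

In practice, each stage's command might be a `bcp` export, a `psql` load, or a verification script, and a failed stage can be retried for that database without rerunning the whole pipeline.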

MS SQL scripts. Scripts written in T-SQL, Microsoft’s dialect of SQL used by SQL Server, pulled the required data from the MS SQL database and prepared it for the next steps in the process. Once the data was prepared, it was exported to a CSV file using the BCP (Bulk Copy Program) utility. This command-line tool is designed for transferring large amounts of data, so it moved the information into the intermediate format quickly.
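A hedged sketch of how an export step might assemble a `bcp` call from Python. The flags used are standard `bcp` options (`queryout`, `-c` for character format, `-t` for the field terminator, `-S`, `-d`, and `-T` for a trusted Windows connection); the function name and the pipe delimiter are assumptions. Note that `bcp` does not quote or escape fields, so the delimiter must be a character that never appears in the exported data.

```python
def bcp_export_command(
    query: str,
    out_file: str,
    server: str,
    database: str,
    delimiter: str = "|",
) -> list[str]:
    """Build a bcp command line that exports a query result to a delimited text file."""
    return [
        "bcp", query, "queryout", out_file,
        "-c",             # character (text) format instead of native binary format
        "-t", delimiter,  # field terminator; bcp does not quote fields
        "-S", server,     # SQL Server instance
        "-d", database,   # database the query runs against
        "-T",             # trusted connection (Windows authentication)
    ]
```

Because the result is an argument list rather than a shell string, it can be passed straight to `subprocess.run` without extra quoting of the query text.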

PostgreSQL scripts. Once the data was exported to CSV files, scripts created the necessary tables in PostgreSQL and imported the data using PostgreSQL’s built-in COPY command. COPY is one of the fastest ways to load large datasets from files into PostgreSQL tables.
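The PostgreSQL side can be sketched in the same spirit: map each MS SQL column type to a PostgreSQL type, generate the `CREATE TABLE` statement, and build a load command. The type mapping below is partial and illustrative, not the project's actual mapping, and the sketch uses psql's client-side `\copy` variant of COPY so the file can stay on the machine running the script. The delimiter must match the one used during export.

```python
# Partial, illustrative MS SQL -> PostgreSQL type mapping (not exhaustive).
MSSQL_TO_PG = {
    "int": "integer",
    "bigint": "bigint",
    "smallint": "smallint",
    "tinyint": "smallint",        # PostgreSQL has no 1-byte integer type
    "bit": "boolean",
    "decimal": "numeric",
    "numeric": "numeric",
    "money": "numeric(19,4)",
    "date": "date",
    "datetime": "timestamp",
    "datetime2": "timestamp",
    "uniqueidentifier": "uuid",
    "varchar": "varchar",
    "nvarchar": "varchar",
    "varchar(max)": "text",
    "nvarchar(max)": "text",
    "varbinary": "bytea",
    "varbinary(max)": "bytea",
}

# Target types whose length/precision modifier is dropped.
_NO_MODIFIER = {"integer", "bigint", "smallint", "boolean", "date",
                "timestamp", "uuid", "text", "bytea"}


def pg_column_type(mssql_type: str) -> str:
    """Translate one MS SQL column type, keeping length/precision where it applies."""
    t = mssql_type.strip().lower()
    if t in MSSQL_TO_PG:
        return MSSQL_TO_PG[t]
    base, paren, modifier = t.partition("(")
    target = MSSQL_TO_PG.get(base.strip())
    if target is None or not paren:
        raise ValueError(f"no mapping for {mssql_type!r}")
    if target in _NO_MODIFIER:
        return target
    return f"{target}({modifier}"  # modifier still carries the closing ")"


def quote_ident(name: str) -> str:
    """Lowercase and quote an identifier so unquoted queries still find it."""
    return '"' + name.lower().replace('"', '""') + '"'


def create_table_sql(table: str, columns: list[tuple[str, str]]) -> str:
    """CREATE TABLE statement for (column name, MS SQL type) pairs."""
    cols = ",\n  ".join(f"{quote_ident(c)} {pg_column_type(t)}" for c, t in columns)
    return f"CREATE TABLE {quote_ident(table)} (\n  {cols}\n);"


def copy_command(table: str, columns: list[tuple[str, str]], path: str,
                 delimiter: str = "|") -> str:
    """psql \\copy command that bulk-loads a delimited file into the table."""
    col_list = ", ".join(quote_ident(c) for c, _ in columns)
    safe_path = path.replace("'", "''")
    return (
        f"\\copy {quote_ident(table)} ({col_list}) "
        f"FROM '{safe_path}' WITH (FORMAT csv, DELIMITER '{delimiter}')"
    )
```

Listing the columns explicitly in the load command keeps it correct even if the PostgreSQL table's column order ever drifts from the export's.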

After the import, additional post-processing steps were applied to clean and adjust the data as needed. “We recreated the database in PostgreSQL, mirroring the MS SQL setup,” says Olexiy Karpov, the Software Engineer leading the migration process. “There are some mismatches between the two systems. For example, images and anything binary look slightly different.”
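One plausible source of such binary mismatches, assuming `bcp` writes `varbinary` columns as hexadecimal text in character mode: PostgreSQL's `bytea` type expects its own hex input format, a `\x` prefix followed by hex digits. The helper below is a hypothetical sketch of that conversion; the source does not say which post-processing steps were actually used.

```python
import re

# Optional 0x/0X prefix followed by hex digits (assumed shape of exported binary values).
_HEX_TEXT = re.compile(r"^(?:0[xX])?([0-9a-fA-F]*)$")


def to_bytea_hex_literal(value: str) -> str:
    """Convert hex text exported from MS SQL into PostgreSQL's bytea hex input format."""
    match = _HEX_TEXT.match(value.strip())
    if match is None:
        raise ValueError(f"not hex text: {value[:20]!r}")
    digits = match.group(1)
    if len(digits) % 2:
        raise ValueError("hex text must contain an even number of digits")
    return "\\x" + digits.lower()
```

The same conversion can also be done in bulk inside PostgreSQL after loading the hex text into a text column, for example with `ALTER TABLE hotels ALTER COLUMN photo TYPE bytea USING decode(photo, 'hex');` (table and column names hypothetical; `decode` expects the digits without a `0x` prefix).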

Step 4: Adapting the infrastructure to changes

As part of the migration project, we updated an internal tool used for database operations such as merging, splitting, and creating backups; the utility required additional development to align it with the move to PostgreSQL. We also modified the data source package: the original component enabled .NET applications to communicate with SQL Server, so we replaced this layer and adapted the existing codebase to work with PostgreSQL.
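The project's data access layer was .NET code; as a language-neutral illustration, here is a Python sketch of the general idea of hiding engine-specific SQL behind a small dialect interface, so that swapping SQL Server for PostgreSQL touches one class rather than every query. The class names, the `bookings` table, and its columns are hypothetical.

```python
from typing import Protocol


class SqlDialect(Protocol):
    def quote_ident(self, name: str) -> str: ...
    def top_rows(self, table: str, columns: list[str], order_by: str, n: int) -> str: ...


class SqlServerDialect:
    def quote_ident(self, name: str) -> str:
        return "[" + name.replace("]", "]]") + "]"

    def top_rows(self, table: str, columns: list[str], order_by: str, n: int) -> str:
        cols = ", ".join(self.quote_ident(c) for c in columns)
        return (f"SELECT TOP ({int(n)}) {cols} FROM {self.quote_ident(table)} "
                f"ORDER BY {self.quote_ident(order_by)} DESC")


class PostgresDialect:
    def quote_ident(self, name: str) -> str:
        return '"' + name.replace('"', '""') + '"'

    def top_rows(self, table: str, columns: list[str], order_by: str, n: int) -> str:
        cols = ", ".join(self.quote_ident(c) for c in columns)
        return (f"SELECT {cols} FROM {self.quote_ident(table)} "
                f"ORDER BY {self.quote_ident(order_by)} DESC LIMIT {int(n)}")


def latest_bookings_query(dialect: SqlDialect, n: int) -> str:
    """Application code asks the dialect for SQL instead of hard-coding one engine's syntax."""
    return dialect.top_rows("bookings", ["id", "guest_name"], "created_at", n)
```

The two dialects differ in identifier quoting (square brackets versus double quotes) and in how row limits are written (`TOP` versus `LIMIT`), which are typical of the differences that surface when a data layer is retargeted.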

Planning further modernization

As the client moved their data, the limitations of their legacy .NET Framework codebase became increasingly evident. This migration highlighted the need for a complete modernization of the application, not just the data layer. The plan is to move to .NET Core (called simply .NET since version 5), driven by the need for greater flexibility, scalability, and faster deployment.

.NET Core is a popular choice for modernizing legacy systems because it's open-source, cross-platform, and optimized for high performance. It offers greater speed and efficiency than the older .NET Framework, making it well suited to handling large workloads. The ability to run on multiple platforms, including Linux, also opens up more deployment options, which can reduce infrastructure costs. Plus, its lightweight nature and support for microservices allow for more agile development, which speeds up feature delivery.

To help guide this transition, our team proposed a proof of concept using the Strangler Fig pattern, a proven approach we've successfully implemented in previous modernization projects. It works by incrementally rewriting individual parts of the legacy system and deploying them to the new environment. During this phased approach, the old system continues to run alongside the new one. Over time, as more components are migrated, the legacy system is gradually and entirely replaced.
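At the heart of the Strangler Fig pattern is a routing layer that sends traffic for already-migrated features to the new system and everything else to the legacy one. A minimal Python sketch of that routing decision follows; the backend addresses, URL prefixes, and class name are hypothetical, and a real deployment would typically implement this in a reverse proxy or API gateway.

```python
from dataclasses import dataclass, field


@dataclass
class StranglerRouter:
    """Route each request path to the legacy or the modern backend."""

    legacy_backend: str
    modern_backend: str
    migrated_prefixes: list[str] = field(default_factory=list)

    def migrate(self, prefix: str) -> None:
        """Mark a URL prefix (one slice of functionality) as served by the new system."""
        prefix = prefix.rstrip("/")
        if prefix not in self.migrated_prefixes:
            self.migrated_prefixes.append(prefix)

    def backend_for(self, path: str) -> str:
        # Match whole path segments so "/reports" does not capture "/reportsarchive".
        for prefix in self.migrated_prefixes:
            if path == prefix or path.startswith(prefix + "/"):
                return self.modern_backend
        return self.legacy_backend
```

Each call to `migrate` moves one more slice of functionality to the new system; once every prefix has been migrated, the legacy backend receives no traffic and can be retired.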

One standout example is when we migrated a 20-year-old property management system (PMS). Instead of doing a risky, all-at-once migration, we opted for a gradual replacement of the old system with modern components.

You can learn more in our article about our hands-on experience with the Strangler Fig pattern in a travel system migration.

All in all, the proposed method minimizes disruption, optimizes resource usage, and ensures that the system evolves at a manageable pace.

With 25 years of experience, Liudmyla is a seasoned editor and IT journalist. Over the last five years, she has focused on travel tech, travel payments, and the advancements in NDC implementation.
